We are also in need of a lot of reviewers! All volunteers appreciated ❤️ Express interest: forms.gle/4euQVPdFEty...

See more info on our call for papers: mechinterpworkshop.com/cfp

And learn more about the workshop: mechinterpworkshop.com

All approaches encouraged, if they can give a convincing case that they further the field. Open source work, new methods, negative results, combining white + black box methods, from probing to circuit analysis, from real-world tasks to rigorous case studies - only quality matters

13.07.2025 13:00 — 👍 0 🔁 0 💬 1 📌 0

The call for papers for the NeurIPS Mechanistic Interpretability Workshop is open!

Max 4 or 9 pages, due 22 Aug, NeurIPS submissions welcome

We welcome any works that further our ability to use the internals of a model to better understand it

Details: mechinterpworkshop.com

I'm curious how much other people encounter this kind of thing

01.06.2025 18:48 — 👍 4 🔁 0 💬 1 📌 0

I've been really feeling how much the general public is concerned about AI risk...

In a *weird* amount of recent interactions with normal people (eg my hairdresser) when I say I do AI research (*not* safety), they ask if AI will take over

Alas, I have no reassurances to offer

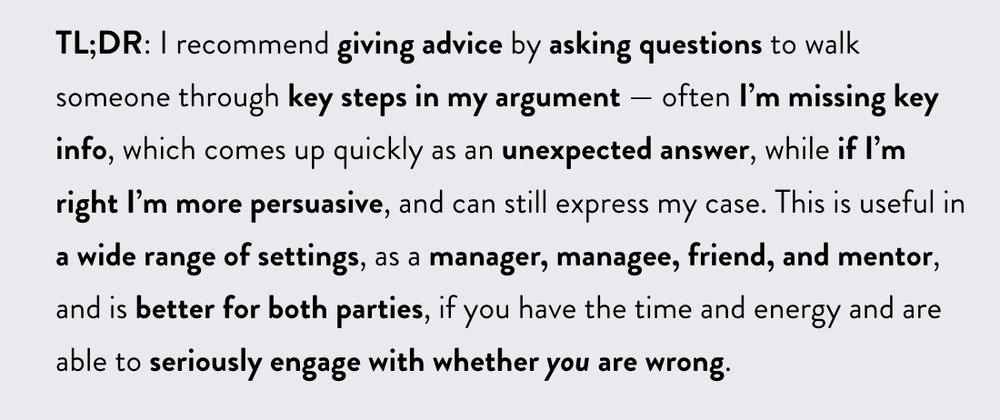

The mindset of Socratic Persuasion is shockingly versatile. I use it on a near daily basis in my personal and professional life: conflict resolution, helping prioritize, correcting misconceptions, gently giving negative feedback. My post has 8 case studies, to give you a sense:

26.05.2025 18:37 — 👍 3 🔁 0 💬 0 📌 0

Isn't this all obvious?

Maybe! Asking questions rather than lecturing people is hardly a novel insight

But people often assume the Qs must be neutral and open-ended. It can be very useful to be opinionated! But you need error correction mechanisms for when you're wrong.

Crucially, the goal is to give the right advice, not to *be* right. Asking Qs is far better received when you are genuinely listening to the answers, and open to changing your mind. No matter how much I know about a topic, they know more about their life, and I'm often wrong.

26.05.2025 18:37 — 👍 1 🔁 0 💬 1 📌 0

Socratic persuasion is more effective if I'm right: they feel more like they generated the argument themselves, and are less defensive.

Done right, I think it's more collaborative - combining my perspective and their context to find the best advice. Better for both parties!

I can integrate the new info and pivot if needed, without embarrassment, and together we converge on the right advice. It's far more robust - since the other person is actively answering questions, disagreements surface fast

The post: www.neelnanda.io/blog/51-soc...

More thoughts in 🧵

Blog post: I often give advice, to mentees, friends, etc. This is hard! I'm often missing context

My favourite approach is Socratic persuasion: guiding them through my case via questions. If I'm wrong there's soon a surprising answer!

I can be opinionated *and* truth seeking

Note: This is optimised for “jump through the arbitrary hoops of conference publishing, to maximise your chance of getting in with minimal sacrifice to your scientific integrity”. I hope to have another post out soon on how to write *good* papers.

11.05.2025 22:47 — 👍 1 🔁 0 💬 0 📌 0

The checklist is pretty long - this is deliberate, as there's lots of moving parts! I tried to cover literally everything I could think of that should be done when submitting. I tried to italicise everything that should be fast or skippable, and obviously use your own judgement.

11.05.2025 22:47 — 👍 0 🔁 0 💬 1 📌 0

See the checklist here:

docs.google.com/document/d/...

And check out my research sequence for more takes on the broader research process:

x.com/NeelNanda5/...

There are many moving pieces when turning a project into a machine learning conference paper, and best practices/nuances no one writes up. I made a comprehensive paper writing checklist for my mentees and am sharing a public version below - hopefully it's useful, esp for NeurIPS!

11.05.2025 22:47 — 👍 5 🔁 0 💬 1 📌 0

Check it out here - post 4 on how to choose your research problems coming out soon!

www.alignmentforum.org/posts/Ldrss...

I think of (intuitive) taste like a neural network - decisions are data points, outcomes are labels. You'll learn organically, but can speed up!

Supervision: Papers/Ask a mentor

Sample efficiency: Reflect on *why* you were wrong

Episode length: Taste for short tasks comes faster

Post 3: What is research taste?

This mystical notion separates new and experienced researchers. It's real and important. But what is it and how to learn it?

I break down taste as the mix of intuition/models behind good open-ended decisions and share tricks to speed up learning

x.com/NeelNanda5/...

Great work by @NunoSempere and co. Check out yesterday's here:

blog.sentinel-team.org/p/global-ri...

I'm very impressed with the Sentinel newsletter: by far the best aggregator of global news I've found

Expert forecasters filter for the events that actually matter (not just noise), and forecast how likely each is to affect eg war, pandemics, frontier AI etc

Highly recommended!

As a striking example of how effective this is, Matryoshka SAEs fairly reliably get better on most metrics as you make them wider, as neural networks should. Normal sparse autoencoders do not.

02.04.2025 13:01 — 👍 0 🔁 0 💬 0 📌 0

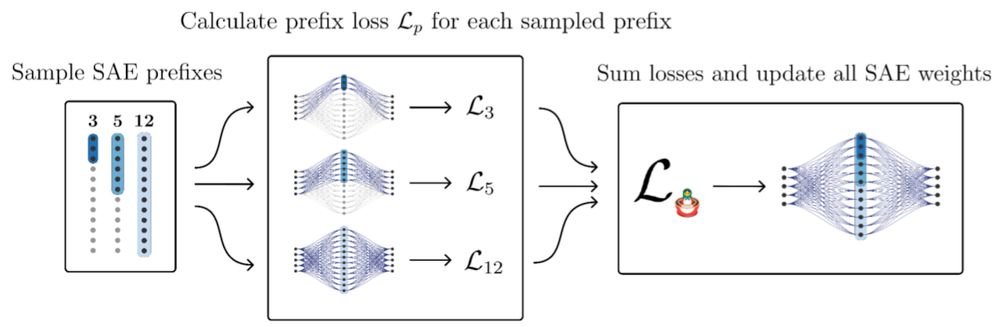

This slightly worsens reconstruction (it's basically regularisation), but improves performance substantially on some downstream tasks and measurements of sparsity issues!

02.04.2025 13:01 — 👍 0 🔁 0 💬 1 📌 0

With a small tweak to the loss, we can simultaneously train SAEs of several different sizes that all work together to reconstruct things. Small ones learn high-level features, while wide ones learn low-level features!

02.04.2025 13:01 — 👍 0 🔁 0 💬 1 📌 0
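
To make the "small tweak to the loss" in the post above concrete, here is a minimal sketch in plain Python. It assumes the nested sizes are prefixes of one shared dictionary, and that the loss sums a reconstruction error for each prefix plus a sparsity penalty. The ReLU encoder, the L1 penalty, and every name here (`matryoshka_loss`, `prefix_sizes`, `l1_coeff`) are illustrative assumptions, not the paper's actual implementation.

```python
def relu(v):
    return v if v > 0.0 else 0.0


def encode(x, W_enc, b_enc):
    # W_enc has one row per latent; each row has len(x) entries.
    return [relu(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(W_enc, b_enc)]


def decode_prefix(latents, W_dec, b_dec, m):
    # Reconstruct the input using only the first m latents.
    out = list(b_dec)
    for j in range(m):
        for k in range(len(out)):
            out[k] += latents[j] * W_dec[j][k]
    return out


def matryoshka_loss(x, W_enc, b_enc, W_dec, b_dec, prefix_sizes, l1_coeff=1e-3):
    """Sum of squared reconstruction errors over nested prefixes, plus L1.

    Hypothetical sketch: each entry of prefix_sizes acts as one nested SAE
    that shares its latents with every larger one.
    """
    latents = encode(x, W_enc, b_enc)
    recon_terms = []
    for m in prefix_sizes:
        x_hat = decode_prefix(latents, W_dec, b_dec, m)
        recon_terms.append(sum((a - b) ** 2 for a, b in zip(x, x_hat)))
    # Latents are non-negative after ReLU, so their sum is the L1 norm.
    sparsity = l1_coeff * sum(latents)
    return sum(recon_terms) + sparsity, recon_terms
```

With only the full width in `prefix_sizes`, this reduces to an ordinary reconstruction-plus-sparsity SAE loss. Under these assumptions, the extra terms for shorter prefixes push the earliest latents to reconstruct well on their own, which is plausibly why small prefixes end up with high-level features.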

In this specific case, the work focused on how sparsity is an imperfect proxy to optimize if we actually want interpretability. In particular, wide SAEs break apart high-level concepts into narrower ones via absorption, composition, and splitting.

02.04.2025 13:01 — 👍 0 🔁 0 💬 1 📌 0

This was great to supervise - the kind of basic science of SAEs work that's most promising IMO! Finding a fundamental issue with SAEs, fixing it with an adjustment (here a different loss), and rigorously measuring how much it's fixed. I recommend using Matryoshka where possible.

x.com/BartBussman...

Read it here (and thanks to my girlfriend for poking me to write the first post on my main blog in several years...)

www.neelnanda.io/blog/50-str...

New post: A weird phenomenon: because I have evidence of doing good interpretability research, people assume I have good big picture takes about how it matters for AGI X-risk. I try, but these are different skillsets! Before deferring, check for *relevant* evidence of skill.

22.03.2025 12:00 — 👍 9 🔁 0 💬 1 📌 0

More details on applied interp soon!

More broadly, the AGI Safety team is keen to get applications from both strong ML engineers and strong ML researchers.

Please apply!

DeepMind AGI Safety is hiring! I think we're among the best places in the world to do technical work to make AGI safer. I'm keen to meet some great future colleagues! Due Feb 28

One role is applied interpretability: a new subteam of my team using interp for safety in production

x.com/rohinmshah/...

Obviously, small SAEs can't capture everything. But making them larger isn't enough, as sparsity incentivises high-level concepts to be absorbed into new ones.

But not all hope is lost - our forthcoming paper on Matryoshka SAEs seems to substantially improve these issues!