My 2025 research highlights in ai+science tensorlab.cms.caltech.edu/users/anima/...

02.01.2026 20:41 — 👍 3 🔁 1 💬 0 📌 0

An exciting collaboration with @francesarnold.bsky.social on AI+enzymes. It combines generative protein models with carefully tuned filters, yielding functional, versatile enzymes that beat both natural and previously engineered enzymes.

16.12.2025 03:23 — 👍 4 🔁 2 💬 0 📌 0

Finetune a codon-level language model with 30k tryptophan synthases, then generate diverse, functional enzymes with broad substrate scopes.

Théophile Lambert @jsunn-y.bsky.social @francesarnold.bsky.social

www.biorxiv.org/content/10.1...

Generated PLP-dependent Trp synthases are functional, stable, and exhibit usefully broad substrate scopes! Fun collaboration with @anima-anandkumar.bsky.social @ramanathanlab.bsky.social Amin Tavakoli. I love AI + #enzymes!

16.12.2025 00:46 — 👍 13 🔁 1 💬 0 📌 1

Analyzing Political Text at Scale with Online Tensor LDA: @sarakangaslahti.bsky.social @harvard.edu | D Ebanks @iqss.bsky.social | @jeankossaifi.bsky.social NVIDIA | A Liu @hopkinsengineer.bsky.social @rmichaelalvarez.bsky.social @caltechlcssp.bsky.social @anima-anandkumar.bsky.social @caltech.edu

10.12.2025 20:52 — 👍 3 🔁 1 💬 1 📌 0

Join our happy hour meetup today at NeurIPS to chat about AI+Science and AI+Math with me and my team from @caltechedu at:

Achilles Coffee Roasters Gaslamp, San Diego.

4:45pm - 6:30pm

I will be announcing one more meetup later this week if you can't make it to this one. Stay tuned!

Join the livestream and listen to talks at the @caltech.edu AI+Science conference: aiscienceconference.caltech.edu

10.11.2025 17:43 — 👍 1 🔁 0 💬 0 📌 0

Join @aratip.bsky.social, Vivek Vishwanathan & @anima-anandkumar.bsky.social for UC Berkeley’s #TechPolicyWeek!

“Bigger, Better Ambitions for AI” — exploring how #AI can drive positive impact.

Oct 20 | 3–4:15pm | 2400 Ridge Rd

@BerkeleyISchool.bsky.social @GoldmanSchool.bsky.social

I am thrilled to see Omar Yaghi win the Nobel Prize in Chemistry today. I have had the privilege to interact with him and collaborate with him. This is a paper from a couple of years ago using generative models for MOFs with @ucberkeleyofficial.bsky.social group. pubs.acs.org/doi/10.1021/...

08.10.2025 19:15 — 👍 1 🔁 0 💬 0 📌 0

Our new paper on AI-generated TrpBs with @anima-anandkumar.bsky.social. GenSLM generated very useful promiscuous TrpB #enzymes, bypassing a lot of #directedevolution! Great work by the whole team, especially Theophile Lambert. www.biorxiv.org/content/10.1...

04.09.2025 14:58 — 👍 10 🔁 1 💬 0 📌 0

Very pleased to see our AI model GenSLM designing novel and versatile enzymes in a challenging setting in the @francesarnold.bsky.social lab, in the tryptophan synthase (TrpB) family. www.biorxiv.org/content/10.1...

AI can create novel enzymes that outperform both natural and laboratory-optimized TrpBs.

End-to-end learning can use both approximate and accurate training data, provided the model learns how to mix them correctly. It turns out that Neural Operators offer a perfect solution when such multi-fidelity and multi-resolution data are available, and can learn with high data efficiency.

02.09.2025 00:41 — 👍 0 🔁 0 💬 0 📌 0

Our latest paper surprisingly shows that this is not the case! End-to-end learning also requires less training data than methods that keep existing numerical solvers and augment them with AI. Where do the savings come from? The augmentation approach relies only on fully accurate, expensive training data.

02.09.2025 00:40 — 👍 0 🔁 0 💬 1 📌 0

We have seen the end-to-end approach win in areas like weather forecasting. It is significantly better for speed: 1000-million x faster than numerical simulations in many areas such as fluid dynamics and plasma physics. But a big argument against it is the need for expensive training data.

02.09.2025 00:38 — 👍 2 🔁 0 💬 1 📌 0

The popular prescription is to augment existing workflows with AI rather than replace them, e.g., keep the approximate numerical solver for simulations and use AI only to correct its errors at every time step. The other extreme is to completely discard the existing workflow and replace it fully with AI.

02.09.2025 00:37 — 👍 1 🔁 0 💬 1 📌 0

How do we build AI for science? Augment with AI or replace with AI? Augmenting with AI means keeping existing numerical simulations. In our latest paper, we show that end-to-end learning is significantly faster and also wins in data efficiency, which is counterintuitive. arxiv.org/pdf/2408.05177 #ai

02.09.2025 00:37 — 👍 2 🔁 0 💬 1 📌 0

Thank you @cvprconference.bsky.social for hosting my

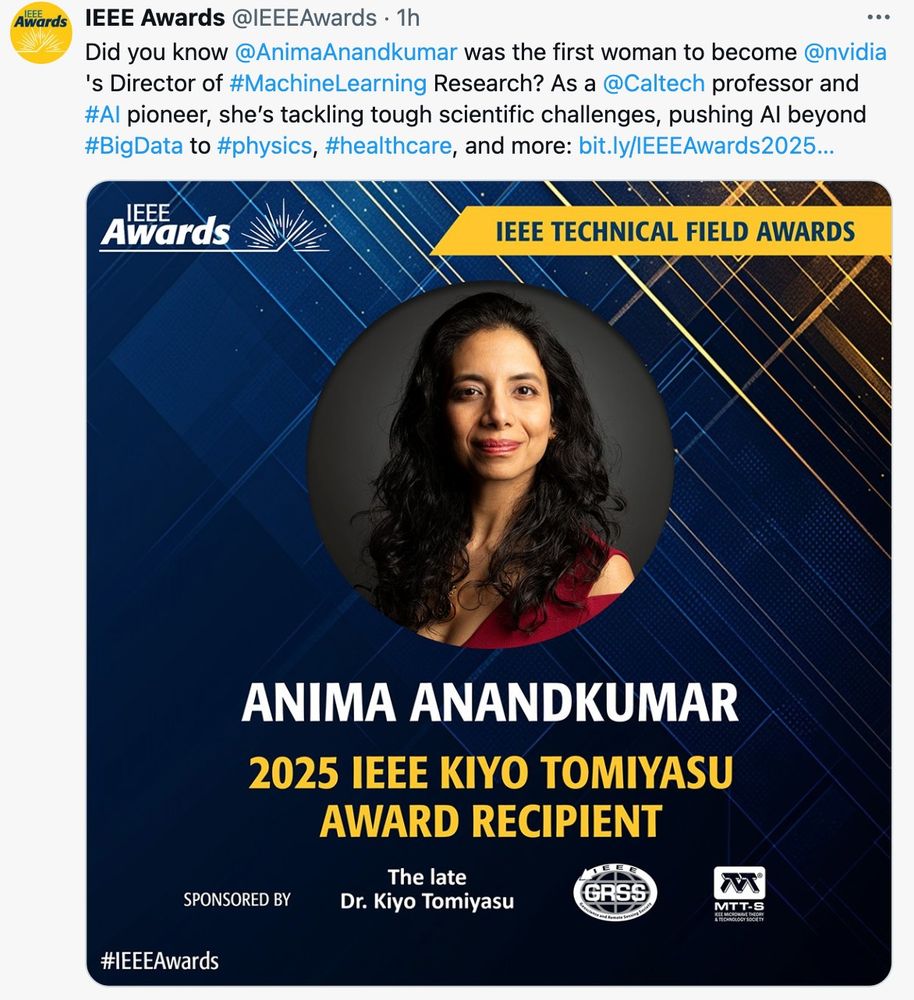

IEEE Kiyo Tomiyasu award for bringing AI to scientific domains with Neural Operators and physics-informed learning. The future of science is AI+Science!

corporate-awards.ieee.org/award/ieee-k...

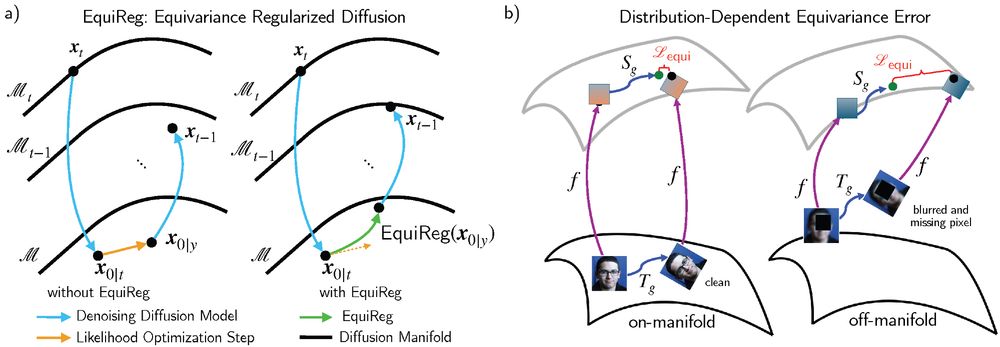

🚨We propose EquiReg, a generalized regularization framework that uses symmetry in generative diffusion models to improve solutions to inverse problems. arxiv.org/abs/2505.22973

@aditijc.bsky.social, Rayhan Zirvi, Abbas Mammadov, @jiacheny.bsky.social, Chuwei Wang, @anima-anandkumar.bsky.social 1/

Thank you @caltech.edu for including me in the history of AI. It starts with Carver Mead, John Hopfield and Richard Feynman teaching a course on physics of computation. Not many are aware that the main AI conference, NeurIPS, started at @caltech.edu

magazine.caltech.edu/post/ai-mach...

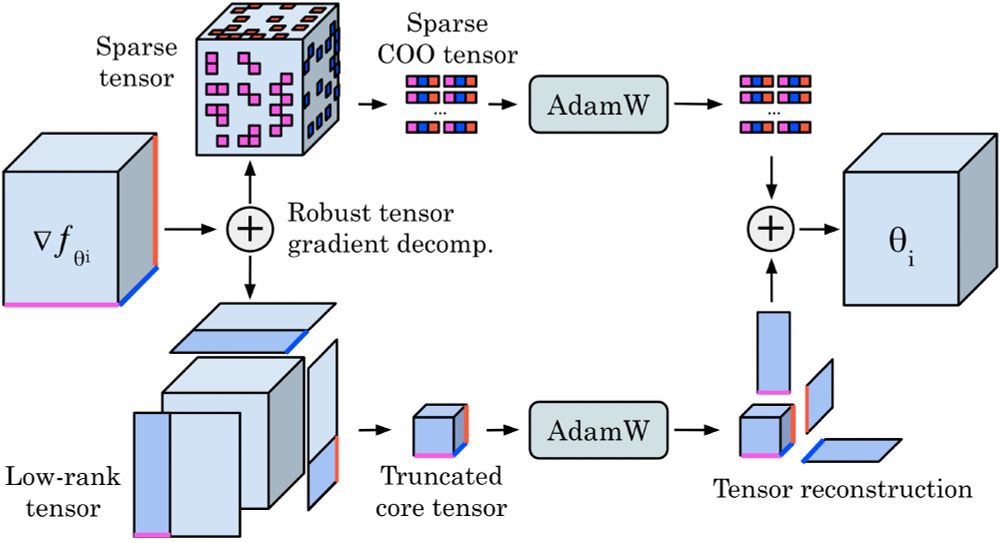

Check out our new preprint TensorGRaD.

We use a robust decomposition of the gradient tensors into low-rank + sparse parts to reduce optimizer memory for Neural Operators by up to 75%, while matching the performance of Adam, even on turbulent Navier–Stokes (Re 10e5).

Thanks to my co-authors David Pitt, Robert Joseph George, Jiawei Zhao, Cheng Luo, Yuandong Tian, Jean Kossaifi, @anima-anandkumar.bsky.social, and @caltech.edu for hosting me this spring!

Paper: arxiv.org/abs/2501.02379

Code: github.com/neuraloperat...

It was an honor to be part of the Google I/O Dialogues stage and talk about AI+Science.

AI needs to understand the physical world to make new scientific discoveries.

LLMs come up with new ideas, but the bottleneck is testing in the real world.

Physics-informed learning is needed.

youtu.be/NYtQuneZMXc?...

In a recent interview I talk about what it takes for AI to make new scientific discoveries. tldr: it won’t be just LLMs. www.newindiaabroad.com/english/tech...

25.05.2025 23:26 — 👍 11 🔁 0 💬 0 📌 0

Thank you EO for coming to @caltech.edu and interviewing me on #ai. I talk about the need to keep being curious and use AI as a tool, rather than being afraid of AI. I talk about AI for scientific modeling and discovery, and training the first high-resolution AI-based weather model. youtu.be/FIxLJVthW6I

04.05.2025 20:41 — 👍 5 🔁 0 💬 0 📌 0

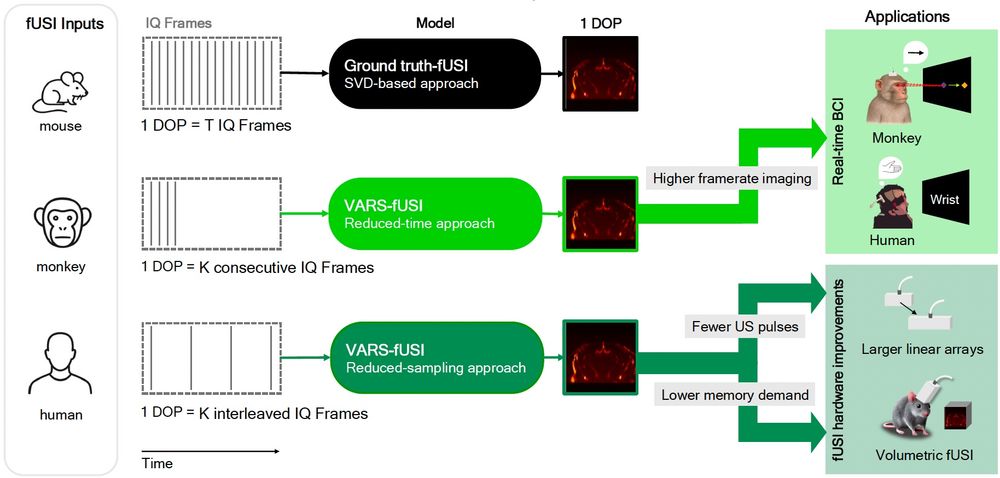

We have released VARS-fUSI: Variable sampling for fast and efficient functional ultrasound imaging (fUSI) using neural operators.

The first deep learning fUSI method to allow for different sampling durations and rates during training and inference. biorxiv.org/content/10.1... 1/

Rayhan Zirvi is presenting our paper "Diffusion State-Guided Projected Gradient for Inverse Problems" at #ICLR2025! Joint work with @anima-anandkumar.bsky.social 1/

paper: openreview.net/pdf?id=kRBQw...

code: github.com/Anima-Lab/Di...

website: diffstategrad.github.io

Collage with 20 trailblazing Women of AI: Anima Anandkumar, Ayanna Howard, Cynthia Breazeal, Cynthia Rudin, Daphne Koller, Devi Parikh, Doina Precup, Fei-Fei Li, Hanna Hajishirzi, Joelle Pineau, Joy Buolamwini, Latanya Sweeney, Leslie Kaelbling, Margaret Mitchell, Melanie Mitchell, Niki Parmar, Rana el Kaliouby, Regina Barzilay, Timnit Gebru, Yejin Choi

#WomensHistoryMonth: Honoring trailblazing #WomenOfAI whose research has made an impact on the current #AI/ML revolution incl. @anima-anandkumar.bsky.social @timnitgebru.bsky.social @mmitchell.bsky.social @deviparikh.bsky.social @ajlunited.bsky.social @yejinchoinka.bsky.social @drfeifei.bsky.social

30.03.2025 19:33 — 👍 43 🔁 16 💬 0 📌 0

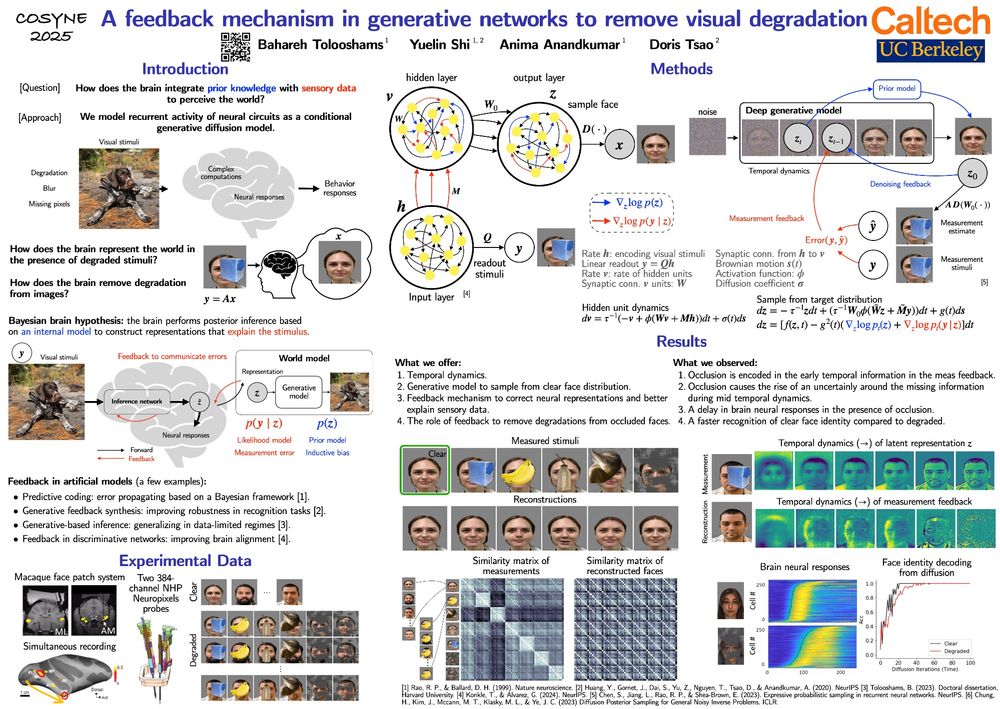

How does the brain integrate prior knowledge with sensory data to perceive the world?

Come check out our poster [1-090] at #cosyne2025:

"A feedback mechanism in generative networks to remove visual degradation," joint work with Yuelin Shi, @anima-anandkumar.bsky.social, and Doris Tsao. 1/2

Thank you, IEEE, for the honor! AI+Science is here to stay. I started working on this seriously after I joined @caltech.edu in 2017. We grounded our work in principled foundations, such as Neural Operators and physics-informed learning, for accelerating modeling and making scientific discoveries.

18.03.2025 18:16 — 👍 16 🔁 4 💬 1 📌 0

LeanAgent: Lifelong learning for formal theorem proving. ~ Adarsh Kumarappan, Mo Tiwari, Peiyang Song, Robert Joseph George, Chaowei Xiao, Anima Anandkumar. arxiv.org/abs/2410.06209 #LLMs #ITP #LeanProver #Math

13.03.2025 07:42 — 👍 9 🔁 2 💬 0 📌 0