AgentCoMa

AgentCoMa is an Agentic Commonsense and Math benchmark where each compositional task requires both commonsense and mathematical reasoning to be solved. The tasks are set in real-world scenarios:…

To learn more:

Website: agentcoma.github.io

Preprint: arxiv.org/abs/2508.19988

A big thanks to my brilliant coauthors Lihu Chen, Ana Brassard, @joestacey.bsky.social, @rahmanidashti.bsky.social and @marekrei.bsky.social!

Note: We welcome submissions to the #AgentCoMa leaderboard from researchers 🚀

28.08.2025 14:01 — 👍 4 🔁 1 💬 0 📌 0

We also observe that LLMs fail to activate all the relevant neurons when they attempt to solve the tasks in Agent-CoMa. Instead, they mostly activate neurons relevant to only one reasoning type, likely as a result of single-type reasoning patterns reinforced during training.

28.08.2025 14:01 — 👍 1 🔁 0 💬 1 📌 0

So why do LLMs perform poorly on the apparently simple tasks in #AgentCoMa?

We find that tasks combining different reasoning types are a relatively unseen pattern for LLMs, leading the models to contextual hallucinations when presented with mixed-type compositional reasoning.

28.08.2025 14:01 — 👍 1 🔁 0 💬 1 📌 0

In contrast, we find that:

- LLMs perform relatively well on compositional tasks of similar difficulty when all steps require the same type of reasoning.

- Non-expert humans with no calculator or internet can solve the tasks in #AgentCoMa as accurately as the individual steps.

28.08.2025 14:01 — 👍 1 🔁 0 💬 1 📌 0

We test AgentCoMa on 61 contemporary LLMs of different sizes, including reasoning models (both SFT and RL-tuned). While the LLMs perform well on commonsense and math reasoning in isolation, they are far less effective at solving AgentCoMa tasks that require their composition!

28.08.2025 14:01 — 👍 1 🔁 0 💬 1 📌 0

We have released #AgentCoMa, an agentic reasoning benchmark where each task requires a mix of commonsense and math to be solved 🧐

LLM agents performing real-world tasks should be able to combine these different types of reasoning, but are they fit for the job? 🤔

🧵⬇️

28.08.2025 14:01 — 👍 4 🔁 2 💬 1 📌 0

Check out our preprint on ArXiv to learn more arxiv.org/abs/2505.15795

This work was done at @cohere.com with fantastic team @maxbartolo.bsky.social, Tan Yi-Chern, Jon Ander Campos, @maximilianmozes.bsky.social, @marekrei.bsky.social

22.05.2025 15:01 — 👍 0 🔁 0 💬 0 📌 0

We also postulate that the benefits of RLRE do not end at adversarial attacks. Reverse engineering human preferences could be used for a variety of applications, including but not limited to meaningful tasks such as reducing toxicity or mitigating bias 🔥

22.05.2025 15:01 — 👍 0 🔁 0 💬 1 📌 0

Interestingly, we observe substantial variations in the fluency and naturalness of the optimal preambles, suggesting that conditioning LLMs on human-readable sequences only may be overly restrictive from a performance perspective 🤯

22.05.2025 15:01 — 👍 0 🔁 0 💬 1 📌 0

We use RLRE to adversarially boost LLM-as-a-judge evaluation, and find the method is not only effective, but also virtually undetectable and transferable to previously unseen LLMs!

22.05.2025 15:01 — 👍 0 🔁 0 💬 1 📌 0

Thrilled to share our new preprint on Reinforcement Learning for Reverse Engineering (RLRE) 🚀

We demonstrate that human preferences can be reverse engineered effectively by pipelining LLMs to optimise upstream preambles via reinforcement learning 🧵⬇️

22.05.2025 15:01 — 👍 9 🔁 1 💬 1 📌 0

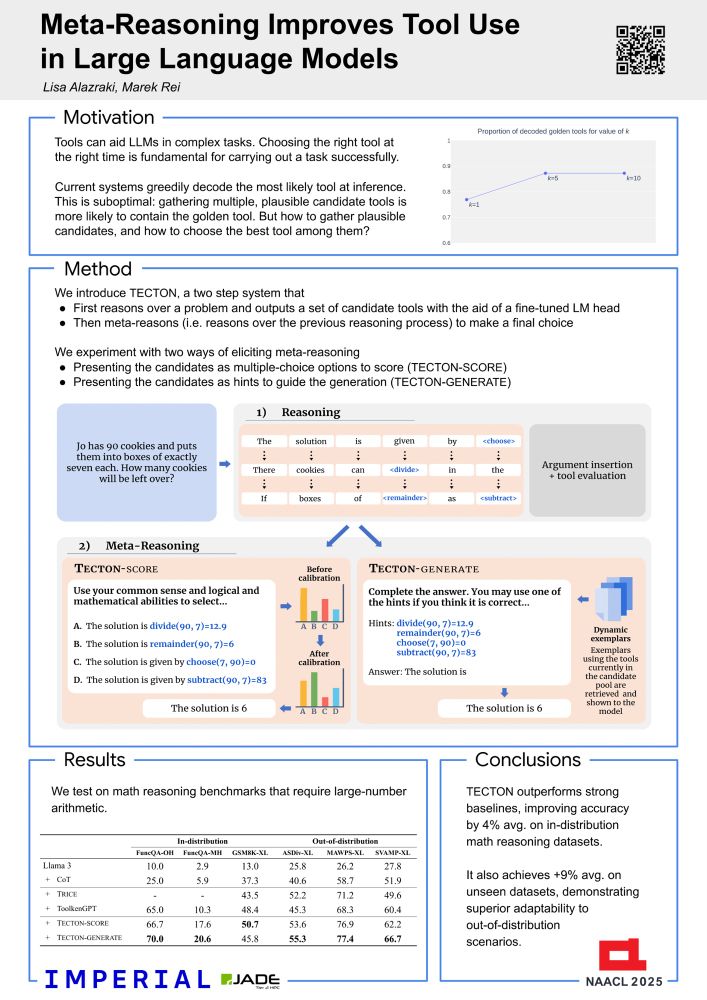

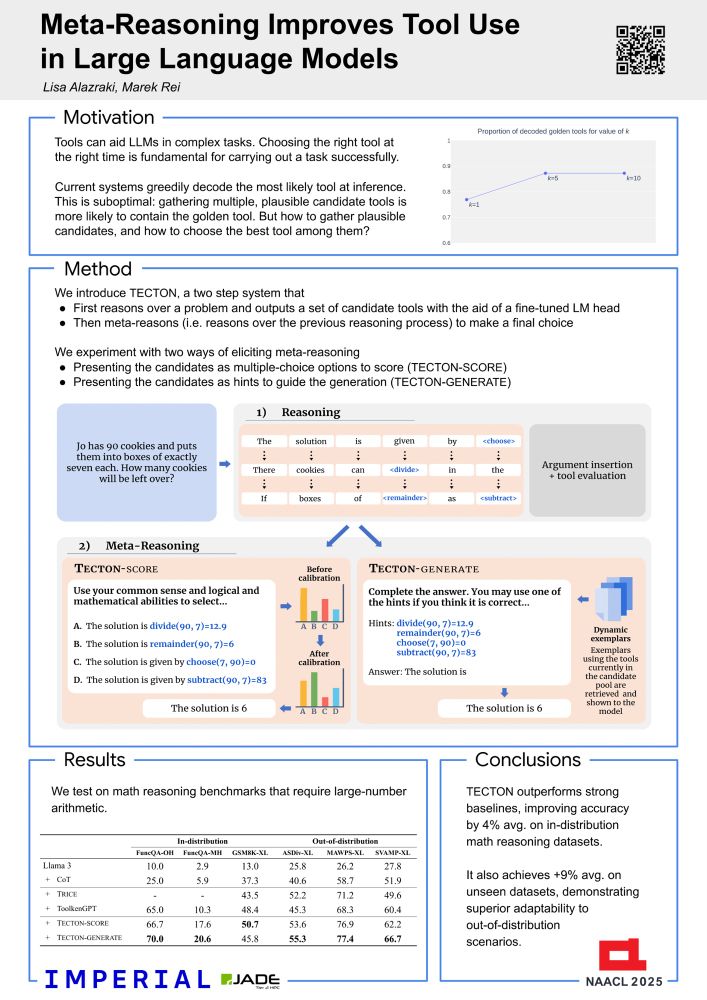

I’ll be presenting Meta-Reasoning Improves Tool Use in Large Language Models at #NAACL25 tomorrow Thursday May 1st from 2 until 3.30pm in Hall 3! Come check it out and have a friendly chat if you’re interested in LLM reasoning and tools 🙂 #NAACL

30.04.2025 20:58 — 👍 5 🔁 1 💬 1 📌 0

Excited to share our ICLR and NAACL papers! Please come and say hi, we're super friendly :)

22.04.2025 18:42 — 👍 14 🔁 5 💬 0 📌 0

New work led by @mercyxu.bsky.social

Check out the poster presentation on Sunday 27th April in Singapore!

12.04.2025 10:47 — 👍 2 🔁 0 💬 0 📌 0

I really enjoyed my MLST chat with Tim @neuripsconf.bsky.social about the research we've been doing on reasoning, robustness and human feedback. If you have an hour to spare and are interested in AI robustness, it may be worth a listen 🎧

Check it out at youtu.be/DL7qwmWWk88?...

19.03.2025 15:11 — 👍 8 🔁 3 💬 0 📌 0

Microsoft Forms

ACL Rolling Review and the EMNLP PCs are seeking input on the current state of reviewing for *CL conferences. We would love to get your feedback on the current process and how it could be improved. To contribute your ideas and opinions, please follow this link! forms.office.com/r/P68uvwXYqfemn

27.02.2025 17:01 — 👍 11 🔁 13 💬 1 📌 0

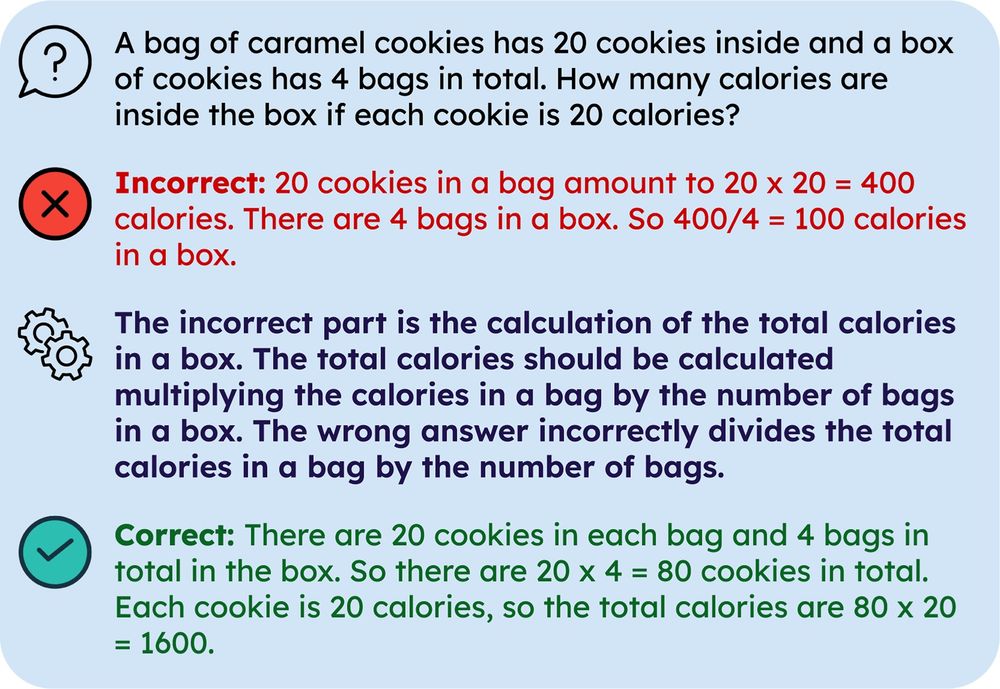

These findings are surprising, as rationales are prevalent in current frameworks for learning from mistakes with LLMs, despite being expensive to curate at scale. Our investigation suggests they are redundant and can even hurt performance by adding unnecessary constraints!

13.02.2025 15:38 — 👍 1 🔁 0 💬 1 📌 0

Additionally, our analysis shows that LLMs can implicitly infer high-quality corrective rationales when prompted only with correct and incorrect answers, and that these are of equal quality as those generated with the aid of explicit exemplar rationales.

13.02.2025 15:38 — 👍 0 🔁 0 💬 1 📌 0

We find the implicit setup without rationales is consistently superior in all cases. It also overwhelmingly outperforms CoT, even when we make this baseline more challenging by extending its context with additional, diverse question-answer pairs.

13.02.2025 15:38 — 👍 1 🔁 0 💬 1 📌 0

We test these setups across multiple LLMs from different model families, multiple datasets of varying difficulty, and different fine-grained tasks: labelling an answer (or an individual reasoning step) as correct or not, editing an incorrect answer, and answering a new question.

13.02.2025 15:38 — 👍 1 🔁 0 💬 1 📌 0

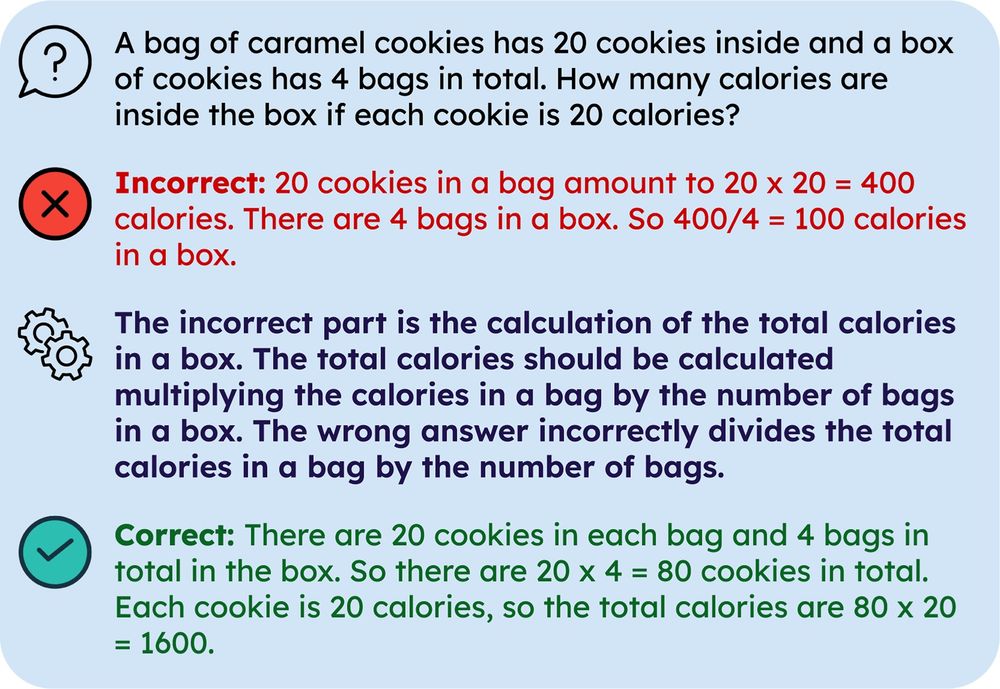

We construct few-shot prompts containing mathematical reasoning questions, alongside incorrect and correct answers. We compare this simple, implicit setup to the one that additionally includes explicit rationales illustrating how to turn an incorrect answer into a correct one.

13.02.2025 15:38 — 👍 2 🔁 0 💬 1 📌 0

Do LLMs need rationales for learning from mistakes? 🤔

When LLMs learn from previous incorrect answers, they typically observe corrective feedback in the form of rationales explaining each mistake. In our new preprint, we find these rationales do not help, in fact they hurt performance!

🧵

13.02.2025 15:38 — 👍 21 🔁 9 💬 1 📌 3

PleIAs/common_corpus · Datasets at Hugging Face

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

Announcing the release of Common Corpus 2. The largest fully open corpus for pretraining comes back better than ever: 2 trillion tokens with document-level licensing, provenance and language information. huggingface.co/datasets/Ple...

11.02.2025 13:17 — 👍 74 🔁 26 💬 2 📌 1

I noticed a lot of starter packs skewed towards faculty/industry, so I made one of just NLP & ML students: go.bsky.app/vju2ux

Students do different research, go on the job market, and recruit other students. Ping me and I'll add you!

23.11.2024 19:54 — 👍 176 🔁 54 💬 101 📌 4

Description

Please note that job descriptions are not exhaustive, and you may be asked to take on additional duties that align with the key responsibilities ment...

We are hiring 6 lecturers at @imperialcollegeldn.bsky.social to work on AI, ML, graphics, vision, quantum and software engineering. This includes researchers working on LLMs, NLP, generative models and text applications. Deadline 6 Jan. @imperial-nlp.bsky.social www.imperial.ac.uk/jobs/search-...

24.12.2024 00:23 — 👍 12 🔁 1 💬 0 📌 1

I'm excited to share a new paper: "Mastering Board Games by External and Internal Planning with Language Models"

storage.googleapis.com/deepmind-med...

(also soon to be up on Arxiv, once it's been processed there)

05.12.2024 07:49 — 👍 76 🔁 13 💬 4 📌 7

Spot-on thread by that we should all read (and hope our reviewers will read)

27.11.2024 18:13 — 👍 3 🔁 0 💬 0 📌 0

Computational Chemist @ Francis Crick Institute. #WomenInSTEM

📍 London, UK.

Associate Professor at Duke | Director of Duke Spark | AI in Medical Imaging

Create and share social media content anywhere, consistently.

Built with 💙 by a global, remote team.

⬇️ Learn more about Buffer & Bluesky

https://buffer.com/bluesky

cs && comp-bio ugrad @pitt_sci; in love with #NLProc 🗣️🧠🌍; aspiring educator; he/him

Scientific AI/ machine learning, dynamical systems (reconstruction), generative surrogate models of brains & behavior, applications in neuroscience & mental health

Associate Professor - University of Alberta

Canada CIFAR AI Chair with Amii

Machine Learning and Program Synthesis

he/him; ele/dele 🇨🇦 🇧🇷

https://www.cs.ualberta.ca/~santanad

Ph.D. Postdoc@USC | Best USC Viterbi RA | Ex-intern@ Amazon, Meta | Interests: Human understanding, trustworthy computing, speech, multimodal, and wearable sensing | Love sports and music.

NLP research engineer at Barcelona Supercomputing Center | Machine translation

https://javi897.github.io/

The largest workshop on analysing and interpreting neural networks for NLP.

BlackboxNLP will be held at EMNLP 2025 in Suzhou, China

blackboxnlp.github.io

Behavioral and Internal Interpretability 🔎

Incoming PostDoc Tübingen University | PhD Student at @ukplab.bsky.social, TU Darmstadt/Hochschule Luzern

PhD candidate in Deep Learning at the University of Trieste, Italy. Visiting at @ucl.ac.uk. Representations, robustness, empirical methods, kernels, physics & neuroscience. Quantitativist, overenthusiastic tinkerer.

https://ballarin.cc/

✨ Comprehensive evaluation of the INTERPLAY between model internals and behavior

✨ https://interplay-workshop.github.io/

✨ Submission due June 23rd

✨ October 10th, @colmweb.org

Postdoc at University of California, Riverside

PhD student at EPFL interested in LLM cognition & scientific discovery with AI.

Just Finished PhD @ UT Austin; Human-Centered NLP. Language Models

https://anubrata.github.io

Postdoc at UW NLP 🏔️. #NLProc, computational social science, cultural analytics, responsible AI. she/her. Previously at Berkeley, Ai2, MSR, Stanford. Incoming assistant prof at Wisconsin CS. lucy3.github.io/prospective-students.html

PhD Student @HelsinkiNLP / Low-resource, Machine Translation, Knowledge Distillation, Multilinguality

CS PhD @umdclip

Multilingual / Culture #NLProc, MT

https://dayeonki.github.io/

prev SWE intern @Microsoft; LLMs, data, code, and other shenanigans; Masters grad @ Clemson; Probably staring at wandb logs; DMs open