Thrilled that our paper is out today in Nature!

www.nature.com/articles/s4...

@zds7.bsky.social

PhD student at @unituebingen.bsky.social

📢 Deadline extended! 📢

The registration deadline for #SNS2025 has been extended to Sunday, September 28th!

Register here 👉 meg.medizin.uni-tuebingen.de/sns_2025/reg...

PS: Students of the GTC (Graduate Training Center for Neuroscience) in Tübingen can earn 1 CP for presenting a poster! 👀

Are top-down feedback connections enough for robust vision?

We found that ConvRNNs with top-down feedback exhibit out-of-distribution (OOD) robustness only when trained with dropout, revealing a dual mechanism for robust sensory coding

with @marco-d.bsky.social, Karl Friston, Giovanni Pezzulo & @siegellab.bsky.social

🧵👇

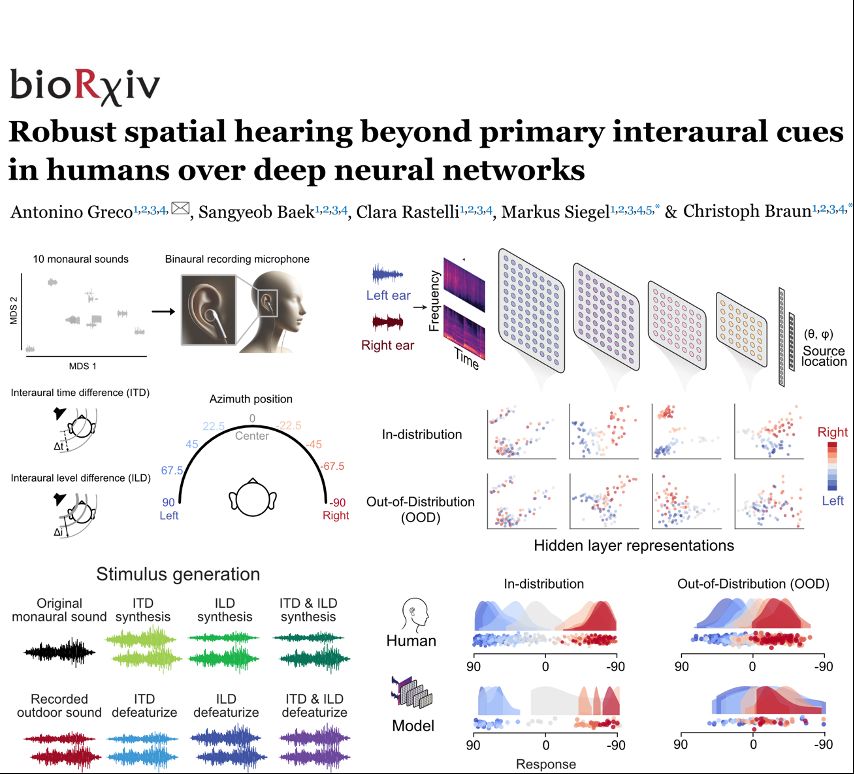

🔵 Proud to share our new preprint 🔵

We compared humans and deep neural networks on sound localization 👂📍

Humans robustly localized OOD sounds even without the primary interaural cues, interaural time and level differences (ITD & ILD)

Models localized well only sounds from their training distribution, failing in the OOD regime

Link & full story 🧵👇

Our results underscore the central role of action in human cognition, with motor-centric error encoding likely conferring advantages for adaptive and flexible behaviour.

Thanks to Rossetti, Schwarz, @siegellab.bsky.social, Braun and @agreco.bsky.social

www.biorxiv.org/content/10.1...

Finally, cross-decoding analysis showed significant pattern generalization only when the motor output was identical but not when the sensory input was held constant, suggesting that temporal prediction errors were primarily encoded in a motor rather than sensory space.

08.08.2025 07:05

Crucially, we found action-oriented prediction error encoding even when controlling for motor confounds by removing motor-evoked activity from the original data, indicating that the effect was not just a motor artifact.

08.08.2025 07:05

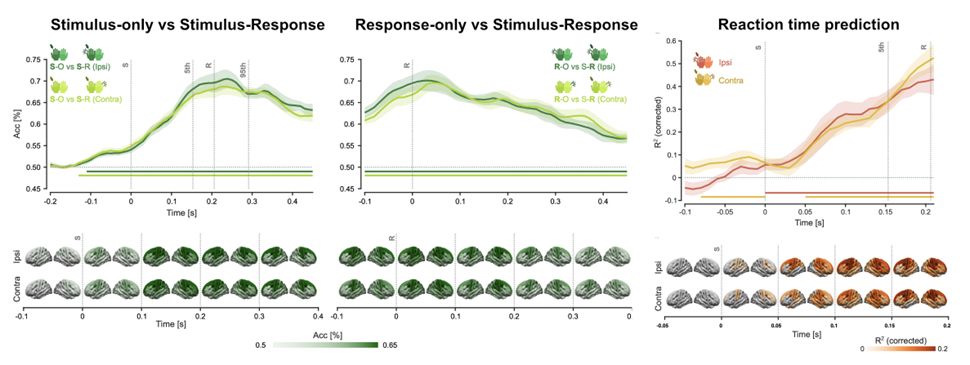

By fitting prediction error trajectories to the brain data, we found that error signals were amplified when a response was required following the tactile stimulation, compared to when the stimulus was passively received.

08.08.2025 07:05

Multivariate decoding analyses highlighted a distributed frontocentral 🧠 network linked to tactile-motor associations which predicted reaction-time variability across trials.

08.08.2025 07:05

We recorded MEG while participants received temporally jittered finger stimulations, either just perceiving them (stimulus only) or reacting as fast as possible (stimulus response), allowing us to dissect how action demands shape the brain’s encoding of temporal expectations.

08.08.2025 07:05

🧠⏱️ New preprint!

We found that temporal prediction errors are more strongly encoded when an overt response is required, and this encoding occurs in motor rather than sensory space.

www.biorxiv.org/content/10.1...

🧵👇

🔵 Tübingen Systems Neuroscience Symposium 2025 is here! 🔵

#SNS2025 brings together leading international researchers in systems neuroscience 🧠

Join us for plenary lectures, poster sessions and social events on 6️⃣-7️⃣ October 2️⃣0️⃣2️⃣5️⃣

Registration is open here 👉 meg.medizin.uni-tuebingen.de/sns_2025/

🚨 NEW PAPER 🚨

Psychedelics alter cognition profoundly, but is the alteration of visual perception causally related to the modulation of high-level cognition?

In VR, we found that simulated visual hallucinations affect high-level human cognition in specific ways!

Link 👉 doi.org/10.1016/j.co...

🧵👇

You cannot decode perceived basic emotion categories using fMRI MVPA in just the amygdala... We've tried it, you've tried it, everyone's tried it, no one's talked about it... It doesn't work.

The amygdala doesn't work like that.

journals.sagepub.com/doi/10.1177/...

🔵 NEW PAPER 🔵

Spatiotemporal Style Transfer #STST is out on #NatureComputationalScience!

STST is a framework for dynamic visual stimulus generation to study brain and machine vision

feat. @siegellab.bsky.social

paper: www.nature.com/articles/s43...

code: github.com/antoninogrec...

🧵👇

I was fucking joking!

25.11.2024 13:30

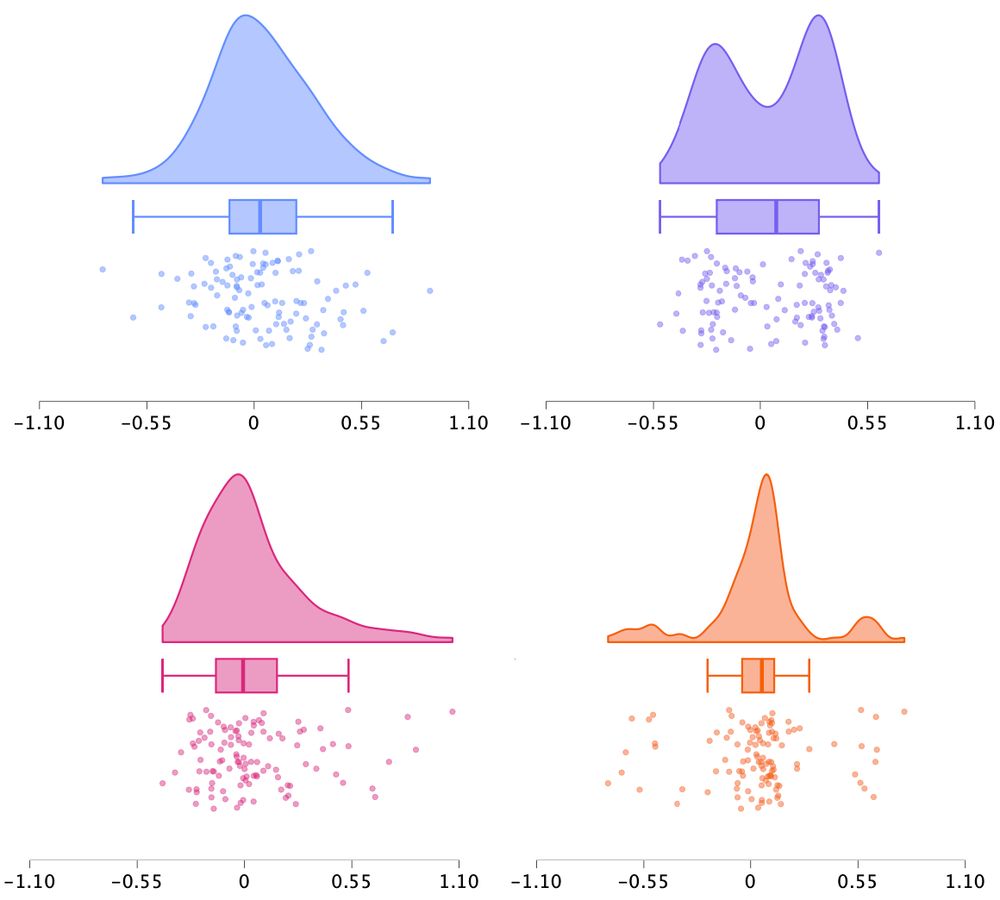

Four raincloud plots: a normal distribution, bimodal, skewed, and one riddled with outliers. Popular sample statistics are identical so that mere plotting of the identical means and confidence intervals would miss these qualitative differences.

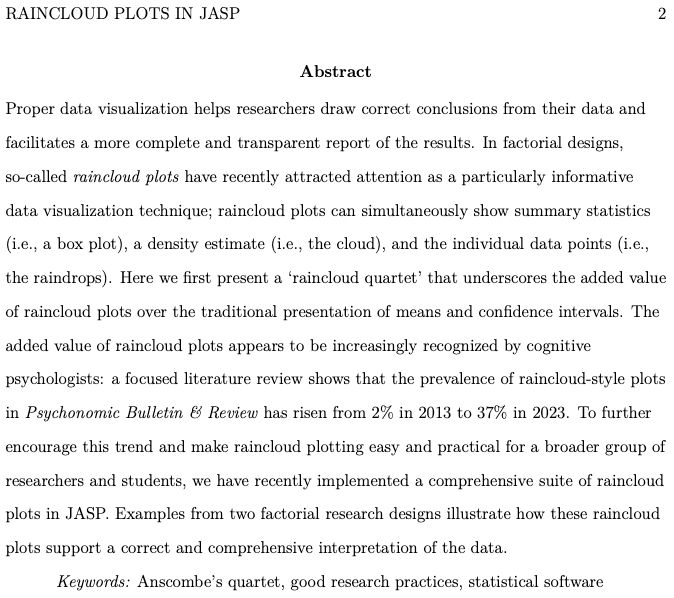

abstract of pre-print

The Raincloud Quartet (new pre-print)

All N = 111, M = 0.04, SD = 0.27.

One-sided t-tests vs. 0 yield: t(110) = 1.67, p = .049.

Only raincloud plots reveal the qualitative differences, preventing the drawing of inappropriate conclusions.
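To make the point concrete, here is a minimal sketch of how four differently shaped samples can end up with the same N, mean, SD, and t statistic. It is an illustration only, not the method used in the pre-print: the helper names (`rescale`, `one_sample_t`), the four generating distributions, and the random seed are all assumptions, and the targets 0.04 and 0.27 are the rounded values quoted above.

```python
import math
import random
import statistics


def rescale(sample, target_mean, target_sd):
    """Linearly map a sample so its mean and sample SD match the targets."""
    m = statistics.fmean(sample)
    s = statistics.stdev(sample)
    return [target_mean + target_sd * (x - m) / s for x in sample]


def one_sample_t(sample, mu0=0.0):
    """One-sample t statistic against mu0, with n - 1 degrees of freedom."""
    n = len(sample)
    standard_error = statistics.stdev(sample) / math.sqrt(n)
    return (statistics.fmean(sample) - mu0) / standard_error


rng = random.Random(0)  # fixed seed, chosen arbitrarily for reproducibility
n = 111

# Four hypothetical raw shapes; only their form matters, not their scale.
shapes = {
    "normal": [rng.gauss(0, 1) for _ in range(n)],
    "bimodal": [rng.gauss(-2, 0.5) if i % 2 else rng.gauss(2, 0.5) for i in range(n)],
    "skewed": [rng.expovariate(1.0) for _ in range(n)],
    "outliers": [rng.gauss(0, 0.2) for _ in range(n - 3)] + [5.0, 6.0, -7.0],
}

# After rescaling, every sample shares the same mean and SD by construction.
quartet = {name: rescale(xs, 0.04, 0.27) for name, xs in shapes.items()}

for name, xs in quartet.items():
    print(name, round(statistics.fmean(xs), 2), round(statistics.stdev(xs), 2),
          round(one_sample_t(xs), 2))
```

Because t depends only on N, the mean, and the SD, all four samples print the same t. With the rounded inputs used here it comes out near 1.56 rather than the reported 1.67, which is what one would expect if the unrounded mean is slightly above 0.04.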

How did we create the quartet?

Where are data & code?

🧵👇