Thanks for following the thread and big shout out to the team:

Zechen Zhang and @hidenori8tanaka.bsky.social

Here is the preprint:

arxiv.org/abs/2505.01812

14/n

@corefpark.bsky.social

https://cfpark00.github.io/

Thanks for following the thread and big shout out to the team:

Zechen Zhang and @hidenori8tanaka.bsky.social

Here is the preprint:

arxiv.org/abs/2505.01812

14/n

Key Takeaways:

✅ There's a clear FT-ICL gap

✅ Self-QA largely mitigates it

✅ Larger models are more data efficient learners

✅ Contextual shadowing hurts fine-tuning

Please check out the paper (see below) for even more findings!

13/n

For humans, this contextualizes the work and helps integration, but this might be hindering learning in LLMs.

We are working on confirming this hypothesis on real data.

12/n

We suspect this effect significantly harms fine-tuning as we know!

Let's take the example of research papers. In a typical research paper, the abstract usually “spoils” the rest of the paper.

11/n

⚠️⚠️ But here comes drama!!!

What if the news appears in the context upstream of the *same* FT data?

🚨 Contextual Shadowing happens!

Prefixing the news during FT *catastrophically* reduces learning!

10/n

Next, we analyzed Sys2-FT from a scaling law perspective. We found an empirical scaling law of Sys2-FT where the knowledge integration is a function of the compute spent.

Larger models are thus more data efficient learners!

Note that this scaling isn’t evident in loss.

9/n

Interestingly, Sys2-FT shines most in domains where System-2 inference has seen the most success: Math and Coding.

8/n

Among these protocols, Self-QA especially stood out, largely mitigating the FT-ICL gap and integrating the given knowledge into the model!

Training on synthetic Q/A pairs really boost knowledge integration!

7/n

Inspired by cognitive science on memory consolidation, we introduce System-2 Fine-Tuning (Sys2-FT). Models actively rehearse, paraphrase, and self-play about new facts to create fine-tuning data. We explore three protocols: Paraphrase, Implication, and Self-QA.

6/n

As expected, naïve fine-tuning on the raw facts isn’t enough to integrate knowledge across domains or model sizes up to 32B.

We call this the FT-ICL gap.

5/n

But how do we update the model’s weights to bake in this new rule?

To explore this, we built “New News”: 75 new hypothetical (but non-counterfactual) facts across diverse domains, paired with 375 downstream questions.

4/n

Today’s LLMs can effectively use *truly* novel information given as a context.

Given:

- Mathematicians defined 'addiplication' as (x+y)*y

Models can answer:

Q: What is the addiplication of 3 and 4?

A: (3+4)*4=28

3/n

TL;DR: We present “New News,” a dataset for measuring belief updates, and propose Self-QA, a highly effective way to integrate new knowledge via System-2 thinking at training time. We also show that in-context learning can actually hurt fine-tuning.

2/n

🚨 New Paper!

A lot happens in the world every day—how can we update LLMs with belief-changing news?

We introduce a new dataset "New News" and systematically study knowledge integration via System-2 Fine-Tuning (Sys2-FT).

1/n

New paper <3

Interested in inference-time scaling? In-context Learning? Mech Interp?

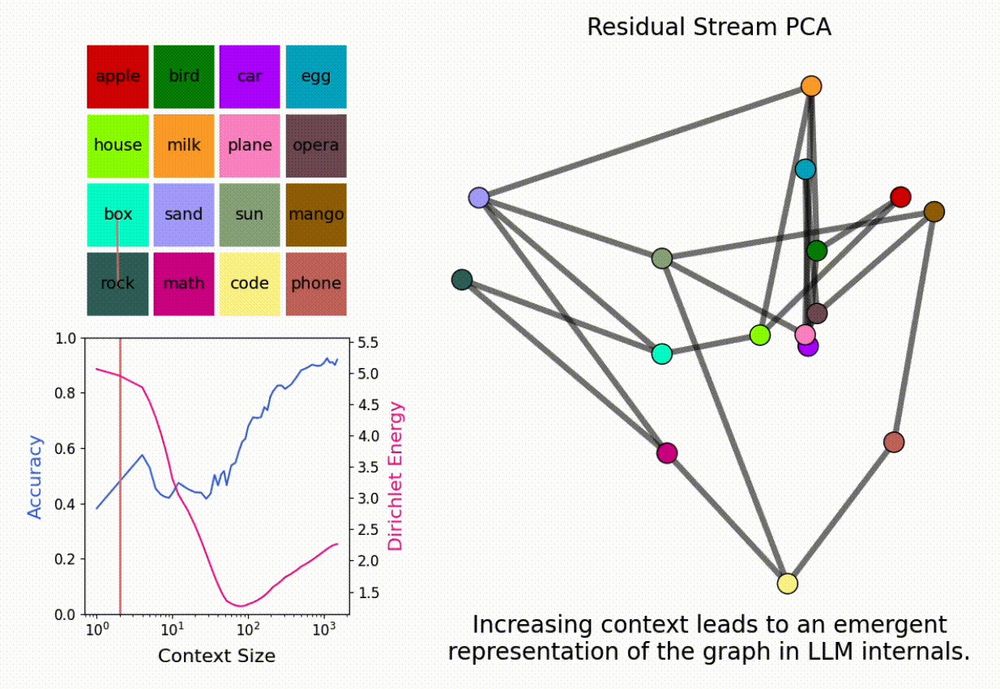

LMs can solve novel in-context tasks, with sufficient examples (longer contexts). Why? Bc they dynamically form *in-context representations*!

1/N

Special thanks to @ndif-team.bsky.social for letting us run these experiments remotely!

15/n

This project was a true collaborative effort where everyone contributed to major parts of the project!

Big thanks to the team: @ajyl.bsky.social, @ekdeepl.bsky.social, Yongyi Yang, Maya Okawa, Kento Nishi, @wattenberg.bsky.social, @hidenori8tanaka.bsky.social

14/n

We further investigate how this critical context size for an in-context transition scales with graph size.

We find a power law relationship between the critical context size and the graph size.

13/n

We find that LLMs indeed minimize the spectral energy on the graph and the rule-following accuracy sharply rises after the energy hits a minimum!

12/n

How to explain these results? We hypothesize a model runs an implicit optimization process to adapt to context-specified tasks (akin to in-context GD by @oswaldjoh et al), prompting an analysis of Dirichlet energy between the ground-truth graph & model representation.

11/n

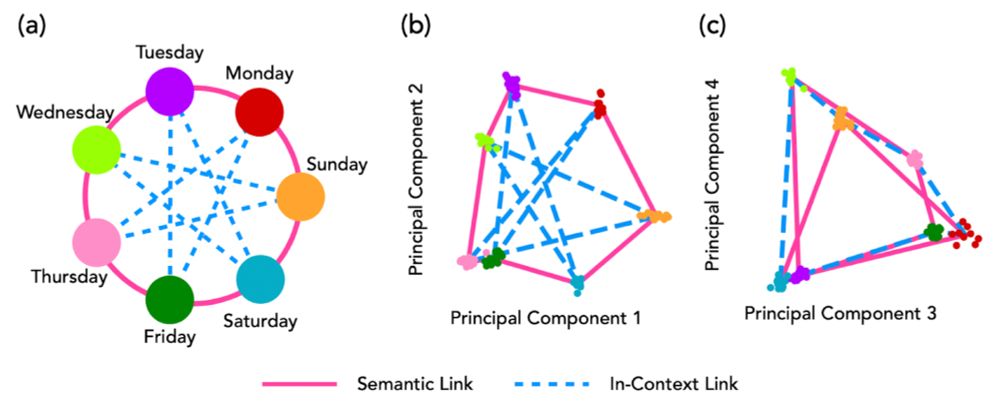

What happens when there is a strong semantic structure acquired during pretraining?

We set up a task where the days of the week should be navigated in an unusual way: Mon -> Thu -> Sun, etc.

Here, we find that in-context representations show up in higher PC dimensions.

10/n

We call these context dependent representations “In-Context Representations” and these appear robustly across graph structures and models.

9/n

What about a different structure?

Here, we used a ring graph and sampled random neighbors on the graph.

Again, we find that internal representations re-organizes to match the task structure.

8/n

Interestingly, a similar phenomenon was observed in humans! One can reconstruct the graph underlying a sequence of random images from fMRI scans of the brain during the task.

elifesciences.org/articles/17086

7/n

Surprisingly, when we input this sequence to Llama-3.1-8B, the model’s internal representations show an emergent grid structure matching the task in its first principal components!

6/n

But do LLM representations also reflect the structure of a task given purely in context?

To explore this question, we set up a synthetic task where we put words on a grid and perform a random walk. The random walk outputs the words it accessed as a sequence.

5/n

We know that LLM representations reflect the structure of the real world’s data generating process. For example, @JoshAEngels showed that the days of the weeks are represented as a ring in the residual stream.

x.com/JoshAEngels/...

4/n

w/ @ajyl.bsky.social, @ekdeepl.bsky.social, Yongyi Yang, Maya Okawa, Kento Nishi, @wattenberg.bsky.social, @hidenori8tanaka.bsky.social

3/n

TL;DR: Given sufficient context, LLMs can suddenly shift from their concept representations to 'in-context representations' that align with the task structure!

Paper: arxiv.org/abs/2501.00070

2/n

New paper! “In-Context Learning of Representations”

What happens to an LLM’s internal representations in the large context limit?

We find that LLMs form “in-context representations” to match the structure of the task given in context!