Thanks!

03.03.2025 18:11 — 👍 0 🔁 0 💬 0 📌 0

Tom Cunningham

@testingham.bsky.social

Economics Research at OpenAI.

I think the effects of AI on the offense-defense balance across many domains are a really important topic & I'm surprised there aren't more people working on it; it seems a perfect fit for econ theorists.

03.03.2025 16:42 — 👍 1 🔁 0 💬 0 📌 0

How does AI change the balance of power in content moderation & communication?

I wrote something on this (pre-OpenAI) with a simple prediction:

1. Where the ground truth is human judgment, AI favors defense.

2. Where the ground truth is facts in the world, AI favors offense.

Thank you!

31.01.2025 20:51 — 👍 0 🔁 0 💬 0 📌 0

A new post: On Deriving Things

(about the time spent going back and forth between clipboard, whiteboard, blackboard & keyboard)

tecunningham.github.io/posts/2020-1...

I also talk about the related point that outliers typically have one big cause rather than many small causes.

28.12.2024 00:31 — 👍 1 🔁 0 💬 0 📌 0

Some more formalization of the argument here:

tecunningham.github.io/posts/2024-1...

2. If a drug is associated with a 5% higher rate of birth defects it’s probably a selection effect; if it’s associated with a 500% higher rate of birth defects it’s probably causal.

28.12.2024 00:31 — 👍 0 🔁 0 💬 1 📌 0

When too much good news is bad news:

1. If an AB test shows an effect of +2% (±1%) it’s very persuasive, but if it shows an effect of +50% (±1%) then the experiment was probably misconfigured, and it’s not at all persuasive.
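One way to make the AB-test case concrete is a two-component mixture: most experiments are sound and have small true effects, while a small share are misconfigured and can report almost anything. The sketch below is an illustration only, not the model from the linked post. Every number in it (a 2% spread of true effects, a 1% misconfiguration rate, a 50% spread for buggy readouts, and reading ±1% as a standard error of 0.5) is an assumption chosen for the example, and `prob_misconfigured` is a hypothetical helper name.

```python
import math


def normal_pdf(x, sd):
    """Density at x of a zero-mean normal with standard deviation sd."""
    return math.exp(-0.5 * (x / sd) ** 2) / (sd * math.sqrt(2 * math.pi))


def prob_misconfigured(estimate, se=0.5, prior_sd=2.0, bug_sd=50.0, bug_rate=0.01):
    """Posterior probability that the experiment is misconfigured.

    All defaults are illustrative assumptions, in percentage points:
      se        standard error of the estimate
      prior_sd  spread of true effects among sound experiments
      bug_sd    spread of reported effects among misconfigured experiments
      bug_rate  prior share of experiments that are misconfigured
    """
    # A sound experiment reports true effect plus sampling noise.
    sound = (1 - bug_rate) * normal_pdf(estimate, math.sqrt(prior_sd ** 2 + se ** 2))
    # A misconfigured experiment reports something drawn from a very wide distribution.
    buggy = bug_rate * normal_pdf(estimate, bug_sd)
    return buggy / (sound + buggy)


for estimate in (2.0, 50.0):
    print(estimate, prob_misconfigured(estimate))
```

Under these assumptions a +2% readout leaves the misconfiguration probability well under 1%, while a +50% readout pushes it to nearly 1, even though both estimates have the same standard error.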

Full post here:

tecunningham.github.io/posts/2023-1...

Choosing headcount? Increasing headcount on a team will shift out the Pareto frontier of a team, and so you can then sketch out the *combined* Pareto frontier across metrics as you reallocate headcount.

25.10.2023 15:48 — 👍 0 🔁 0 💬 1 📌 0

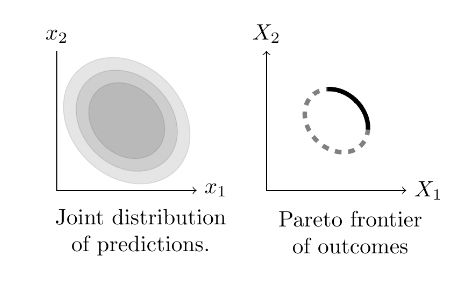

Choosing ranking weights? You can think of the set of classifier scores (pClick,pReport) as drawn from a distribution, and if additive it's easy to calculate the Pareto frontier, and if Gaussian then the Pareto frontier is an ellipse.

25.10.2023 15:48 — 👍 0 🔁 0 💬 1 📌 0

Choosing launch criteria? You can think of the set of experiments as pairs (ΔX,ΔY) from some joint distribution, and if additive it's easy to calculate the Pareto frontier, and if (ΔX,ΔY) are Gaussian then the Pareto frontier is an ellipse.
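A small sketch of why the Gaussian case gives an ellipse, under assumptions that are mine rather than the post's: effects (ΔX,ΔY) are zero-mean bivariate Gaussian with covariance Σ, impacts add up across launched experiments, and the launch rule is "launch if w·(ΔX,ΔY) > 0" for some weight vector w. In that setup the expected per-experiment gain is Σw / sqrt(2π · w'Σw), and as w rotates those points satisfy p'Σ⁻¹p = 1/(2π), which is an ellipse. The function names below are hypothetical.

```python
import math
import random


def frontier_point(theta, var_x, var_y, cov_xy):
    """Expected (dX, dY) per experiment when launching iff w . (dX, dY) > 0,
    with w = (cos theta, sin theta) and zero-mean Gaussian effects."""
    w_x, w_y = math.cos(theta), math.sin(theta)
    sw_x = var_x * w_x + cov_xy * w_y        # first component of Sigma w
    sw_y = cov_xy * w_x + var_y * w_y        # second component of Sigma w
    score_sd = math.sqrt(w_x * sw_x + w_y * sw_y)   # sd of the launch score
    scale = score_sd * math.sqrt(2 * math.pi)
    return sw_x / scale, sw_y / scale


def ellipse_form(p_x, p_y, var_x, var_y, cov_xy):
    """Quadratic form p' Sigma^{-1} p, constant on an ellipse."""
    det = var_x * var_y - cov_xy ** 2
    return (var_y * p_x ** 2 - 2 * cov_xy * p_x * p_y + var_x * p_y ** 2) / det


def simulated_point(theta, var_x, var_y, cov_xy, n=100_000, seed=0):
    """Monte Carlo check of frontier_point using simulated experiments."""
    rng = random.Random(seed)
    w_x, w_y = math.cos(theta), math.sin(theta)
    sd_x = math.sqrt(var_x)
    slope = cov_xy / var_x
    resid_sd = math.sqrt(var_y - cov_xy ** 2 / var_x)
    total_x = total_y = 0.0
    for _ in range(n):
        d_x = sd_x * rng.gauss(0, 1)
        d_y = slope * d_x + resid_sd * rng.gauss(0, 1)
        if w_x * d_x + w_y * d_y > 0:        # launch only if the weighted score is positive
            total_x += d_x
            total_y += d_y
    return total_x / n, total_y / n


if __name__ == "__main__":
    var_x, var_y, cov_xy = 1.0, 2.0, -0.5    # illustrative covariance, an assumption
    for k in range(8):
        theta = k * math.pi / 4
        p = frontier_point(theta, var_x, var_y, cov_xy)
        print(round(theta, 2), p, ellipse_form(*p, var_x, var_y, cov_xy))
```

The Pareto frontier is the upper-right arc of that ellipse, where both weights are non-negative. With non-zero mean effects or a non-zero launch threshold the algebra changes, so this is only the simplest case.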

25.10.2023 15:47 — 👍 0 🔁 0 💬 1 📌 0

New post: Thinking about tradeoffs? Draw an ellipse.

With applications to (1) experiment launch rules; (2) ranking weights in a recommender; and (3) allocating headcount in a company.

My daughter and I have an arrangement: She does whatever I ask her to do. In return I only ask her to do things that I know she's going to do anyway.

23.10.2023 14:16 — 👍 0 🔁 0 💬 0 📌 0

6. To extrapolate the effect of metric B from metric A, it's best to do a cross-experiment regression, but be careful about bias.
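A hedged illustration of one way such a regression can be biased (not necessarily the bias the linked note has in mind): if each experiment's estimates of A and B carry sampling noise, and that noise is correlated because both metrics come from the same users, the OLS slope of estimated B on estimated A mixes the true relationship with the noise relationship. The simulation below assumes true effects satisfy B = beta · A exactly; all parameter values and function names are hypothetical.

```python
import math
import random


def ols_slope(xs, ys):
    """Slope from a simple least-squares regression of ys on xs."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    return s_xy / s_xx


def simulated_slope(n_experiments, beta, effect_sd, se_a, se_b, noise_corr, seed=0):
    """Regress estimated effects on B against estimated effects on A."""
    rng = random.Random(seed)
    a_hat, b_hat = [], []
    for _ in range(n_experiments):
        true_a = rng.gauss(0, effect_sd)
        true_b = beta * true_a               # assumed true relationship
        z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
        noise_a = se_a * z1
        noise_b = se_b * (noise_corr * z1 + math.sqrt(1 - noise_corr ** 2) * z2)
        a_hat.append(true_a + noise_a)
        b_hat.append(true_b + noise_b)
    return ols_slope(a_hat, b_hat)


def expected_slope(beta, effect_sd, se_a, se_b, noise_corr):
    """Large-sample OLS slope: signal covariance plus noise covariance,
    divided by signal variance plus noise variance of A."""
    return (beta * effect_sd ** 2 + noise_corr * se_a * se_b) / (effect_sd ** 2 + se_a ** 2)


if __name__ == "__main__":
    for rho in (0.0, 0.8):
        print(rho,
              simulated_slope(20_000, 0.5, 1.0, 1.0, 1.0, rho),
              expected_slope(0.5, 1.0, 1.0, 1.0, rho))
```

With uncorrelated noise the slope is attenuated toward zero, and with positively correlated noise it is pulled toward the noise correlation, so in neither case does the raw slope recover beta.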

17.10.2023 19:09 — 👍 1 🔁 0 💬 0 📌 0

4. Empirical Bayes estimates are useful but only incorporate some information, so shouldn't be treated as best guesses of true causal effects.

5. Launch criteria should identify "final" metrics with conversion factors from "proximal" metrics. Don't make decisions on stat-significance.

Claims:

1. Experiments are not the primary way we learn causal relationships.

2. A simple Gaussian model gives you a robust way of thinking about challenging cases.

3. The Bayesian approach makes it easy to think about things that are confusing (multiple testing, peeking, selective reporting).
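For claim 2, a minimal sketch of what a simple Gaussian model can look like, assuming a normal prior over true effects and a normally distributed estimate with known standard error. This is the textbook normal-normal update, not necessarily the exact model in the linked note; `gaussian_posterior` and its default prior are hypothetical choices.

```python
import math


def gaussian_posterior(estimate, se, prior_mean=0.0, prior_sd=1.0):
    """Posterior mean and sd of the true effect under a normal prior
    and a normally distributed estimate with standard error se."""
    # Share of the distance from prior mean to estimate that we believe.
    weight = prior_sd ** 2 / (prior_sd ** 2 + se ** 2)
    post_mean = prior_mean + weight * (estimate - prior_mean)
    post_sd = math.sqrt(weight) * se
    return post_mean, post_sd


# A noisy estimate is shrunk heavily, a precise one barely at all.
print(gaussian_posterior(2.0, se=1.0))
print(gaussian_posterior(2.0, se=0.1))
```

The amount of shrinkage depends only on the ratio of the prior spread to the standard error, which is what makes the model easy to reason about by hand.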

A long and partisan note about experiment interpretation based on experience at Meta and Twitter.

The common thread is that people are pretty good intuitive Bayesian reasoners, so just summarize the relevant evidence and let a human be the judge:

tecunningham.github.io/posts/2023-0...

The most interesting mechanisms: (1) AI can find patterns which humans didn't know about; (2) AI can use human tacit knowledge, not available to our conscious brain.

06.10.2023 20:07 — 👍 0 🔁 0 💬 0 📌 0

It requires formalizing the relationship between the AI, the human, and the world. Interestingly there are a number of reasons why the AI, who only encounters the real world via mimicking human responses, can have a superior understanding of the world. Can describe this visually:

06.10.2023 20:06 — 👍 1 🔁 0 💬 1 📌 0

I wrote a long blog post working through the different ways in which an AI trained to *imitate* humans could *outperform* humans: tecunningham.github.io/posts/2023-0...

06.10.2023 20:04 — 👍 4 🔁 0 💬 1 📌 0