Excited to share that our lab will be presenting multiple projects at SPSP 2026!

If you’re interested in social perception, race talk, intergroup dynamics, or collective action — come check us out!

#SPSP2026 #SocialPsychology #PersonalityPsychology #AcademicResearch #RaceTalk

25.02.2026 19:34

A photo of Emily Finn, with text that reads, “Emily Finn, assistant professor of Psychological and Brain Sciences. Janet Taylor Spence Award for Transformative Early-Career Contributions.”

Professor @esfinn.bsky.social of @dartmouthpbs.bsky.social received a 2026 Janet Taylor Spence Award for Transformative Early-Career Contributions from @psychscience.bsky.social for her groundbreaking work investigating the neural underpinnings of human behavior and cognition. https://bit.ly/4scvVxL

25.02.2026 16:50

Congratulations to @dartmouthpbs.bsky.social Prof Emily Finn (@esfinn.bsky.social) on winning the APS Spence Award!

23.02.2026 18:01

New preprint with @SamJung, @timbrady.bsky.social, and @violastoermer.bsky.social: osf.io/preprints/ps.... Here we uncover what might be driving the “meaningfulness benefit” in visual working memory (VWM). Studies show that real objects are remembered better in VWM tasks than abstract stimuli are. But why? 1/

09.02.2026 21:06

New preprint from Lindsey Tepfer (@ltjaql.bsky.social) and me! We silenced portions of internal monologues in two films to manipulate participants' access to characters' thoughts. Using intersubject correlation (ISC) and representational similarity analysis (RSA), we found that this manipulation aligned later neural processing of the narrative and the encoding of trait impressions.
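
For readers less familiar with the two methods named above, here is a minimal numpy sketch of leave-one-out intersubject correlation and a basic RSA comparison. It runs on synthetic data and assumes one preprocessed time course per subject for a single region; the function names are hypothetical, and this is not the analysis pipeline used in the preprint.

```python
import numpy as np

def leave_one_out_isc(data):
    """Leave-one-out intersubject correlation (ISC) for one region.

    data: array of shape (n_subjects, n_timepoints).
    Returns one value per subject: the Pearson r between that subject's
    time course and the mean time course of all other subjects.
    """
    data = np.asarray(data, dtype=float)
    n_subjects = data.shape[0]
    iscs = np.empty(n_subjects)
    for s in range(n_subjects):
        others_mean = np.delete(data, s, axis=0).mean(axis=0)
        iscs[s] = np.corrcoef(data[s], others_mean)[0, 1]
    return iscs

def rdm(patterns):
    """Representational dissimilarity matrix: 1 - Pearson r between rows.

    patterns: array of shape (n_items, n_features), e.g. one row per character.
    """
    return 1.0 - np.corrcoef(np.asarray(patterns, dtype=float))

def rsa_score(rdm_a, rdm_b):
    """Second-order similarity: correlate the off-diagonal upper triangles of two RDMs.
    Pearson r is used for brevity; rank-based (Spearman) correlation is also common."""
    iu = np.triu_indices_from(rdm_a, k=1)
    return np.corrcoef(rdm_a[iu], rdm_b[iu])[0, 1]

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    shared = rng.standard_normal(300)                           # stimulus-driven signal
    subjects = shared + 0.5 * rng.standard_normal((10, 300))    # plus subject-specific noise
    print(leave_one_out_isc(subjects).round(2))

    neural = rng.standard_normal((6, 50))                       # 6 items x 50 voxels
    behavior = neural + 0.5 * rng.standard_normal((6, 50))      # noisy copy of the same geometry
    print(round(rsa_score(rdm(neural), rdm(behavior)), 2))
```

ISC asks how similarly different viewers' brains track the same film; RSA asks whether two measures (say, neural patterns and trait ratings) share the same geometry across items.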

11.02.2026 20:26

Meeting People as Individuals, Not Assumptions

Why communication depends on updating our assumptions about others.

.@dartmouthpbs.bsky.social professor @stolkarjen.bsky.social's latest research highlights why individualized communication approaches are crucial, with insights that could improve understanding of autism.

05.02.2026 20:10

Photo of Tor Wager with overlaid text, “Tor Wager, Diana L. Taylor Distinguished Professor in Neuroscience. Atkinson Prize in Psychological and Cognitive Sciences.”

Congrats to @dartmouthpbs.bsky.social professor Tor Wager, who received the Atkinson Prize in Psychological and Cognitive Sciences from @nasonline.org. The prize recognizes Wager's pioneering research on the mind-body connection and innovative neuroimaging approaches. https://bit.ly/4k0glSM

26.01.2026 21:45

Models of Language and Communication - PSYC 51.17

Course materials for PSYC 51.17: Language Models from Scratch - Dartmouth College

Excited to be teaching a new undergraduate course on Models of Language and Conversation this term!

Check it out here: context-lab.com/llm-course/

I've added lots of fun interactive demos of chatbots and NLP techniques that let students dig into the approaches.

07.01.2026 13:10

I made a quirky little web app to help guide your lucid dreams: context-lab.com/dream-stream/

It's kind of like a "Netflix" or "Spotify" for lucid dreaming: you select different narratives to form a playlist, and then it uses your device's microphone to start playing when it detects you're in REM sleep.

11.01.2026 05:47

🧠 Why it matters 🧠

- The results challenge a prevailing view that 5‑HT2A activation alone drives psilocybin’s therapeutic actions.

- They highlight the importance of polypharmacology 🥳 and point to the 5‑HT1B receptor as a target for non‑hallucinogenic antidepressant and anxiolytic pharmacotherapies.

21.12.2025 19:52

GitHub - ContextLab/leetcode-solutions: Leetcode discussions, brainstorming, musings, and solutions

Leetcode discussions, brainstorming, musings, and solutions - ContextLab/leetcode-solutions

Remember when grinding leetcode was still a thing? If you'd like to hone your coding skills, or even just return to that simpler time for nostalgia's sake, you might enjoy this project from our group: github.com/ContextLab/l...

Happy hacking! 👩‍💻

06.11.2025 13:42

Hope to see all of the serotonin enthusiasts at the ISSR mixer at SfN on Monday (people who find dopamine rewarding are welcome too). Register here: pci.jotform.com/form/2528274...

12.11.2025 13:36

A plot showing a 3D projection of 8 "authors" (each represented with a differently colored and labeled dot). Stylistic distances between authors are reflected by spatial distances in the plot.

🚨 New preprint alert!

We use trained-from-scratch GPT-2 models to characterize & capture the unique writing styles of individual authors. We also develop a new LLM-based relative stylometric measure.

Paper: arxiv.org/abs/2510.21958

Code/data: github.com/ContextLab/l...

🤗: huggingface.co/contextlab
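
The intuition behind model-based stylometry can be shown with a toy example: a model trained on one author's text should assign lower loss (higher probability) to that author's unseen writing than a model trained on someone else's. The sketch below swaps in Laplace-smoothed unigram models for the trained-from-scratch GPT-2 models described in the post, and the corpora and function names are hypothetical; it is not the paper's relative stylometric measure.

```python
import math
from collections import Counter

def train_unigram(text, vocab, alpha=1.0):
    """Laplace-smoothed unigram model over a shared vocabulary.
    A toy stand-in for a per-author language model such as GPT-2."""
    counts = Counter(text.split())
    total = sum(counts.values()) + alpha * len(vocab)
    return {w: (counts[w] + alpha) / total for w in vocab}

def mean_loss(model, text):
    """Average negative log-probability (nats per token) of text under a model."""
    tokens = text.split()
    return -sum(math.log(model[t]) for t in tokens) / len(tokens)

def attribute(models, text):
    """Attribute text to the author whose model assigns it the lowest loss.
    Returns the winning author and every author's loss."""
    losses = {author: mean_loss(m, text) for author, m in models.items()}
    return min(losses, key=losses.get), losses

if __name__ == "__main__":
    corpora = {  # hypothetical training texts
        "A": "the cat sat on the mat the cat",
        "B": "a dog ran in a park a dog",
    }
    query = "the cat sat"
    # Shared vocabulary so every model can score every token
    vocab = set(query.split()).union(*(t.split() for t in corpora.values()))
    models = {a: train_unigram(t, vocab) for a, t in corpora.items()}
    print(attribute(models, query)[0])  # → A
```

Replacing `train_unigram` and `mean_loss` with per-author neural language models and their per-token cross-entropy keeps the same attribution logic.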

28.10.2025 04:28

Cognitive Science Graduate Admissions – Information about graduate admissions from the cognitive science faculty

We're excited to announce that Cognitive Science at Dartmouth is recruiting PhD students to work collaboratively with me, Steven Frankland, and Fred Callaway. Come study the principles and mechanisms that enable us to understand, plan, and act in the world! Info: sites.dartmouth.edu/cogscigrad/

23.10.2025 17:30

Fig. 1. a. Visual and auditory regions of interest (ROIs). b. Responses in a combination of visual (e.g., early dorsal visual stream; Fig. 1a, middle panel) and auditory regions were used to predict responses in the rest of the brain using MVPN. c. In order to identify brain regions that combine responses from auditory and visual regions, we identified voxels where predictions generated using the combined patterns from auditory regions and one set of visual regions jointly (as shown in Fig. 1b) are significantly more accurate than predictions generated using only auditory regions or only that set of visual regions.

I’m excited to share my first first-author paper, “Distinct portions of superior temporal sulcus combine auditory representations with different visual streams” (with @mtfang.bsky.social and @steanze.bsky.social), now out in The Journal of Neuroscience!

www.jneurosci.org/content/earl...
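
The comparison described in panel c of the figure caption above can be sketched in a few lines. This minimal version swaps ordinary least squares in for MVPN and uses synthetic "auditory" and "visual" predictors with a single hypothetical target voxel; it compares held-out prediction accuracy but does not run the significance test the caption describes.

```python
import numpy as np

def heldout_r(X_train, y_train, X_test, y_test):
    """Fit ordinary least squares (with an intercept) on the training split and
    return the Pearson r between predicted and observed held-out responses."""
    def add_bias(X):
        return np.column_stack([X, np.ones(len(X))])
    w, *_ = np.linalg.lstsq(add_bias(X_train), y_train, rcond=None)
    pred = add_bias(X_test) @ w
    return np.corrcoef(pred, y_test)[0, 1]

def compare_predictors(auditory, visual, target, n_train):
    """Held-out accuracy for predicting a target voxel from auditory-only,
    visual-only, and combined (concatenated) predictors."""
    predictors = {
        "auditory": auditory,
        "visual": visual,
        "combined": np.column_stack([auditory, visual]),
    }
    return {
        name: heldout_r(X[:n_train], target[:n_train], X[n_train:], target[n_train:])
        for name, X in predictors.items()
    }

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_timepoints = 400
    auditory = rng.standard_normal((n_timepoints, 5))  # synthetic auditory-ROI patterns
    visual = rng.standard_normal((n_timepoints, 5))    # synthetic visual-ROI patterns
    # A hypothetical target voxel that mixes both inputs, plus noise
    target = auditory.sum(axis=1) + visual.sum(axis=1) + 0.5 * rng.standard_normal(n_timepoints)
    print(compare_predictors(auditory, visual, target, n_train=300))
```

A voxel that genuinely combines the two streams should be predicted better by the combined model than by either input alone, which is the pattern the caption uses to identify integration regions.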

02.10.2025 15:20

Excited to share the preprint for my first first-author manuscript! @markthornton.bsky.social and I show that people hold robust, structured beliefs about how individual mental states unfold in intensity over time. We find that these beliefs are reflected in other domains of mental state understanding.

16.09.2025 14:46

Very excited to share @landrybulls.bsky.social's 1st lead-author preprint in my lab! Using datasets from MySocialBrain.org we measured people's beliefs about how mental states change in intensity over time, the dimensional structure of those beliefs, and their correlates: osf.io/preprints/ps... 🧵👇

16.09.2025 15:08

Effect of confound mass on true positive rates under FDR correction. Confound mass represents how large a confound is in terms of the product of its voxel extent and effect size. Results are shown at differing combinations of true effect size, true effect voxel extent, and sample size.

Inflated surface maps of meta-analytic z-statistics from Neurosynth for low-level confounds (top) and high-level cognitive tasks (bottom). Red reflects positive activations, blue reflects negative (de)activations, and darker colors indicate larger z-statistics. Maps are thresholded at |z| = 1 for visualization purposes.

Effect of confound effect size on true positive rates for task effects under FDR correction. Colors indicate sample sizes: N = 25 in blue, N = 50 in green, and N = 100 in orange. Effect sizes are reflected by the darkness of each color, with light shades representing d = .2, medium d = .5, and dark d = .8. The task brain maps and confound brain maps referenced in each panel are shown in Figure 3.

Effect of FDR-based publication bias on observed confound effect sizes. Simulated meta-analytic confound effect sizes are visualized through violin plots for each combination of task effect and confound effect examined in the neural data simulations. Meta-analyses featuring publication bias (orange) substantially inflate these effect size estimates in all cases, relative to meta-analyses featuring no publication bias (blue).

After 5 years, I finally carved out time to turn this blog post on FDR (markallenthornton.com/blog/fdr-pro...) into a manuscript. The preprint features a much broader range of simulations showing how FDR promotes confounds, and how this effect compounds with publication bias: osf.io/preprints/ps...
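
FDR correction in neuroimaging usually means the Benjamini-Hochberg (BH) step-up procedure. A minimal numpy sketch, assuming plain BH (the preprint's simulations may use a different variant), offers one way to see how FDR can reward confounds: the BH threshold adapts to the whole set of p-values, so adding many strongly significant confound voxels lets weaker task voxels pass. The p-values below are made up for illustration and are not from the preprint.

```python
import numpy as np

def benjamini_hochberg(p_values, q=0.05):
    """Benjamini-Hochberg step-up procedure.
    Returns a boolean mask marking the tests declared significant at FDR level q."""
    p = np.asarray(p_values, dtype=float)
    m = p.size
    order = np.argsort(p)                       # ranks tests from smallest p upward
    thresholds = q * np.arange(1, m + 1) / m    # q * i / m for rank i = 1..m
    below = p[order] <= thresholds
    significant = np.zeros(m, dtype=bool)
    if below.any():
        k = np.nonzero(below)[0].max()          # largest rank whose p passes its threshold
        significant[order[: k + 1]] = True      # reject every test up to that rank
    return significant

if __name__ == "__main__":
    task = [0.001, 0.01, 0.02, 0.03]    # illustrative task-effect voxels
    nulls = [0.5] * 96                   # voxels with no effect
    confound = [1e-6] * 100              # strongly "significant" confound voxels
    print(benjamini_hochberg(task + nulls)[:4].sum())             # → 0
    print(benjamini_hochberg(task + nulls + confound)[:4].sum())  # → 3
```

The task p-values are identical in both calls; only the added confound voxels change which of them survive correction.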

29.08.2025 15:43

Ventral Striatal Dopamine Increases following Hippocampal Sharp-Wave Ripples

Leading theories suggest that hippocampal replay drives offline learning through coupling with an internal teaching signal such as ventral striatal dopamine (DA); however, the relationship between hip...

Hung-tu Chen, Nicolas Tritsch, Matt van der Meer, and I have submitted a new preprint (doi.org/10.1101/2025...) in which we use simultaneous hippocampal ephys and ventral striatal (VS) fiber photometry to establish a link between sharp-wave ripples (SWRs) and VS dopamine (DA) in mice. (1/9)

04.08.2025 18:30

Clustrix Documentation

I'm starting to work on a new library, "clustrix" (clustrix.readthedocs.io/en/latest/), to ease switching between local and remote execution in Python scripts, notebooks, etc. This has been a pain point for my group for a while!

30.06.2025 04:47

Jason and I wearing Chicago Booth swag, inadvertently looking like new MBA students. We are smiling and celebrating at a restaurant!

Next summer I will start as an Assistant Professor of Behavioral Science at the University of Chicago Booth School of Business. I couldn't be more excited! 1/

30.06.2025 21:52

DataWrangler — datawrangler 0.4.0 documentation

🤠 New release announcement for our datawrangler package! Try it using:

pip install --upgrade pydata-wrangler

Lots of awesome performance improvements (including native polars support!), simplified API, support for @hf.co text embeddings, etc. More info here: data-wrangler.readthedocs.org

14.06.2025 12:10

Title: Representations of what’s possible reflect others’ epistemic states

Authors: Lara Kirfel, Matthew Mandelkern, and Jonathan Scott Phillips

Abstract: People’s judgments about what an agent can do are shaped by various constraints, including probability, morality, and normality. However, little is known about how these representations of possible actions (what we call modal space representations) are influenced by an agent’s knowledge of their environment. Across two studies, we investigated whether epistemic constraints systematically shift modal space representations and whether these shifts affect high-level force judgments. Study 1 replicated prior findings that the first actions that come to mind are perceived as the most probable, moral, and normal, and demonstrated that these constraints apply regardless of an agent’s epistemic state. Study 2 showed that limiting an agent’s knowledge changes which actions people perceive to be available for the agent, which in turn affects whether people judge the agent as being “forced” to take a particular action. These findings highlight the role of Theory of Mind in modal cognition, revealing how epistemic constraints shape perceptions of possibilities.

🏔️ Brad is lost in the wilderness—but doesn’t know there’s a town nearby. Was he forced to stay put?

In our #CogSci2025 paper, we show that judgments of what’s possible—and whether someone had to act—depend on what agents know.

📰 osf.io/preprints/ps...

w/ Matt Mandelkern & @jsphillips.bsky.social

16.05.2025 12:04

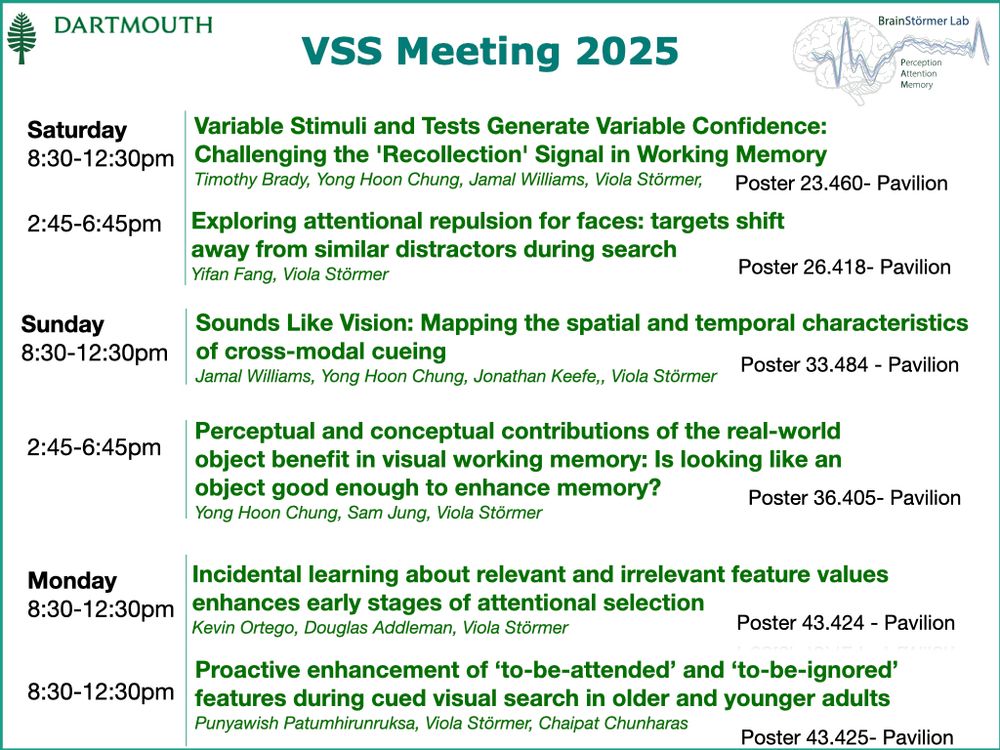

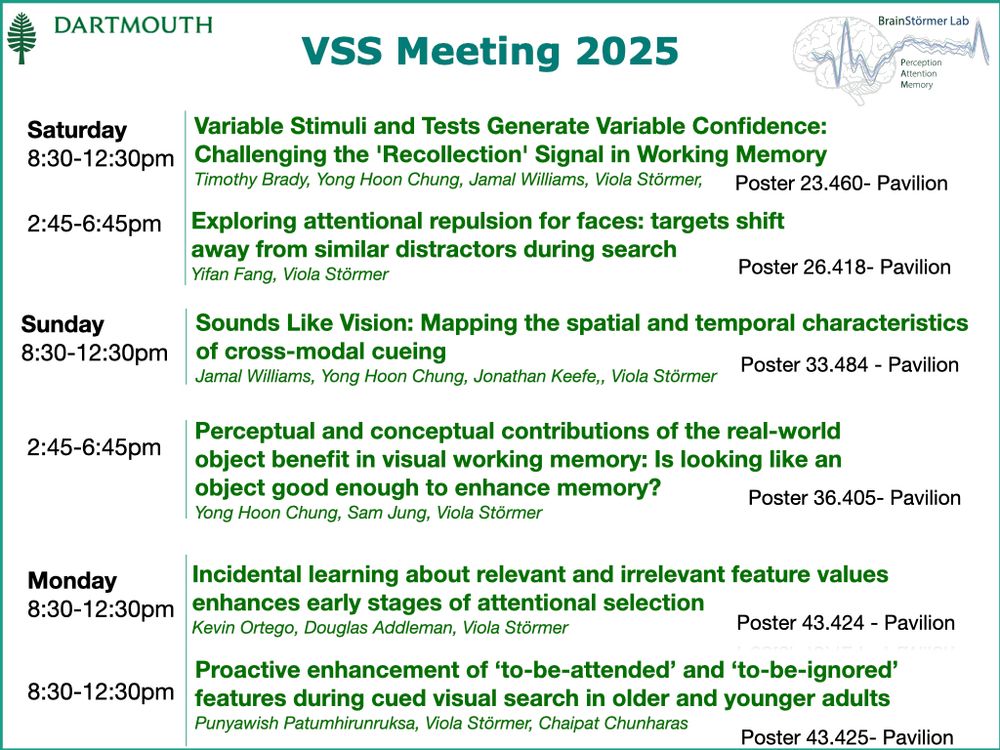

Excited to see everyone at #VSS2025! Come check out what my lab has been up to over the past year:

16.05.2025 16:04

Despite everything going on, I may have funds to hire a postdoc this year 😬🤞🧑‍🔬 Open to a wide variety of possible projects in social and cognitive neuroscience. Get in touch if you are interested! Reposts appreciated.

09.05.2025 19:01

New preprint! Thrilled to share my latest work with @esfinn.bsky.social: "Sensory context as a universal principle of language in humans and LLMs"

osf.io/preprints/ps...

05.05.2025 14:49