I will be giving a short talk on this work at the COLM Interplay workshop on Friday (also to appear at EMNLP)!

Will be in Montreal all week and excited to chat about LM interpretability + its interaction with human cognition and ling theory.

06.10.2025 12:05 — 👍 8 🔁 5 💬 0 📌 0

On my way to #COLM2025 🍁

Check out jessyli.com/colm2025

QUDsim: Discourse templates in LLM stories arxiv.org/abs/2504.09373

EvalAgent: retrieval-based eval targeting implicit criteria arxiv.org/abs/2504.15219

RoboInstruct: code generation for robotics with simulators arxiv.org/abs/2405.20179

06.10.2025 15:50 — 👍 12 🔁 4 💬 0 📌 0

Heading to #COLM2025 to present my first paper w/ @jennhu.bsky.social @kmahowald.bsky.social !

When: Tuesday, 11 AM – 1 PM

Where: Poster #75

Happy to chat about my work and topics in computational linguistics & cogsci!

Also, I'm on the PhD application journey this cycle!

Paper info 👇:

06.10.2025 16:05 — 👍 7 🔁 3 💬 0 📌 0

Also, I’m on the lookout for my first PhD student! If you’d like to be the one, please reach out to me (dms/email open) and we can chat!!

@jessyjli.bsky.social and @kmahowald.bsky.social are also hiring students, and we’re all eager to co-advise!

06.10.2025 15:22 — 👍 1 🔁 0 💬 0 📌 0

I’ll also be moderating a roundtable at the INTERPLAY workshop on Oct 10 — excited to discuss behavior, representations, and a third secret thing with folks!

06.10.2025 15:22 — 👍 0 🔁 0 💬 1 📌 0

All of us (@kanishka.bsky.social @kmahowald.bsky.social and me) are looking for PhD students this cycle! If computational linguistics/NLP is your passion, join us at UT Austin!

For my areas see jessyli.com

30.09.2025 19:30 — 👍 4 🔁 5 💬 0 📌 0

We'll all be attending #COLM2025 -- come say hi if you are interested in working with us!!

Separate tweet incoming for COLM papers!

30.09.2025 16:17 — 👍 0 🔁 0 💬 0 📌 0

Picture of the UT Tower with "UT Austin Computational Linguistics" written in bigger font, and "Humans processing computers processing human processing language" in smaller font

The compling group at UT Austin (sites.utexas.edu/compling/) is looking for PhD students!

Come join me, @kmahowald.bsky.social, and @jessyjli.bsky.social as we tackle interesting research questions at the intersection of ling, cogsci, and ai!

Some topics I am particularly interested in:

30.09.2025 16:17 — 👍 18 🔁 10 💬 3 📌 2

Sigmoid function. Non-linearities in a neural network allow it to behave in distributed and near-symbolic fashions.

New paper! 🚨 I argue that LLMs represent a synthesis between distributed and symbolic approaches to language, because, when exposed to language, they develop highly symbolic representations and processing mechanisms in addition to distributed ones.

arxiv.org/abs/2502.11856

30.09.2025 13:15 — 👍 26 🔁 11 💬 1 📌 0

Hehe but really — unifying all of them + easy access = yes plssss

27.09.2025 15:01 — 👍 1 🔁 0 💬 0 📌 0

Friendship ended with minicons, glazing is my new fav package!

27.09.2025 14:47 — 👍 5 🔁 0 💬 1 📌 0

God’s work 🙏

27.09.2025 14:46 — 👍 1 🔁 0 💬 0 📌 0

GitHub - aaronstevenwhite/glazing: Unified data models and interfaces for syntactic and semantic frame ontologies.

I've found it kind of a pain to work with resources like VerbNet, FrameNet, PropBank (frame files), and WordNet using existing tools. Maybe you have too. Here's a little package that handles data management, loading, and cross-referencing via either a CLI or a python API.

27.09.2025 13:51 — 👍 27 🔁 7 💬 3 📌 1

Would you say it’s a dead area right now? (Ignoring the podcasts)

26.09.2025 03:14 — 👍 0 🔁 0 💬 1 📌 0

Love to start the day by mistakenly stumbling onto hate speech against south-asians ty internet

21.09.2025 15:09 — 👍 4 🔁 0 💬 0 📌 0

Accepted at #NeurIPS2025! So proud of Yulu and Dheeraj for leading this! Be on the lookout for more "nuanced yes/no" work from them in the future 👀

18.09.2025 16:12 — 👍 6 🔁 1 💬 0 📌 0

Abstract deadline changed to *December 1, 2025*

07.09.2025 21:48 — 👍 13 🔁 5 💬 0 📌 0

How Linguistics Learned to Stop Worrying and Love the Language Models

📣@futrell.bsky.social and I have a BBS target article with an optimistic take on LLMs + linguistics. Commentary proposals (just need a few hundred words) are OPEN until Oct 8. If we are too optimistic for you (or not optimistic enough!) or you have anything to say: www.cambridge.org/core/journal...

15.09.2025 15:46 — 👍 50 🔁 10 💬 4 📌 3

Happy and proud to see @rjantonello.bsky.social’s work awarded by SNL!

13.09.2025 21:47 — 👍 28 🔁 4 💬 1 📌 0

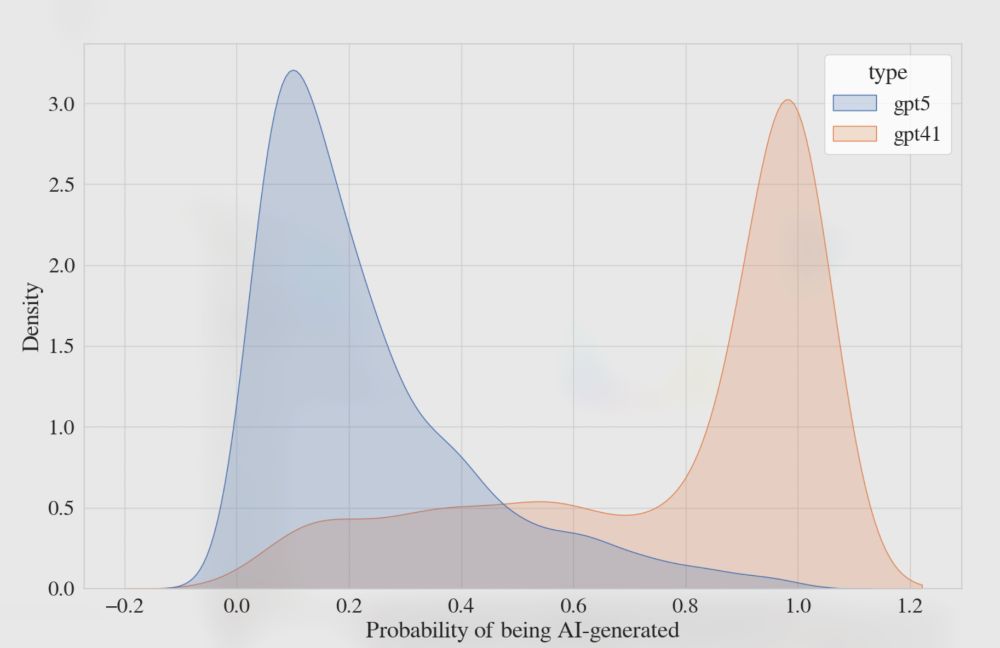

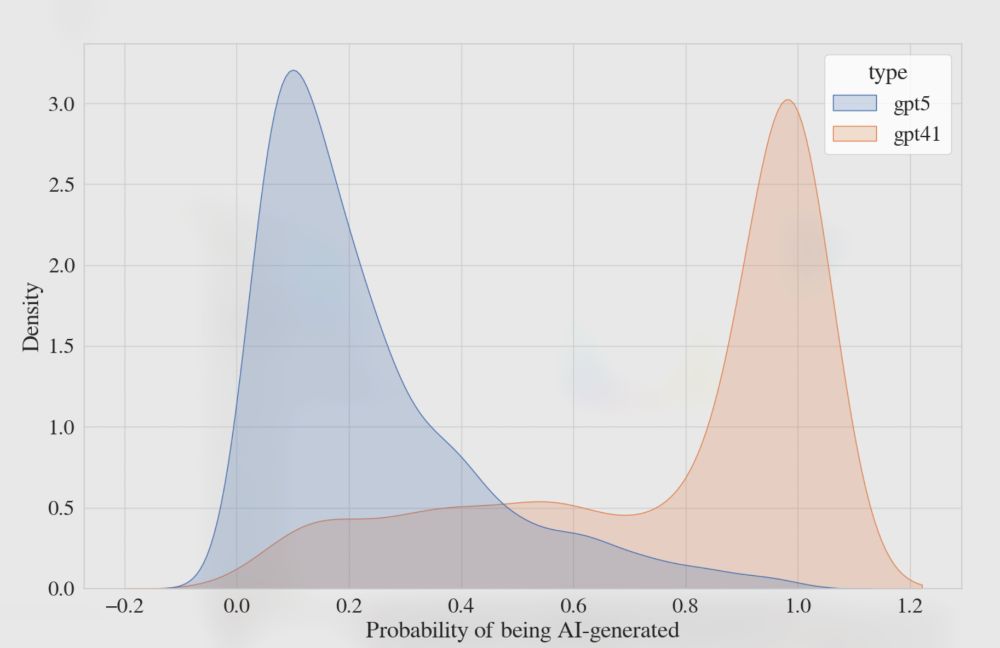

Density plot with X axis being probability of text being synthetic from an AI detector model. Plots show that GPT4.1 outputs are assigned high probability of being AI text, but GPT5 outputs are assigned low probability of being AI text.

Exhibit N on how synthetic text/AI detectors just don't work reliably. Generating some (long) sentences from GPT4.1 and GPT5 with the same prompt, the top open-source model on the RAID benchmark classifies most GPT4.1 outputs as synthetic and most GPT5 as not synthetic.

10.09.2025 20:05 — 👍 5 🔁 1 💬 0 📌 0

Title: The Cats of Cogsci. Two cats, Coco and Loki, with Northwestern Cognitive Science logo in the background. Coco is sitting on some books and Loki is holding an apple. Both are wearing glasses b/c they are academics.

Loki the cat has his paw on a laptop; text "Remember to add Cog Sci 110 to your shopping cart now in Caesar so that you're ready to enroll come September 12th!"

Super happy with Cogsci program assistant Chris Kent's work for our college Instagram feed. Glad I could get our Loki featured to advertise my class.

06.09.2025 20:15 — 👍 13 🔁 2 💬 0 📌 0

Excited to speak alongside such an illustrious set of speakers!

24.08.2025 14:49 — 👍 6 🔁 0 💬 0 📌 0

professor at university of washington and founder at csm.ai. computational cognitive scientist. working on social and artificial intelligence and alignment.

http://faculty.washington.edu/maxkw/

Research scientist at Google DeepMind.🦎

She/her.

http://www.aidanematzadeh.me/

Assoc. Prof in CS @ Northeastern, NLP/ML & health & etc. He/him.

phd. linguist. last name rhymes with “epic”.

www: sites.google.com/view/ryanlepic

Computational linguist trying to understand how humans and computers learn and use language 👶🧠🗣️🖥️💬

PhD @clausebielefeld.bsky.social, Bielefeld University

https://bbunzeck.github.io

Philosopher of science, author of 'Language, science, and structure' (OUP 2023) & 'The Philosophy of Theoretical Linguistics' (CUP 2024). Prof @ University of Cape Town & Bristol. Associate Editor Theoretical Linguistics (De Gruyter): ryannefdt.weebly.com

Psychology PhD Student at UW-Madison

Studying the evolution and development of complex cognition like language, number, and logic.

Postdoc at Utrecht University, previously PhD candidate at the University of Amsterdam

Multimodal NLP, Vision and Language, Cognitively Inspired NLP

https://ecekt.github.io/

Assistant Professor of Psychology at UW-Madison. PI, Cognitive Origins Lab, investigating the evolutionary and developmental origins of thought. He/Him. cognitiveoriginslab.psych.wisc.edu

PhD Student at UW-Madison studying language and cognition, inner speech, and semantic representation.

Personal Website: https://kira-breeden.github.io/

Philosopher, Scientist, Engineer

https://hokindeng.github.io/

Associate Professor, Department of Psychology, Harvard University. Computation, cognition, development.

PhD candidate interested in language, cognition, and computation at @ucirvine.bsky.social

Website: https://shiupadhye.github.io/

Assistant Professor at UCLA | Alum @MIT @Princeton @UC Berkeley | AI+Cognitive Science+Climate Policy | https://ucla-cocopol.github.io/

I do research in social computing and LLMs at Northwestern with @robvoigt.bsky.social and Kaize Ding.

Mom, Professor of Linguistics @colorado.edu. 1st gen Construction Grammarian, Fillmore protégée, using social semiotic syntax to combine climate-change action & meaning construction. Feet planted in Boulder, heart still in Berkeley

Assistant Professor of Cognitive AI @UvA Amsterdam

language and vision in brains & machines

cognitive science 🤝 AI 🤝 cognitive neuroscience

michaheilbron.github.io

Linguist at Georgia Tech (semantics, socio) with my own views; runner with type 1 diabetes; mom of 2