Soon hiring a lab manager! Looking for someone who is really interested in language neuroscience, who is organised, motivated, a great communicator, and who works well in a research team. Express interest by submitting this form: tinyurl.com/glysn-labman...

Reposts appreciated!

03.02.2026 16:14 — 👍 41 🔁 39 💬 3 📌 1

The Visual Learning Lab is hiring TWO lab coordinators!

Both positions are ideal for someone looking for research experience before applying to graduate school. Application deadline is Feb 10th (approaching fast!)—with flexible summer start dates.

30.01.2026 23:21 — 👍 48 🔁 41 💬 1 📌 0

Hopkins Cog Sci is hiring! We have two open faculty positions: one in vision and one in language. Please repost!

12.12.2025 18:18 — 👍 32 🔁 34 💬 0 📌 2

Excited to share our work on mechanisms of naturalistic audiovisual processing in the human brain 🧠🎬!!

www.biorxiv.org/content/10.1...

07.11.2025 16:01 — 👍 6 🔁 5 💬 9 📌 2

Call for applications to cognitive science PhD program with QR code to the link above

The department of Cognitive Science @jhu.edu is seeking motivated students interested in joining our interdisciplinary PhD program! Applications due 1 Dec

Our PhD students also run an application mentoring program for prospective students. Mentoring requests due November 15.

tinyurl.com/2nrn4jf9

30.10.2025 19:09 — 👍 12 🔁 9 💬 0 📌 2

🚨New preprint w/ @lisik.bsky.social!

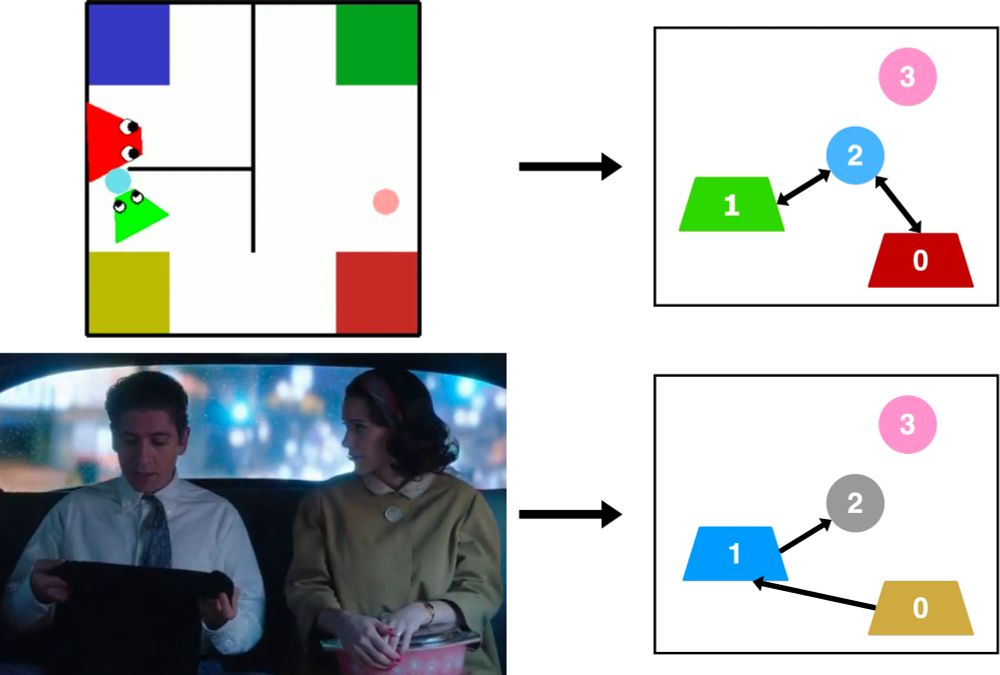

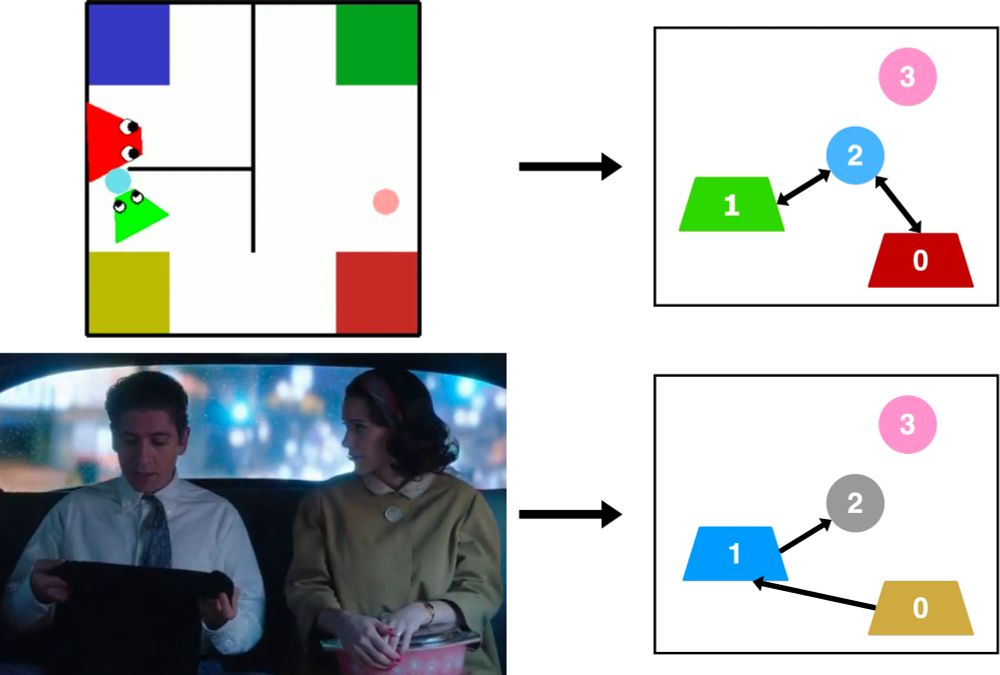

Aligning Video Models with Human Social Judgments via Behavior-Guided Fine-Tuning

We introduce a ~49k triplet social video dataset, uncover a modality gap (language > video), and close it via novel behavior-guided fine-tuning.

🔗 arxiv.org/abs/2510.01502

03.10.2025 13:48 — 👍 22 🔁 6 💬 1 📌 1

Laboratory Technician

About the Opportunity SUMMARY The Subjectivity Lab, directed by Jorge Morales, and housed in the Department of Psychology at Northeastern University is excited to invite applications for a full-time L...

🚨🚨🚨 The Subjectivity Lab is looking for a lab manager! The position is available immediately. We want someone who can help coordinate our large sample fMRI study, plus other behavioral work. Because *gestures at everything* the job was approved only now (ends in June 2026). Great opportunity! 🧵 1/4

29.09.2025 14:22 — 👍 22 🔁 29 💬 2 📌 1

My lab at USC is recruiting!

1) research coordinator: perfect for a recent graduate looking for research experience before applying to PhD programs: usccareers.usc.edu REQ20167829

2) PhD students: see FAQs on lab website dornsife.usc.edu/hklab/faq/

28.09.2025 21:46 — 👍 40 🔁 25 💬 1 📌 1

Follow-up analyses showed that both social perception and language regions were best predicted by later vision model layers that map onto high-level social semantic signals (valence, the presence of a social interaction, faces).

7/n

24.09.2025 19:51 — 👍 1 🔁 0 💬 1 📌 0

Importantly, vision and language embeddings are only weakly correlated throughout the movie, suggesting that the vision and language embeddings are each predicting distinct variance in the neural responses.

6/n

24.09.2025 19:51 — 👍 0 🔁 0 💬 1 📌 0

We find that vision embeddings dominate prediction across cortex. Surprisingly, even language-selective regions were well predicted by vision model embeddings, as well as or better than language model features.

5/n

24.09.2025 19:51 — 👍 0 🔁 0 💬 1 📌 0

We densely labeled the vision and language features of the movie using a combination of human annotations and vision and language deep neural network (DNN) models, and linearly mapped these features to fMRI responses using an encoding model.

4/n

24.09.2025 19:49 — 👍 0 🔁 0 💬 1 📌 0

To address this, we collected fMRI data from 34 participants while they watched a 45-minute naturalistic audiovisual movie. Critically, we used functional localizer experiments to identify social interaction perception and language-selective regions in the same participants.

3/n

24.09.2025 19:47 — 👍 0 🔁 0 💬 1 📌 0

Humans effortlessly extract social information from both the vision and language signals around us. However, most work (even most naturalistic fMRI encoding work) is limited to studying unimodal processing. How does the brain process simultaneous multimodal social signals?

2/n

24.09.2025 19:46 — 👍 0 🔁 0 💬 1 📌 0

Excited to share new work with @hleemasson.bsky.social, Ericka Wodka, Stewart Mostofsky and @lisik.bsky.social! We investigated how simultaneous vision and language signals are combined in the brain using naturalistic+controlled fMRI. Read the paper here: osf.io/b5p4n

1/n

24.09.2025 19:46 — 👍 49 🔁 11 💬 1 📌 2

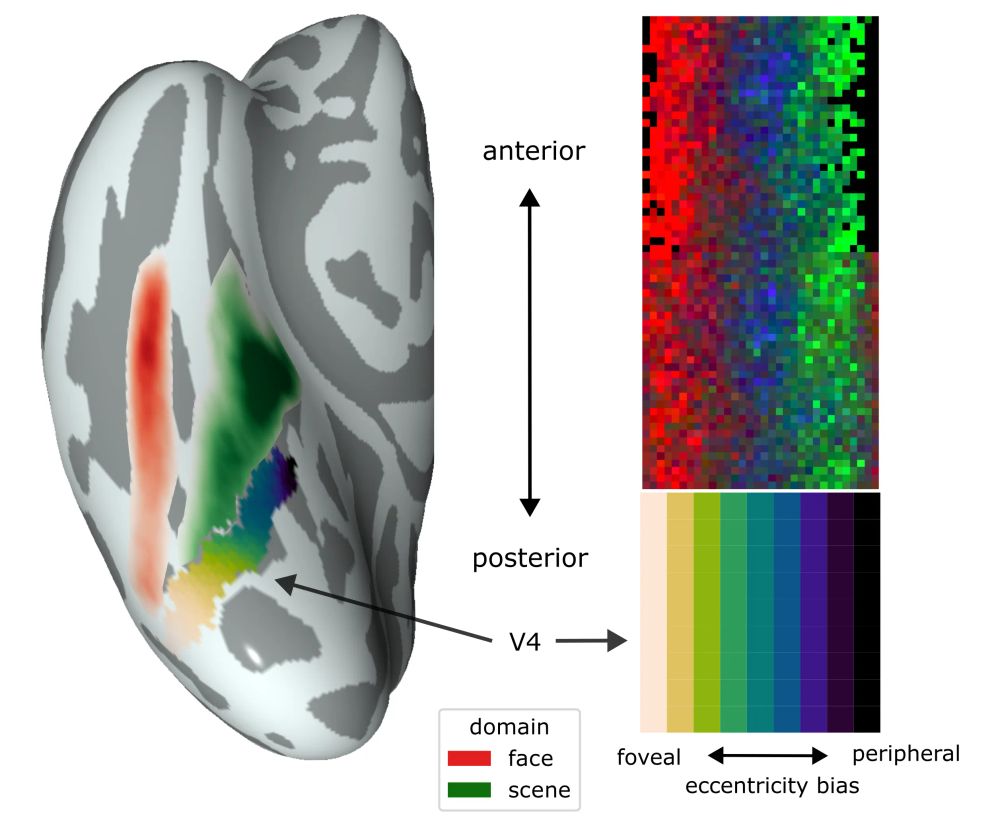

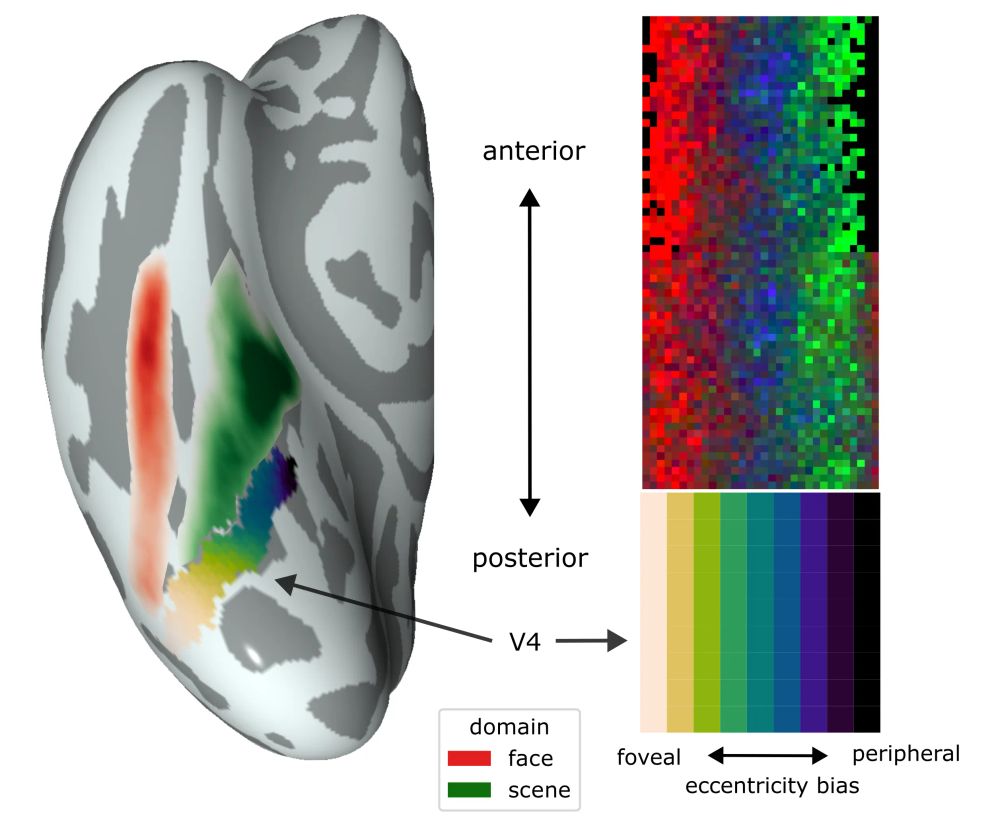

What shapes the topography of high-level visual cortex?

Excited to share a new pre-print addressing this question with connectivity-constrained interactive topographic networks, titled "Retinotopic scaffolding of high-level vision", w/ Marlene Behrmann & David Plaut.

🧵 ↓ 1/n

16.06.2025 15:11 — 👍 67 🔁 24 💬 1 📌 0

Despite everything going on, I may have funds to hire a postdoc this year 😬🤞🧑🔬 Open to a wide variety of possible projects in social and cognitive neuroscience. Get in touch if you are interested! Reposts appreciated.

09.05.2025 19:01 — 👍 130 🔁 102 💬 3 📌 5

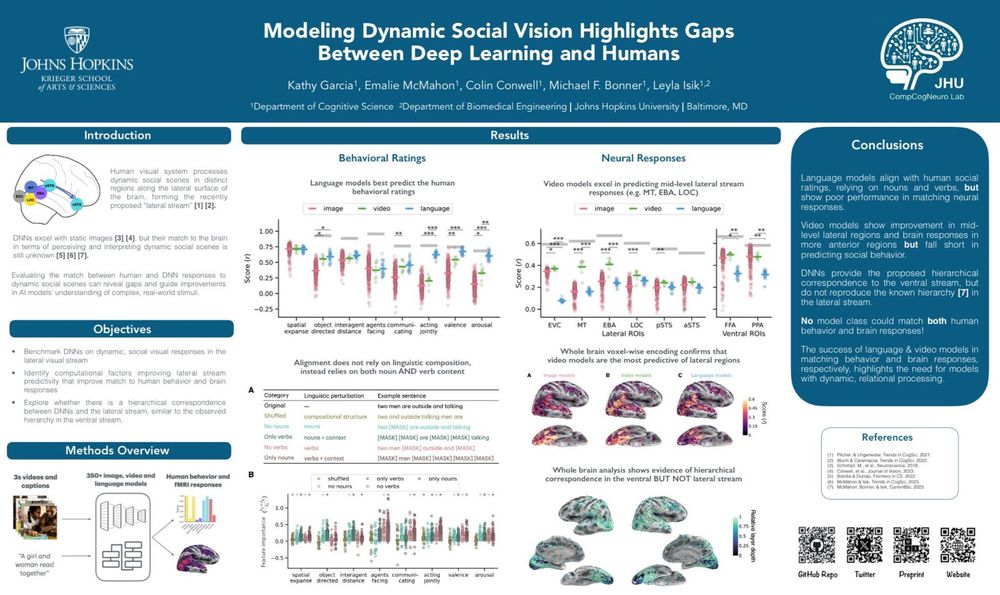

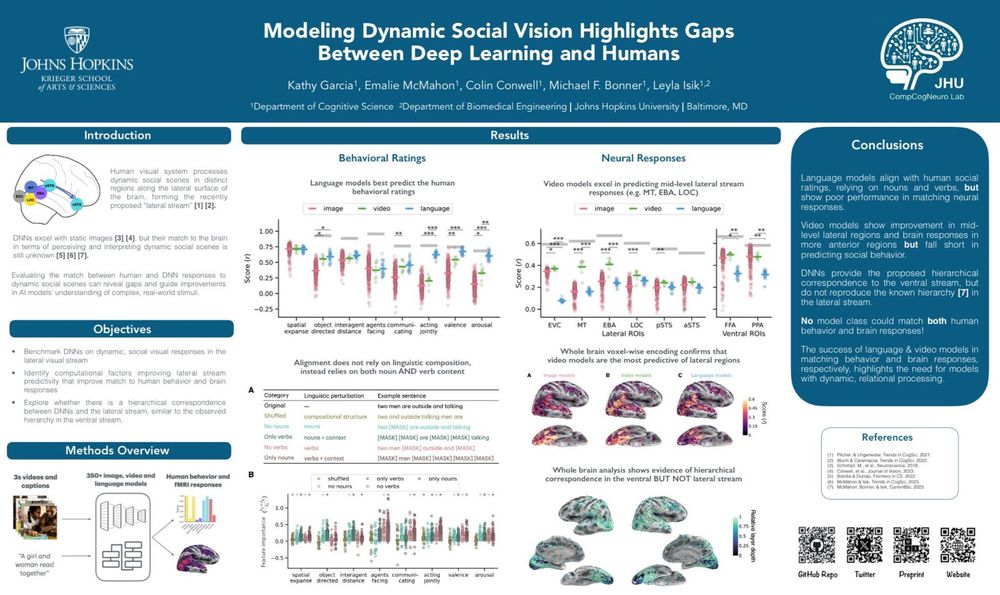

📢 Excited to announce our paper at #ICLR2025: “Modeling dynamic social vision highlights gaps between deep learning and humans”! w/ @emaliemcmahon.bsky.social, Colin Conwell, Mick Bonner, @lisik.bsky.social

📆 Thu, Apr 24: 3:00-5:30 - Poster session 2 (#64)

📄 bit.ly/4jISKES%E2%8... [1/6]

23.04.2025 18:07 — 👍 9 🔁 3 💬 1 📌 0

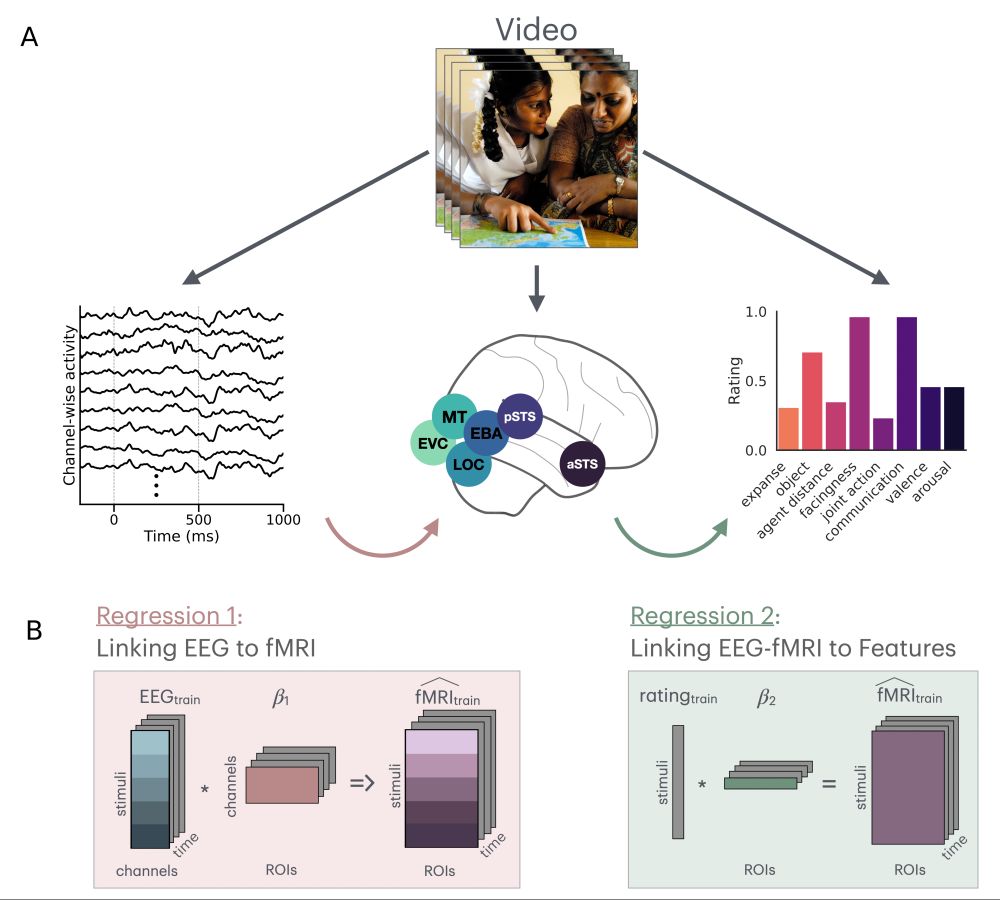

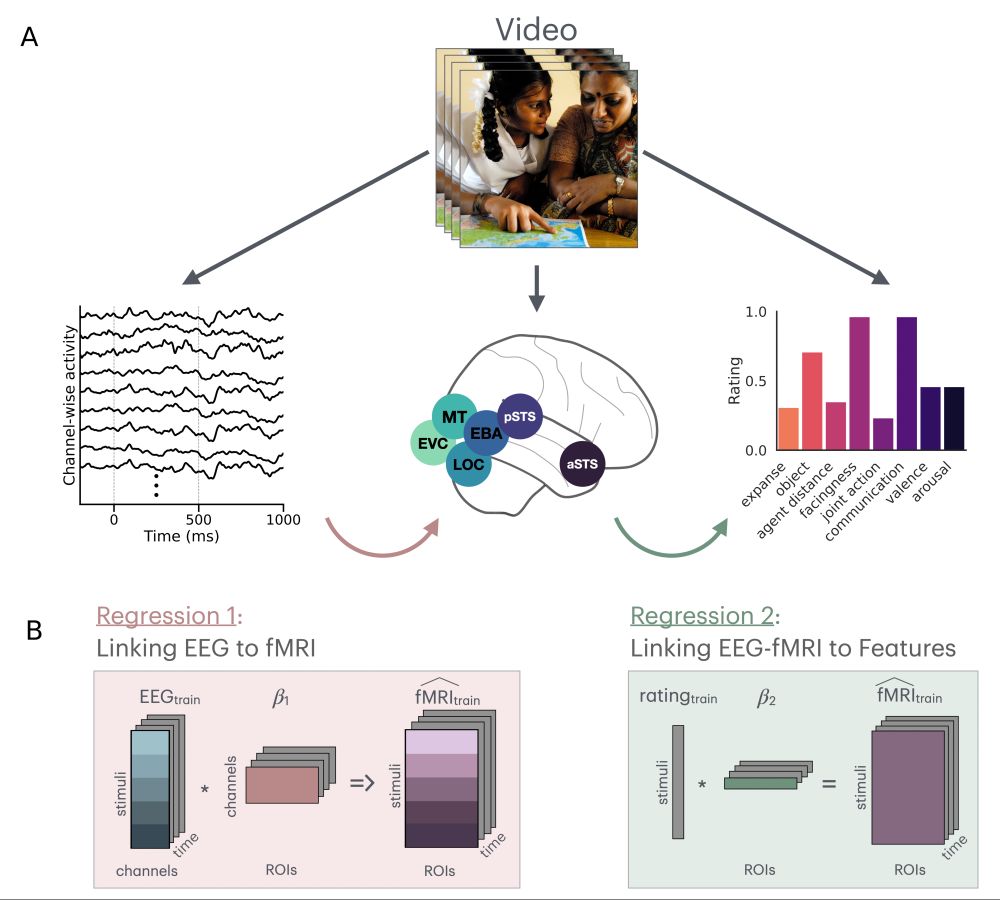

Shown is an example image that participants viewed in EEG, fMRI, or a behavioral annotation task. There is also a schematic of a regression procedure for jointly predicting fMRI responses from stimulus features and EEG activity.

I am excited to share our recent preprint and the last paper of my PhD! Here, @imelizabeth.bsky.social, @lisik.bsky.social, Mick Bonner, and I investigate the spatiotemporal hierarchy of social interactions in the lateral visual stream using EEG-fMRI.

osf.io/preprints/ps...

#CogSci #EEG

23.04.2025 15:34 — 👍 27 🔁 9 💬 1 📌 0

This is incredibly cool: if you search for a condition that’s affected your family, the site returns stats on how much NIH has done for that disease, *and* a contact form for reaching out to tell your Members of Congress why you want to see them defend NIH.

Pass it on!

21.04.2025 13:06 — 👍 618 🔁 405 💬 4 📌 3

The cerebellar components of the human language network

The cerebellum's capacity for neural computation is arguably unmatched. Yet despite evidence of cerebellar contributions to cognition, including language, its precise role remains debated. Here, we sy...

New paper! 🧠 **The cerebellar components of the human language network**

with: @hsmall.bsky.social @moshepoliak.bsky.social @gretatuckute.bsky.social @benlipkin.bsky.social @awolna.bsky.social @aniladmello.bsky.social and @evfedorenko.bsky.social

www.biorxiv.org/content/10.1...

1/n 🧵

21.04.2025 15:19 — 👍 50 🔁 20 💬 2 📌 3

Technical Associate I, Kanwisher Lab

MIT - Technical Associate I, Kanwisher Lab - Cambridge MA 02139

I’m hiring a full-time lab tech for two years starting May/June. Strong coding skills required, ML a plus. Our research on the human brain uses fMRI, ANNs, intracranial recording, and behavior. A great stepping stone to grad school. Apply here:

careers.peopleclick.com/careerscp/cl...

26.03.2025 15:09 — 👍 64 🔁 48 💬 5 📌 3

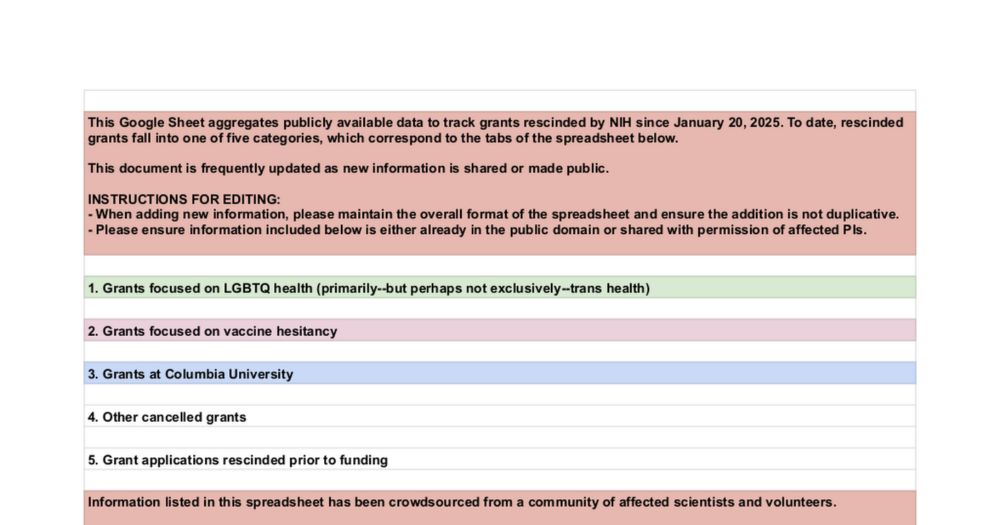

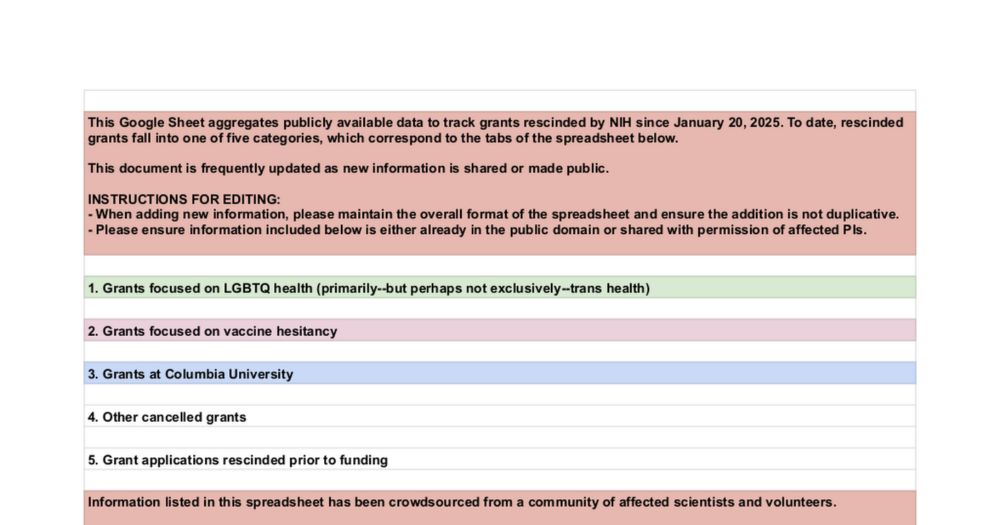

Rescinded NIH & NSF Grants

Substantial updates to the list of cancelled grants👇

- THANK YOU to all who have contributed. Crowdsourcing restores faith in humanity.

- It's still a work in progress. You'll see more updates shortly.

- There are multiple teams & efforts engaged in tracking & advocacy. More to come soon!

18.03.2025 04:24 — 👍 255 🔁 157 💬 14 📌 20

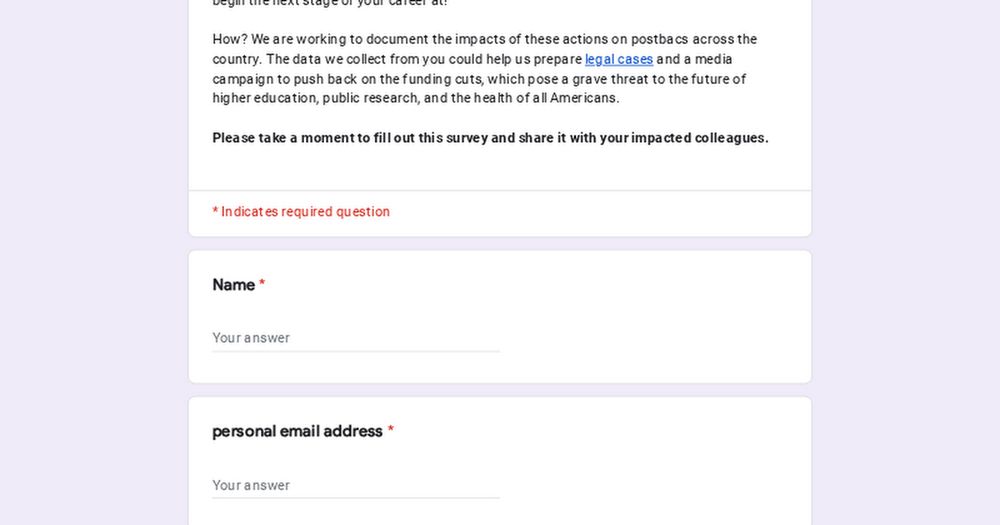

Grad Admission Impacts Survey

It is grad admissions season and many postbacs are feeling the chilling impacts of the Trump administration's recent executive orders freezing and slashing extramural research funding. Dozens of gradu...

As a result of Trump’s slashes to research funding, dozens of graduate programs have announced reductions and cancellations of graduate admissions slots.

If you are an impacted applicant, please fill out this survey: docs.google.com/forms/d/e/1F...

🧪🧠🧬🔬🥼👩🏼🔬🧑🔬

24.02.2025 03:51 — 👍 263 🔁 268 💬 8 📌 5

EvLab

Our research aims to understand how the language system works and how it fits into the broader landscape of the human mind and brain.

Our language neuroscience lab (evlab.mit.edu) is looking for a new lab manager/FT RA to start in the summer. Apply here: tinyurl.com/3r346k66 We'll start reviewing apps in early Mar. (Unfortunately, MIT does not sponsor visas for these positions, but OPT works.)

05.02.2025 14:43 — 👍 30 🔁 20 💬 0 📌 0

Hey Bsky friends on #neuroskyence! Very excited to share our @iclr-conf.bsky.social paper: TopoNets! High-performing vision and language models with brain-like topography! Expertly led by grad student Mayukh and Mainak! A brief thread...

30.01.2025 15:23 — 👍 56 🔁 18 💬 3 📌 3

✨i'm hiring a lab manager, with a start date of ~September 2025! to express interest, please complete this google form: forms.gle/GLyAbuD779Rz...

looking for someone to join our multi-disciplinary team, using OPM, EEG, iEEG and computational techniques to study speech and language processing! 🧠

13.12.2024 01:13 — 👍 103 🔁 64 💬 2 📌 3

Our paper "Relational visual representations underlie human social interaction recognition" led by @manasimalik.bsky.social is now out in Nature Communications

www.nature.com/articles/s41...

13.11.2023 15:54 — 👍 30 🔁 13 💬 1 📌 0

PhD student at Stanford Psychology 🧠💻 | Computational modeling of the visual cortex & NeuroAI | Johns Hopkins U ‘24

Cognitive neuroscience. Deep learning. PhD Student at Princeton Neuroscience with @cocoscilab.bsky.social and Cohen Lab.

neuromantic - ML and cognitive computational neuroscience - PhD student at Kietzmann Lab, Osnabrück University.

⛓️ https://init-self.com

Postdoc at Stanford | Developmental NeuroAI

Cognitive Science PhD student @ JHU

Cog comp neuro PhD at Johns Hopkins

🔗 http://kelseyhan-jhu.github.io

Cognitive computational neuroscience | Postdoc at Pitt advised by Marlene Behrmann; PhD at JHU advised by Mick Bonner

https://raj-magesh.org

Cognitive computational neuroscience, machine learning, psychophysics & consciousness.

Currently Professor at Freie Universität Berlin, also affiliated with the Bernstein Center for Computational Neuroscience.

Asst Professor Psychology & Data Science @ NYU | Working on brains & climate, separately | Author of Models of the Mind: How physics, engineering, and mathematics have shaped our understanding of the brain https://shorturl.at/g23c5 | Personal account (duh)

Assistant Professor of Cognitive Science at Johns Hopkins. My lab studies human vision using cognitive neuroscience and machine learning. bonnerlab.org

PhD student at MIT Brain and Cognitive Sciences, Tedlab. I study psycholinguistics. immigrant 🏳️🌈 he/him ex-STEM-phobic

website: mpoliak.notion.site

Researcher in Neuroscience & AI

CNRS, Ecole Normale Supérieure, PSL

currently detached to Meta

Studying speech using intracranial recordings and EEG. Opinions my own.

Cognitive Computational Neuroscientist in Training

Neuro + ML PhD @ CMU '29 | BS in Math and in CS @ MIT '23 & MEng '24

Cognitive Science Ph.D. Student @ Johns Hopkins in the GLINT lab, advised by Jennifer Hu

Johns Hopkins University Department of Cognitive Science

assoc prof, uc irvine cogsci & LPS: perception+metacognition+subjective experience, fMRI+models+AI

phil sci, education for all

2026: UCI-->UCL!

prez+co-founder, neuromatch.io

fellow, CIFAR brain mind consciousness

meganakpeters.org

she/her💖💜💙views mine

I'm a philosopher, psychologist and neuroscientist studying vision, mental imagery, consciousness and introspection. As S.S. Stevens said "there are numerous pitfalls in this business." https://www.subjectivitylab.org

Language processing in Brains vs Machines PhD student Georgia Tech

https://tahabinhuraib.github.io/