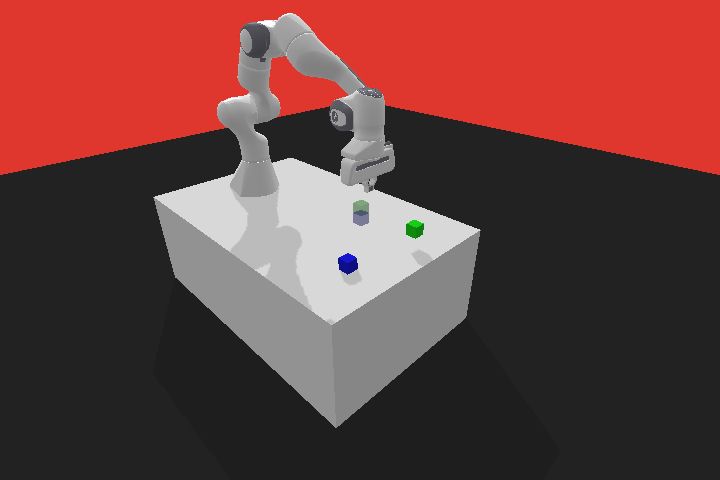

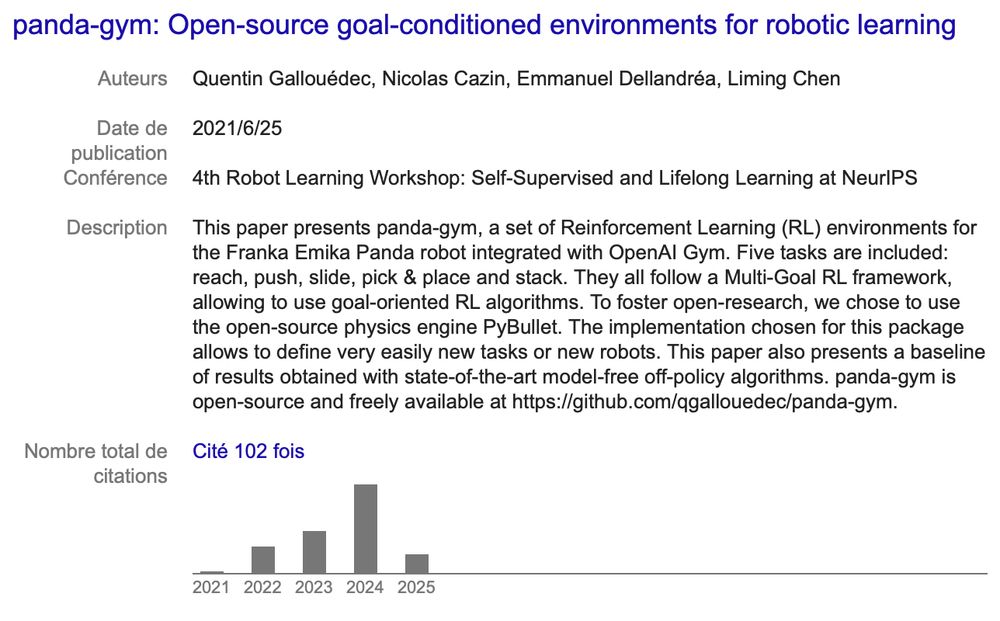

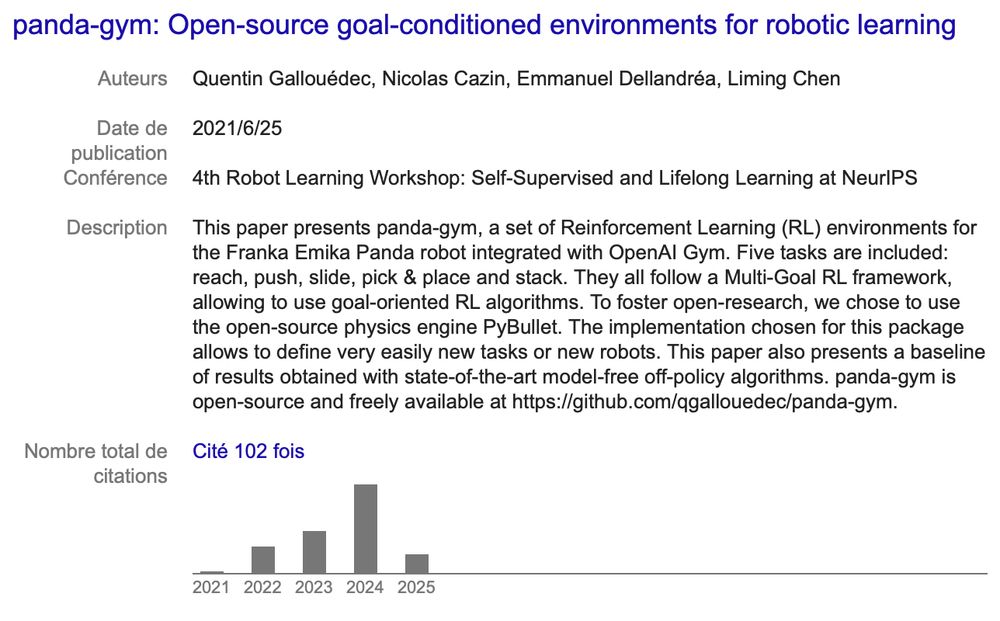

It started as a modest project to offer a free, open-source alternative to MuJoCo environments, and today panda-gym has been downloaded over 100k times and cited in over 100 papers. 🦾

02.05.2025 23:14 — 👍 7 🔁 1 💬 0 📌 0

@qgallouedec.hf.co

PhD - Research @hf.co 🤗 TRL maintainer

just pip install trl

26.04.2025 22:57 — 👍 4 🔁 0 💬 0 📌 0

How many of these 8 things did you know?

huggingface.co/blog/qgallou...

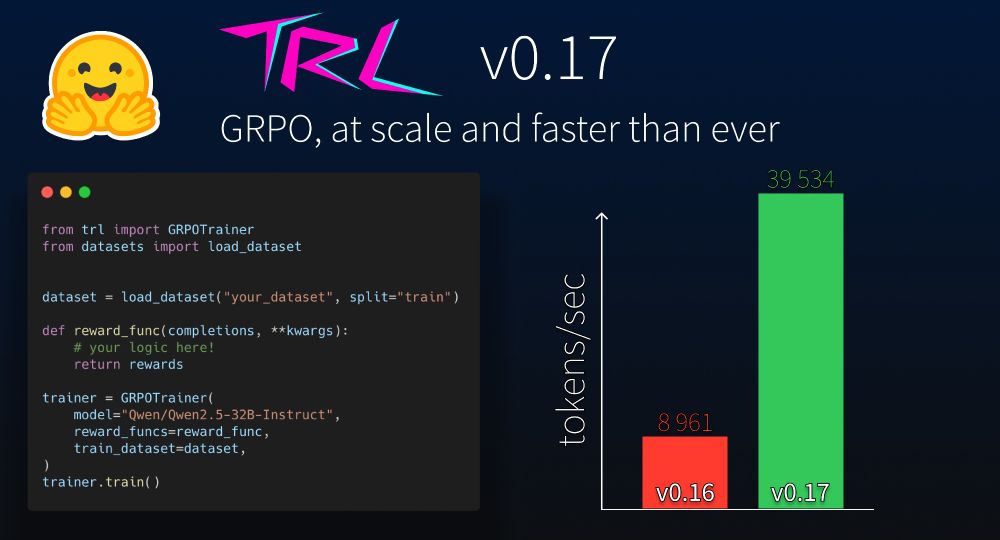

🚀 TRL 0.14 – Featuring GRPO! 🚀

TRL 0.14 brings *GRPO*, the RL algorithm behind 🐳 DeepSeek-R1.

⚡ Blazing fast generation with vLLM integration.

📉 Optimized training with DeepSpeed ZeRO 1/2/3.

The most impactful open-source project of today (dixit Vercel VP of AI)

=> huggingface.co/blog/open-r1

Last moments of closed-source AI 🪦 :

Hugging Face is openly reproducing the pipeline of 🐳 DeepSeek-R1. Open data, open training, open models, open collaboration.

🫵 Let's go!

github.com/huggingface/...

The algorithm behind DeepSeek's R1 model (aka GRPO) now lives in TRL main branch! Go and test it!

22.01.2025 15:07 — 👍 4 🔁 0 💬 0 📌 0
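For readers who want the gist of GRPO before opening the docs: the "group relative" part scores each completion against the other completions sampled for the same prompt. Below is a minimal sketch of that normalization only, in plain Python. The function name and the `eps` value are hypothetical, and this is not TRL's implementation, which may differ in details such as the standard deviation estimator.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-4):
    """Normalize rewards of completions sampled for the same prompt.

    Hypothetical helper for illustration; `eps` avoids division by zero
    when every completion in the group gets the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; an assumption of this sketch
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four completions for one prompt, two rewarded and two not.
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
```

Completions that beat the group average get a positive advantage, the others a negative one, so no separate value model is needed for the baseline.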

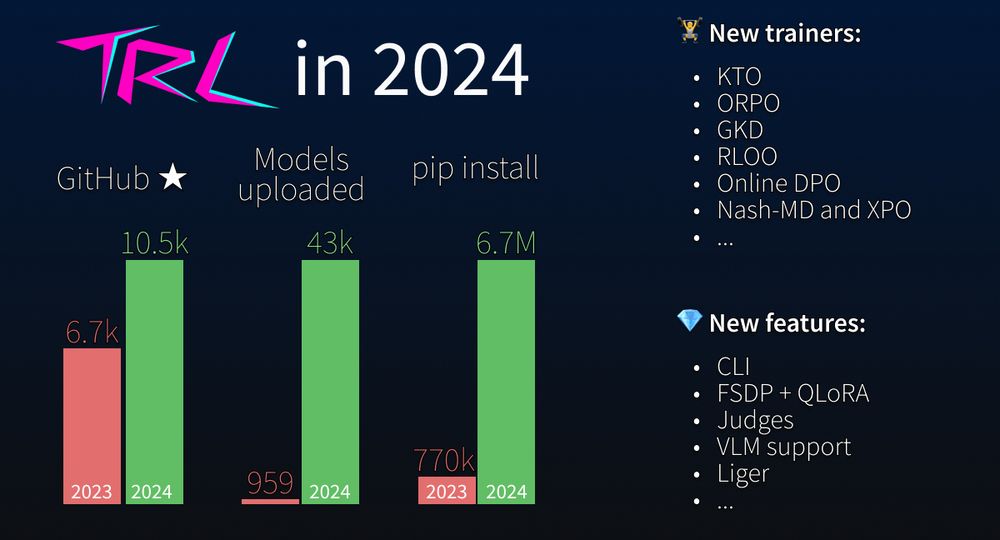

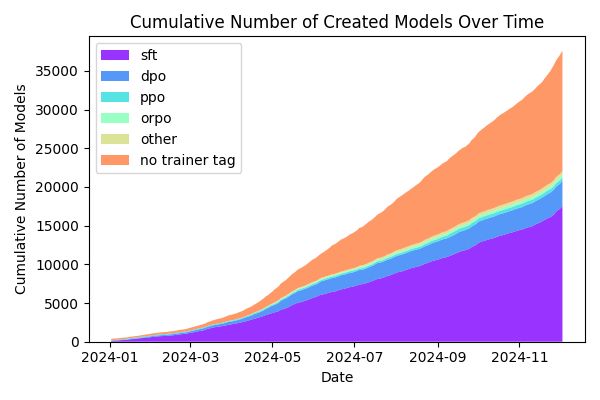

[Stonks] TRL is a Python library for training language models.

It has seen impressive growth this year. Lots of new features, an improved codebase, and this has translated into increased usage. You can count on us to do even more in 2025.

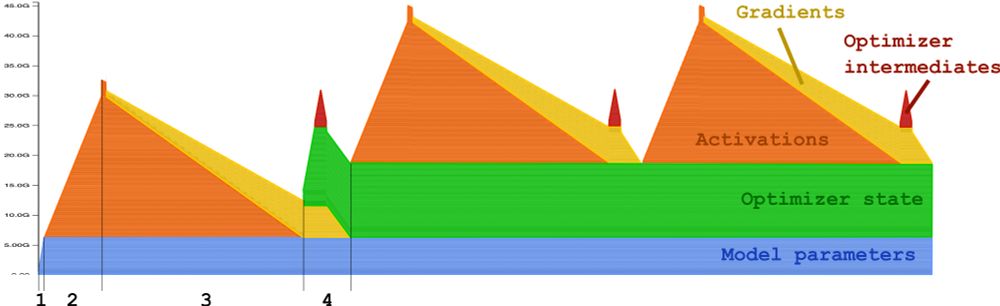

🎅 Santa Claus has delivered the ultimate guide to understanding OOM errors (link in comment)

24.12.2024 11:04 — 👍 16 🔁 5 💬 2 📌 0

Top 1 Python dev today. Third time since September 🫨

17.12.2024 18:32 — 👍 4 🔁 0 💬 0 📌 0

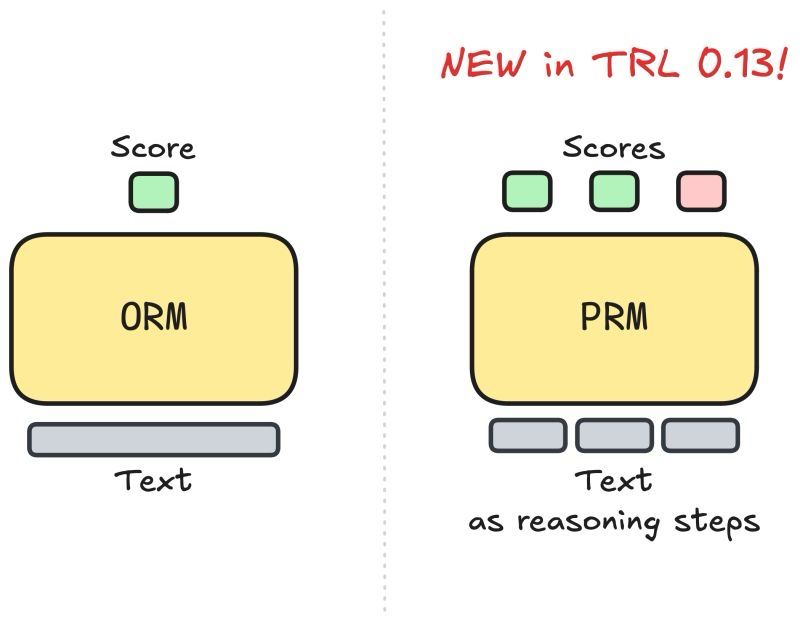

🚨 TRL 0.13 is out! 🤗

Featuring a Process-supervised Reward Model (PRM) Trainer 🏋️

PRMs empower LLMs to "think before answering"—a key feature behind OpenAI's o1 launch just two weeks ago. 🚀

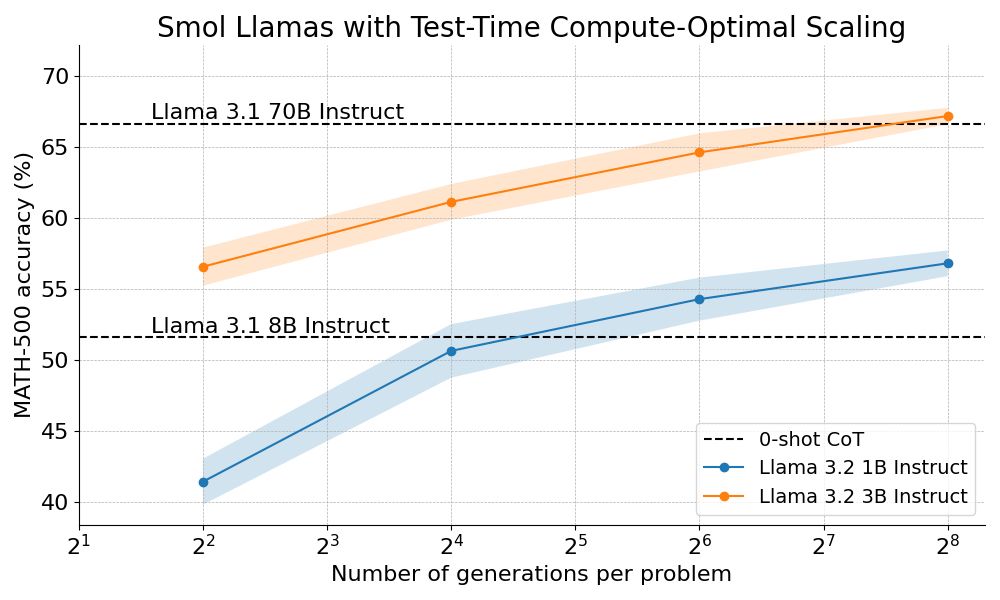

We outperform Llama 70B with Llama 3B on hard math by scaling test-time compute 🔥

How? By combining step-wise reward models with tree search algorithms :)

We're open sourcing the full recipe and sharing a detailed blog post 👇

The number of TRL models on the 🤗 Hub has risen x60 this year! 📈

How about doing the same next year?

We took those TRL notebooks from last week and made a page from them. So if you're upskilling on finetuning or aligning LLMs, and want examples from the community (like Maxime Labonne Philipp Schmid Sergio Paniego Blanco), check it out!

bsky.app/profile/benb...

>> huggingface.co/docs/trl/mai...

(don't mind the location) apply.workable.com/huggingface/...

27.11.2024 15:51 — 👍 1 🔁 0 💬 0 📌 0

Join us at Hugging Face as an intern if you want to contribute to amazing open-source projects and develop TRL, the best library for fine-tuning LLMs.

🧑‍💻 Full remote

🤯 Exciting subjects

🌍 Anywhere in the world

🤸🏻 Flexible working hours

Link to apply in comment 👇

We’re looking for an intern to join our SmolLM team! If you’re excited about training LLMs and building high-quality datasets, we’d love to hear from you. 🤗

US: apply.workable.com/huggingface/...

EMEA: apply.workable.com/huggingface/...

I'd love to! We have a lot of room for improvement here!

25.11.2024 10:43 — 👍 3 🔁 0 💬 0 📌 0

These tutorials provide a comprehensive but concise roadmap through TRL across the main fine-tuning and alignment classes.

🤔 Let me know if you would like a dedicated course on TRL basics.

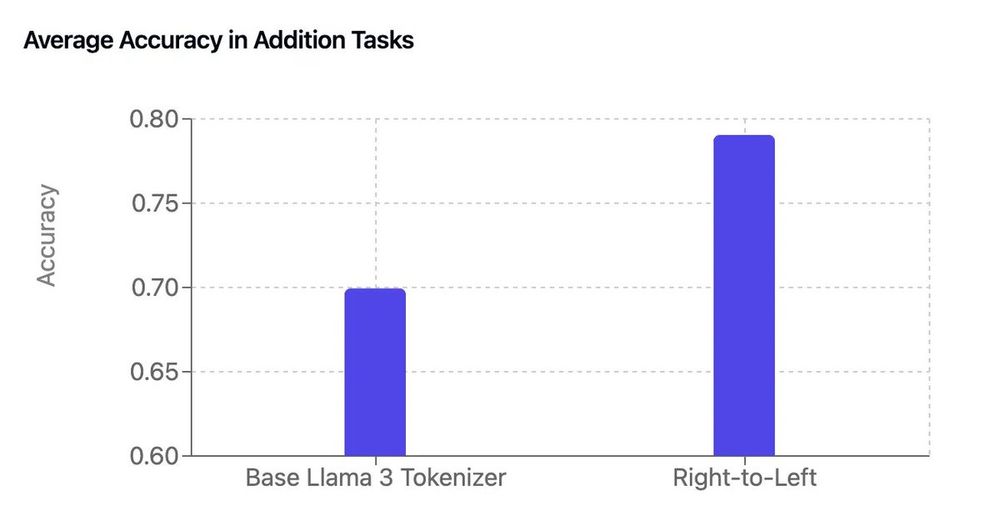

It's Sunday morning, so I'm taking a minute for a nerdy thread (on math, tokenizers and LLMs) about the work of our intern Garreth

By adding a few lines of code to the base Llama 3 tokenizer, he got a free boost in arithmetic performance 😮

[thread]

How can you avoid the temptation to use a subprocess for sub-commands?

This blog post from @muellerzr.bsky.social saved my day.

muellerzr.github.io/til/argparse...
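One common way to dispatch sub-commands in-process is `argparse` subparsers, with a handler function attached to each one. The sketch below shows that pattern; it is not necessarily the approach taken in the linked post, and the tool name, commands, and handlers are all hypothetical.

```python
import argparse


def cmd_train(args):
    # Hypothetical handler: a real tool would launch training here.
    return f"training with lr={args.lr}"


def cmd_eval(args):
    # Hypothetical handler: a real tool would run evaluation here.
    return f"evaluating {args.checkpoint}"


def build_parser():
    parser = argparse.ArgumentParser(prog="mytool")
    subparsers = parser.add_subparsers(dest="command", required=True)

    train = subparsers.add_parser("train")
    train.add_argument("--lr", type=float, default=1e-4)
    train.set_defaults(func=cmd_train)  # attach the handler to the sub-command

    evaluate = subparsers.add_parser("eval")
    evaluate.add_argument("checkpoint")
    evaluate.set_defaults(func=cmd_eval)
    return parser


def main(argv=None):
    args = build_parser().parse_args(argv)
    return args.func(args)  # plain function call, no subprocess involved
```

Calling `main(["train", "--lr", "0.01"])` runs the `train` handler directly in the current process.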

Finetune SmolLM2 with TRL!

21.11.2024 11:32 — 👍 12 🔁 0 💬 0 📌 1

When XetHub joined Hugging Face, we brainstormed how to share our tech with the community.

The magic? Versioning chunks, not files, giving rise to:

🧠 Smarter storage

⏩ Faster uploads

🚀 Efficient downloads

Curious? Read the blog and let us know how it could help your workflows!
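To make "versioning chunks, not files" concrete, here is a toy content-addressed store. It is a sketch under simplifying assumptions, not a description of the Xet implementation: it cuts fixed-size chunks for brevity, and the `ChunkStore` class and its methods are hypothetical names.

```python
import hashlib


class ChunkStore:
    """Toy store that keeps each distinct chunk once, keyed by its hash."""

    def __init__(self, chunk_size=4):
        self.chunk_size = chunk_size
        self.chunks = {}  # sha256 hex digest -> chunk bytes

    def put(self, data: bytes):
        """Store data; return (manifest, number of newly stored chunks)."""
        manifest, new = [], 0
        for i in range(0, len(data), self.chunk_size):
            chunk = data[i:i + self.chunk_size]
            key = hashlib.sha256(chunk).hexdigest()
            if key not in self.chunks:  # only unseen chunks cost storage
                self.chunks[key] = chunk
                new += 1
            manifest.append(key)
        return manifest, new

    def get(self, manifest):
        """Rebuild a file version from its list of chunk hashes."""
        return b"".join(self.chunks[key] for key in manifest)


store = ChunkStore(chunk_size=4)
v1, new_v1 = store.put(b"aaaabbbbcccc")  # first version: every chunk is new
v2, new_v2 = store.put(b"aaaabbbbdddd")  # second version: only the tail changed
```

The second version stores a single new chunk and reuses the rest, which is where the smaller uploads and downloads come from.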