Common pitfalls (with examples) when building AI applications, both from public case studies and my personal experience.

huyenchip.com/2025/01/16/a...

Would love to hear about the pitfalls you've seen in your own experience!

@ahkval.bsky.social

I just wanna know and experience stuff. Come say hi 🦧 | Exploring LLM agents rn

Has anyone written a history of standard evaluation sets? How were they created, and where does their content ultimately come from?

18.12.2024 22:18 — 👍 10 🔁 2 💬 2 📌 1

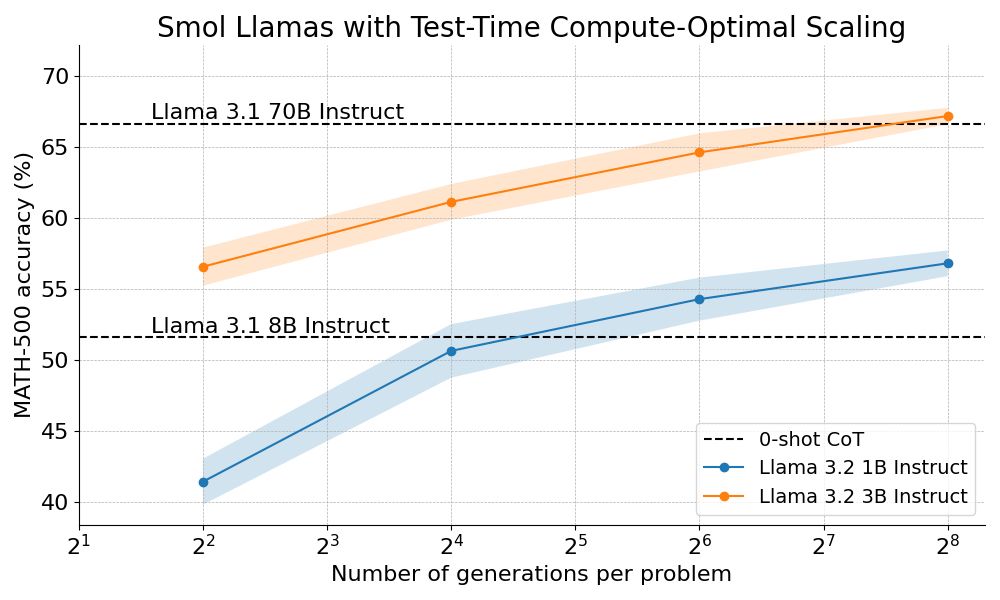

We outperform Llama 70B with Llama 3B on hard math by scaling test-time compute 🔥

How? By combining step-wise reward models with tree search algorithms :)

We're open sourcing the full recipe and sharing a detailed blog post 👇
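The post doesn't include code, but the core loop can be sketched as step-level beam search: sample candidate next steps, score each partial solution with a process reward model (PRM), and keep only the best few. This is a toy sketch, not the released recipe: `propose`, `prm`, and `done` are hypothetical stubs standing in for the small model and the reward model, and the "math problem" is just reaching a sum of 10.

```python
from typing import Callable, List, Tuple

Solution = List[str]  # a partial solution = the reasoning steps so far

def beam_search(
    propose_steps: Callable[[Solution], List[str]],  # stand-in for the small LLM
    score: Callable[[Solution], float],               # stand-in for the PRM
    is_done: Callable[[Solution], bool],
    beam_width: int = 2,
    max_depth: int = 4,
) -> Solution:
    """Step-level beam search: expand each beam, score partial solutions, keep the top few."""
    beams: List[Solution] = [[]]
    for _ in range(max_depth):
        candidates: List[Tuple[float, Solution]] = []
        for partial in beams:
            if is_done(partial):
                # Finished solutions stay in the pool so they can still win.
                candidates.append((score(partial), partial))
                continue
            for step in propose_steps(partial):
                extended = partial + [step]
                candidates.append((score(extended), extended))
        # Sort by PRM score only; ties keep their original order.
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = [sol for _, sol in candidates[:beam_width]]
        if all(is_done(b) for b in beams):
            break
    # Note: if max_depth runs out, the best beam may be unfinished.
    return beams[0]

# --- toy usage: "solve" = reach exactly 10 by adding 2, 3 or 5 ---
def propose(partial: Solution) -> List[str]:
    return ["+2", "+3", "+5"]

def total(partial: Solution) -> int:
    return sum(int(s) for s in partial)

def prm(partial: Solution) -> float:
    # Toy reward: closer to 10 is better; overshooting is penalized extra.
    t = total(partial)
    return -abs(10 - t) - (5 if t > 10 else 0)

def done(partial: Solution) -> bool:
    return total(partial) >= 10

best = beam_search(propose, prm, done)
print(best, total(best))  # → ['+5', '+5'] 10
```

The point of scoring every intermediate step (rather than only the final answer) is that weak branches get pruned early, so the extra test-time compute is spent on the most promising lines of reasoning.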

I'd just like to interject for a moment. What you're referring to as an LLM is, in fact, an LLM/language generation system, or, as I've recently taken to calling it, an LLM + LGS. An LLM is not a language generation system unto itself, but rather just one component of a fully functioning language gen

07.12.2024 06:01 — 👍 27 🔁 2 💬 3 📌 3

same

28.11.2024 11:17 — 👍 0 🔁 1 💬 0 📌 0

lmao

27.11.2024 23:07 — 👍 0 🔁 0 💬 0 📌 0

Yep basically, maybe even one step more abstract.

E.g., with this picture, I can imagine cases where I might want to search for works with a similar:

- oil painting/moody style

- ethereal/cosmic/angelic dreams style

- mixed media mashups

How do I select or change the embedding so the query reflects those aspects?

By that I mean: in the same image, there can be different aspects that draw me in, or that I want to find more instances of or commission. With most embedding-based search, it's always the same embedding no matter the intent or aspect of focus.

Guess that's why text input exists: to be able to steer.

I would. I really like the idea of being able to find artists based on a vibe I want or a mood board. A lot of times I give up because there's no good way to express what I want.

The crux, I think, would be: can this let me change how it queries the embedding space based on which features/vibes of the images I want?
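One common way to get that kind of steering, assuming a joint image/text embedding space (CLIP-style), is to blend the image's embedding with a text embedding of the aspect you care about and search with the blended vector. A minimal sketch: the 3-d vectors are made up for illustration, and `steered_query`, `search`, and the `alpha` weight are hypothetical names, not any particular library's API.

```python
import math
from typing import Dict, List

Vector = List[float]

def normalize(v: Vector) -> Vector:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cosine(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))

def steered_query(image_emb: Vector, aspect_emb: Vector, alpha: float = 0.5) -> Vector:
    """Blend image and aspect-text embeddings. alpha=0 -> image only, alpha=1 -> text only."""
    img, txt = normalize(image_emb), normalize(aspect_emb)
    return normalize([(1 - alpha) * i + alpha * t for i, t in zip(img, txt)])

def search(query: Vector, index: Dict[str, Vector], k: int = 3) -> List[str]:
    """Rank indexed works by cosine similarity to the query."""
    return sorted(index, key=lambda name: cosine(query, index[name]), reverse=True)[:k]

# --- toy usage: made-up vectors, axes = [moody oil, cosmic/ethereal, mixed media] ---
image = [0.6, 0.6, 0.5]  # a picture that mixes all three
index = {
    "artist_oil": [1.0, 0.1, 0.0],
    "artist_cosmic": [0.1, 1.0, 0.0],
    "artist_collage": [0.0, 0.1, 1.0],
}
oil_text = [1.0, 0.0, 0.0]     # pretend embedding of "moody oil painting"
cosmic_text = [0.0, 1.0, 0.0]  # pretend embedding of "ethereal cosmic dream"

print(search(steered_query(image, oil_text, alpha=0.7), index, k=1))     # → ['artist_oil']
print(search(steered_query(image, cosmic_text, alpha=0.7), index, k=1))  # → ['artist_cosmic']
```

Same image, different aspect text, different nearest neighbors: that's the "change how it queries" behavior. With this toy image alone, the oil and cosmic artists come out roughly tied, which is exactly the ambiguity the text steer resolves.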

One problem I’m consistently running into while building agents is API responses. A lot of web APIs dump giant payloads meant to be parsed as needed and otherwise ignored.

But for LLMs, it all goes into the context (ctx_len). Some payloads of 5k+ tokens make models glitch out and start outputting gibberish or switching languages.
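A minimal mitigation sketch: project the API response down to the fields the agent actually needs, and truncate long strings, before any of it enters the context. The dotted-path whitelist, `prune_payload`, and the `max_chars` limit are hypothetical choices for illustration; which fields to keep is task-specific.

```python
import json
from typing import Any, Dict, List

def get_path(obj: Any, path: str) -> Any:
    """Follow a dotted path like 'user.name' or 'items.0.title'; None if absent."""
    for key in path.split("."):
        if isinstance(obj, dict):
            obj = obj.get(key)
        elif isinstance(obj, list) and key.isdigit() and int(key) < len(obj):
            obj = obj[int(key)]
        else:
            return None
    return obj

def prune_payload(payload: Dict[str, Any], keep: List[str], max_chars: int = 200) -> str:
    """Keep only whitelisted fields, truncating long strings, before the LLM sees them."""
    slim: Dict[str, Any] = {}
    for path in keep:
        value = get_path(payload, path)
        if isinstance(value, str) and len(value) > max_chars:
            value = value[:max_chars] + "…"
        slim[path] = value
    return json.dumps(slim, ensure_ascii=False)

# --- toy usage: a bloated response where the agent only needs a few fields ---
response = {
    "id": 42,
    "user": {"name": "ada", "bio": "x" * 5000, "avatars": {"small": "s.png", "large": "l.png"}},
    "items": [{"id": 1, "title": "first"}, {"id": 2, "title": "second"}],
    "tracking": {"session": "abc", "experiments": list(range(1000))},
}
slim_json = prune_payload(response, ["id", "user.name", "items.0.title", "user.bio"], max_chars=10)
print(slim_json)
# → {"id": 42, "user.name": "ada", "items.0.title": "first", "user.bio": "xxxxxxxxxx…"}
```

A whitelist is blunt, but it keeps token counts predictable; an alternative is to let the agent request extra fields by path when it needs them.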

agreed

My reason for the small-model route: it takes care of any JSON oddities without constraining the output diversity/quality of the big model, while also getting parseable JSON more reliably than just letting the model freestyle it.

1) Plan as usual with the big, intelligent model.

2) Format the plan as JSON using guided generation on a small 1B model finetuned to put outputs into JSON, so not nearly as much reasoning is required.
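The two steps above can be sketched as a pipeline where the small model's output is parsed and validated before anything downstream uses it. Everything here is hypothetical: `big_model_plan` and `flaky_small_model` are stubs returning canned text, the `goal`/`steps` schema is made up, and the validate-and-retry loop is a safety net added for illustration (with real guided generation, malformed JSON should be rare).

```python
import json
from typing import Any, Callable, Dict, Optional

REQUIRED_KEYS = {"goal", "steps"}  # hypothetical plan schema

def big_model_plan(task: str) -> str:
    # Hypothetical stub for step 1: the big model plans in free-form prose.
    return f"To {task}, first read both papers, then contrast their methods."

def validate(raw: str) -> Dict[str, Any]:
    """Parse the small model's output and check it matches the expected shape."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) if malformed
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    if not isinstance(data["steps"], list):
        raise ValueError("'steps' must be a list")
    return data

def format_plan(
    plan_text: str,
    formatter: Callable[[str], str],  # step 2: the small JSON-formatting model
    max_attempts: int = 3,
) -> Dict[str, Any]:
    """Ask the formatter for JSON, validating and retrying on bad output."""
    last_error: Optional[Exception] = None
    for _ in range(max_attempts):
        try:
            return validate(formatter(plan_text))
        except ValueError as err:
            last_error = err
    raise RuntimeError(f"no valid JSON after {max_attempts} attempts") from last_error

# --- toy usage ---
plan = big_model_plan("compare two papers")
attempts = {"n": 0}

def flaky_small_model(text: str) -> str:
    # Hypothetical stub: malformed JSON on the first call, valid JSON afterwards.
    attempts["n"] += 1
    if attempts["n"] == 1:
        return '{"goal": "compare two papers", "steps": ['
    return json.dumps({"goal": "compare two papers", "steps": ["read both", "contrast methods"]})

result = format_plan(plan, flaky_small_model)
print(result["steps"], attempts["n"])  # → ['read both', 'contrast methods'] 2
```

Keeping the big model's output as free-form prose means the schema never constrains its reasoning; only the cheap second call has to satisfy the parser.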

New plugin for sqlite-utils that lets you ask questions of a SQLite database (or even of one or more CSV/TSV/JSON files) in human language and have an LLM write a SQL query for you to get the answer. simonwillison.net/2024/Nov/25/...
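The general pattern behind this kind of tool can be sketched with the standard-library `sqlite3` module: read the schema, put it in a prompt next to the question, have a model write the SQL, then run it. This is not the plugin's code. `ask_llm_for_sql` is a hypothetical stub that returns a canned query, and switching on SQLite's `PRAGMA query_only` before running model-written SQL is a precaution added here.

```python
import sqlite3
from typing import List, Tuple

def schema_text(conn: sqlite3.Connection) -> str:
    """Collect CREATE TABLE statements so the model knows table and column names."""
    rows = conn.execute("SELECT sql FROM sqlite_master WHERE type = 'table'").fetchall()
    return "\n".join(r[0] for r in rows)

def build_prompt(schema: str, question: str) -> str:
    return (
        "Given this SQLite schema:\n"
        f"{schema}\n\n"
        f"Write one SQLite SELECT query that answers: {question}\n"
        "Return only the SQL."
    )

def ask_llm_for_sql(prompt: str) -> str:
    # Hypothetical stub: a real version would send `prompt` to a model.
    return "SELECT country, COUNT(*) AS n FROM users GROUP BY country ORDER BY n DESC LIMIT 1"

def answer(conn: sqlite3.Connection, question: str) -> List[Tuple]:
    sql = ask_llm_for_sql(build_prompt(schema_text(conn), question))
    conn.execute("PRAGMA query_only = ON")  # refuse writes from model-generated SQL
    return conn.execute(sql).fetchall()

# --- toy usage ---
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, country TEXT)")
conn.executemany(
    "INSERT INTO users (name, country) VALUES (?, ?)",
    [("ada", "UK"), ("grace", "US"), ("linus", "FI"), ("ken", "US")],
)
conn.commit()
print(answer(conn, "Which country has the most users?"))  # → [('US', 2)]
```

Sending the schema is what lets the model use real table and column names; the read-only guard matters because the SQL comes from a model rather than from you.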

25.11.2024 01:34 — 👍 129 🔁 15 💬 7 📌 3

Same, just got here. Hopping through following lists to rebuild my old network; surprisingly, more people than I thought are already here.

I did change my pfp and overall vibes, though 🤐