5/N Each of the techniques mentioned above has its own pros and cons. The processor in your system (a phone, a laptop, etc.) uses a weighted mix of all of them.

It baffles me to think about all of this. 🤗

03.03.2025 18:05 — 👍 0 🔁 0 💬 0 📌 0

4/N Multi Threading in Single Core

A single core can hold multiple register blocks (context blocks), one per thread, each tracking its own instruction stream. This way, if one thread stalls, the core quickly switches to another.
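A rough software analogy, not the hardware mechanism itself: below, OS threads stand in for hardware contexts and `time.sleep` stands in for a stall. The names `stalled_task` and `run_interleaved` are made up for this sketch.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def stalled_task(task_id, stall_seconds=0.2):
    """Pretend to stall (e.g. waiting on slow memory or I/O), then finish."""
    time.sleep(stall_seconds)  # while this thread waits, another one can run
    return task_id


def run_interleaved(n_tasks=4, stall_seconds=0.2):
    """Run n_tasks stalling tasks on threads and return (results, elapsed seconds)."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=n_tasks) as pool:
        results = list(pool.map(lambda i: stalled_task(i, stall_seconds), range(n_tasks)))
    return results, time.perf_counter() - start
```

Because the waits overlap, four tasks that each stall for 0.2 s should finish in roughly the time of one stall rather than the 0.8 s a strictly serial run would need.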

03.03.2025 18:05 — 👍 0 🔁 0 💬 1 📌 0

3/N SIMD paradigm

In a single core, if we have duplicate ALUs, we can operate on a bunch of data in a single clock tick. The catch? Every ALU must perform the same operation.

Single Instruction, Multiple Data
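A toy model of the idea in plain Python, assuming a made-up helper `simd_apply` and a configurable number of `lanes` (one lane per duplicated ALU). Real SIMD happens in hardware vector instructions; this only counts how many "ticks" the same work would take.

```python
import operator


def simd_apply(op, a, b, lanes=4):
    """Apply the same op to up to `lanes` element pairs per simulated clock tick."""
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    out = []
    ticks = 0
    for start in range(0, len(a), lanes):
        # One "instruction": every lane runs the same op on its own data.
        chunk_a = a[start:start + lanes]
        chunk_b = b[start:start + lanes]
        out.extend(op(x, y) for x, y in zip(chunk_a, chunk_b))
        ticks += 1
    return out, ticks
```

With 8 elements, `simd_apply(operator.add, a, b, lanes=4)` takes 2 simulated ticks, while `lanes=1` (a single ALU) takes 8.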

03.03.2025 18:05 — 👍 0 🔁 0 💬 1 📌 0

2/N Multi Core Processor:

A single processor core consists of a control unit, an arithmetic unit, and some registers. How about we duplicate this block several times? This is the multi-core architecture. As a programmer, you need to explicitly state which code runs where.
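A minimal sketch of "explicitly stating which code runs where", assuming the hypothetical helpers `split_into_chunks` and `parallel_sum`: the data is split by hand and each chunk is handed to a separate worker process, which the OS is free to schedule on a different core.

```python
import concurrent.futures as cf


def split_into_chunks(data, n_chunks):
    """Split data into at most n_chunks contiguous slices of near-equal size."""
    size = max(1, -(-len(data) // n_chunks))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_sum(data, workers=4, executor_cls=cf.ProcessPoolExecutor):
    """Sum each chunk in its own worker, then combine the partial sums."""
    chunks = split_into_chunks(data, workers)
    with executor_cls(max_workers=workers) as pool:
        # The programmer decides the split; each worker sums one chunk.
        partial_sums = list(pool.map(sum, chunks))
    return sum(partial_sums)
```

When using the default process pool on platforms that start workers by spawning, call `parallel_sum` from under an `if __name__ == "__main__":` guard. Passing `executor_cls=cf.ThreadPoolExecutor` is handy for quick experiments.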

03.03.2025 18:05 — 👍 0 🔁 0 💬 1 📌 0

1/N Superscalar processors:

Your program is a list of instructions. This list almost always contains independent instructions. A superscalar processor identifies them and executes them separately in the same clock tick.
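A simplified sketch of how independent instructions could be grouped, assuming a made-up `Instr` type and a greedy in-order `schedule` function. Real superscalar hardware is far more sophisticated (out-of-order execution, register renaming); this only shows the dependency check.

```python
from typing import NamedTuple


class Instr(NamedTuple):
    dest: str    # register written by the instruction
    srcs: tuple  # registers read by the instruction


def schedule(program, width=2):
    """Greedily pack consecutive independent instructions into one cycle."""
    cycles = []
    current = []
    for ins in program:
        written = {i.dest for i in current}
        read = {s for i in current for s in i.srcs}
        depends = (
            any(s in written for s in ins.srcs)  # read-after-write
            or ins.dest in written               # write-after-write
            or ins.dest in read                  # write-after-read
        )
        if current and (depends or len(current) == width):
            cycles.append(current)
            current = []
        current.append(ins)
    if current:
        cycles.append(current)
    return cycles


program = [
    Instr("a", ("x", "y")),  # a = x + y
    Instr("b", ("z", "w")),  # b = z + w  (independent of a)
    Instr("c", ("a", "b")),  # c = a * b  (needs both a and b)
    Instr("d", ("x", "z")),  # d = x - z  (independent of c)
]
```

With `width=2`, the four instructions fit into two cycles (`a` with `b`, then `c` with `d`); with `width=1` they take four.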

03.03.2025 18:05 — 👍 0 🔁 0 💬 1 📌 0

Some pointers on parallel computing:

A small thread 🧵👇

03.03.2025 18:05 — 👍 0 🔁 0 💬 1 📌 0

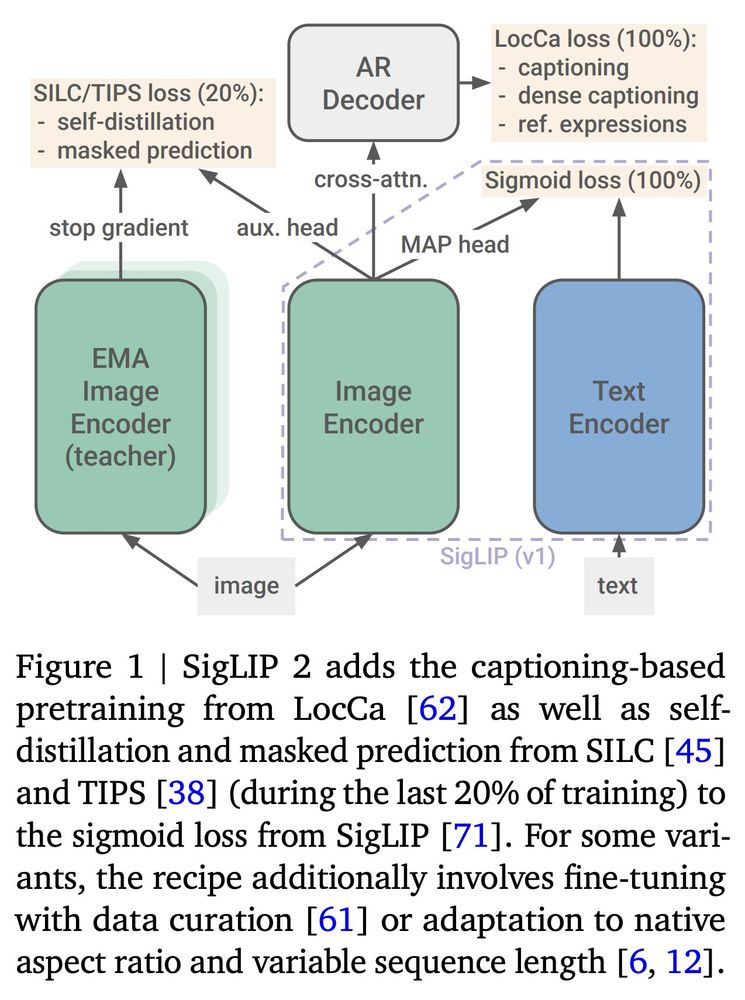

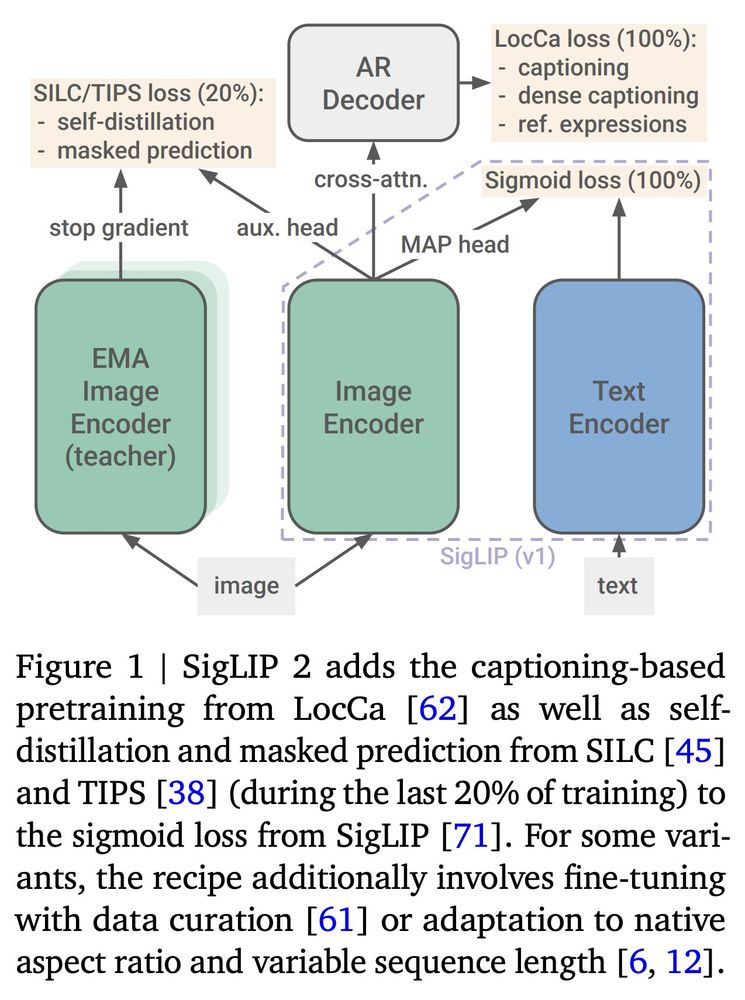

📢2⃣ Yesterday we released SigLIP 2!

TL;DR: Improved high-level semantics, localization, dense features, and multilingual capabilities via drop-in replacement for v1.

Bonus: Variants supporting native aspect ratio and variable sequence length.

A thread with interesting resources👇

22.02.2025 15:34 — 👍 12 🔁 1 💬 1 📌 0

🚀 Build a Qwen 2.5 VL API endpoint with Hugging Face spaces and Docker!

A Blog post by Aritra Roy Gosthipaty on Hugging Face

Build a Qwen 2.5 VL API endpoint with Hugging Face spaces and Docker! by @arig23498.bsky.social

Build a proof-of-concept API, hosting Qwen2.5-VL-7B-Instruct on Hugging Face Spaces using Docker.

huggingface.co/blog/ariG234...

29.01.2025 14:00 — 👍 5 🔁 1 💬 0 📌 0

Models - Hugging Face

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

I forgot to mention that you can use the same code to access any `warm` model on the Hub.

Here is a list of all the `warm` models: huggingface.co/models?infer...

Happy vibe checking 😇

[N/N]

03.12.2024 06:41 — 👍 2 🔁 0 💬 0 📌 0

But today was my lucky day. I noticed that the model was already loaded on the Serverless Inference API and was ready to be used.

No more spinning up my GPUs and stress testing them (happy GPU noises)

[3/N]

03.12.2024 06:41 — 👍 0 🔁 0 💬 1 📌 0

My usual workflow is to visit the Hugging Face Hub model card (in this case, hf.co/Qwen/QwQ-32B-Preview) and copy the working code sample.

I am sure this is how most of you work with a new model as well (if not, I would love to hear from you)

[2/N]

03.12.2024 06:41 — 👍 0 🔁 0 💬 1 📌 0

The Qwen team is doing so much for the community by keeping research open and constructive.

They listen to the community and put effort into building competitive models.

I was intrigued by their latest `Qwen/QwQ-32B-Preview` model and wanted to play with it.

[1/N]

03.12.2024 06:41 — 👍 10 🔁 1 💬 1 📌 0

I've been exploring the latest Llama 3.2 releases and working on a couple of projects you may find interesting:

1️⃣ Understanding tool calling with Llama 3.2 🔧

2️⃣ Using Text Generation Inference (TGI) with Llama models 🦙

(links in the next post)

29.11.2024 10:10 — 👍 12 🔁 3 💬 1 📌 0

I like the evaluation part. Are there any evals you particularly like?

26.11.2024 11:18 — 👍 0 🔁 0 💬 1 📌 0

What is THE pain point in training Vision Language Models according to you?

I will go first, the data pipeline.

26.11.2024 10:52 — 👍 1 🔁 0 💬 3 📌 0

🙋♂️ ariG23498

23.11.2024 15:38 — 👍 2 🔁 0 💬 0 📌 0

To the video generation enthusiasts: Mochi 1 Preview is now supported in `diffusers`.

15.11.2024 10:19 — 👍 6 🔁 0 💬 0 📌 0

awesome, thanks a lot for sharing 🙌

13.11.2024 16:37 — 👍 1 🔁 1 💬 0 📌 0

`bitsandbytes` makes it really easy to quantize models

Note: MB should be GB in the diagram.

13.11.2024 12:03 — 👍 7 🔁 1 💬 1 📌 0

Read about the Qwen2.5-Coder Series

huggingface.co/blog/ariG234...

12.11.2024 07:08 — 👍 1 🔁 0 💬 0 📌 0

Training ranking models for better retrieval from stores is GOD level thinking.

08.11.2024 05:36 — 👍 0 🔁 0 💬 0 📌 0

I am diving head first into Vision Language Models. Comment below with the papers I should definitely read.

07.11.2024 05:52 — 👍 2 🔁 0 💬 0 📌 0

Hugging Face + PyCharm

Welcome the @huggingface.bsky.social integration in PyCharm. From instant model cards to navigating the local cache, working with Hugging Face models becomes a lot easier with PyCharm.

Bonus: Claim a 3-month PyCharm subscription using PyCharm4HF

Blog Post: huggingface.co/blog/pycharm...

06.11.2024 11:25 — 👍 0 🔁 0 💬 0 📌 0
