yes, it does raise questions (and I don't have an answer yet). but I am not sure whether the practical setting falls within the smooth case either (if smooth=Lipschitz smooth; and even if smooth=differentiable, there are non-differentiable elements in the architecture, like RMSNorm)

05.02.2025 15:15 — 👍 0 🔁 0 💬 0 📌 0

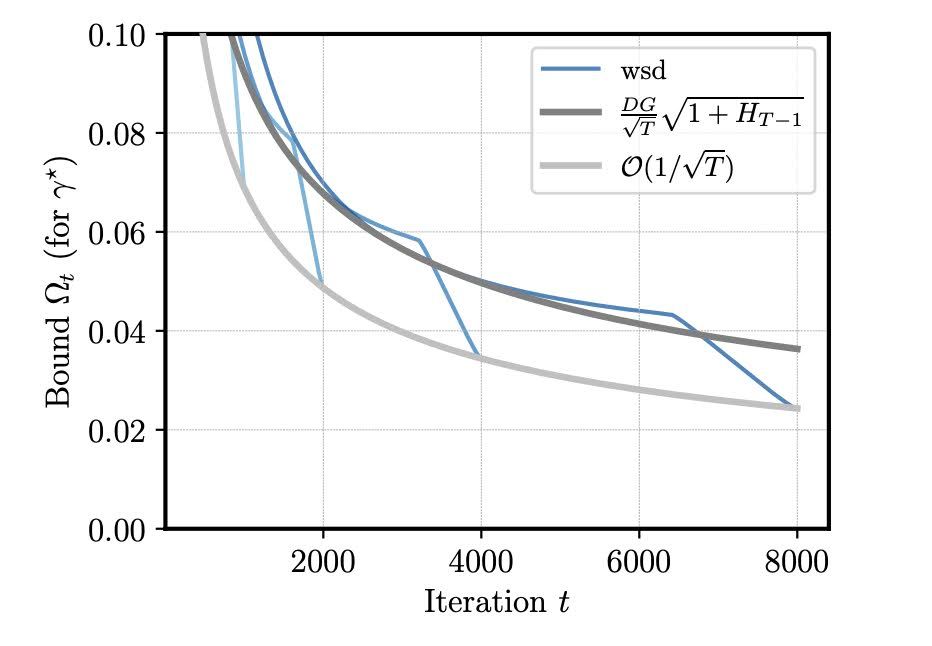

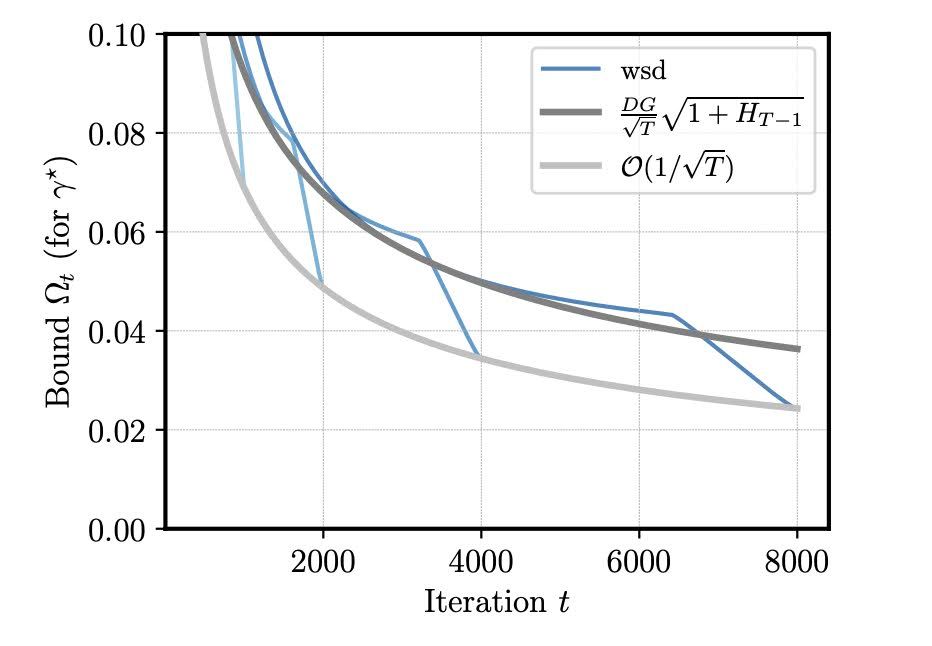

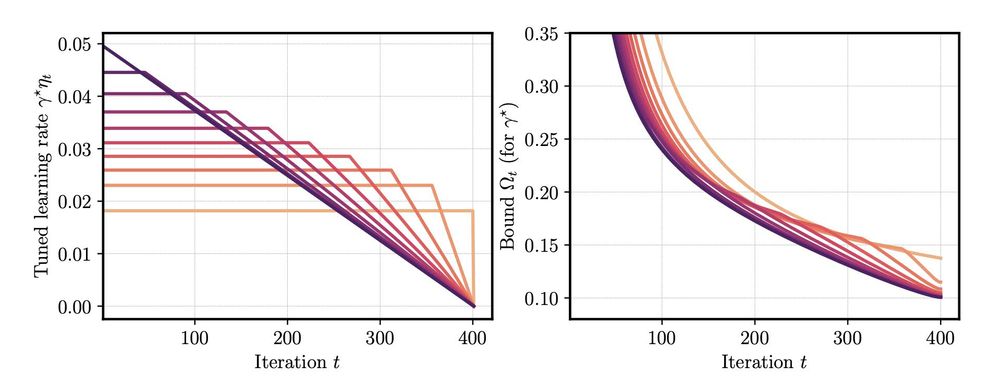

Bonus: this provides a provable explanation for the benefit of cooldown: if we plug the wsd schedule into the bound, a log-term (H_T+1) vanishes compared to constant LR (dark grey).

05.02.2025 10:13 — 👍 1 🔁 0 💬 1 📌 0

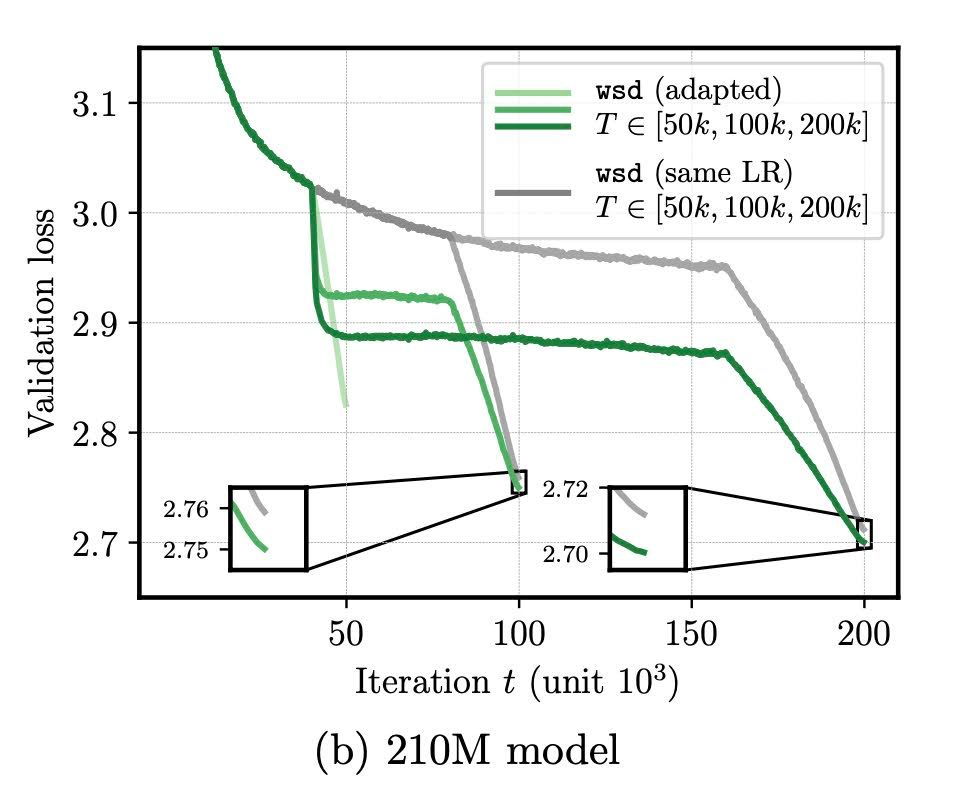

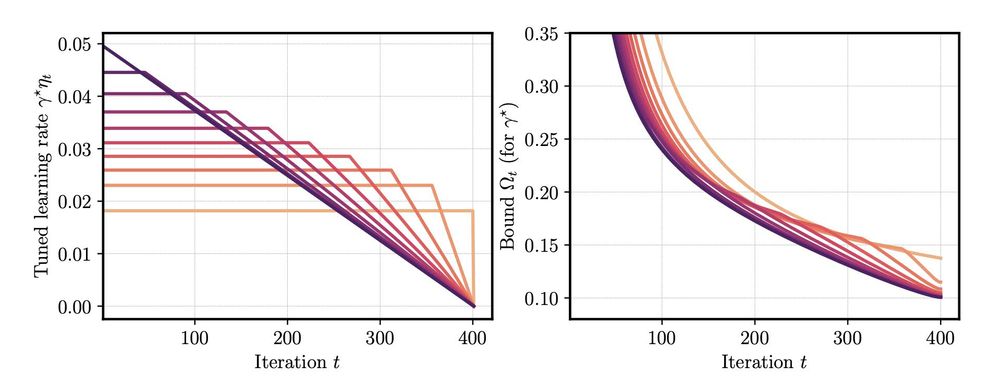

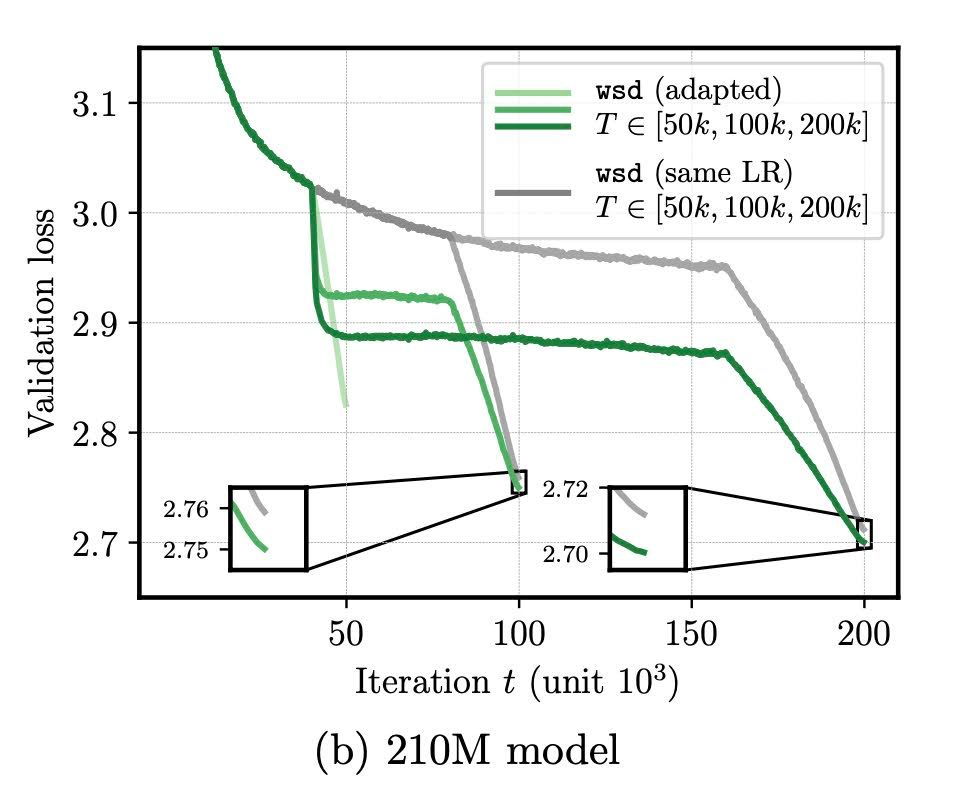

How does this help in practice? In continued training, we need to decrease the learning rate in the second phase. But by how much?

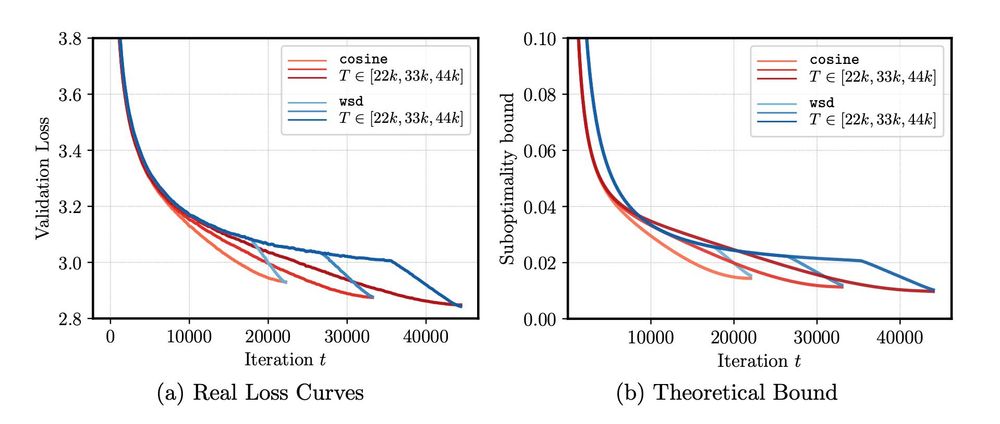

Using the theoretically optimal schedule (which can be computed for free), we obtain a noticeable improvement when training 124M and 210M models.

05.02.2025 10:13 — 👍 2 🔁 0 💬 1 📌 0

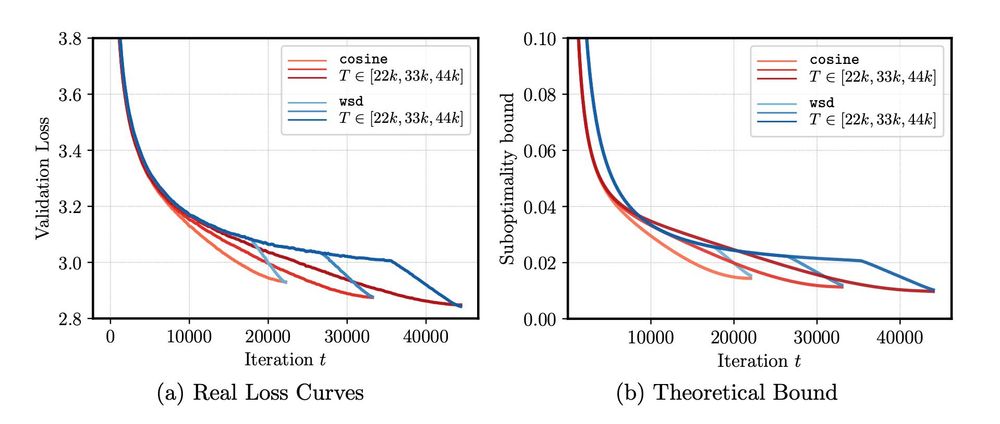

This allows us to understand LR schedules beyond experiments: we study (i) the optimal cooldown length, (ii) the impact of the gradient norm on schedule performance.

The second part suggests that the sudden drop in loss during cooldown happens when gradient norms do not go to zero.

05.02.2025 10:13 — 👍 1 🔁 0 💬 1 📌 0

Using a bound from arxiv.org/pdf/2310.07831, we can reproduce the empirical behaviour of the cosine and wsd (=constant+cooldown) schedules. Surprisingly, the bound is for convex problems, but it still matches the actual loss of (nonconvex) LLM training.
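For readers unfamiliar with the two schedules named above, here is a minimal Python sketch of their shapes. It is only an illustration, not the code behind the plots: the function names, the 20% cooldown fraction, the linear cooldown shape, and the decay to exactly zero are all assumptions, and warmup is left out.

```python
import math


def wsd_schedule(t, total_steps, base_lr=1.0, cooldown_frac=0.2):
    """Constant LR, then a linear cooldown to zero (assumed cooldown shape)."""
    if not 0.0 < cooldown_frac <= 1.0:
        raise ValueError("cooldown_frac must be in (0, 1]")
    # First step of the cooldown phase.
    cooldown_start = int(round((1.0 - cooldown_frac) * total_steps))
    if t < cooldown_start:
        return base_lr
    # Linear decay from base_lr at cooldown_start to 0 at total_steps.
    return base_lr * (total_steps - t) / (total_steps - cooldown_start)


def cosine_schedule(t, total_steps, base_lr=1.0):
    """Half-cosine decay from base_lr at step 0 to zero at total_steps."""
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * t / total_steps))


if __name__ == "__main__":
    T = 100
    for t in (0, 50, 80, 90, 100):
        print(t, round(wsd_schedule(t, T), 3), round(cosine_schedule(t, T), 3))
```

With these assumed defaults, wsd stays at the base LR for the first 80% of steps, while cosine starts decaying immediately.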

05.02.2025 10:13 — 👍 1 🔁 0 💬 1 📌 0

That time of the year again, where you delete a word and latex manages to make the line <longer>.

24.01.2025 09:26 — 👍 2 🔁 0 💬 0 📌 0

nice!

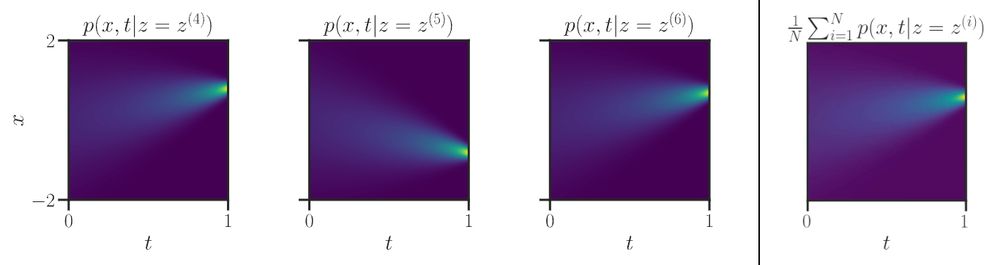

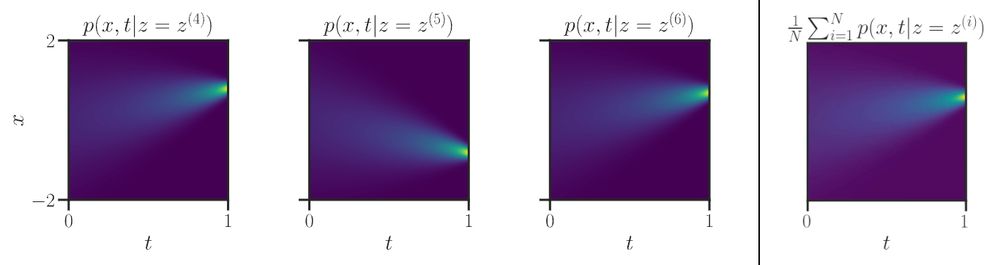

Figure 9 looks like a lighthouse guiding the way (towards the data distribution)

13.12.2024 16:27 — 👍 1 🔁 0 💬 0 📌 0

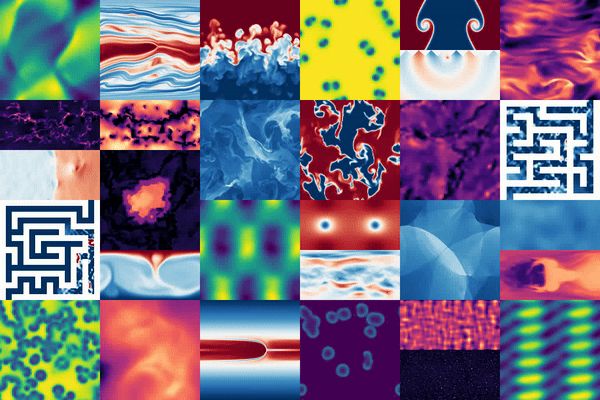

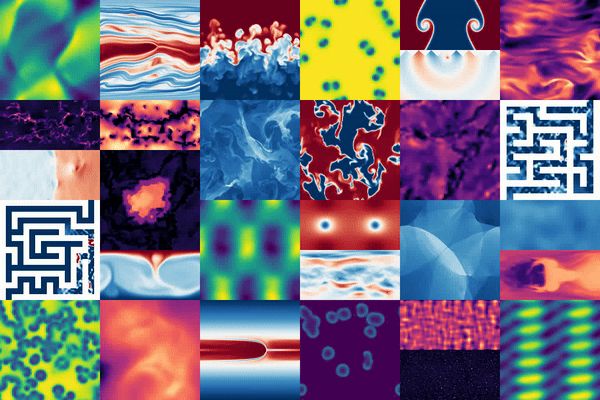

Generating cat videos is nice, but what if you could tackle real scientific problems with the same methods? 🧪🌌

Introducing The Well: 16 datasets (15TB) for Machine Learning, from astrophysics to fluid dynamics and biology.

🐙: github.com/PolymathicAI...

📜: openreview.net/pdf?id=00Sx5...

02.12.2024 16:08 — 👍 65 🔁 19 💬 3 📌 2

could you add me? ✌🏻

28.11.2024 07:32 — 👍 1 🔁 0 💬 1 📌 0

Not so fun exercise: take a recent paper that you consider exceptionally good, and one that you think is mediocre (at best).

Then look up their reviews on ICLR 2025. I find these reviews completely arbitrary most of the time.

25.11.2024 15:40 — 👍 1 🔁 0 💬 0 📌 0

my French 🇨🇵 digital bank (supposedly!) today asked me (via letter) to confirm an account action by sending them a signed letter. wtf

25.11.2024 12:41 — 👍 3 🔁 0 💬 0 📌 0

I made a #starterpack for computational math 💻🧮 so please

1. share

2. let me know if you want to be on the list!

(I have many new followers which I do not know well yet, so I'm sorry if you follow me and are not on here, but want to - drop me a note and I'll add you!)

go.bsky.app/DXdZkzV

18.11.2024 15:08 — 👍 59 🔁 28 💬 29 📌 1

would love to be added :)

22.11.2024 12:53 — 👍 1 🔁 0 💬 1 📌 0

EurIPS is a community-organized, NeurIPS-endorsed conference in Copenhagen where you can present papers accepted at @neuripsconf.bsky.social

eurips.cc

The #FondationTaraOcéan is the first foundation recognized as being of public interest that is dedicated to the Ocean in France. #ExplorerEtPartager

Human-centric urban #DataScience 🚶🚲 Sustainable #mobility #networks, in #Copenhagen. searchable

Created: https://datasci.social, https://growbike.net […]

🌉 bridged from https://datasci.social/@mszll on the fediverse by https://fed.brid.gy/

Trending papers in Vision and Graphics on www.scholar-inbox.com.

Scholar Inbox is a personal paper recommender which keeps you up-to-date with the most relevant progress in your field. Follow us and never miss a beat again!

I do SciML + open source!

🧪 ML+proteins @ http://Cradle.bio

📚 Neural ODEs: http://arxiv.org/abs/2202.02435

🤖 JAX ecosystem: http://github.com/patrick-kidger

🧑💻 Prev. Google, Oxford

📍 Zürich, Switzerland

Machine Learning engineer @planet. Mentor with Frontier Development Lab. Previously at Dropbox & Google. Started coworking. Interests: Machine Learning, space, Earth Observation, VR.

http://codinginparadise.org

Twitter: @bradneuberg

ML Professor at École Polytechnique. Python open source developer. Co-creator/maintainer of POT, SKADA. https://remi.flamary.com/

Principal research scientist at Google DeepMind. Synthesized views are my own.

📍SF Bay Area 🔗 http://jonbarron.info

This feed is a mostly-incomplete mirror of https://x.com/jon_barron, I recommend you just follow me there.

Associate Professor of Machine Learning, University of Oxford;

OATML Group Leader;

Director of Research at the UK government's AI Safety Institute (formerly UK Taskforce on Frontier AI)

senior research scientist at Google | author of DreamBooth

https://natanielruiz.github.io/

Musician, math lover, cook, dancer, 🏳️🌈, and an ass prof of Computer Science at New York University

Associate Professor in EECS at MIT. Neural nets, generative models, representation learning, computer vision, robotics, cog sci, AI.

https://web.mit.edu/phillipi/

Official account of Insee. Statistics and studies on the French economy and society. #Statistiques #économie #société

https://www.insee.fr/fr/accueil

Robustness, Data & Annotations, Evaluation & Interpretability in LLMs

http://mimansajaiswal.github.io/

PhD student at FAIR at Meta and Ecole des Ponts with @syhw and @Amaury_Hayat, co-supervised by @wtgowers. Machine learning for mathematics and programming.

TMLR Homepage: https://jmlr.org/tmlr/

TMLR Infinite Conference: https://tmlr.infinite-conf.org/

Professor in applied mathematics at University of Orléans 🇫🇷 & IUF Junior 2022 on an innovation chair.

Interested in image processing and deep generative models.

I teach generative models for imaging at #MasterMVA.