... so definitely more than 4.4 but less than 4.6 million? /s

source?

05.11.2025 02:12 — 👍 0 🔁 0 💬 1 📌 0

Just expressing my support for typst as well! It’s mature enough to typeset a PhD thesis, has a very mellow learning curve, and has a great community.

11.05.2025 22:45 — 👍 1 🔁 0 💬 0 📌 0

Orb-v3 out now -- achieves SOTA on speed *and* accuracy

arxiv.org/abs/2504.06231

09.04.2025 12:31 — 👍 1 🔁 0 💬 0 📌 0

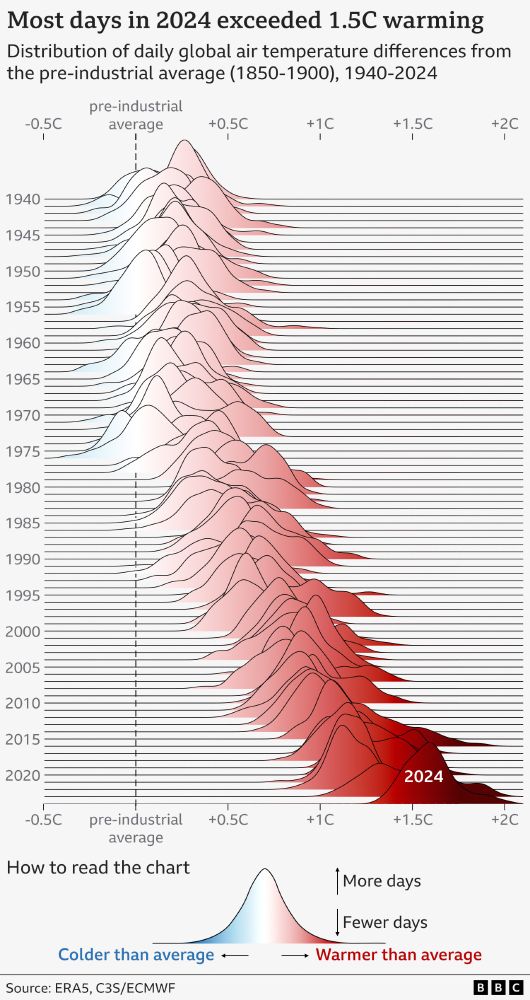

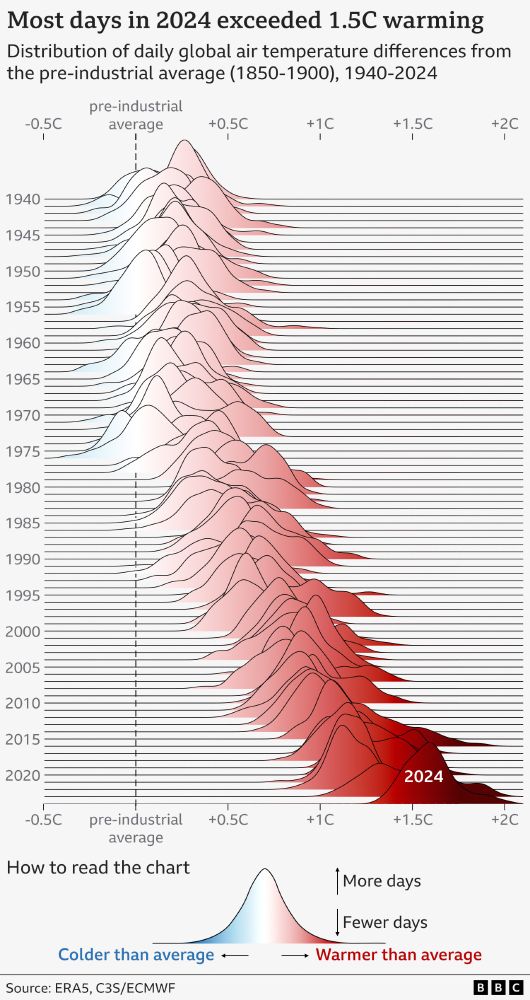

Ridgeline chart showing the distribution of global daily air temperature differences from the pre-industrial reference period (1850-1900), for every year between 1940 and 2024. Each individual year resembles a hill, shaded a darker red and positioned further to the right for warmer years. The trend is clearly towards warmer years, with 2024 standing out as the first year above 1.5C.

NEW: 2024 has just been confirmed as the warmest year on record, and the first to breach the 1.5C threshold.

We used a ridgeline (Joy Division inspired) chart to visualise daily temperature anomalies since 1940.

2024 clearly stands out with 100% of its days above 1.3C and 75% above 1.5C.

10.01.2025 08:04 — 👍 5920 🔁 2768 💬 211 📌 332
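
The two percentages above are plain threshold counts over one year of daily anomalies. A minimal sketch, assuming the anomalies (in °C relative to 1850-1900) are already available as an array; the toy values below are invented for illustration and are not the data behind the chart:

```python
import numpy as np

def share_of_days_above(daily_anomalies_c, threshold_c):
    """Fraction of days whose anomaly (deg C vs. the 1850-1900 baseline)
    is strictly above `threshold_c`."""
    anomalies = np.asarray(daily_anomalies_c, dtype=float)
    return float(np.mean(anomalies > threshold_c))

# Hypothetical toy "year" of 8 days (invented values, not real data)
toy_year = [1.35, 1.40, 1.52, 1.55, 1.60, 1.62, 1.70, 1.58]
print(share_of_days_above(toy_year, 1.3))  # 1.0
print(share_of_days_above(toy_year, 1.5))  # 0.75
```

With real data the input would simply be the 366 daily values for 2024.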

Typst: Compose papers faster

Focus on your text and let Typst take care of layout and formatting. Sign up now and speed up your writing process.

typst (the definitive LaTeX successor) and manim (for stunning visuals/movies/slideshows) are two incredibly useful pieces of software

typst.app

github.com/3b1b/manim

15.01.2025 20:42 — 👍 2 🔁 0 💬 0 📌 0

Anne Gagneux, Ségolène Martin, @quentinbertrand.bsky.social, Remi Emonet and I wrote a tutorial blog post on flow matching: dl.heeere.com/conditional-... with lots of illustrations and intuition!

We got this idea after their cool work on improving Plug and Play with FM: arxiv.org/abs/2410.02423

27.11.2024 09:00 — 👍 354 🔁 102 💬 12 📌 11
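
For the gist before reading the blog post: with the simplest straight-line path, conditional flow matching training reduces to regressing a velocity field onto x1 - x0. A minimal NumPy sketch, assuming a standard Gaussian source and linear interpolation (one common choice, not necessarily the exact construction used in the linked tutorial); `cfm_batch` and `cfm_loss` are hypothetical names:

```python
import numpy as np

def cfm_batch(x1, rng):
    """Build one conditional flow matching training batch.

    Assumes the straight-line path x_t = (1 - t) * x0 + t * x1 with
    x0 ~ N(0, I); its conditional target velocity is u_t = x1 - x0.
    """
    x0 = rng.standard_normal(x1.shape)        # source (noise) samples
    t = rng.uniform(size=(x1.shape[0], 1))    # one time per sample
    xt = (1.0 - t) * x0 + t * x1              # point on the path
    ut = x1 - x0                              # regression target
    return t, xt, ut, x0

def cfm_loss(velocity_fn, t, xt, ut):
    """Mean squared error between a model's velocity and the target."""
    pred = velocity_fn(t, xt)
    return float(np.mean(np.sum((pred - ut) ** 2, axis=1)))

rng = np.random.default_rng(0)
x1 = rng.normal(loc=3.0, size=(256, 2))      # toy "data" samples
t, xt, ut, x0 = cfm_batch(x1, rng)
zero_model = lambda t, x: np.zeros_like(x)
print(cfm_loss(zero_model, t, xt, ut))       # positive
oracle = lambda t, x: x1 - x0                # knows the pairing, so loss is 0
print(cfm_loss(oracle, t, xt, ut))           # 0.0
```

A trained network only sees (t, x_t), so it learns the average of these conditional velocities, which is the marginal field that transports noise to data.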

Here I was thinking I’d have a hard time convincing people RPA is empirical.

Do quantum monte carlo techniques have true potential or are we stuck with decades-old approximations invented by highly noncomputational scientists?

29.11.2024 00:17 — 👍 0 🔁 0 💬 0 📌 0

simple Python API to drive a single 'master' job, which then does everything else!

25.11.2024 18:09 — 👍 1 🔁 0 💬 0 📌 0
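
The "single master job" idea is a futures-based pattern: one lightweight Python process submits work and reacts to results, while the heavy lifting runs elsewhere. The sketch below illustrates only that general pattern with the standard library's `concurrent.futures`; it is not psiflow's API, and `run_single_point` and `master` are hypothetical names:

```python
from concurrent.futures import ThreadPoolExecutor, as_completed

def run_single_point(structure_id):
    """Hypothetical stand-in for an expensive task (e.g. a DFT single point).
    Returns a fake 'energy' so the sketch runs anywhere."""
    return structure_id, 0.5 * structure_id

def master(structure_ids, max_workers=4):
    """Single driver script: submit every task up front, then collect results
    as they finish. A real workflow engine would dispatch to cluster nodes;
    a local thread pool plays that role here."""
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(run_single_point, s) for s in structure_ids]
        for fut in as_completed(futures):
            sid, energy = fut.result()
            results[sid] = energy
    return dict(sorted(results.items()))

print(master(range(5)))  # {0: 0.0, 1: 0.5, 2: 1.0, 3: 1.5, 4: 2.0}
```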

scalable molecular simulation: github.com/molmod/psiflow

scientific: ML potentials, DFT and post-HF calculations, (path-integral) MD, replica exchange, alchemical ΔF, Hessians, ...

technical: automated job submission, simple Python, scales to >100 nodes, containerized!

25.11.2024 18:08 — 👍 5 🔁 0 💬 1 📌 0
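
The feature list mentions alchemical ΔF without detail. One textbook estimator for such free energy differences is Zwanzig's exponential averaging (free energy perturbation), ΔF = -k_BT ln⟨exp(-ΔU/k_BT)⟩_A. The sketch below implements only that formula, assuming energy differences in eV sampled from state A; it is not necessarily the estimator psiflow uses, and when A and B overlap poorly one typically needs intermediate λ states or BAR/MBAR instead:

```python
import numpy as np

K_B_EV = 8.617333262e-5  # Boltzmann constant, eV/K

def zwanzig_delta_f(delta_u_ev, temperature_k):
    """F_B - F_A (eV) from exponential averaging (free energy perturbation).

    delta_u_ev: U_B(x) - U_A(x) for configurations x sampled in state A.
    A log-sum-exp shift keeps exp() from overflowing or underflowing.
    """
    beta = 1.0 / (K_B_EV * temperature_k)
    w = -beta * np.asarray(delta_u_ev, dtype=float)
    w_max = w.max()
    log_mean_exp = w_max + np.log(np.mean(np.exp(w - w_max)))
    return -log_mean_exp / beta

# Hypothetical energy differences (eV), e.g. from re-evaluating
# A-sampled snapshots with the Hamiltonian of state B
rng = np.random.default_rng(0)
du = rng.normal(loc=0.10, scale=0.02, size=1000)
print(zwanzig_delta_f(du, 300.0))  # at most du.mean(), by Jensen's inequality
```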

is there a #compchem starter pack here?

25.11.2024 14:23 — 👍 2 🔁 0 💬 1 📌 0

a golden (😂) PES

Actually, from that perspective, even a 1000x slowdown could be acceptable since it would be used less for super long MDs and more for building models above and beyond atomic-level MD...

24.11.2024 23:05 — 👍 1 🔁 0 💬 0 📌 0

From a distance (and this is probably controversial), it feels like AF has made so much progress that would otherwise have required decades of atomic simulations?

24.11.2024 15:42 — 👍 1 🔁 0 💬 1 📌 0

For drug discovery, do you think more accurate atomic interactions are the way to go, or will people gradually abandon bottom-up atomic-level simulations?

24.11.2024 15:40 — 👍 0 🔁 0 💬 1 📌 0

So as long as all possible low-density environments are included in training, putting a limit at fixed cutoff makes sense?

24.11.2024 15:04 — 👍 1 🔁 0 💬 0 📌 0

Hmm, yeah, and maybe the fixed neighbors thing is just a trick to speed up training and improve performance on the synthetic benchmarks

At the same time: beyond a “threshold” number of neighbors, there is maybe so much screening that the number of neighbors one needs to include becomes constant?

24.11.2024 15:03 — 👍 1 🔁 0 💬 2 📌 0

I should really get out of my matsci cave because I am so unaware of these things 😂

I was imagining OpenMM with a bunch of custom force expressions, PME, and anisotropic pressure control (often needed in the solid state) at roughly 1 ms/step, and maybe 100 ms/step for MACE on a similar system.

24.11.2024 14:53 — 👍 1 🔁 0 💬 2 📌 0
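
To put per-step costs like these into more familiar units, the arithmetic from ms/step to simulated ns/day is short. A sketch, assuming a 1 fs timestep (the post does not state one) and ignoring I/O and analysis overhead:

```python
def ns_per_day(ms_per_step, timestep_fs):
    """Simulated nanoseconds per wall-clock day."""
    steps_per_day = 86_400.0 / (ms_per_step * 1e-3)  # seconds/day over seconds/step
    return steps_per_day * timestep_fs * 1e-6         # fs -> ns

# The 1 fs timestep is an assumption; the per-step costs are the rough
# estimates from the post.
for label, ms in [("OpenMM, classical", 1.0), ("MACE", 100.0)]:
    print(f"{label}: {ns_per_day(ms, timestep_fs=1.0):.3g} ns/day")
# OpenMM, classical: 86.4 ns/day
# MACE: 0.864 ns/day
```

The factor of 100 in per-step cost carries straight through to throughput.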

at least in my experience!

24.11.2024 14:41 — 👍 1 🔁 0 💬 0 📌 0

Although matbench leading entries usually truncate the number of neighbors to consider to a fixed number, such that the cost of a single message passing layer no longer scales with density …

24.11.2024 14:40 — 👍 1 🔁 0 💬 1 📌 0
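
The contrast between a fixed radial cutoff and a fixed neighbor count can be made concrete. A minimal sketch, assuming random atoms in a cubic periodic box (toy densities, not a real material) and brute-force O(N²) distances: with a cutoff, the number of edges per atom, and hence the per-layer message-passing cost, grows roughly linearly with density, whereas a k-nearest-neighbor graph keeps it fixed at k. The cutoff and k values are arbitrary illustrative choices:

```python
import numpy as np

def pair_distances(positions, box):
    """All pairwise distances in a cubic periodic box (minimum image), O(N^2).
    Valid only for cutoffs below box / 2."""
    diff = positions[:, None, :] - positions[None, :, :]
    diff -= box * np.round(diff / box)       # minimum-image convention
    d = np.linalg.norm(diff, axis=-1)
    np.fill_diagonal(d, np.inf)              # an atom is not its own neighbor
    return d

def neighbor_counts(d, cutoff):
    """Edges per atom in a radial-cutoff graph."""
    return (d < cutoff).sum(axis=1)

def knn_indices(d, k):
    """Indices of the k nearest neighbors per atom: a fixed-size graph."""
    return np.argsort(d, axis=1)[:, :k]

rng = np.random.default_rng(0)
n_atoms, cutoff, k = 200, 5.0, 12            # arbitrary illustrative values
for density in (0.02, 0.08):                 # atoms per cubic angstrom (toy)
    box = (n_atoms / density) ** (1 / 3)
    pos = rng.uniform(0.0, box, size=(n_atoms, 3))
    d = pair_distances(pos, box)
    print(f"density {density}: cutoff graph ~{neighbor_counts(d, cutoff).mean():.1f} "
          f"edges/atom, k-NN graph {knn_indices(d, k).shape[1]} edges/atom")
```

The k-NN graph is generally not symmetric, and its spatial range shrinks as density grows, which is the trade-off the thread is weighing.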

Right sorry, wasn’t counting bio! Though I think it’s more the increased density than sheer system size that widens the performance gap?

In matsci / catalysis, with proper enhanced sampling, the main worry is not so much the achievable time scales as the accuracy of the QM data…

24.11.2024 14:39 — 👍 1 🔁 0 💬 2 📌 0

100x because that’s how much slower an “optimized” ML potential for any particular system would be. I might be optimistic here, but a small MACE network and the right training data have always gotten me below ~1 meV/atom and ~50 meV/Å errors.

24.11.2024 11:09 — 👍 1 🔁 0 💬 1 📌 0
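
Error figures like these are usually per-atom energy errors and per-component force errors. A minimal sketch of one common convention (mean absolute errors; some papers report RMSE instead), assuming energies in meV and forces in meV/Å; the function names and numbers are illustrative:

```python
import numpy as np

def energy_mae_per_atom(e_pred, e_ref, n_atoms):
    """MAE of total energies, each error divided by its structure's atom count."""
    e_pred, e_ref, n_atoms = map(np.asarray, (e_pred, e_ref, n_atoms))
    return float(np.mean(np.abs(e_pred - e_ref) / n_atoms))

def force_mae(f_pred, f_ref):
    """MAE over all Cartesian force components."""
    return float(np.mean(np.abs(np.asarray(f_pred) - np.asarray(f_ref))))

# Hypothetical numbers: two structures of 10 and 20 atoms, energies in meV
print(energy_mae_per_atom([10.0, -20.0], [0.0, 0.0], [10, 20]))  # 1.0
# One atom, forces in meV/A
print(force_mae([[30.0, -60.0, 90.0]], [[0.0, 0.0, 0.0]]))       # 60.0
```

Normalizing per atom lets energy errors be compared across system sizes, while the force MAE is already size-independent.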

Epic capture… Grand Canyon National Park in Arizona ✨👏✨😎✨

24.11.2024 02:05 — 👍 71389 🔁 4843 💬 1271 📌 347

Thrilled to announce Boltz-1, the first open-source and commercially available model to achieve AlphaFold3-level accuracy on biomolecular structure prediction! An exciting collaboration with Jeremy, Saro, and an amazing team at MIT and Genesis Therapeutics. A thread!

17.11.2024 16:20 — 👍 611 🔁 204 💬 18 📌 25

new SOTA on collective variable learning!

gist? Train classifier in feature space of pretrained GNN to predict 'phase' of an atomic geometry:

CV(A->B) = logit(B) - logit(A)

+data-efficient

+invariant wrt trans/rot/perm

+compatible w foundation models!

08.04.2024 13:00 — 👍 3 🔁 0 💬 1 📌 0
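
The recipe in this post (a classifier on top of frozen GNN features, with the CV defined as a logit difference) can be sketched in a few lines. This is a minimal illustration, not the authors' code: it assumes per-structure feature vectors have already been extracted from some pretrained model (here replaced by synthetic Gaussian clusters), trains a plain two-class linear softmax classifier by gradient descent, and returns logit(B) - logit(A). Invariance to translation, rotation, and permutation would come from the GNN features, which the toy data does not model:

```python
import numpy as np

def train_linear_classifier(feats, labels, lr=0.1, steps=500):
    """Two-class linear softmax classifier on fixed features.

    feats: (n_samples, n_features), assumed to be frozen embeddings.
    labels: 0 for phase A, 1 for phase B.
    Returns weights W (n_features, 2) and biases b (2,).
    """
    n, d = feats.shape
    W = np.zeros((d, 2))
    b = np.zeros(2)
    onehot = np.eye(2)[labels]
    for _ in range(steps):
        logits = feats @ W + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - onehot) / n                   # d(mean cross-entropy)/d(logits)
        W -= lr * feats.T @ grad
        b -= lr * grad.sum(axis=0)
    return W, b

def collective_variable(feats, W, b):
    """CV(A->B) = logit(B) - logit(A)."""
    logits = feats @ W + b
    return logits[:, 1] - logits[:, 0]

# Synthetic stand-ins for pretrained-GNN features of two phases
rng = np.random.default_rng(0)
feats_a = rng.normal(-1.0, 0.5, size=(100, 8))
feats_b = rng.normal(+1.0, 0.5, size=(100, 8))
X = np.vstack([feats_a, feats_b])
y = np.array([0] * 100 + [1] * 100)
W, b = train_linear_classifier(X, y)
cv = collective_variable(X, W, b)
print(cv[:100].mean() < 0 < cv[100:].mean())  # True
```

For two classes the logit difference is just an affine function of the features, so the CV stays smooth and cheap to differentiate, which is what enhanced-sampling biases need.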

We create proteins that solve modern challenges in medicine, technology, and sustainability.

• 2024 Nobel Prize in Chemistry

• University of Washington, Seattle

→ ipd.uw.edu

Senior Staff Research Scientist @Google DeepMind, previously Stats Prof @Oxford Uni - interested in Computational Statistics, Generative Modeling, Monte Carlo methods, Optimal Transport.

messing up with gaussians

Head of Sci/cofounder at futurehouse.org. Prof of chem eng at UofR (on sabbatical). Automating science with AI and robots in biology. Corvid enthusiast

Husband, dad, veteran, writer, and proud Midwesterner. 19th US Secretary of Transportation and former Mayor of South Bend.

PhD student @ Ghent University

Statistical mechanic working on generative models for biophysics and beyond. Assistant professor at Stanford. https://statmech.stanford.edu

Associate Research Professor Ghent University * @ERC.europa.eu StG Grantee - STRAINSWITCH * Engineering Physicist * Co-president @JongeAcademie.bsky.social

Co-Founder & Chief Scientist @ Emmi AI. Ass. Prof / Group Lead @jkulinz. Former MSFTResearch, UvA_Amsterdam, CERN, TU_Wien

Head of ML at Orbital Materials. BC: Research/Eng at Allen Institute for AI.

markneumann.xyz

Computer scientist, Bioinformatician, PhD in Chemistry and Molecular Sciences. Working on the above as an Assistant Prof at WUR. Interested in all the sciences. Materialist. Fan of cats, Punk Rock, and Hockey.

Achira | http://achira.ai

Research laboratory | http://choderalab.org

Antiviral drug discovery for pandemics | http://asapdiscovery.org

OpenADMET | http://openadmet.org

Employer-mandated disclaimer: http://choderalab.org/disclaimer

Pronouns: he/him

Professor of Chemistry and Computer Science

University of Toronto

Faculty member, Vector Institute

Director, Acceleration Consortium

Senior Director of Quantum Chemistry NVIDIA

My views expressed here are personal and are not those of my employers.

Chemist, husband, father, singer. Sustainability, catalysis, stereochemistry, predictive modeling. Senior Principal Scientist @astrazeneca.bsky.social Gothenburg. he/him

Chemistry professor at CMU. Connecting chemical sciences with AI #MachineLearning and automated experimentation. #tarheels fan. Care: #design, #photography #Ukraine #cats🐈 Rants are mine

Computational Biophysicist, Amateur Photographer, History+Language Nerd.

All views my own.

Website: https://martinvoegele.github.io/

Assistant Professor at Princeton. Quantum-chemical engineer and materials designer.

https://rosen.cbe.princeton.edu

Physicist, professor @univie.ac.at, director of @esivienna.bsky.social, computational physics, statistical mechanics, machine learning, soft matter, biking, hiking, skiing, *320 ppm. 🇪🇺