Yes!

14.11.2025 19:23 — 👍 4 🔁 0 💬 0 📌 0

Matt Perich

@mattperich.bsky.social

Neuroscience, engineering, AI, music. Asst. Professor / PI at University of Montréal and Mila.

My CIHR committee was already at an 11% funding line last cycle (and I don't think it's the lowest)

06.11.2025 20:29 — 👍 0 🔁 0 💬 0 📌 0

Yes but thankfully it's only a small cut in the current plan (which is kind of a win given how bad it could have gone). But certainly worse than a sustained increase, given the increasing applicant pool.

www.science.org/content/arti...

That being said, investment is investment, and it all could be a lot worse! Having a government acknowledging scientific excellence as a priority is a good signal.

06.11.2025 18:45 — 👍 2 🔁 0 💬 0 📌 0

Fully agree. The unfortunate thing is that this is paired with cuts to federal research budgets. Importing highly competitive "big fish" while shrinking the pot for grants is not a great combo for the broader ecosystem... If Canada really wants to capitalize they need to think short and long term

06.11.2025 18:31 — 👍 4 🔁 0 💬 2 📌 0

🚨Job alert🚨

The lab has up to *3 postdoc openings* for comp systems neuroscientists interested in describing and manipulating neural population dynamics mediating behaviour

This is part of a collaborative ARIA grant "4D precision control of cortical dynamics"

euraxess.ec.europa.eu/jobs/383909

If you're interested in dynamical systems analysis for neuroscience, definitely check out @oliviercodol.bsky.social's revised version of our RL paper! Very cool results in the new Fig 6, worth it whether you saw our previous version or it's all new to you.

www.biorxiv.org/content/10.1...

A big "get" for the Champalimaud! Excited to see what comes out of the Warehouse and the next phase of Juan's lab

07.10.2025 19:12 — 👍 7 🔁 0 💬 1 📌 0

Very excited and proud to share my postdoctoral research with @neurrriot.bsky.social looking at the context-specific encoding of social behavior 💃🕺 in hormone-sensitive, large-scale brain networks in mice!

www.biorxiv.org/content/10.1...

#neuroskyence #compneurosky 🧪

1/12

Of potential interest to those keen on motor control and/or multi-task networks. Congrats to Elom and Eric.

www.biorxiv.org/content/10.1...

Thanks!

13.08.2025 23:36 — 👍 0 🔁 0 💬 0 📌 0

Thanks!

13.08.2025 18:48 — 👍 0 🔁 0 💬 0 📌 0

More info on our funded project is here: webapps.cihr-irsc.gc.ca/decisions/p/.... In many ways, this directly follows our 2020 paper: www.biorxiv.org/content/10.1.... But we have much broader ambitions too and are building a flexible platform for NHP motor ephys. Should be a fun 5 years!

13.08.2025 16:23 — 👍 5 🔁 0 💬 1 📌 0

IMO Montreal is one of the best cities out there for neuro, and everyone in my lab will get to enjoy learning from and interacting with the NeuroAI folks at Mila. If modeling the computations behind multi-modal sensorimotor integration and adaptation is of interest to you, please reach out!

13.08.2025 16:23 — 👍 12 🔁 0 💬 1 📌 0

🚨 I’m excited to say that my CIHR Project Grant was funded! My NHP lab is now full-speed-ahead, and I’m hiring experimentalists (postdoc, PhD student, and/or a tech/manager). We’ll do multi-region ephys during reaching/grasping in macaques, with behavioral and spinal perturbations.

13.08.2025 16:23 — 👍 84 🔁 25 💬 2 📌 3

Awesome work from @juangallego.bsky.social and lab. An interface from single motoneuron control in tetraplegia!

07.08.2025 19:07 — 👍 6 🔁 0 💬 1 📌 0

Check out @jordancollver.bsky.social’s great illustration of modular RNNs training to work like a bio-brain🦾🧠

Thanks to Crearte for featuring our collaboration!

www.instagram.com/crearte.ca/p...

Very happy about my former mentor Sara Solla having received the Valentin Braitenberg Award for her lifelong contributions to computational neuroscience!

Sara will be giving a lecture at the upcoming @bernsteinneuro.bsky.social meeting which you shouldn't miss.

bernstein-network.de/en/newsroom/...

Could be a cell type difference (Gardner 2022 focused on grid cells) or a region difference? Methods difference? Lots of interesting possibilities!

04.08.2025 20:35 — 👍 0 🔁 0 💬 0 📌 0

Though I will say that some evidence suggests it's not going to always be 1:1 with environment. E.g. the 2022 Gardner ERC place cell paper we mention in the article has place cells mapping even square environments into a toroid shape. Though the Guo 2024 CA1 paper has environment-shaped manifolds

04.08.2025 20:34 — 👍 0 🔁 0 💬 2 📌 0

There's a long and fun conversation to be had here 🙂. But I agree, the "many-to-few" nature of neurons to manifolds allows considerable drift in single neuron activity without changing the manifold. Important in next steps to find out when, and how, neural drift changes manifold-level properties!

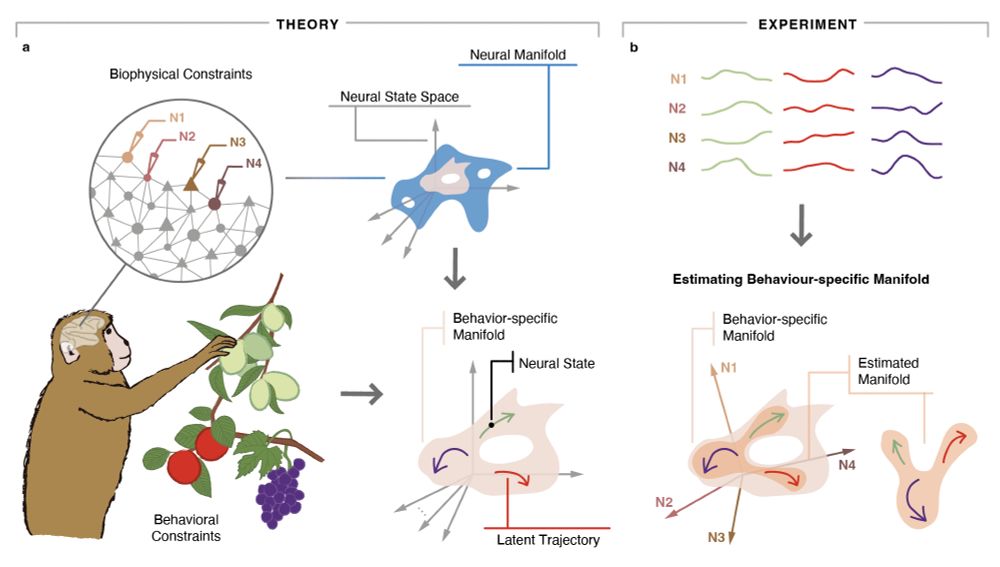

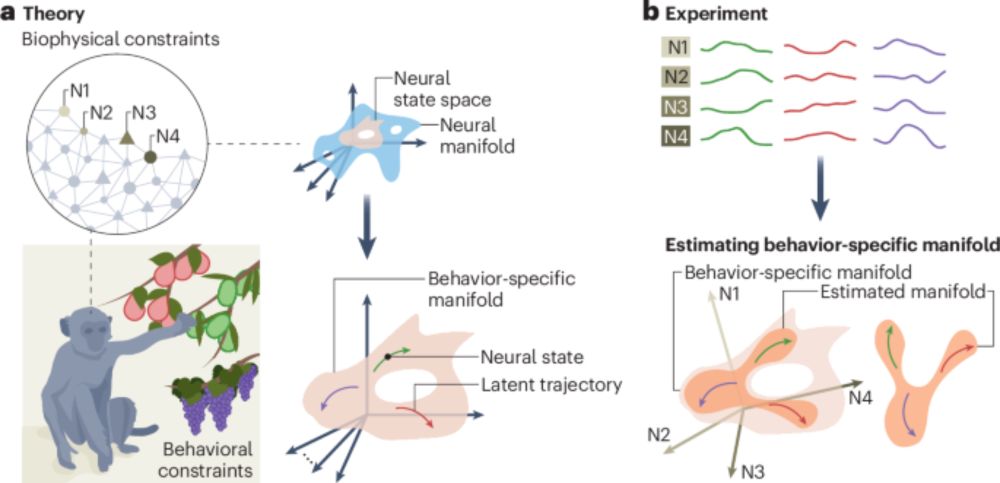

04.08.2025 20:27 — 👍 4 🔁 0 💬 0 📌 0

Indeed, IMO behavior (and environment, etc) are inextricably linked to manifold properties. For this reason, comparative (e.g. for same behavior, are manifolds different in different regions?) and causal (e.g., move activity on the manifold and predict behavioral changes) experiments are essential.

04.08.2025 20:24 — 👍 0 🔁 0 💬 1 📌 0

Thanks for the kind words and really glad you enjoyed the article!

04.08.2025 20:23 — 👍 4 🔁 0 💬 0 📌 0

Thanks to @emilysingerneuro.bsky.social for another opportunity to work with The Transmitter (which is an awesome publication), and of course the many, many long conversations on manifolds with @juangallego.bsky.social that shaped these articles 🙂

04.08.2025 18:47 — 👍 4 🔁 0 💬 1 📌 0

📰 I really enjoyed writing this article with @thetransmitter.bsky.social! In it, I summarize parts of our recent perspective article on neural manifolds (www.nature.com/articles/s41...), with a focus on highlighting just a few cool insights into the brain we've already seen at the population level.

04.08.2025 18:45 — 👍 54 🔁 15 💬 1 📌 1

🚨New paper🚨

Neural manifolds went from a niche-y word to a ubiquitous term in systems neuro thanks to many interesting findings across fields. But like with any emerging term, people use it very differently.

Here, we clarify our take on the term, and review key findings & challenges rdcu.be/ex8hW

'manifolds', and the overall conception of the brain using a dynamical systems framework, have come a long way.

30.07.2025 05:36 — 👍 20 🔁 5 💬 0 📌 0

A lot has changed since we wrote our last perspective piece in 2017 (www.cell.com/neuron/fullt...), both in how we think about neural manifolds and in their prevalence in the field. We hope this paper provides a good primer for the ideas, and points towards some big open questions in this space.

29.07.2025 19:07 — 👍 5 🔁 0 💬 0 📌 0

Check out our new review/perspective (w/ @juangallego.bsky.social & Devika Narain) on neural manifolds in the brain! It was a lot of fun to think through these ideas over the past couple of years, and I'm excited it's finally out in the world!

🔗: www.nature.com/articles/s41...

📄: rdcu.be/ex8hW

I guess I wouldn't think of babies as "learning" a foundation model. IMO a better analogy is that evolution learned the foundation model and babies during development are fine-tuning it (albeit in a "multi-task" way) for their bodies/experiences.

01.07.2025 19:02 — 👍 3 🔁 0 💬 2 📌 0