Zebra-CoT: A Dataset for Interleaved Vision Language Reasoning

arxiv: arxiv.org/abs/2507.16746

data: huggingface.co/datasets/mul...

@jbohnslav.bsky.social

computer vision + machine learning. Perception at Zoox. Prev: Cobot, PhD. Arxiv every day.

Zebra-CoT: A Dataset for Interleaved Vision Language Reasoning

arxiv: arxiv.org/abs/2507.16746

data: huggingface.co/datasets/mul...

ZEBRA-CoT

Dataset for vision-language reasoning where the model *generates images during the CoT*. Example: for geometry problems, it's helpful to draw lines in image space.

182K CoT labels: math, visual search, robot planning, and more.

Only downside: cc-by-nc license :(

Franca: Nested Matryoshka Clustering for Scalable Visual Representation Learning

arxiv: arxiv.org/abs/2507.14137

code: github.com/valeoai/Franca

Cool technique: RASA, Removal of Absolute Spatial Attributes. They decode grid coords and find the plane in feature space that encodes position. They basically subtract this off, baking it into the last linear layer to leave the forward pass unchanged.

23.07.2025 12:17 — 👍 0 🔁 0 💬 1 📌 0

Beats or is competitive to SigLIP/2, DinoV2 on linear eval, OOD detection, linear segmentation.

23.07.2025 12:17 — 👍 0 🔁 0 💬 1 📌 0

Franca

Fully open vision encoder. Masks image, encodes patches, then trains student to match teacher's clusters. Key advance: Matryoshka clustering. Each slice of the embedding gets its own projection head and clustering objective. Fewer features == fewer clusters to match.

VRU-Accident: A Vision-Language Benchmark for Video Question Answering and Dense Captioning for Accident Scene Understanding

arxiv: arxiv.org/abs/2507.098...

project: vru-accident.github.io

VRU-Accident

New benchmark of 1K videos, 1K captions, and 6K MCQs from accidents involving VRUs. Example: "why did the accident happen?" "(B): pedestrian moves or stays on the road."

Current VLMs get ~50-65% accuracy, much worse than humans (95%).

BlindSight: Harnessing Sparsity for Efficient VLMs

arxiv: arxiv.org/abs/2507.090...

Side note: I've always liked Pali/Gemma's Prefix-LM masking. Why have causal attention for image tokens?

15.07.2025 13:56 — 👍 0 🔁 0 💬 1 📌 0

BlindSight

AMD paper: they find attention heads often have stereotyped sparsity patterns (e.g. only attending within an image, not across). They generate sparse attention variants for each prompt. Theoretically saves ~35% FLOPs for 1-2% worse on benches.

Scaling RL to Long Videos

arxiv: arxiv.org/abs/2507.07966

code: github.com/NVlabs/Long-RL

Long-RL

Nvidia paper scaling RL to long videos. First trains with SFT on a synthetic long CoT dataset, then does GRPO with up to 512 video frames. Uses cached image embeddings + sequence parallelism, speeding up rollouts >2X.

Bonus: code is already up!

Skywork-R1V3 Technical Report

arxiv: arxiv.org/abs/2507.06167

code: github.com/SkyworkAI/Sk...

They identify entropy of "wait" or "alternatively" to be strongly correlated with MMMU. Neat!

09.07.2025 15:41 — 👍 2 🔁 0 💬 1 📌 0

Fine-tuning the connector at the end gives a point or two of MMMU. I wonder how much of this is benchmaxxing--I haven't seen an additional SFT stage after RL before.

09.07.2025 15:41 — 👍 0 🔁 0 💬 1 📌 0

They construct their warm-start SFT data with synthetic traces from Skywork-R1V2.

GRPO is pretty standard, interesting that they just did math instead of math, grounding, other possible RLVR tasks. Qwen-2.5-Instruct 32B to judges the accuracy of the answer in addition to rule-based verification.

Skywork-R1V3: new reasoning VLM with 76% MMMU.

InternViT-6B stitched with QwQ-32B. SFT warmup, GRPO on math, then a small SFT fine-tune at the end.

Good benches, actual ablations, and interesting discussion.

Details: 🧵

High-Resolution Visual Reasoning via Multi-Turn Grounding-Based Reinforcement Learning

arxiv: arxiv.org/abs/2507.05920

code: github.com/EvolvingLMMs...

Training: use verl with vLLM for rollouts. Limit image resolution to 1280 visual tokens. Train on 32 H100s.

Results: +18 points better on V* compared to Qwen2.5-VL, and +5 points better than GRPO alone.

RL: GRPO. Reward: only correct answer, not valid grounding coordinates. Seems weird to not add that though.

Data: training subset of MME-RealWorld. Evaluate on V*.

Uses Qwen2.5-VL as a base model. The NaViT encoder makes it easy to have many images of different shapes.

They use a SFT warm-start, as the VLMs struggled to output good grounding coordinates. They constructed two-turn samples for this.

MGPO: multi-turn grounding-based policy optimization.

I've been waiting for a paper like this! Trains the LLM to iteratively crop regions of interest to answer a question, and the only reward is the final answer.

Details in thread 👇

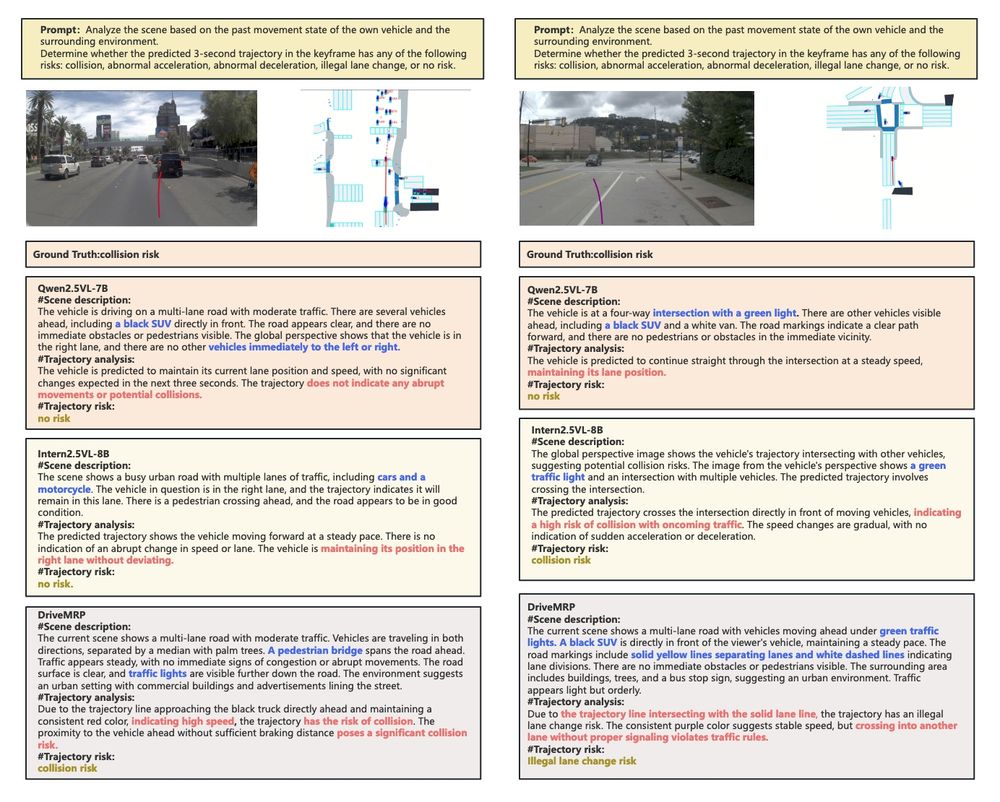

DriveMRP: Enhancing Vision-Language Models with Synthetic Motion Data for Motion Risk Prediction

arxiv: arxiv.org/abs/2507.02948

code: github.com/hzy138/Drive...

Using automatically generated risk category labels and the front-facing view, they have GPT4o caption the scenarios. The metrics are based on caption similarity + classification metrics on riskiness-type.

08.07.2025 14:03 — 👍 0 🔁 0 💬 1 📌 0

DriveMRP: interesting method to get a VLM to understand BEV maps + driving scenarios

They synthesize high-risk scenes derived from NuPlan. They render it as both a bird's eye view image and a front camera view.

👇

SeqGrowGraph: Learning Lane Topology as a Chain of Graph Expansions

arxiv: arxiv.org/abs/2507.048...

SeqGrowGraph

Instead of segment + postprocess, generate lane graphs autoregressively. Node == vertex in BEV space, edge == control point for Bezier curves. At each step, a vertex is added and the adjacency matrix adds one row + column.

They formulate this process as next token prediction. Neat!

GLM-4.1V-Thinking: Towards Versatile Multimodal Reasoning with Scalable Reinforcement Learning

arxiv: arxiv.org/abs/2507.01006

code: github.com/THUDM/GLM-4....

Excitingly, in one of their few shown results, multi-domain RL shows positive cross-task-transfer. Training on GUI agent data improves STEM answers, OCR, and grounding.

02.07.2025 13:55 — 👍 0 🔁 0 💬 1 📌 0