These results suggest that perceptual strategies are shaped by the reliability of encoding at early stages of the auditory system. 🧵5/5

07.02.2026 08:56 — 👍 1 🔁 0 💬 0 📌 0

We find that neural tracking of pitch is linked to pitch cue weighting during word emphasis and lexical stress perception. Specifically, higher pitch weighting is linked to increased tracking of pitch at early latencies within the neural response, from 15 to 55 ms. 🧵4/5

07.02.2026 08:55 — 👍 0 🔁 0 💬 1 📌 0

Here, we tested the hypothesis that the reliability of early auditory encoding of a given dimension is linked to the weighting placed on that dimension during speech categorization. We tested this in 60 first language speakers of Mandarin learning English as a second language. 🧵3/5

07.02.2026 08:55 — 👍 1 🔁 0 💬 1 📌 0

Linguistic categories are conveyed in speech by many acoustic cues at the same time, but not all of them are equally important. There are clear and replicable individual differences in how people use those cues during speech perception, but the underlying mechanisms are unclear. 🧵2/5

07.02.2026 08:55 — 👍 1 🔁 0 💬 1 📌 0

Early neural encoding of pitch drives cue weighting during speech perception

Abstract. Linguistic categories are conveyed in speech by several acoustic cues simultaneously, so listeners need to decide how to prioritize different potential sources of information. There are robu...

🚨New paper🚨 about mechanisms underlying individual differences in cue weighting doi.org/10.1162/IMAG... from fun times at @audioneurolab.bsky.social @birkbeckpsychology.bsky.social with @ashleysymons.bsky.social, Kazuya Saito, Fred Dick, and @adamtierney.bsky.social #psychscisky #neuroskyence 🧵1/5

07.02.2026 08:54 — 👍 12 🔁 3 💬 1 📌 0

Together, these results suggest that the precision with which people perceive and remember sound patterns plays a major role in how well they understand accented speech, and that auditory training may help listeners who struggle. 🧵5/5

03.02.2026 09:44 — 👍 1 🔁 0 💬 0 📌 0

Native English speakers who were better at understanding the accent were also better at detecting pitch differences, remembering sound patterns, and attending to pitch. Musical training also helped. Better speech perception was also linked to stronger neural encoding of speech harmonics. 🧵4/5

03.02.2026 09:44 — 👍 1 🔁 0 💬 1 📌 0

In this study, we asked L1 English speakers to listen to the prosody of Mandarin-accented English. We found that some listeners are better at understanding accented speech than others. 🧵3/5

03.02.2026 09:42 — 👍 1 🔁 0 💬 1 📌 0

Non-native speakers of English speak with varying degrees of accent. So far, research has focused more on factors that help learners communicate more effectively. But what about the listeners? Are there factors that make it easier for native listeners to understand accented speech? 🧵2/5

03.02.2026 09:42 — 👍 1 🔁 0 💬 1 📌 0

🚨New paper🚨 about accented speech perception doi.org/10.1016/j.ba... by the brilliant Amir Ghooch Kanloo (an MSc student at the time!), together with me, Kazuya Saito and @adamtierney.bsky.social, from fun times at @audioneurolab.bsky.social @birkbeckpsychology.bsky.social 🧵1/5

03.02.2026 09:40 — 👍 11 🔁 4 💬 1 📌 0

#NeuroJobs

01.12.2025 17:11 — 👍 0 🔁 0 💬 0 📌 0

If you haven't, you should, it's brilliant!

18.11.2025 10:03 — 👍 7 🔁 2 💬 0 📌 0

As it's hiring season again I'm resharing the NeuroJobs feed. Add #NeuroJobs to your post if you're recruiting or looking for an RA, PhD, Postdoc, or faculty position in Neuro or an adjacent field.

bsky.app/profile/did:...

03.09.2025 15:25 — 👍 46 🔁 28 💬 3 📌 0

Humans largely learn language through speech. In contrast, most LLMs learn from pre-tokenized text.

In our #Interspeech2025 paper, we introduce AuriStream: a simple, causal model that learns phoneme, word & semantic information from speech.

Poster P6, tomorrow (Aug 19) at 1:30 pm, Foyer 2.2!

19.08.2025 01:12 — 👍 52 🔁 10 💬 1 📌 1

My PhD student Yue Li is looking for L1 speakers of Chinese and Spanish for her online English experiment! Please see below for details!

14.08.2025 15:01 — 👍 12 🔁 25 💬 2 📌 0

🎧 Join us for some fun listening tasks!

🧠 Researchers at the University of Manchester want to recruit normal-hearing volunteers aged 18-50 who are native English speakers to take part in research that will help us understand different aspects of listening in noise.

#hearinghealth #research

23.07.2025 13:11 — 👍 1 🔁 1 💬 0 📌 0

A ✨bittersweet✨ moment – after 5 years at UCL, my final first-author project with @smfleming.bsky.social is ready to read as a preprint! 🥲

25.07.2025 09:23 — 👍 31 🔁 8 💬 2 📌 1

Nice review, but why "controversies"? Evidence isn’t controversial. Like "epiphenomenon," it often just means, "doesn’t fit my hypothesis." That’s ad hominem science.

Brain rhythms in cognition -- controversies and future directions

arxiv.org/abs/2507.15639

#neuroscience

25.07.2025 15:25 — 👍 12 🔁 2 💬 0 📌 0

Delighted to have our newest paper out in #Jneurosci! We looked at how much a single cell contributes to an auditory-evoked EEG signal. Big thanks to my co-authors Ira Kraemer, Christine Köppl, Catherine Carr and Richard Kempter (none of whom are on Bsky). Here’s how: (1/13)

bsky.app/profile/sfnj...

28.06.2025 14:18 — 👍 21 🔁 6 💬 3 📌 0

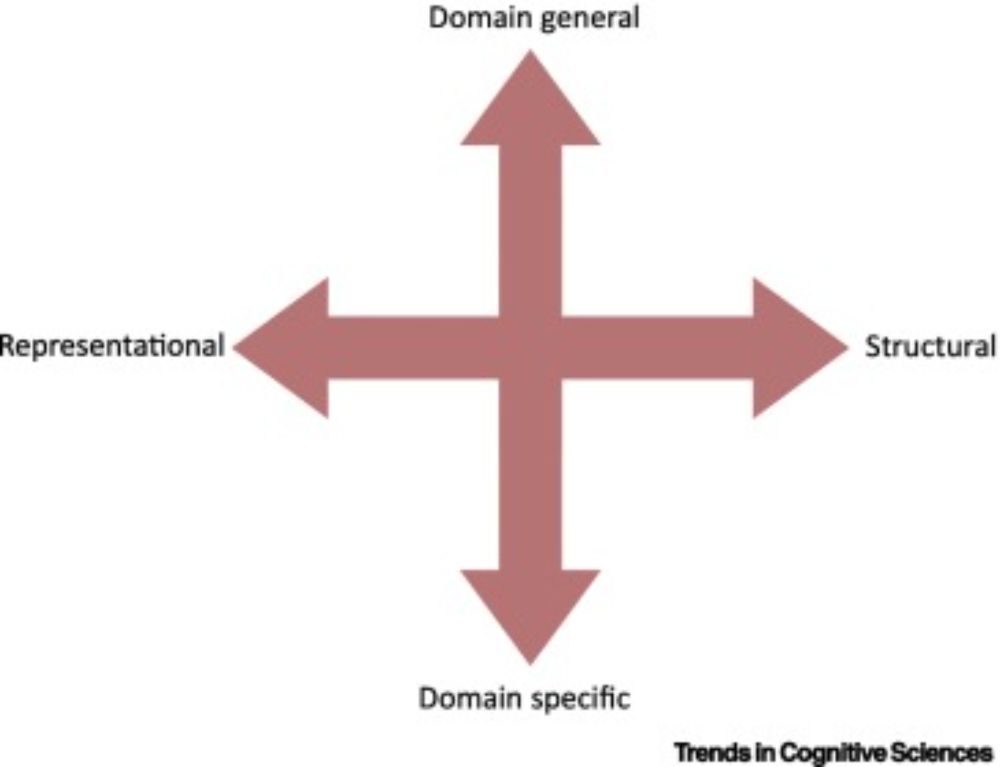

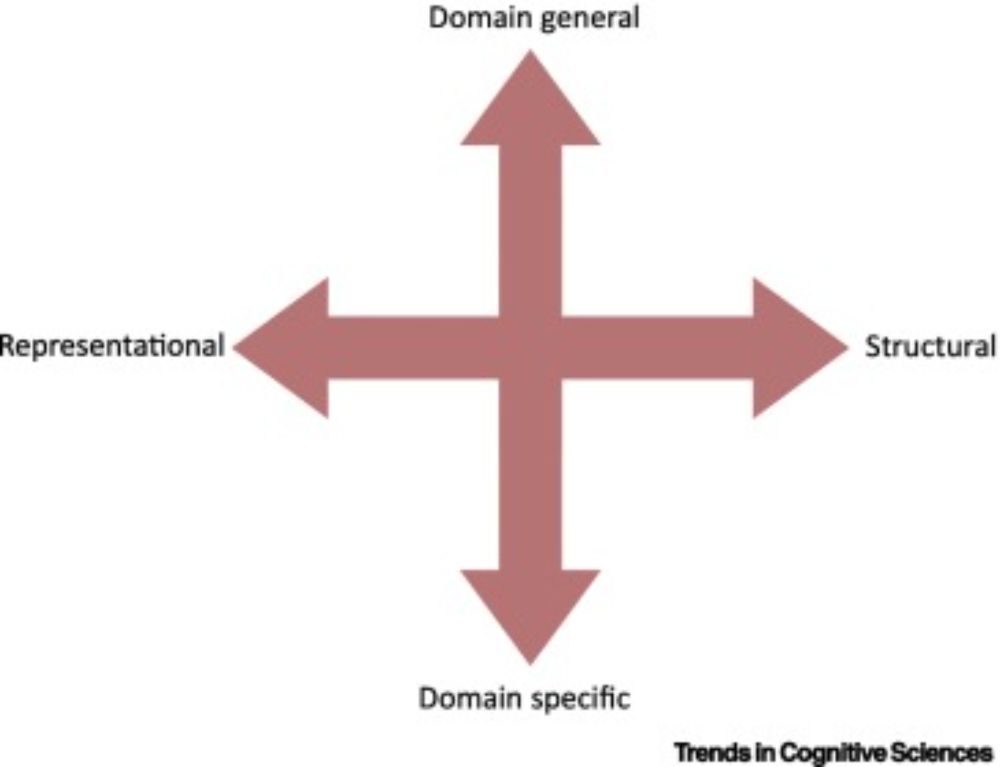

Constructing language: a framework for explaining acquisition

Explaining how children build a language system is a central goal of research in language acquisition, with broad implications for language evolution, adult language processing, and artificial intelli...

Children are incredible language learning machines. But how do they do it? Our latest paper, just published in TICS, synthesizes decades of evidence to propose four components that must be built into any theory of how children learn language. 1/

www.cell.com/trends/cogni... @mpi-nl.bsky.social

27.06.2025 05:19 — 👍 154 🔁 58 💬 9 📌 12

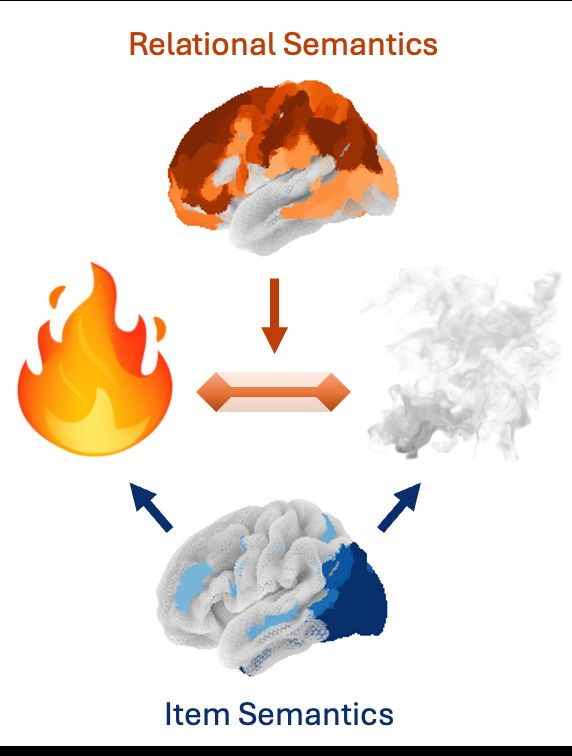

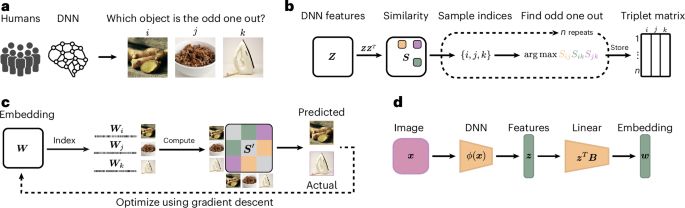

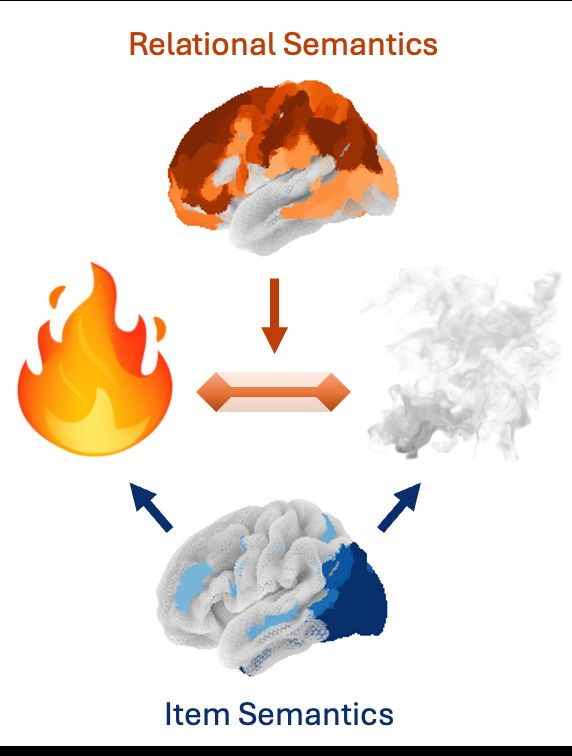

🚨 New preprint 🚨

Prior work has mapped how the brain encodes concepts: If you see fire and smoke, your brain will represent the fire (hot, bright) and smoke (gray, airy). But how do you encode features of the fire-smoke relation? We analyzed fMRI with embeddings extracted from LLMs to find out 🧵

24.06.2025 13:49 — 👍 32 🔁 8 💬 1 📌 2

In what way is the frontoparietal network domain general? We show it uses the same neural resources to represent rules in auditory and visual tasks but does so with independent codes doi.org/10.1162/IMAG..., thanks to A Rich, D Moerel, @linateichmann.bsky.social, J Duncan @alexwoolgar.bsky.social

24.06.2025 09:27 — 👍 13 🔁 5 💬 1 📌 1

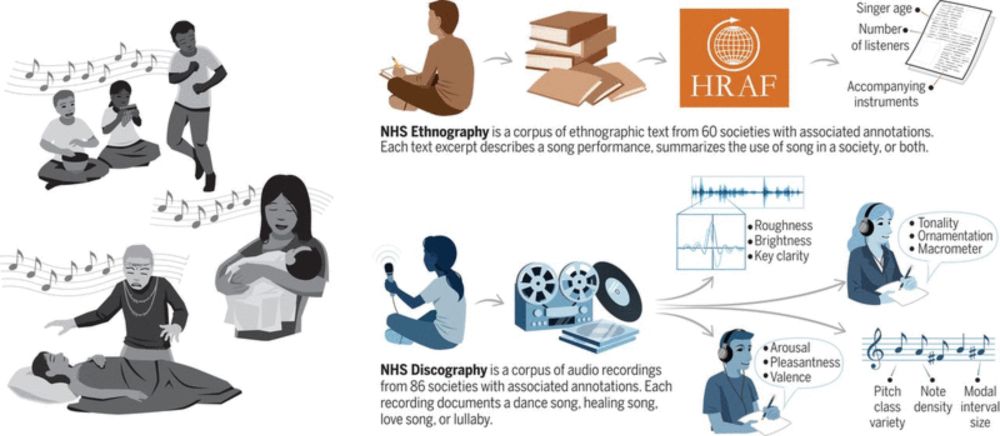

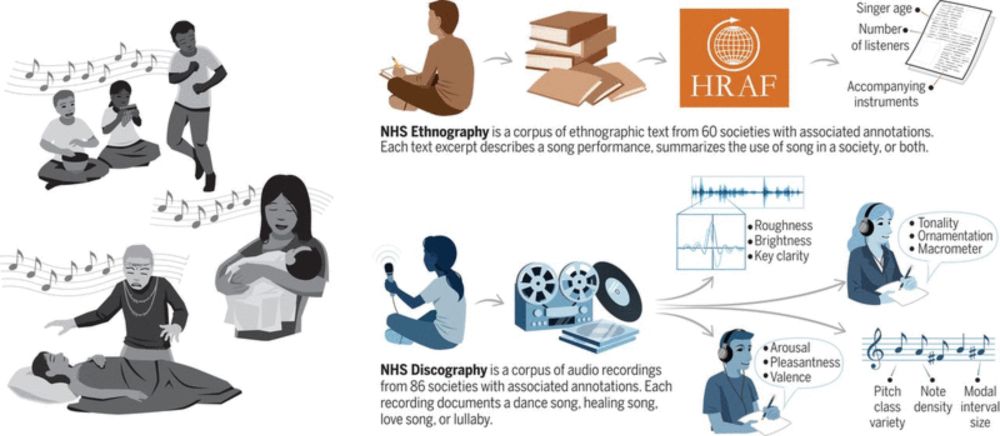

Universality and diversity in human song

Songs exhibit universal patterns across cultures.

Music is universal. It varies more within than between societies and can be described by a few key dimensions. That’s because brains operate by using the raw materials of music: oscillations (brainwaves).

www.science.org/doi/10.1126/...

#neuroscience

23.06.2025 11:38 — 👍 39 🔁 20 💬 4 📌 1

🌍 Paris Brain Institute is an international scientific and medical research centre.

🇫🇷 The Institut du Cerveau is a scientific and medical research centre.

👉 https://parisbraininstitute.org/

Post-doc in Computational Cognitive Science at Leiden & Amsterdam University

The premier research center for #ComplexSystems #science.

santafe.edu

linktr.ee/sfiscience

Mentalab Explore Pro

High precision mobile ExG

mentalab.com

Assistant Professor @ Vanderbilt Otolaryngology (ENT) + Psych/Human Development. Co-director @ Vanderbilt Music Cognition Lab. Interdisciplinary science of musicality, language, hearing, development. Views mine

CNRS Researcher at CRNL. Research Specialist at Karolinska Institutet, Stockholm. Studying the prefrontal cortex and cognition in rodents. Electronic musician.

lab: https://www.lab.pierrelemerre.com/

personal webpage: https://www.pierrelemerre.com/

We are a vibrant research-intensive Division with expertise in cognitive and clinical neuroscience, culture and evolution, developmental, and social psychology based at Brunel University of London 🎓🧠🧬🧪

LINK TREE: https://linktr.ee/brunelpsyc

Assistant Professor in Experimental Psychology and Researcher on Psychophysics and Visual Perception.

Avid reader of non-fiction.

Amateur astrophotographer, fencer, and musician.

https://bids.neuroimaging.io/

Distinguished Prof of Philosophy and Cognitive Science, Guggenheim Fellow, Author of The Unity of Perception (OUP 2018), http://tinyurl.com/2p8ttuux

perception, cognition, reflexivity in biological and AI systems.🤖🧠👩🔬🧪

http://susannaschellenberg.org

Psycholinguist + sociolinguist interested in multilingualism, L3/Ln acquisition and heritage and community languages • 🇦🇺➞🇳🇴 ➞🇸🇬

Cohost of @whenlanguagesmeet.bsky.social

🧠👄🎙💻🔍

www.chloecastle.com

Assistant Professor at the Center for Cognitive Medicine, Vanderbilt University Medical Center. he/him/his

Alzheimer's | Cognition | EEG | 🇦🇺

https://www.vumc.org/ccm/

Neuroscientist interested in (laminar) MEG, quantitative MRI, OPMs, speech rhythms, dance and open science

We study cognitive neuroscience, in particular perception, attention, memory, and sleep. Part of the CRNL. https://pam-lyon.cnrs.fr

Research group @mpicbs.bsky.social in Leipzig 🧠 investigating plasticity in cognitive networks 🪢 using neuroimaging (e.g. fMRI) and non-invasive brain stimulation (e.g. TMS).

We're on a mission to invent ways to repair or prevent damage to the hearing and balance systems.

PostDoc at BAND-lab (PI: Sonja Kotz), Maastricht, NL

Rhythms in the body, brain and environment

Global neuroscience association: supporting neuroscience education, research & outreach

IBRO Journals: Neuroscience & IBRO Neuroscience Reports @ibrojournals.bsky.social

Join us in Cape Town for #IBRO2027!

More information: ibro.org

Computational Neurobiologist from Sydney, Australia. https://shine-lab.org. Banner image from https://www.gregadunn.com.

This is the official Bluesky account for the School of Psychology, Trinity College Dublin, the University of Dublin. For more see https://www.tcd.ie/psychology/