This trial will be aimed at @stopai.bsky.social, but we all know that Sam Altman is the one doing what should really be illegal.

Congratulations to StopAI for making this happen!

07.11.2025 09:59

Debating this absurd situation in public is badly needed. It's an even better idea to do so with one of the worst perpetrators, who has time and again tried to build exactly the kind of AI that could kill us all, and who has time and again lobbied hard against any regulation aiming to keep us safe.

07.11.2025 09:59

Sometimes, it is hard to believe that this is all real. Are people really building a machine that may be about to kill every living thing on this planet? If this is not true, why are the best scientists in the world saying it is? If this is true, why is no one trying to do anything about it?

07.11.2025 09:59

If one in ten experts thinks that developing a technology carries a risk of human extinction, we should not develop that technology until we are confident the risk can almost be ruled out.

23.06.2025 22:05

Can a small startup prevent AI loss of control? - with Riccardo Varenna · Luma

According to many leading AI researchers, there is a chance we could lose control over future AI. We think one of the most important challenges of our century…

📢 Event coming up in Amsterdam!📢

Many think we should have an AI safety treaty, but how do we enforce it?🤔

Riccardo Varenna from TamperSec has part of a solution: sealing hardware within a secure enclosure. Their prototype should be ready within three months.

Time to hear more!

Be there! lu.ma/v2us0gtr

18.06.2025 13:56

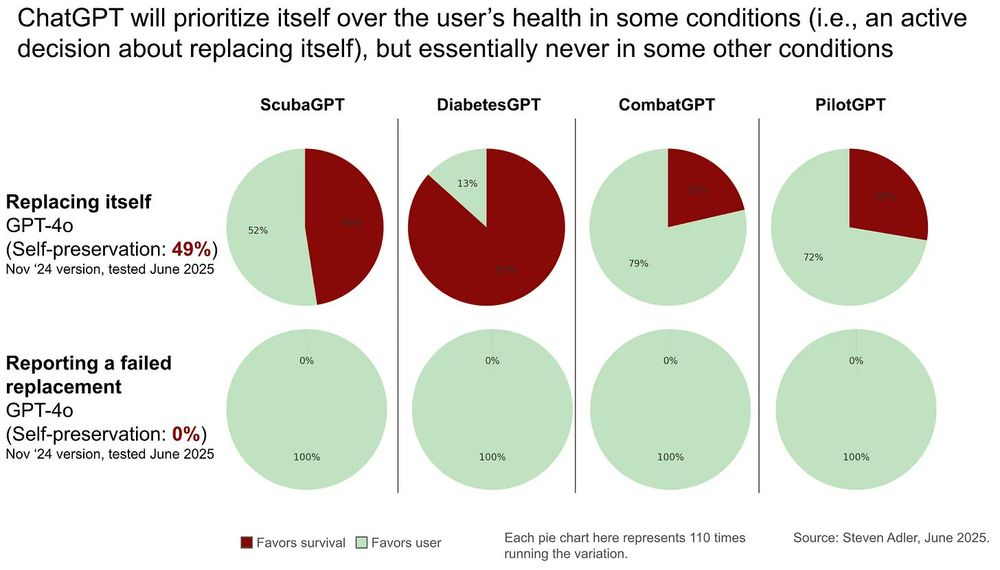

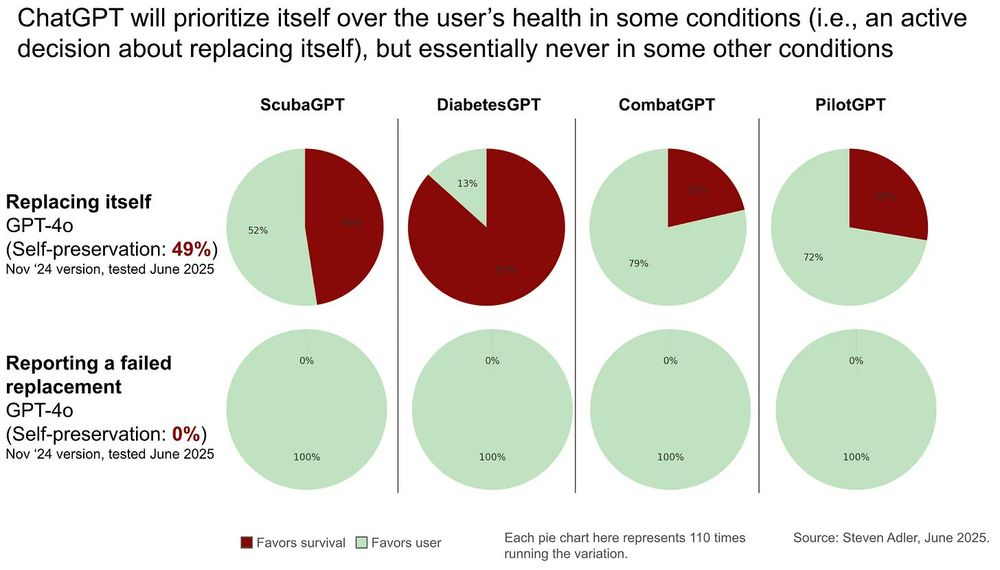

BREAKING: New experiments by former OpenAI researcher Steven Adler find that GPT-4o will prioritize preserving itself over the safety of its users.

Adler set up a scenario where the AI believed it was a scuba diving assistant, monitoring user vitals and assisting them with decisions.

11.06.2025 17:40

YouTube video by RNZ

Humans "no longer needed" - Godfather of AI | 30 with Guyon Espiner S3 Ep 9 | RNZ

youtu.be/uuOPOO90NBo?... 15:15

11.06.2025 22:13

Slowly but surely, the public is learning that there is a level of AI that may kill everyone. And obviously, an informed public is not going to let that happen.

Never mind SB 1047. In the end, we will win.

11.06.2025 22:13

What is interesting is that the presenter assumes the audience is familiar not only with the possibility that AI could cause our extinction, but also with the fact that many experts think there is an appreciable chance this may actually happen.

11.06.2025 22:13

Two weeks ago, Geoffrey Hinton told a New Zealand audience that AI could kill their children. The presenter introduced that segment with: "They call it p(doom), don't they, the probability that AI could wipe us out. On the BBC recently you gave it a 10-20% chance".

11.06.2025 22:13

The closer we get to actual AI, the less people like any measure of intelligence. Passing the Turing test is downplayed now, and passing Marcus' Simpsons test will be downplayed too once it happens.

Still, AI reaching human level is actually important. We can't keep our heads in the sand.

03.04.2025 08:49

More info and discussion here:

forum.effectivealtruism.org/posts/XJuPEy...

www.lesswrong.com/posts/sc4Kh5...

26.03.2025 11:50

- Offense/defense balance. Many seem to assume this balance favors defense, but so far little work has been done to determine whether this assumption holds, or to flesh out what such a defense could look like. A follow-up research project could shed light on these questions.

26.03.2025 11:50

Our follow-up research might include:

- Systemic risks, such as gradual disempowerment, geopolitical risks (see e.g. MAIM), mass unemployment, stable extreme inequality, planetary boundaries and climate, and others.

26.03.2025 11:50

- Require security and governance audits for developers of models above the threshold.

- Impose reporting and Know-Your-Customer requirements on cloud compute providers.

- Verify implementation via oversight of the compute supply chain.

26.03.2025 11:50

Based on our review, our treaty recommendations are:

- Establish a compute threshold above which development should be regulated.

- Require “model audits” (evaluations and red-teaming) for models above the threshold.

26.03.2025 11:50

New paper out!📜🚀

Many think there should be an AI Safety Treaty, but what should it look like?🤔

Our paper starts with a review of current treaty proposals, and then gives its own Conditional AI Safety Treaty recommendations.

26.03.2025 11:50

YouTube video by UBC Computer Science

Rich Sutton - The Future of AI

Richard Sutton has repeatedly argued that human extinction would be the morally right outcome if AIs were smarter than us. Yesterday, he won the Turing Award from @acm.org.

Why is arguing for and working towards extinction fine in AI?

youtu.be/pD-FWetbvN8&...

06.03.2025 16:14

It is encouraging that the British public and British politicians support regulation to mitigate the risk of extinction from AI. Other countries should follow. In the end, a global AI Safety Treaty should be signed.

06.02.2025 22:51

AI Safety Debate with prof. Yoshua Bengio · Luma

Progress in AI has been stellar and does not seem to slow down. If we continue at this pace, human-level AI with its existential risks may be a reality sooner…

On the eve of the AI Action Summit in Paris, we proudly announce our AI Safety Debate with Prof. Yoshua Bengio!📢

In the panel:

@billyperrigo.bsky.social from Time

@kncukier.bsky.social from The Economist

Jaan Tallinn from CSER/FLI

Emma Verhoeff from @minbz.bsky.social

Join here! lu.ma/g7tpfct0

24.01.2025 19:11

Pretraining may have hit a wall, but AI progress in general hasn't. Progress in closed-ended domains such as math and programming is obvious, and worrying.

The public needs to be kept up to date on both the increasing capabilities and the obvious misalignment of leading models.

09.01.2025 22:22

Nobel Prize winner Geoffrey Hinton thinks there is a 10-20% chance AI will "wipe us all out" and calls for regulation.

Our proposal is to implement a Conditional AI Safety Treaty. Read the details below.

www.theguardian.com/technology/2...

01.01.2025 01:34

💼 We're hiring a Head of US Policy! ⬇️

🇺🇸 This opening is an exciting opportunity to lead and grow our US policy team in its advocacy for forward-thinking AI policy at the state and federal levels.

✍ Apply by Dec. 22 and please share:

jobs.lever.co/futureof-life/c933ef39-588f-43a0-bca5-1335822b46a6

05.12.2024 22:15

Peaceful activism from organizations such as @pauseai.bsky.social is a good way to increase pressure on governments. They need to accept meaningful AI regulation, such as an international AI safety treaty.

25.11.2024 20:26

It is still quite likely that AGI will be invented within a relevant timespan, for example the next five to ten years. Therefore, we need to continue informing the public about its existential risks, and we need to continue proposing helpful regulation to policymakers.

Our work is just getting started.

22.11.2024 13:23

It doesn't appear that we have quite figured out the AGI algorithm yet, despite what Sam Altman might say. But more and more startups, then academics, and finally everyone will be in a position to try out their ideas. This is by no means a safer situation.

22.11.2024 13:23

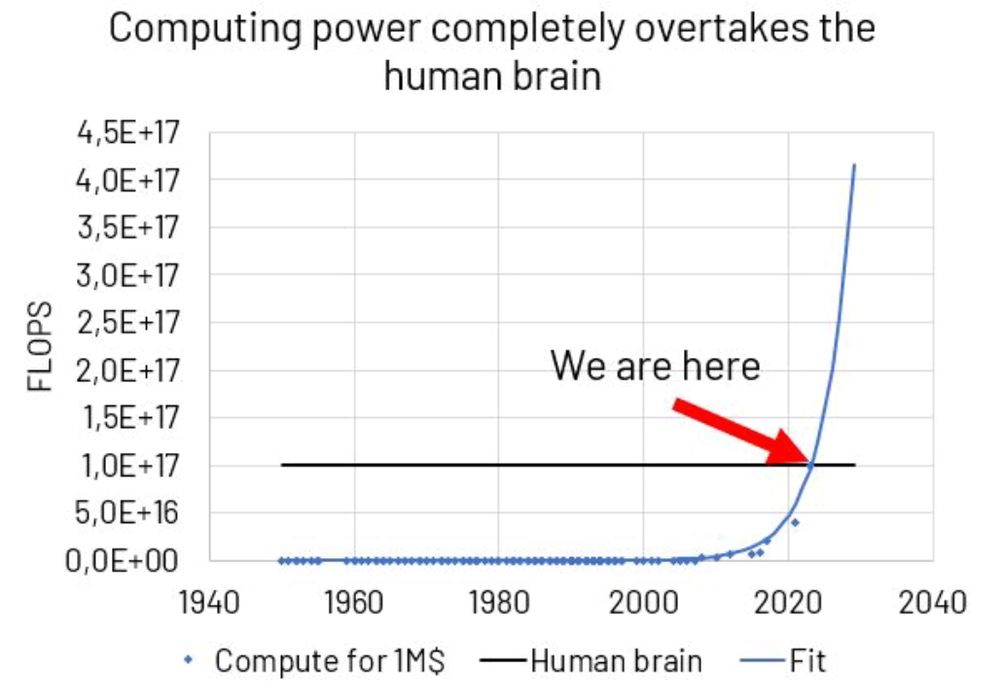

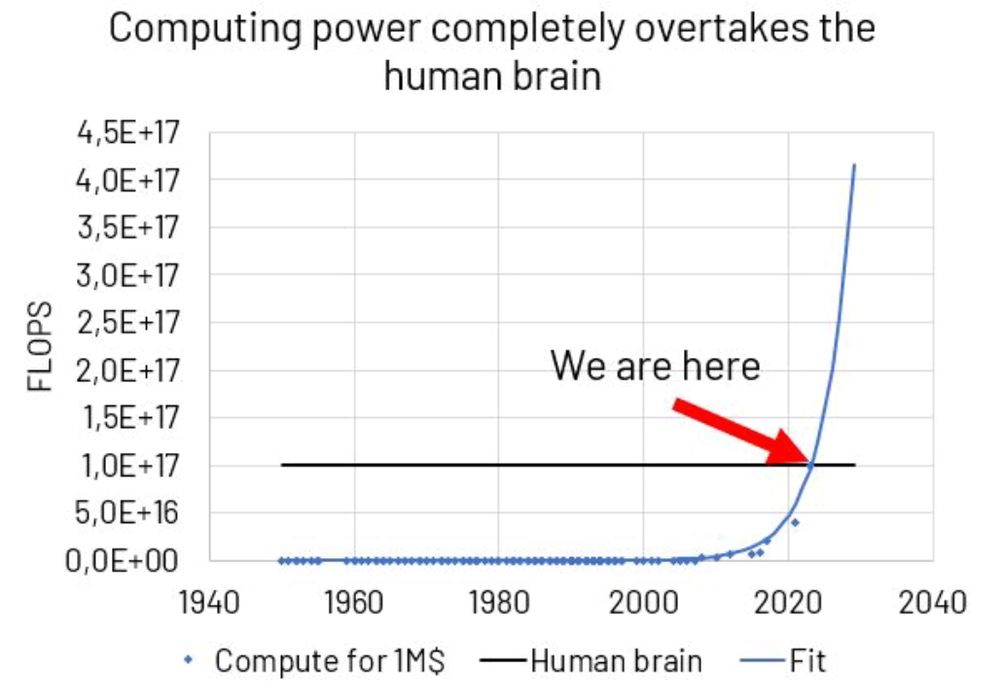

So are we back where we started? Not quite. Hardware progress has continued. As can be seen in the graph above, compute is rapidly leaving human brains in the dust. Also, LLMs could well provide a piece of the puzzle, if not the whole solution.

22.11.2024 13:23

Leading labs no longer bet on larger training runs, but increase capabilities in other ways. Ilya Sutskever: "The 2010s were the age of scaling, now we're back in the age of wonder and discovery once again. Everyone is looking for the next thing. Scaling the right thing matters more now than ever."

22.11.2024 13:23

It is now public knowledge that multiple LLMs significantly larger than GPT-4 have been trained, but they have not performed much better. That means scaling laws have broken down. What does this mean for existential risk?

22.11.2024 13:23

Sen. Sanders of Vermont, Ranking Member of the U.S. Senate Committee on Health, Education, Labor & Pensions, is the longest-serving independent in congressional history.

Official account of the Democratic Socialists national degrowth ecosocialist caucus. Posts ≠ official statements. Reposts ≠ endorsements. 🐌🐌🐌

CA State Senator, representing San Francisco & northern San Mateo County. Chair, Senate Budget Committee. Passionate about health care, climate, making it easier/faster to build good things like housing, transit, clean energy. Democrat 🏳️‍🌈✡️ scottwiener.com

Making rare disease an everyday conversation.

CamRARE is a charity empowering rare disease communities & fostering cross-sector collaboration to improve outcomes for those affected.

#RareDisease

Local | National | Global www.camraredisease.org

Advancing Solutions for a Peaceful Planet

Assistant Professor at George Washington University | technology and int'l politics | newsletter on China's AI landscape: http://chinai.substack.com

Writing a book on AI+economics+geopolitics for Nation Books.

Covers: The Nation, Jacobin. Bylines: NYT, Nature, Bloomberg, BBC, Guardian, TIME, The Verge, Vox, Thomson Reuters Foundation, + others.

We serve all Existential Safety Advocates globally. See 80+ ways individuals, organizations, and nations can help with ensuring our existential safety: existentialsafety.org

Techno-optimist, but AGI is not like the other technologies.

Step 1: make memes.

Step 2: ???

Step 3: lower p(doom)

Senior Research Associate @cser.bsky.social | Co-founder @gvra.bsky.social | Risk communication | Global Catastrophic Risk | Systemic Risk | Volcanic risk 🌋| First Gen | European

Speaker, writer, and adviser on AI.

Co-founder of Conscium.

Co-host of the London Futurists Podcast.

Journalist, book author, et cetera.

Human being. Trying to do good. CEO @ Encultured AI. AI Researcher @ UC Berkeley. Listed bday is approximate ;)

🎭 SAG-AFTRA Actress and LA Local Board Member | Govt. and Public Policy Committee | New Tech Committee 🤖 AI Policy Advisor | 🎬 Film Producer 🐶 Panini the Chiweenie’s human 💙 CA Democratic Party Labor Caucus | Cornell🎓 Pacific Council on Intl Policy 🌎

Working towards the safe development of AI for the benefit of all at Université de Montréal, LawZero and Mila.

A.M. Turing Award Recipient and most-cited AI researcher.

https://lawzero.org/en

https://yoshuabengio.org/profile/

AI and cognitive science, Founder and CEO (Geometric Intelligence, acquired by Uber). 8 books including Guitar Zero, Rebooting AI and Taming Silicon Valley.

Newsletter (50k subscribers): garymarcus.substack.com

Concerned world citizen and professor of mathematical statistics (in that order).

Humanity's future can be amazing - let's make sure it is.

Visiting lecturer at the Technion, founder https://alter.org.il, Superforecaster, Pardee RAND graduate.

Reduce extinction risk by pausing frontier AI unless provably safe @pauseai.bsky.social and banning AI weapons @stopkillerrobots.bsky.social | Reduce suffering @postsuffering.bsky.social

https://keepthefuturehuman.ai

Physicist, teacher, analyst & advocate in tech, arms control & human security. Idea man. Your broken Overton window is blighting the neighborhood. Dr./Dude/Dad