about | ICLR Blogposts 2026


Call for Blog Posts: submission deadline Dec. 1st, 2025, 23:59 AoE.

All information is now available: iclr-blogposts.github.io/2026/about/

Please RT!

Organizers:

@schwinnl.bsky.social @busycalibrating.bsky.social @jonkhler.argmin.xyz @n-gao.bsky.social @mhrnz.bsky.social & myself

22.09.2025 07:47

Blog posts are a great medium for sharing ML research. If you have new intuitions on past work, have noticed key implementation details for reproducibility, have insights into the societal implications of AI, or have an interesting negative result, consider writing and submitting a blog post.

22.09.2025 07:45

📣 Call for Blog Posts at #ICLR2026 @iclr_conf

Following the success of the past iterations, we are opening the Call for Blog Posts 2026!

iclr-blogposts.github.io/2026/about/#...

Please retweet!

22.09.2025 07:44

Fantastic opportunity to join our team at the European Centre for Medium-Range Weather Forecasts (ECMWF) as an ML Scientist working on Atmospheric Composition/Air Quality Forecasting: <https://jobs.ecmwf.int/Job/JobDetail?JobId=10318>

Write me if you have any questions!

16.06.2025 12:42

If you are at @iclr-conf.bsky.social and are interested in making your RLHF really fast, come find @mnoukhov.bsky.social and me at poster #582.

25.04.2025 07:58

I am at ICLR this year, please reach out if you would like to have a chat.

24.04.2025 04:00

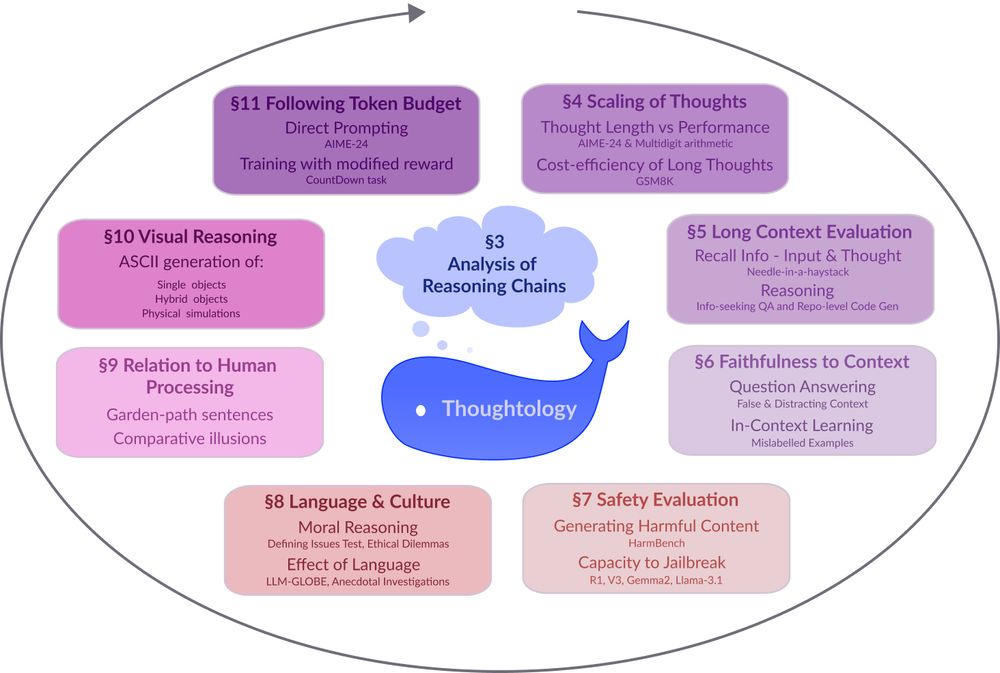

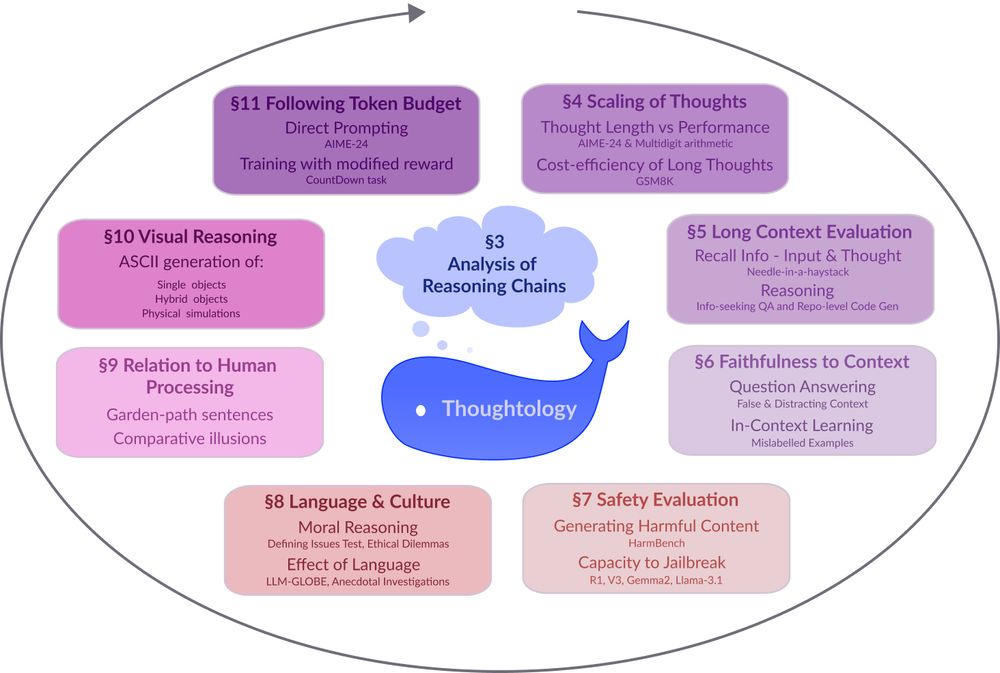

A circular diagram with a blue whale icon at the center. Around a central cloud, §3 Analysis of Reasoning Chains, eight research areas on LLM reasoning are shown as colored rectangular boxes arranged in a circle: §4 Scaling of Thoughts (discussing thought length and performance metrics), §5 Long Context Evaluation (focusing on information recall), §6 Faithfulness to Context (examining question answering accuracy), §7 Safety Evaluation (assessing harmful content generation and jailbreak resistance), §8 Language & Culture (exploring moral reasoning and language effects), §9 Relation to Human Processing (comparing cognitive processes), §10 Visual Reasoning (covering ASCII generation capabilities), and §11 Following Token Budget (investigating direct prompting techniques). Arrows connect the sections in a clockwise flow, suggesting an iterative research methodology.

Models like DeepSeek-R1 🐋 mark a fundamental shift in how LLMs approach complex problems. In our preprint on R1 Thoughtology, we study R1’s reasoning chains across a variety of tasks, investigating its capabilities, limitations, and behaviour.

🔗: mcgill-nlp.github.io/thoughtology/

01.04.2025 20:06

Our work on Asynchronous RLHF was accepted to #ICLR2025! (I was so excited to announce it, I forgot to say I was excited)

Used by @ai2.bsky.social for OLMo-2 32B 🔥

New results show ~70% speedups for LLM + RL math and reasoning 🧠

🧵 below, or hear my DLCT talk online on March 28!

18.03.2025 20:45

Thanks again to my collaborators:

@vwxyzjn.bsky.social

@sophie-xhonneux.bsky.social

@arianh.bsky.social

Rishabh and Aaron, who have not yet migrated 🦋

DMs open 📲 Let's chat about everything LLM + RL @ ICLR, and check out:

Paper 📰 arxiv.org/abs/2410.18252

Code 🧑💻 github.com/mnoukhov/asy...

18.03.2025 20:45

Come to our Spotlight Poster #4702!

East Exhibition Hall A-C

12.12.2024 19:02

Here is a one-minute summary of @sophie-xhonneux.bsky.social's paper "Efficient Adversarial Training in LLMs with Continuous Attacks". Come see the spotlight poster at

@neuripsconf.bsky.social today: Poster Session 3 East, #4702.

12.12.2024 18:58

I will be at NeurIPS! Would love to chat about research!

Especially about fine-tuning LLMs, generative models more generally, and reasoning!

I will be presenting "Efficient Adversarial Training in LLMs with Continuous Attacks" (spotlight) at the morning poster session on Thursday!

10.12.2024 00:20

ML/NLP PhD student at Queen Mary, previously Imperial College MSc | 🇬🇧 | Google DeepMind Scholar

https://majapavlo.github.io/

(She/her)

AI Researcher at the Samsung SAIT AI Lab 🐱💻

I build generative models for images, videos, text, tabular data, NN weights, molecules, and now video games!

PhD-ing at McGill Linguistics + Mila, working under Prof. Siva Reddy. Mostly computational linguistics, with some NLP; habitually disappointed Arsenal fan

Restless neuroscientist and occasional artist.

PhD candidate at Université de Montréal.

Interests include: Emotion, critical psychiatry, trauma, psychophysiology, history and phil of science, painting, drawing, music, game development

dariusliutas.com

Mathematician at UCLA. My primary social media account is https://mathstodon.xyz/@tao . I also have a blog at https://terrytao.wordpress.com/ and a home page at https://www.math.ucla.edu/~tao/

Postdoc at Meta FAIR, Comp Neuro PhD @McGill / Mila. Looking at the representation in brains and machines 🔬 https://dongyanl1n.github.io/

Department of Meteorology at the University of Reading. Undergraduate, MSc and PhD courses in Weather, Climate and more plus internationally top notch research: https://research.reading.ac.uk/meteorology/

Professor @UniofReading | OBE @UniRdg_water Co-Director | floods, heatwaves, natural hazards | hydrology | climate impact | ensemble forecasts | disaster risk | science communication

Climate scientist at the National Centre for Atmospheric Science, University of Reading | IPCC AR6 Lead Author | MBE | Views own | https://edhawkins.org

Warming Stripes: http://www.ShowYourStripes.info

I lead Cohere For AI. Formerly Research at Google Brain. ML Efficiency, LLMs, @trustworthy_ml.

Faculty at the ELLIS Institute Tübingen and Max Planck Institute for Intelligent Systems. Leading the AI Safety and Alignment group. PhD from EPFL supported by Google & OpenPhil PhD fellowships.

More details: https://www.andriushchenko.me/

Red-Teaming LLMs / PhD student at ETH Zurich / Prev. research intern at Meta / People call me Javi / Vegan 🌱

Website: javirando.com

Professor and Head of Machine Learning Department at Carnegie Mellon. Board member OpenAI. Chief Technical Advisor Gray Swan AI. Chief Expert Bosch Research.

Senior Research Fellow @ ucl.ac.uk/gatsby & sainsburywellcome.org

{learning, representations, structure} in 🧠💭🤖

my work 🤓: eringrant.github.io

not active: sigmoid.social/@eringrant @eringrant@sigmoid.social, twitter.com/ermgrant @ermgrant

So far I have not found the science, but the numbers keep on circling me.

Views my own, unfortunately.

glaciologist @ethzurich and @wslresearch.bsky.social, head of GLAMOS, passionate about mountains

PhD fellow in XAI, IR & NLP

✈️ Mila - Quebec AI Institute | University of Copenhagen 🏰

#NLProc #ML #XAI

Recreational sufferer

Blog: https://sander.ai/

🐦: https://x.com/sedielem

Research Scientist at Google DeepMind (WaveNet, Imagen 3, Veo, ...). I tweet about deep learning (research + software), music, generative models (personal account).

Assist. prof. at Université de Montreal and Mila · machine learning for science · climate change and health · open science · he/él/il #PalestinianLivesMatter 🍉

alexhernandezgarcia.github.io