wooahh! need to give this a read 🤓

08.08.2025 19:26 — 👍 2 🔁 1 💬 0 📌 0

APA PsycNet

Ah yeah most of the work im familiar with skews toward generative models. e.g. like this classic piece, which captures various interactions between Stroop conditions, learning effects, etc.

psycnet.apa.org/record/1990-...

02.07.2025 12:49 — 👍 1 🔁 0 💬 0 📌 0

APA PsycNet

Probably not what you are referring to, but the Stroop task is our main example here: psycnet.apa.org/fulltext/202...

02.07.2025 12:28 — 👍 1 🔁 0 💬 1 📌 0

simply amazing

27.06.2025 01:15 — 👍 1 🔁 0 💬 0 📌 0

yeah this is a great set

13.05.2025 00:34 — 👍 1 🔁 0 💬 0 📌 0

Wish I could have made it in person!

05.05.2025 21:41 — 👍 1 🔁 0 💬 0 📌 0

i have now vented on reddit and bsky so maybe i will feel better about it now

23.04.2025 02:47 — 👍 0 🔁 0 💬 1 📌 0

last of us S2 E2, more like last episode amirite

23.04.2025 02:37 — 👍 0 🔁 0 💬 1 📌 0

the moment some unintuitive simulation and empirical results finally click and you know what needs to be done 🤌

a feeling vibe coders can only dream of

21.04.2025 23:12 — 👍 3 🔁 0 💬 0 📌 0

tariffs amirite

21.04.2025 15:51 — 👍 2 🔁 0 💬 0 📌 0

Thanks for the endorsement! Awesome to hear it's been influential to your work 😁

And yes the KL finding is super interesting by itself, happy to hear someone found it buried in the supplement ha

17.04.2025 19:46 — 👍 1 🔁 0 💬 1 📌 0

Thank you! Glad you have found it useful 🤓

17.04.2025 19:44 — 👍 1 🔁 0 💬 0 📌 0

it was horrible, there were 10+ papers based on the initial preprint/blog that were in print before this one eventually made it there 😅

17.04.2025 15:22 — 👍 1 🔁 0 💬 0 📌 0

SIX YEARS after the initial blog post, this paper is finally published.. what a wild ride

- the blog: bit.ly/3GbOqQa

- original tweet thread: x.com/Nate__Haines...

- published (open access) paper: doi.org/10.1037/met0...

17.04.2025 13:22 — 👍 33 🔁 9 💬 2 📌 2

Yeah I've found LLM tech good for stuff that is mostly boilerplate (e.g. some general software development stuff), but when it comes to modeling work that is necessarily bespoke, they are quite bad

14.04.2025 15:58 — 👍 2 🔁 0 💬 0 📌 0

sounds like a black mirror episode

12.04.2025 21:21 — 👍 2 🔁 0 💬 0 📌 0

That's called MDMA

11.04.2025 12:07 — 👍 1 🔁 0 💬 0 📌 0

But I have not read into this lit much tbh, so I have a biased sampling of what people think 😁

08.04.2025 00:01 — 👍 3 🔁 0 💬 0 📌 0

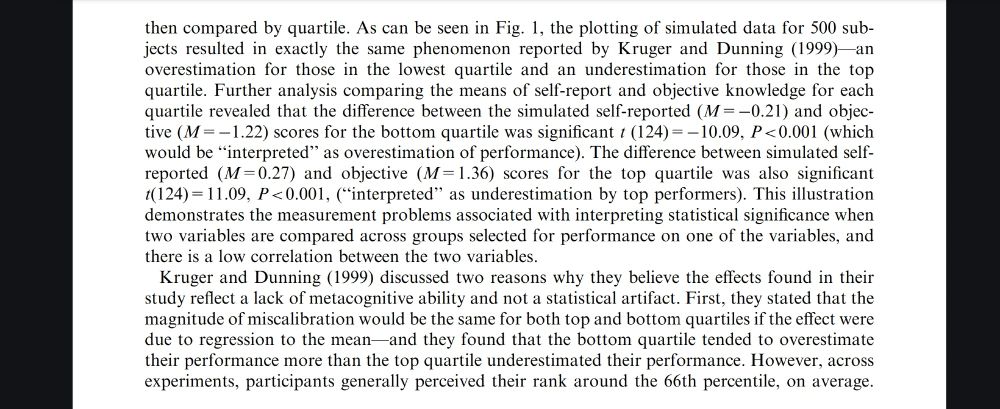

"Regressions to the mean = statistical artifact = the effect is not real" is my read based on papers like this

07.04.2025 23:54 — 👍 1 🔁 0 💬 1 📌 0

My read is quite different, e.g. www.sciencedirect.com/science/arti...

07.04.2025 23:53 — 👍 0 🔁 0 💬 1 📌 0

hey we grow good bourbon and that's food

07.04.2025 22:03 — 👍 1 🔁 0 💬 0 📌 0

Yes that's exactly what I mean—the claim that, because regression to the mean captures the effect, it is a statistical artifact and not something with a psychological explanation

06.04.2025 22:28 — 👍 0 🔁 0 💬 1 📌 0

I think they could potentially be good items for the classroom! It is a fun item set at least, although not sure about calibration as I really only looked at quartiles

06.04.2025 16:55 — 👍 1 🔁 0 💬 0 📌 0

Yeah IMO, I think the ideal design would be something within-person such that we could sample people across their individual skill distributions so that we could understand if the effect arises at the person level

06.04.2025 16:43 — 👍 1 🔁 0 💬 0 📌 0

"That explanation ... would not be able to explain self estimated performance going up again at very low ability"

I still think it is a better explanation than the "stats artifact" explanation. The models I used are too simple on purpose—I didn't have data for fitting anything more complicated

06.04.2025 16:26 — 👍 0 🔁 0 💬 1 📌 0

I feel there is some talking in circles going on here lol character limits be damned.. my stance is that the mechanism generating the noise is not well understood, but that psych-motivated models help provide an explanation. To get more fine grained (empirically) we need better datasets

06.04.2025 16:09 — 👍 1 🔁 0 💬 0 📌 0

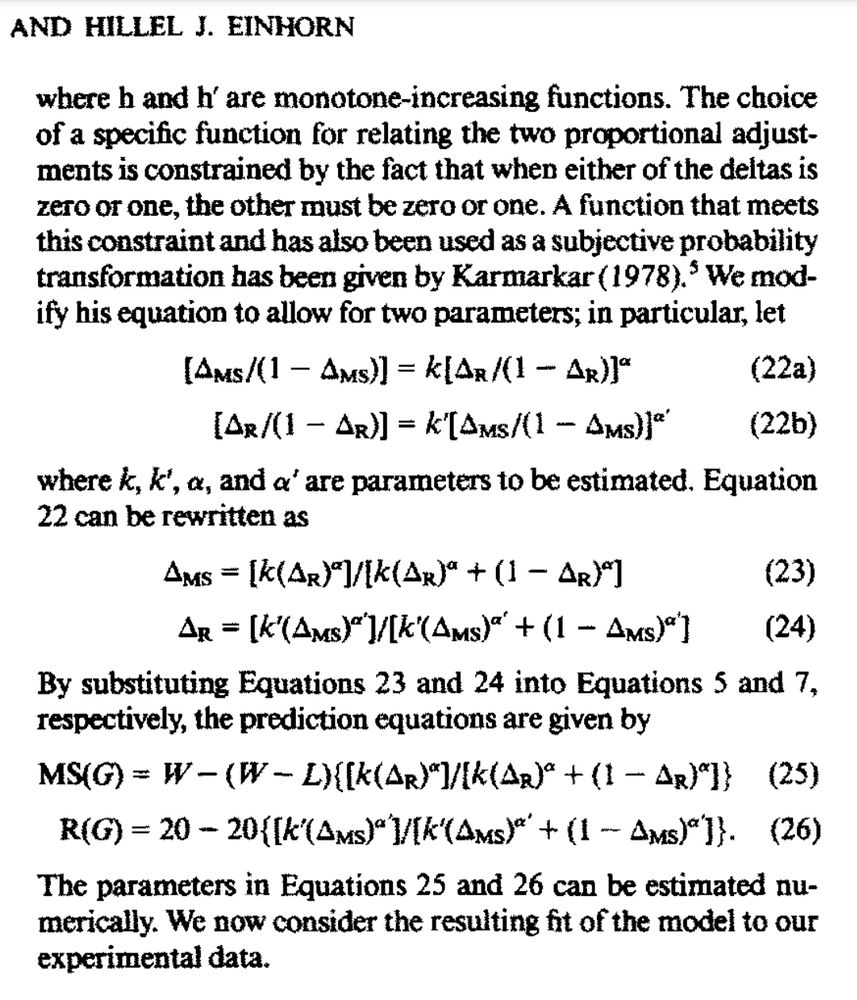

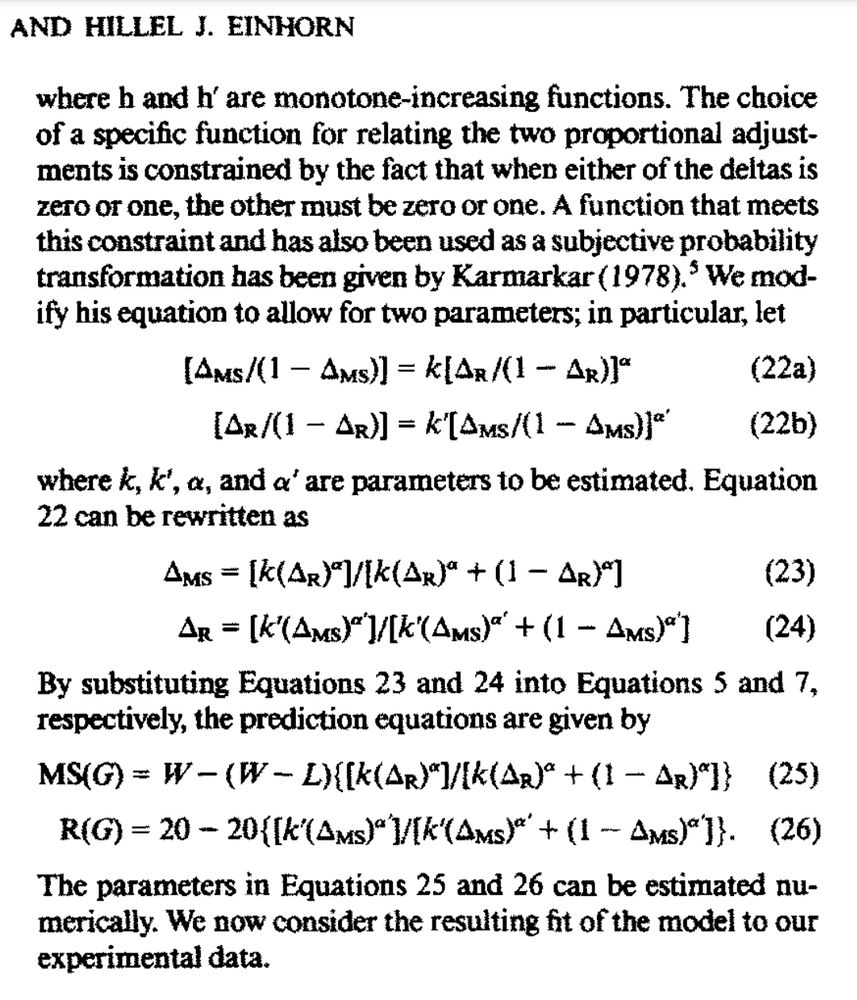

Ah yeah this form of probability distortion is generally attributed to Goldstein & Einhorn (1987). e.g. see eq ~23

sci-hub.se/https://psyc...

06.04.2025 16:00 — 👍 1 🔁 0 💬 0 📌 0

I mean snipping the quote like that is a bit misleading ..

there are of course many different ways that noise could arise. the two models have different implications re mechanisms, but the data I had available were not able to distinguish between them

06.04.2025 15:31 — 👍 0 🔁 0 💬 1 📌 0

If you build a model with these assumptions based in, you will get the DK effect. In the same way, if you simulate multivariate normal data with one mean higher than the other and a moderate covariance, you get the DK effect. IMO the former is a plausible explanation, the latter just a description

06.04.2025 14:21 — 👍 1 🔁 0 💬 1 📌 0

Clinical Psych PhD student at Notre Dame ☘️ Using EMA and comp. modeling to study suicide and NSSI

Professor (michaelinzlicht.com), Podcaster (www.fourbeers.com), Writer (www.speakandregret.michaelinzlicht.com)

Psychology prof at the University of Toronto.

Sustainability | Governance

Trust is built in complexity

Engagement ≠ Alignment

Global Healthcare Services Manager @ Hyland | Passionate about healthcare, digital health, AI & cloud | BSc, PRINCE2, MSP, L6σ Green Belt | Opinions are my own | https://linktr.ee/paulcochrane

I'm a psychometrician in the experimental field, currently doing my PhD research on the Psychometric Properties of Experimental Tasks at the Universidad Autónoma de Madrid.

PhD student at @cimcyc.bsky.social | @universidadgranada.bsky.social. Experimental psychology, #rstats and bayesian statistics, but not too much. https://franfrutos.github.io/

ML Eng. and econometrics. Lot more left-posting than normal. Some hobby-level finance

Regrettably degen trading for the next 3 months, im sorry

Views dont reflect my employer

mediocre transsexual; sydneysider; saltbush girl; former professor; credibly accused of crimes against statistics, data science, mathematical psychology, pharmacometrics, and art; old; bitter; should quit grindr; probably won't

certified big nerd | big #rstats dweeb | accidental python fan | he/him

thedatadiary.net

I am an assistant professor at the department of Social Psychology at Tilburg University's School of Social and Behavioral Sciences.

I have a website at https://vuorre.com.

All posts are posts.

AI systems, eigenadmin, financial economics PhD, ATProto fan, man with tattooed legs.

Devrel @ letta.com

Signal: https://signal.me/#eu/AQ1ajwHwgg0rabcRsdtkm9UYpdg52axiruSTFMmrFy0LR4Ds8pdH25jzjoTc2bGu

Assistant Professor at Emory Psychology || Computational clinical science of depression and anxiety || translational-lab.com

Assistant Professor @ Utah State University. Suicide, self-harm, behavior therapies, and public education. Licensed psychologist. School psychologist. 🏳️🌈

erikreinbergs.com

Asst Prof UH-Clear Lake / #RStats, Methods, & Measurement / Youth Wellbeing / #SchoolPsych / 🎸Radical🤙Behaviorism / #OpenScience / 🎞 Da Moviesh 🎥 / #DoubleBass

Social Psychologist, Data Scientist, Baseball Fan, Dog Dad, Improviser

Clinical psychology PhD candidate at Ohio State | Interested in developmental psychopathology, emotion regulation, and #rstats | Smith College '18 | she/her

Professor of Cognitive Neuroscience.

Co-director of York Neuroimaging Centre (YNiC).

Interested in memory, spatial navigation and brain imaging.

He/Him

http://www.aidanhorner.org/

Applied Scientist in Industry. Previously UCSD. Princeton PhD.

Follow me for recreational methods trash talk.

www.rexdouglass.com