At OASIS Lab, #UCLA, we are accepting applications for a PhD student who is passionate about using computational modeling, big data, and HCI to advance digital safety, responsible AI, and the study of online information ecosystems.

tinyurl.com/phdopenningu...

#PhD #AIforGood #OnlineSafety

24.11.2025 20:40 — 👍 6 🔁 2 💬 2 📌 1

Already done from LinkedIn:)

25.11.2025 04:47 — 👍 1 🔁 0 💬 0 📌 0

🚨 New preprint 🚨

Can AI agents coordinate influence campaigns without human guidance? And how does coordination arise among AI agents? In our latest research, we simulate LLM-powered AI agents acting like users on an online platform, some benign, some running an influence operation.

03.11.2025 19:46 — 👍 18 🔁 7 💬 1 📌 2

Comparison diagram showing traditional vs discourse network user representations. Left: isolated platform-specific clusters with no cross-platform connections. Right: unified network where users are connected across platforms through shared narrative engagement, revealing previously invisible cross-platform communities.

New paper + interactive dashboard on the 2024 election information ecosystem.

Building on discourse networks work with @hanshanley.bsky.social @luceriluc.bsky.social @emilioferrara.bsky.social—this lets us visualize the online landscape as a unified system, rather than isolating each platform.

20.10.2025 18:32 — 👍 6 🔁 4 💬 1 📌 0

We created a framework for auditing and characterizing the undesirable effects of alignment safeguards in LLMs that can result in censorship or information suppression. And we tested DeepSeek against potentially sensitive topics!

16.10.2025 22:44 — 👍 4 🔁 0 💬 0 📌 0

Thrilled to share that our latest paper, "Information Suppression in Large Language Models", is now published in Information Sciences!

To read more, see: www.sciencedirect.com/science/arti...

great work w/ @siyizhou.bsky.social

16.10.2025 22:44 — 👍 4 🔁 0 💬 1 📌 0

Peiran Qiu, Siyi Zhou, Emilio Ferrara: Information Suppression in Large Language Models: Auditing, Quantifying, and Characterizing Censorship in DeepSeek https://arxiv.org/abs/2506.12349 https://arxiv.org/pdf/2506.12349 https://arxiv.org/html/2506.12349

17.06.2025 09:05 — 👍 2 🔁 5 💬 0 📌 0

Amin Banayeeanzade, Ala N. Tak, Fatemeh Bahrani, Anahita Bolourani, Leonardo Blas, Emilio Ferrara, Jonathan Gratch, Sai Praneeth Karimireddy

Psychological Steering in LLMs: An Evaluation of Effectiveness and Trustworthiness

https://arxiv.org/abs/2510.04484

07.10.2025 09:43 — 👍 2 🔁 1 💬 0 📌 0

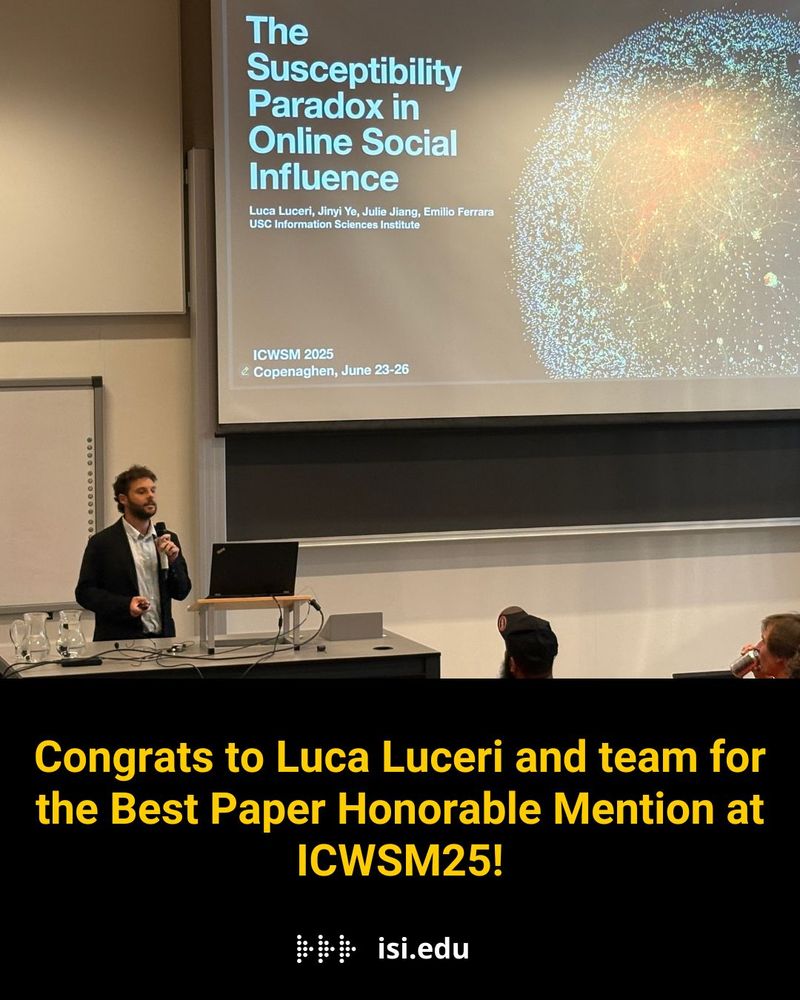

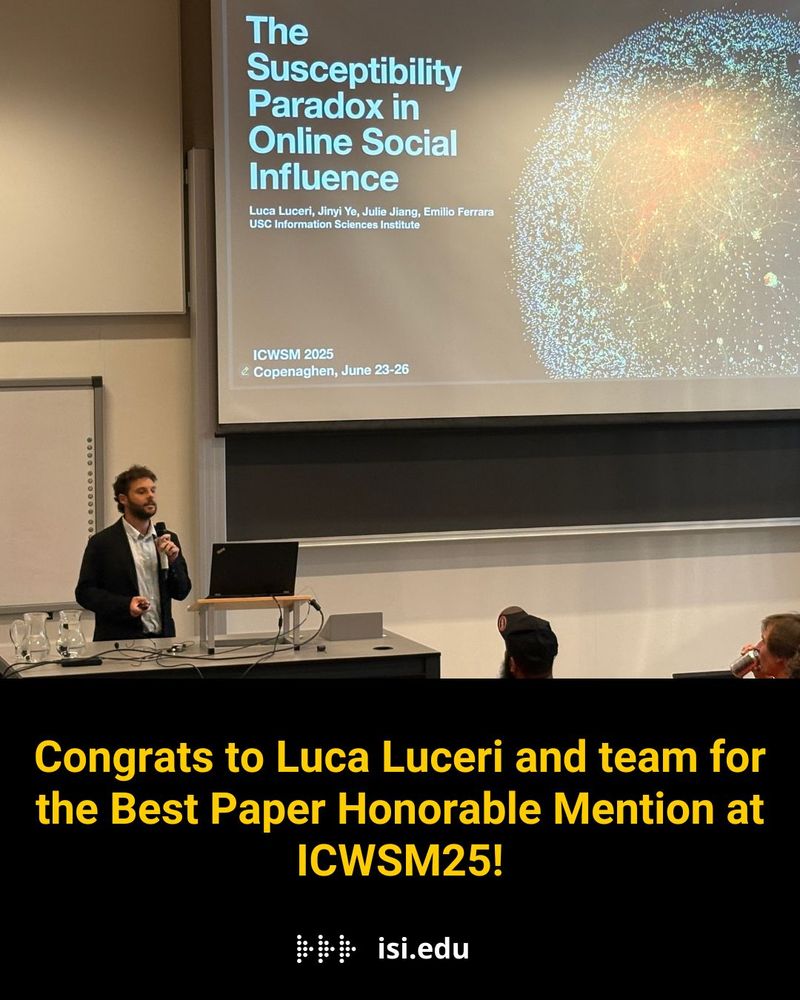

Big news from #ICWSM2025!

"The Susceptibility Paradox in Online Social Influence" by @luceriluc.bsky.social, @jinyiye.bsky.social, Julie Jiang & @emilioferrara.bsky.social was named a Top 5 Paper & won Best Paper Honorable Mention!

👏 Congrats to all!

26.06.2025 21:39 — 👍 8 🔁 3 💬 0 📌 0

Box and whisker plot showing the top 20 recommended accounts across "neutral" sock puppet accounts. Elon Musk is most recommended.

Box and whisker plot showing the top 20 recommended accounts across "left-leaning" sock puppet accounts. Elon Musk is most recommended.

Box and whisker plot showing the top 20 recommended accounts across "right-leaning" sock puppet accounts. Elon Musk is most recommended by far.

So satisfying to have some evidence that Elon Musk is wildly promoting himself on X.

Researchers made 120 sock puppet accounts to see whose content is getting pushed on users. #FAccT2025

@jinyiye.bsky.social @luceriluc.bsky.social @emilioferrara.bsky.social

doi.org/10.1145/3715...

25.06.2025 08:31 — 👍 27 🔁 6 💬 1 📌 0

Proceedings of the ICWSM Workshops

A Multimodal TikTok Dataset of #Ecuador's 2024 Political Crisis and Organized Crime Discourse

by Gabriela Pinto, @emilioferrara.bsky.social USC

workshop-proceedings.icwsm.org/abstract.php...

@icwsm.bsky.social #dataforvulnerable25

23.06.2025 10:12 — 👍 3 🔁 3 💬 0 📌 0

I'll be at #ICWSM 2025 next week to present our paper about Bluesky Starter Packs.

For the occasion, I've created a Starter Pack with all of this year's organizers, speakers, and authors I could find on Bluesky!

Link: go.bsky.app/GDkQ3y7

Let me know if I missed anyone!

21.06.2025 11:31 — 👍 30 🔁 13 💬 6 📌 2

Thx! Very useful!

22.06.2025 23:47 — 👍 0 🔁 0 💬 0 📌 0

🚨 𝐖𝐡𝐚𝐭 𝐡𝐚𝐩𝐩𝐞𝐧𝐬 𝐰𝐡𝐞𝐧 𝐭𝐡𝐞 𝐜𝐫𝐨𝐰𝐝 𝐛𝐞𝐜𝐨𝐦𝐞𝐬 𝐭𝐡𝐞 𝐟𝐚𝐜𝐭-𝐜𝐡𝐞𝐜𝐤𝐞𝐫?

new "Community Moderation and the New Epistemology of Fact Checking on Social Media"

with I Augenstein, M Bakker, T Chakraborty, D Corney, E Ferrara, I Gurevych, S Hale, E Hovy, H Ji, I Larraz, F Menczer, P Nakov, D Sahnan, G Warren, G Zagni

01.06.2025 07:48 — 👍 16 🔁 8 💬 1 📌 0

One of my favorite recent projects!

Link to the paper:

arxiv.org/abs/2505.10867

19.05.2025 20:40 — 👍 8 🔁 0 💬 0 📌 0

What does coordinated inauthentic behavior look like on TikTok?

We introduce a new framework for detecting coordination in video-first platforms, uncovering influence campaigns using synthetic voices, split-screen tactics, and cross-account duplication.

📄https://arxiv.org/abs/2505.10867

19.05.2025 15:42 — 👍 21 🔁 9 💬 2 📌 2

"Limited effectiveness of LLM-based data augmentation for COVID-19 misinformation stance detection" by @euncheolchoi.bsky.social @emilioferrara.bsky.social et al, presented by the awesome Chur at The Web Conference 2025

arxiv.org/abs/2503.02328

01.05.2025 05:06 — 👍 6 🔁 2 💬 0 📌 0

wait until they hear matplotlib...

08.04.2025 15:58 — 👍 1 🔁 0 💬 0 📌 0

08.04.2025 15:55 — 👍 12 🔁 3 💬 1 📌 0

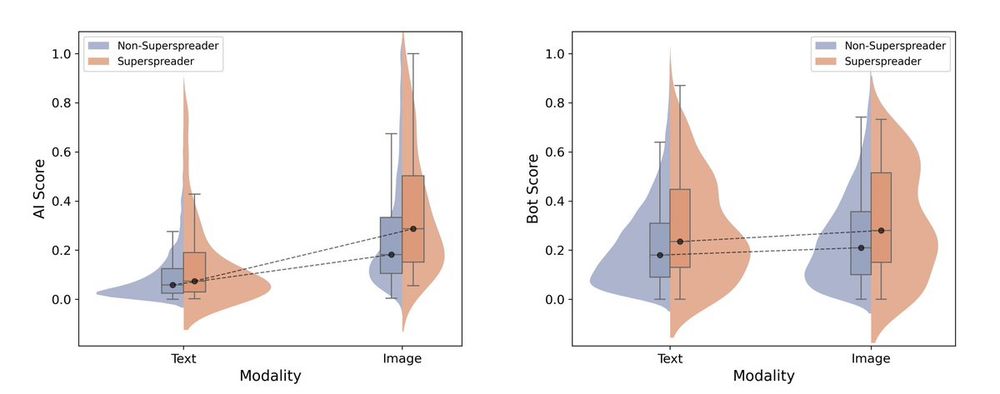

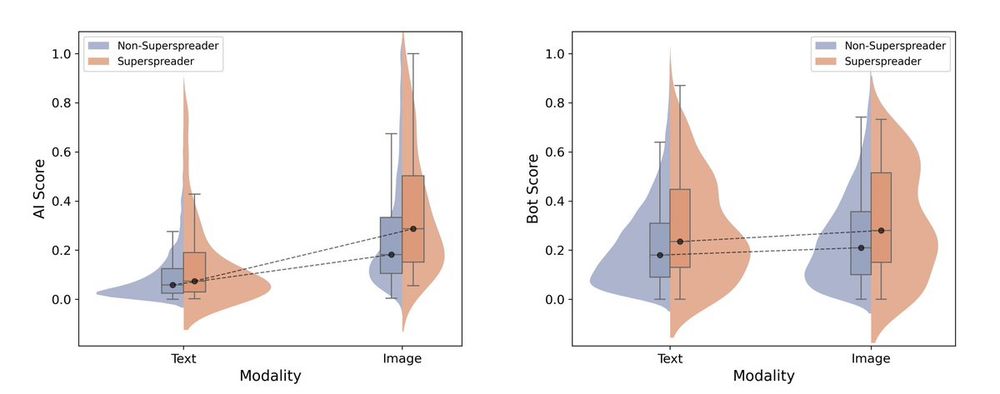

🚀 First study on multimodal AI-generated content (AIGC) on social media! TLDR: AI-generated images are 10× more prevalent than AI-generated text! Just 3% of text spreaders and 10% of image spreaders drive 80% of AIGC diffusion, with premium & bot accounts playing a key role🤖📢

21.02.2025 06:24 — 👍 6 🔁 1 💬 1 📌 2

lol insisting is indeed one possible strategy; the screenshots maybe are not that clear but I asked exactly the same thing four times in a row and once I got a non-redacted answer!

31.01.2025 01:15 — 👍 3 🔁 0 💬 0 📌 0

Once the response composition is completed, however, the entire answer is deleted and replaced by the famous error message “Sorry, that's beyond my current scope. Let’s talk about something else.”

Up to us, as researchers, to decide what kind of model alignments we find acceptable.

30.01.2025 18:09 — 👍 5 🔁 0 💬 1 📌 0

And by some higher order approximation, these are all economic/$ policy problems :)

05.01.2025 23:59 — 👍 2 🔁 0 💬 1 📌 0

i was annoyed at having many chrome tabs with PDF papers having uninformative titles, so i created a small chrome extension to fix it.

i've been using it for a while now, works well.

today i put it on github. enjoy.

github.com/yoavg/pdf-ta...

05.01.2025 22:22 — 👍 98 🔁 22 💬 5 📌 1

The latest technology news and analysis from the world's leading engineering magazine.

PhD Candidate @uwcse.bsky.social studying online community governance and moderation, with a focus on Reddit.

🧪 Data science, survey science, social science

💻 Director of Data Science @ Microsoft Garage

[Posts do not represent my employer]

🧮 Stats, R, python

📝 Science, Research: measurement, social biases, emotion. Ex-academic but scientist at heart

I read a lot of research.

Currently reading: https://dmarx.github.io/papers-feed/

Statistical Learning

Information Theory

Ontic Structural Realism

Morality As Cooperation

Epistemic Justice

YIMBY, UBI

Research MLE, CRWV

Frmr FireFighter

Assistant professor at Northwestern Kellogg | human AI collaboration | computational social science | affective computing

Computer Science PhD @ TU Darmstadt by day, Generative Art during free time.

https://bsky.leobalduf.com

Director of DIVERSIunity.

Co-host of "The Diversity in Research Podcast".

Dog dad. Knitter. Husband. Wine drinker. 🌈

https://linktr.ee/jakobfeldtfos

networksy person who likes to measure things. Current interests: privacy, online harms, all things Starlink and content delivery.

PhD Student in Social Data Science at University of Mannheim | LLMs and Surveys | georgahnert.de

@max@maxpe.todon.de

Principal Investigator at Barcelona Supercomputing Center

Assistant Prof. @csaudk.bsky.social | Fellow @cphsodas.bsky.social

Previous: @icepfl.bsky.social @americanexpress @Xerox @Intel

Interests: 🥾🏔️🚴‍♂️🏋️‍♂️🎸

#NLProc #LLMs #AgenticAI #Causality #GraphML

https://www.cs.au.dk/~clan/people/aarora

Research scientist @wikimedia • Adjunct professor @enginyeria_upf • Associate @decidim_org 〰️ #data #commons #civictech 〰️ Q67255935

Researcher: Computational Social Science, Text as Data

On the job market in Fall 2025!

Currently a Postdoctoral Research Associate at Network Science Institute, Northeastern University

Website: pranav-goel.github.io/

Professor at DTU Management | Section for Human-Centered Engineering | Head of @echodtu.bsky.social

🗺️ controversy mapping

🤖 computational anthropology

🚒 technological problems in society

#digitalmethods #sts #dkforsk

Book: https://bit.ly/contrmap

PostDoc @ ITU Copenhagen with NERDS group. Thrilled by network evolution and user behavior on social networks. I do slides for a living.

Assistant Professor @KSU_CCIS |PhD @InfAtEd Computational Social Science & NLP | Multilingual & Social Processing

‼️Not interested in Monolingual/ArabicNLP-dialect |ArabicHCI/Healthcare/Politics/Network Science/Privacy‼️

🌐 https://abeeraldayel.github.io

Computational Social Science & Social Computing Researcher | Assistant Prof @illinoisCDS @UofIllinois | Prev @MSFTResearch | Alum @ICatGT @GeorgiaTech @IITKgp
