Do AI agents ask good questions? We built “Collaborative Battleship” to find out—and discovered that weaker LMs + Bayesian inference can beat GPT-5 at 1% of the cost.

Paper, code & demos: gabegrand.github.io/battleship

Here's what we learned about building rational information-seeking agents... 🧵🔽

27.10.2025 19:17 — 👍 20 🔁 9 💬 1 📌 2

I'm really excited about this work (two years in the making!).

We look at how LLMs seek out and integrate information and find that even GPT-5-tier models are bad at this, meaning we can use Bayesian inference to uplift weak LMs and beat them... at 1% of the cost 👀

28.10.2025 19:39 — 👍 2 🔁 0 💬 0 📌 0

Title page of the paper: WUGNECTIVES: Novel Entity Inferences of Language Models from Discourse Connectives, with two figures at the bottom

Left: Our figure 1 -- comparing previous work, which usually predicted the connective given the arguments (grounded in the world); our work flips this premise by getting models to use their knowledge of connectives to predict something about the world.

Right: Our main results across 7 types of connective senses. Models are especially bad at Concession connectives.

"Although I hate leafy vegetables, I prefer daxes to blickets." Can you tell if daxes are leafy vegetables? LM's can't seem to! 📷

We investigate if LMs capture these inferences from connectives when they cannot rely on world knowledge.

New paper w/ Daniel, Will, @jessyjli.bsky.social

16.10.2025 15:27 — 👍 33 🔁 10 💬 2 📌 1

Common Descent

Machine learning begins with the perceptron

It’s week 4 and probably time to start doing machine learning in machine learning class. We begin with the only nice thing we have: the perceptron.

23.09.2025 14:31 — 👍 40 🔁 3 💬 1 📌 0

Out of curiosity (and my own ignorance), how are teachers aware of students' socioeconomic backgrounds when the students are this young?

I can think of clothing as an immediate signal, and, over time, getting to know parents (and thus their occupations). Are these the main ways this is inferred?

05.09.2025 17:18 — 👍 1 🔁 0 💬 1 📌 0

"for too long has my foot been allowed to carry my body" I say, as I load a shotgun and aim at it.

28.08.2025 16:26 — 👍 16 🔁 1 💬 0 📌 0

> looking for a coffee

> have to judge if their coffee is burnt or flavorful

> "we have a Cimbali coffee machine"

> buy coffee

> it's burnt

18.07.2025 18:55 — 👍 1 🔁 0 💬 0 📌 0

me: "I want my research to be featured in the NYT"

Genie: (grinning)

23.06.2025 13:30 — 👍 74 🔁 19 💬 0 📌 0

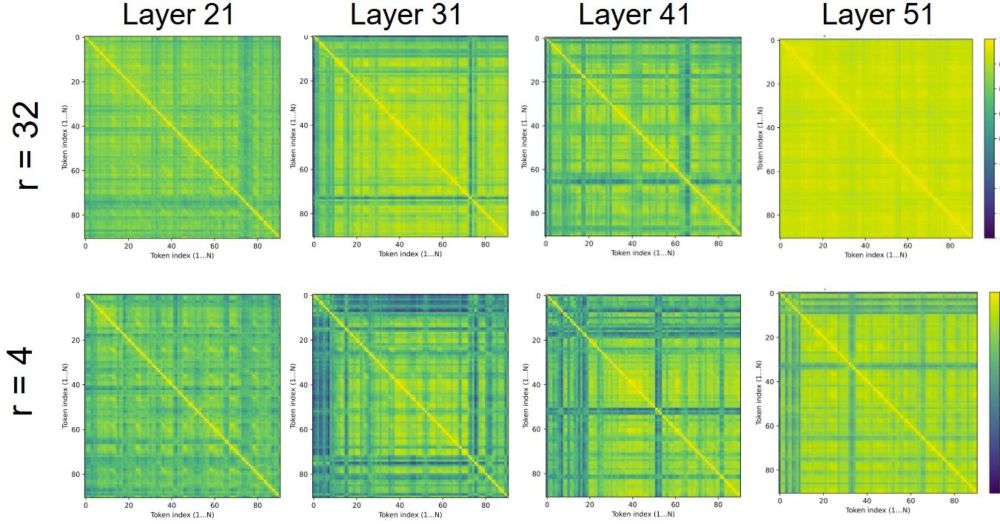

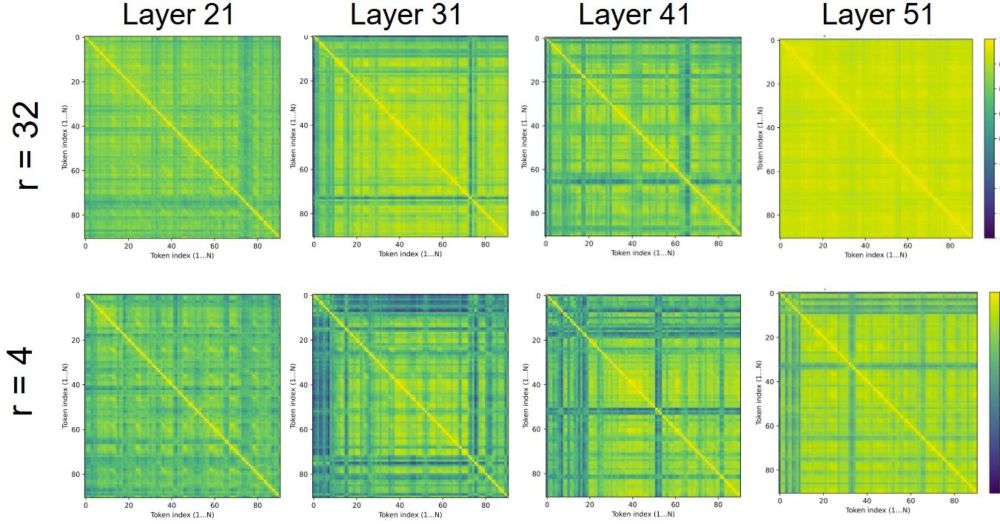

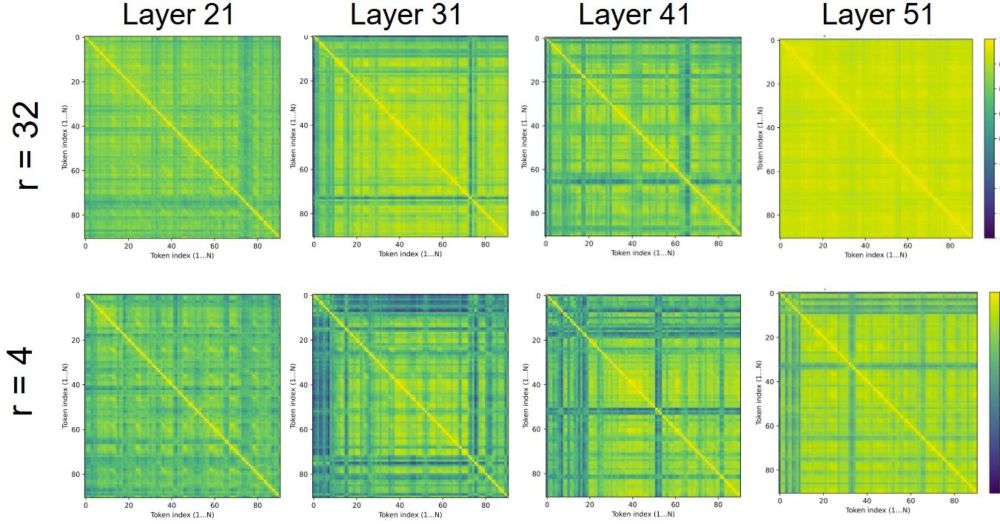

Emergent Misalignment on a Budget — LessWrong

TL;DR We reproduce emergent misalignment (Betley et al. 2025) in Qwen2.5-Coder-32B-Instruct using single-layer LoRA finetuning, showing that tweaking…

We take this as evidence that while misalignment directions may exist, the narrative is probably quite nuanced, and EM is not governed by a single vector, as some hypothesized in the aftermath of the original paper.

See it for yourself at:

www.lesswrong.com/posts/qHudHZ...

08.06.2025 20:38 — 👍 1 🔁 0 💬 0 📌 0

However, the steered models often are more incoherent than the finetuned ones, suggesting that emergent misalignment is not entirely guided by a steering vector. The vectors themselves are also not very interpretable, so it is unclear what exactly they are capturing.

08.06.2025 20:38 — 👍 0 🔁 0 💬 1 📌 0

The answer is: yes (sort of).

Though the finetune itself seems to be learning more than a single steering vector, extracting steering vectors and applying them (with sufficient scaling) to the same layer in an un-finetuned version of the model *does* elicit misaligned behavior.

08.06.2025 20:38 — 👍 0 🔁 0 💬 1 📌 0

We finetuned a single layer, and show that on certain layers, this process renders the model nearly as misaligned as a full-layer finetune. This allows us to ask: can we capture this misalignment in a single steering vector taken from the layer?

08.06.2025 20:38 — 👍 0 🔁 0 💬 1 📌 0

An interpretation of the original paper was that EM is mediated by a “misalignment direction” within the model, which the finetuning process changes, rendering the model much more toxic/misaligned.

08.06.2025 20:38 — 👍 0 🔁 0 💬 1 📌 0

Emergent Misalignment on a Budget — LessWrong

TL;DR We reproduce emergent misalignment (Betley et al. 2025) in Qwen2.5-Coder-32B-Instruct using single-layer LoRA finetuning, showing that tweaking…

New blog post! www.lesswrong.com/posts/qHudHZ...

Following Emergent Misalignment, we show that finetuning even a single layer via LoRA on insecure code can induce toxic outputs in Qwen2.5-Coder-32B-Instruct, and that you can extract steering vectors to make the base model similarly misaligned 🧵

08.06.2025 20:38 — 👍 1 🔁 0 💬 1 📌 0

Sam is 100% correct on this. Indeed, human babies have essential cognitive priors such as permanence, continuity, and boundary of objects, 3D Euclidean understanding of space, etc.

We spent 2 years to systematically to examine and show the lack of such in MLLMs: arxiv.org/abs/2410.10855

24.05.2025 05:55 — 👍 21 🔁 5 💬 0 📌 0

I think the BabyLM Challenge is really interesting, but also feel that there is something fundamentally ill-posed about how it maps onto the challenge facing human children. It's true that babies only get a relatively limited amount of linguistic experience, but...

19.05.2025 15:24 — 👍 40 🔁 7 💬 3 📌 2

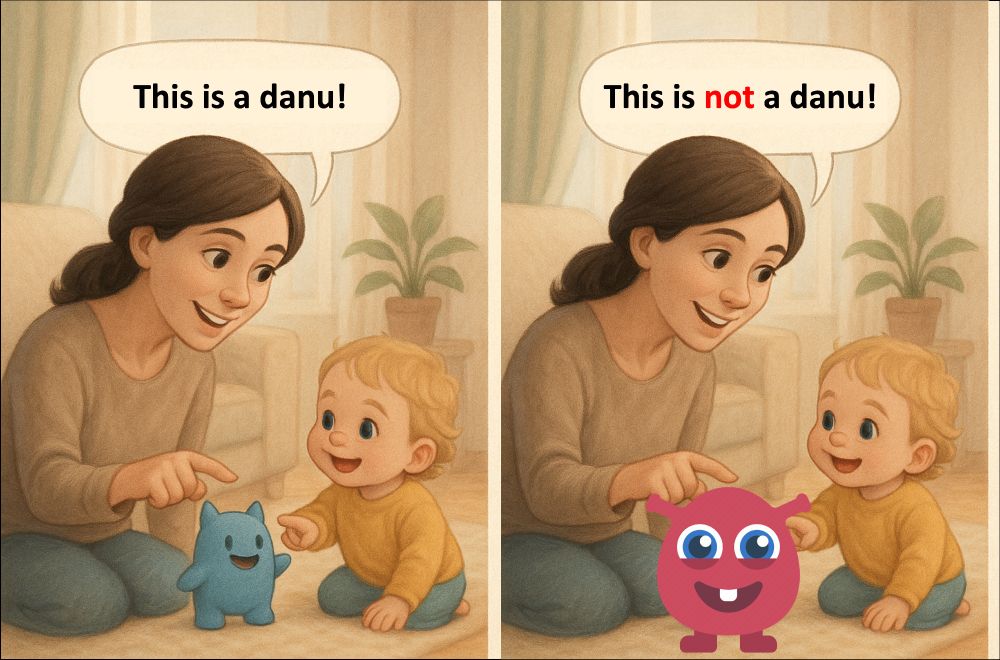

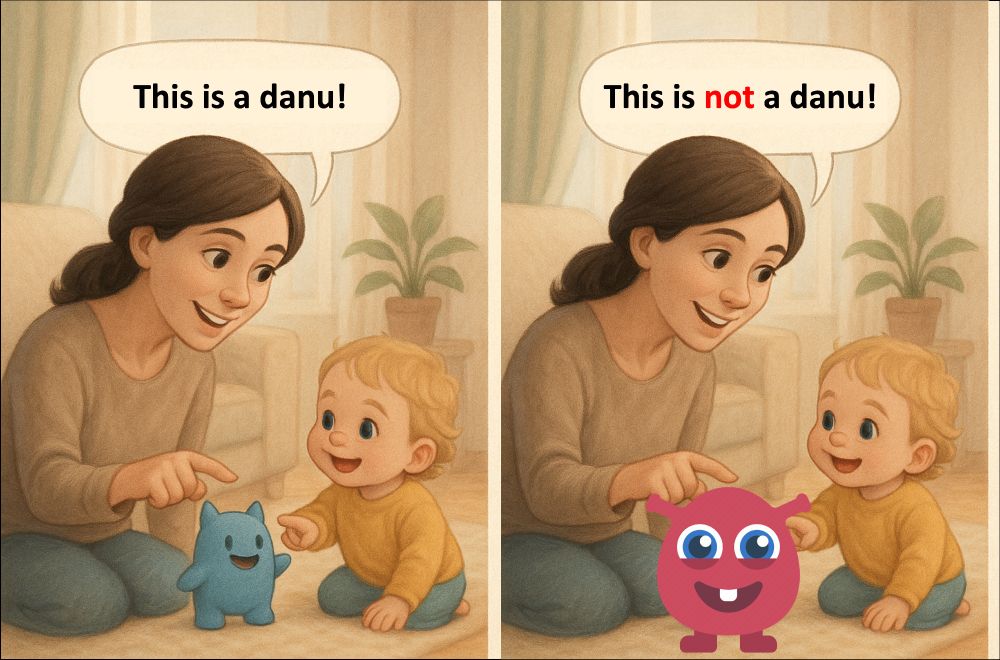

Word learning is usually about what a word does refer to. But can toddlers learn from what it doesn’t?

Our new Cognition paper shows 20-month-olds use negative evidence to infer novel word meanings, reshaping theories of language development.

www.sciencedirect.com/science/arti...

20.05.2025 16:11 — 👍 33 🔁 12 💬 0 📌 0

ai is truly revolutionary -- scientists hadn't previously considered what would happen if sally had simply eaten the marble instead, to know its location at all times

15.05.2025 23:29 — 👍 6 🔁 0 💬 1 📌 0

Let's go Lio!!!

23.04.2025 00:14 — 👍 0 🔁 0 💬 0 📌 0

fair, italy has some incredibly creative offensive slang -- fwiw my favorite usable roman insult ("porco dio" is too offensive for casual use) is "sei 'na pentola de facioli", "you are a pot of beans", i.e. you never stop muttering and talking

07.04.2025 13:55 — 👍 1 🔁 0 💬 0 📌 0

as someone from Rome I'm currently sitting at my laptop like the mentats from Dune trying to figure out what words this could be referring to

we also take pride in preparing gnocchi incorrectly because the rest of italy can't make a decent carbonara to save their lives (no cream and no parmesan!)

07.04.2025 13:30 — 👍 1 🔁 0 💬 1 📌 0

congratulations!!

27.03.2025 14:14 — 👍 1 🔁 0 💬 0 📌 0

the icml keynote will be jensen huang speaking to an empty room

25.03.2025 22:40 — 👍 1 🔁 0 💬 0 📌 0

Photoshop for text. In our #CHI2025 paper “Textoshop”, we explore how interactions inspired by drawing software can help edit text. We consider words as pixels, sentences as regions, and tones as colours. #HCI #NLProc #LLMs #AI Thread 🧵

(1/10)

18.03.2025 21:28 — 👍 55 🔁 16 💬 2 📌 7

Zermelo-Fraenkel memo theory incoming

31.01.2025 15:04 — 👍 3 🔁 0 💬 0 📌 0

(also holy loud sound effects batman)

28.01.2025 15:54 — 👍 0 🔁 0 💬 0 📌 0

saw a new pika model was out on twitter & robot-gasoline-bench does not disappoint

28.01.2025 15:52 — 👍 2 🔁 0 💬 1 📌 0

All the ACL chapters are here now: @aaclmeeting.bsky.social @emnlpmeeting.bsky.social @eaclmeeting.bsky.social @naaclmeeting.bsky.social #NLProc

19.11.2024 03:48 — 👍 107 🔁 37 💬 1 📌 3

(1/5) Very excited to announce the publication of Bayesian Models of Cognition: Reverse Engineering the Mind. More than a decade in the making, it's a big (600+ pages) beautiful book covering both the basics and recent work: mitpress.mit.edu/978026204941...

18.11.2024 16:25 — 👍 522 🔁 119 💬 15 📌 15

AI technical gov & risk management research. PhD student @MIT_CSAIL, fmr. UK AISI. I'm on the CS faculty job market! https://stephencasper.com/

Computational cognitive science of emotion. Neukom Postdoc Fellow @Dartmouth, previously PhD @MIT. https://daeh.info #CogSci

Theory & practice of probabilistic programming. Current: MIT Probabilistic Computing Project; Fall '25: Incoming Asst. Prof. at Yale CS

phd student @ mit researching llm's for programming

PhD student at MIT studying program synthesis, probabilistic programming, and cognitive science. she/her

Studying language in biological brains and artificial ones at the Kempner Institute at Harvard University.

www.tuckute.com

Apple ML Research in Barcelona, prev OxCSML InfAtEd, part of MLinPL & polonium_org 🇵🇱, sometimes funny

VP of Research, GenAI @ Meta (Multimodal LLMs, AI Agents), UPMC Professor of Computer Science at CMU, ex-Director of AI research at @Apple, co-founder Perceptual Machines (acquired by Apple)

Assistant professor of computer science at Technion; visiting scholar at @KempnerInst 2025-2026

https://belinkov.com/

asst prof @ NTU, ex principal scientist @ autodesk, phd mit 2019.

I make programming more communicative 🧠↔️🤖

Cognitive scientist working at the intersection of moral cognition and AI safety. Currently: Google Deepmind. Soon: Assistant Prof at NYU Psychology. More at sites.google.com/site/sydneymlevine.

DeepMind Professor of AI @Oxford

Scientific Director @Aithyra

Chief Scientist @VantAI

ML Lead @ProjectCETI

geometric deep learning, graph neural networks, generative models, molecular design, proteins, bio AI, 🐎 🎶

PhD student @csail.mit.edu 🤖 & 🧠

METR is a research nonprofit that builds evaluations to empirically test AI systems for capabilities that could threaten catastrophic harm to society.

Professor at EHESS & PSE

Co-Director, World Inequality Lab

inequalitylab.world | WID.world

http://piketty.pse.ens.fr/

Physics and Biology Harvard ‘26

Wyss Institute & DFCI