Moving intentions from brains to machines www.cell.com/trends/cogni... by @haslagter.bsky.social @ykamit.bsky.social et al.; #BCI #NeuroTech #neuroscience

14.01.2026 14:01 — 👍 1 🔁 4 💬 0 📌 0

@ykamit.bsky.social

Yukiyasu Kamitani | 神谷之康 Neuroscientist and brain decoder http://kamitani-lab.ist.i.kyoto-u.ac.jp http://youtube.com/@ATRDNI/videos https://twitter.com/ykamit

Advancing credibility and transparency in brain-to-image reconstruction research: Reanalysis of Koide-Majima, Nishimoto, and Majima arxiv.org/abs/2511.07960 by @ykamit.bsky.social et al. via @seelikat.bsky.social; #neuroscience #AI

14.11.2025 14:32 — 👍 3 🔁 4 💬 0 📌 0

From October 13 to 17, we ran the second edition of the Ibn Sina Neurotech School, hosted and sponsored by the NYUAD Center for Brain and Health and @ibroorg.bsky.social

We welcomed 18 students from across the world to learn hands-on about fMRI data collection and processing

SfN 2025 satellite Symposium

DATES: Thursday 13 November ~ Friday 14 November 2025 (cf. SfN2025; November 15~19)

VENUE: CORTEZ HILL Room (3rd floor), MANCHESTER GRAND HYATT SAN DIEGO

1 Market Place, San Diego, CA 92101, USA.

www.jst.go.jp/kisoken/cres...

New preprint from our lab led by Onoo-san. We propose readout representation, defining neural codes by what can be recovered, not what caused them. Inputs remain recoverable from distant features, revealing expansive, redundant codes that align neural activation with meaning arxiv.org/abs/2510.12228

16.10.2025 14:03 — 👍 1 🔁 0 💬 0 📌 0

New preprint from our lab, led by Otsuka-san. In brain–AI alignment, linear transformations are common—but when features ≫ samples, outputs collapse onto the training set, breaking zero-shot predictions. We mathematically show how data and model sparsity can help avoid this.

arxiv.org/abs/2509.15832
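The collapse described above can be illustrated with a toy example. This is a minimal sketch under stated assumptions, not the analysis from the preprint: it uses random Gaussian data, a plain minimum-norm least-squares map, and made-up sizes (`n_train`, `n_in`, `n_out` are all hypothetical). With far more features than samples, every prediction for an unseen input is a linear combination of the training outputs, so it never leaves their span.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: far more features than training samples.
n_train, n_in, n_out = 20, 500, 300

X_train = rng.standard_normal((n_train, n_in))   # e.g. source features
Y_train = rng.standard_normal((n_train, n_out))  # e.g. target features

# Minimum-norm least-squares linear map from inputs to outputs.
W = np.linalg.pinv(X_train) @ Y_train            # shape (n_in, n_out)

# Predictions for inputs never seen during training.
X_test = rng.standard_normal((5, n_in))
Y_pred = X_test @ W

# Try to express each prediction as a combination of training outputs.
coef, *_ = np.linalg.lstsq(Y_train.T, Y_pred.T, rcond=None)
residual = np.linalg.norm(Y_pred.T - Y_train.T @ coef)

# Stacking predictions onto the training outputs adds no new directions.
stacked_rank = np.linalg.matrix_rank(np.vstack([Y_train, Y_pred]))

print("residual outside training span:", residual)
print("rank of training outputs plus predictions:", stacked_rank)
```

The residual is zero up to floating-point error and the stacked rank stays at `n_train`, even though the output space has `n_out` dimensions. Any output direction absent from the training set is unreachable for this kind of map, which is one way to picture why zero-shot prediction can break in this regime.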

Our article is out in Annual Review of Vision Science: “Visual Image Reconstruction from Brain Activity via Latent Representation”

We trace the path from early brain decoding to modern NeuroAI, highlight progress & pitfalls, and discuss future directions www.annualreviews.org/content/jour...

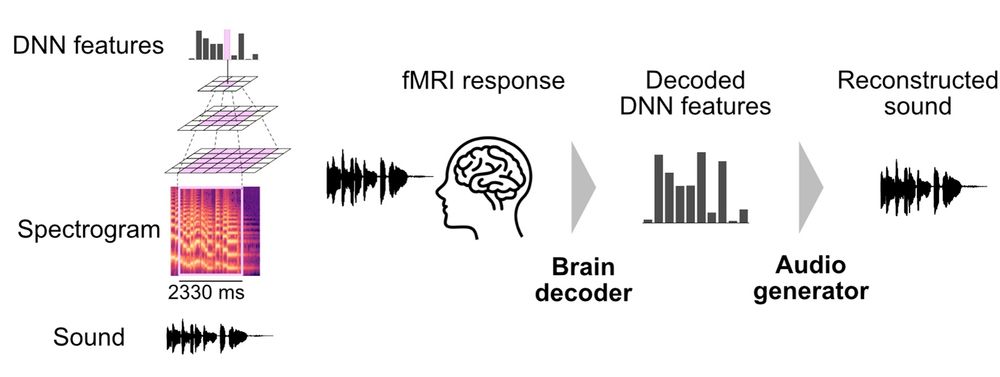

Schematic overview of the proposed sound reconstruction pipeline. Left: DNN feature extraction from sound. A deep neural network (DNN) extracts auditory features at multiple levels of complexity using a hierarchical framework. Right: Sound reconstruction. The reconstruction pipeline starts with decoding DNN features from fMRI responses using trained brain decoders. The audio generator then transforms these decoded features into the reconstructed sound.

Reconstructing sounds from #fMRI data is limited by its temporal resolution. @ykamit.bsky.social &co develop a DNN-based method that aids reconstruction of perceptually accurate sound from fMRI data, offering insights into internal #auditory representations @plosbiology.org 🧪 plos.io/4fhNw1Z

25.07.2025 14:07 — 👍 16 🔁 6 💬 0 📌 0

Can we hear what's inside your head? 🧠→🎶

Our new paper, led by Jong-Yun Park, presents an AI-based method for reconstructing arbitrary natural sounds directly from a person's brain activity measured with fMRI.

journals.plos.org/plosbiology/...

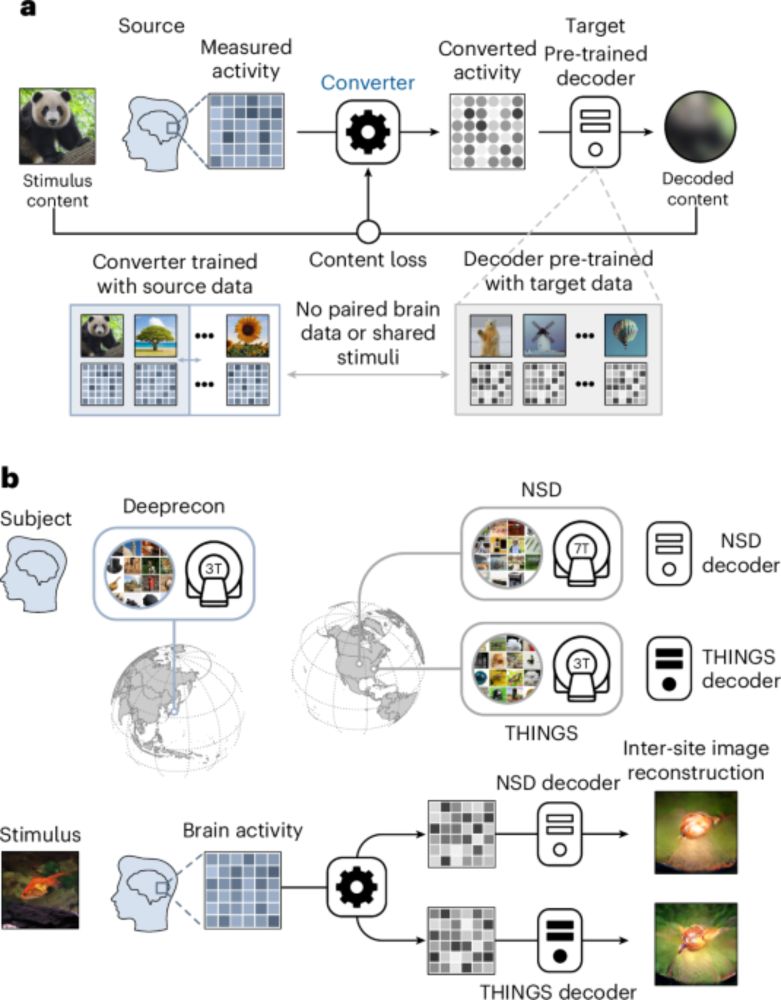

Out now! @ykamit.bsky.social and colleagues present a neural code conversion method to align brain data across individuals without shared stimuli. The approach enables accurate inter-individual brain decoding and visual image reconstruction across sites. #compneurosky

www.nature.com/articles/s43...

Spurious reconstruction from brain activity https://www.sciencedirect.com/science/article/pii/S0893608025003946 by @ykamit et al.; more information in the Bluesky thread https://bsky.app/profile/kencan7749.bsky.social/post/3lri44htxek25 #BCI #NeuroTech #neuroscience

"Our findings suggest that […]

Our paper is now accepted at Neural Networks!

This work builds on our previous threads on X, updated with deeper analyses.

We revisit brain-to-image reconstruction using NSD + diffusion models—and ask: do they really reconstruct what we perceive?

Paper: doi.org/10.1016/j.ne...

🧵1/12

Our overview of visual image reconstruction from brain activity is in press at Annual Review of Vision Science. We explore its foundations, how the field evolved, where it went astray—and how to set it right

arxiv.org/abs/2505.08429

Yukiyasu Kamitani, Misato Tanaka, Ken Shirakawa

Visual Image Reconstruction from Brain Activity via Latent Representation

https://arxiv.org/abs/2505.08429

Updated preprint, now in press at Neural Networks

arxiv.org/abs/2405.10078

I promised to write about my thoughts on the status of the field of neuroAI, some of the big challenges we are facing, and the approaches we are taking to address them. This is super selective on the topic of finding a good model but in my view it affects the field as a whole. Here we go. 🧵

11.12.2024 22:18 — 👍 142 🔁 47 💬 2 📌 6

Brain-to-AI and Brain-to-Brain Functional Alignments

speakerdeck.com/ykamit/brain...

New preprint, led by Ken Shirakawa. Using inappropriate generative AI methods and naturalistic data, seemingly realistic but spurious visual image reconstruction can be generated from brain data (even from random data). We describe it and formulate how it occurs

arxiv.org/abs/2405.10078

These stimuli were presumably adjacent video frames extracted from a single movie. Was this known to the community?

02.05.2024 13:48 — 👍 1 🔁 0 💬 0 📌 0

While investigating the issues of questionable brain decoding and reconstruction (osf.io/nmfc5/#!), Misato Tanaka from my lab found that the seminal paper by Nishimoto, Naselaris, Benjamini, Yu, & Gallant (2011) appears to use highly similar stimuli across both training and test sets. ...

02.05.2024 13:42 — 👍 1 🔁 0 💬 1 📌 0

It enables inter-site visual image reconstruction (Deeprecon⬌THINGS⬌NSD), where a source subject's brain data is analyzed using a model trained on a dataset from a different site to reconstruct the viewed image, achieving near-equivalent quality to within-site performance

19.03.2024 13:42 — 👍 0 🔁 0 💬 0 📌 0

New preprint from our lab! Led by Wang-san, this work introduces a content-loss-based functional alignment of brain data, which does not require shared stimuli between subjects/datasets, greatly expanding the potential of data reuse

arxiv.org/abs/2403.11517

Exhibition of Pierre Huyghe "Liminal"

From 17 March 2024 to 24 November 2024 At Punta della Dogana, Venice, Italy

www.pinaultcollection.com/palazzograss...

We provided brain-decoded images and movies for some of the works

Exhibition of Mogens Jacobsen "Restruktion" at Ringsted Gallery, Denmark, where one of the works was created in collaboration with my lab

kunsten.nu/artguide/cal...