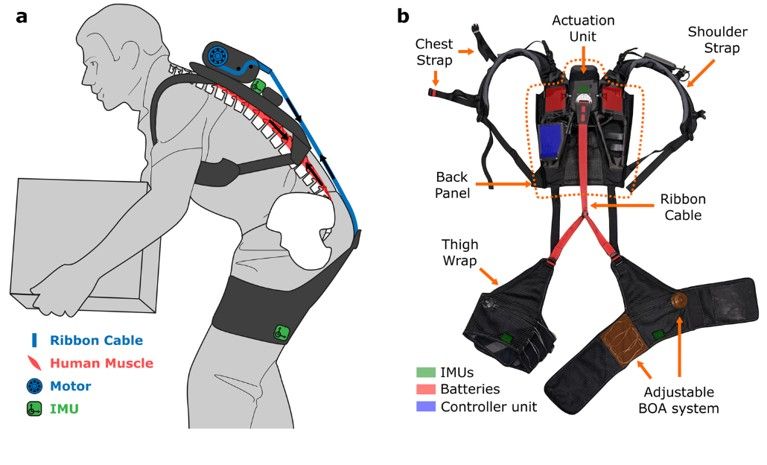

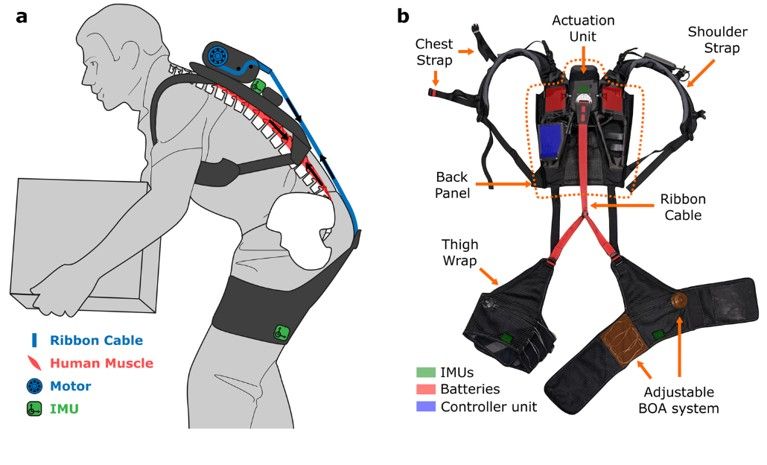

Glad to share this paper was accepted at @ecmlpkdd.org!

We show interpreting BayesOpt helps personalize soft exosuits (see picture)

Great collaboration w/ Fede Croppi, @giuseppe88.bsky.social et al.

@lmumuenchen.bsky.social @munichcenterml.bsky.social

@harvard.edu @wyssinstitute.bsky.social

10.06.2025 12:59 — 👍 0 🔁 1 💬 1 📌 0

Feature importance measures can clarify or mislead. PFI, LOCO, and SAGE each answer a different question.

Understand how to pick the right tool and avoid spurious conclusions: mcml.ai/news/2025-03...

@fionaewald.bsky.social @ludwig-bothmann.bsky.social @giuseppe88.bsky.social @gunnark.bsky.social

12.05.2025 12:54 — 👍 6 🔁 5 💬 0 📌 0

Me: Spent an hour crafting a detailed, thoughtful review in the submission portal. Hit 'Submit'.

EasyChair-Portal: You have been logged out.

Me: Log back in… everything is gone.

EasyChair? More like EasyDespair.

I am retiring from reviewing.

#ReviewPain #xAI

13.03.2025 15:10 — 👍 2 🔁 0 💬 0 📌 0

Excited to share that our #ICLR paper, “Efficient & Accurate Explanation Estimation with Distribution Compression”, made the top 5.1% of submissions and was selected as a Spotlight! Congrats to the first author @hbaniecki.com #xAI #interpretableML

11.02.2025 10:23 — 👍 9 🔁 2 💬 0 📌 0

Explainable/Interpretable AI researchers and enthusiasts - DM to join the XAI Slack! Bluesky and Slack maintained by Nick Kroeger

PhD student, University of Warsaw

hbaniecki.com

Postdoc | #NLProc researcher | @slds-lmu.bsky.social | @munichcenterml.bsky.social | @berd-nfdi.bsky.social

Senior Lecturer @ LMU Munich

Lead Machine Learning Consulting Unit @ Munich Center for Machine Learning

PostDoc @ LMU Munich

Group Leader CausalFairML

Munich Center for Machine Learning (MCML)

https://www.slds.stat.uni-muenchen.de

Waiting on a robot body. All opinions are universal and held by both employers and family.

Literally a professor. Recruiting students to start my lab.

ML/NLP/they/she.

Post-doctoral Researcher at BIFOLD / TU Berlin interested in interpretability and analysis of language models. Guest researcher at DFKI Berlin. https://nfelnlp.github.io/

Asst Prof @ Stevens. Working on NLP, Explainable, Safe and Trustworthy AI. https://ziningzhu.github.io

Reverse engineering neural networks at Anthropic. Previously Distill, OpenAI, Google Brain. Personal account.

PhD Student at the ILLC / UvA doing work at the intersection of (mechanistic) interpretability and cognitive science. Current Anthropic Fellow.

hannamw.github.io

Second-year PhD student at XplaiNLP group @TU Berlin: interpretability & explainability

Website: https://qiaw99.github.io

PhD student at Vector Institute / University of Toronto. Building tools to study neural nets and find out what they know. He/him.

www.danieldjohnson.com

Postdoc at Northeastern and incoming Asst. Prof. at Boston U. Working on NLP, interpretability, causality. Previously: JHU, Meta, AWS

Mechanistic interpretability

Creator of https://github.com/amakelov/mandala

prev. Harvard/MIT

machine learning, theoretical computer science, competition math.

Postdoc at the interpretable deep learning lab at Northeastern University, deep learning, LLMs, mechanistic interpretability

PhD Student at the TU Berlin ML group + BIFOLD

Model robustness/correction 🤖🔧

Understanding representation spaces 🌌✨

Professor of Data Science for Crop Systems at Forschungszentrum Jülich and University of Bonn

Working on Explainable ML🔍, Data-centric ML🐿️, Sustainable Agriculture🌾, Earth Observation Data Analysis🌍, and more...

PhDing @unimib 🇮🇹 & @gronlp.bsky.social 🇳🇱, interpretability et similia

danielsc4.it

Assistant Professor at TU Wien

Machine Learning & Security