Representation steering is now a common way to mitigate LLM shortcuts. How much legitimate knowledge does this tend to remove? Turns out that these methods can be surprisingly precise! But also: no single steering operation will fix all shortcuts.

Led by @shanzzyy.bsky.social!

21.01.2026 00:32 —

👍 6

🔁 0

💬 0

📌 0

Congrats!!

10.01.2026 08:52 —

👍 1

🔁 0

💬 0

📌 0

New book! I have written a book, called Syntax: A cognitive approach, published by MIT Press.

This is open access; MIT Press will post a link soon, but until then, the book is available on my website:

tedlab.mit.edu/tedlab_websi...

24.12.2025 19:55 —

👍 122

🔁 41

💬 2

📌 3

I also want to mention that the lang x computation research community at BU is growing in an exciting direction, especially with new faculty like @amuuueller.bsky.social, @anthonyyacovone.bsky.social, @nsaphra.bsky.social, & @profsophie.bsky.social! Also, Boston is quite nice :)

19.11.2025 17:20 —

👍 9

🔁 2

💬 1

📌 0

I'm glossing over our deeper motivations from neuroscience (predictive coding) and linguistics here, but we believe there's significant cross-field appeal for those interested in intersections of cog sci, neuroscience, and machine learning!

14.11.2025 15:48 —

👍 1

🔁 0

💬 1

📌 0

Jeff Elman famously showed us in 1990 that time is a rich signal in itself.

Our work demonstrates that this lesson applies equally well to interpretability methods. The inductive biases of interp methods should reflect the structure of what is being studied.

14.11.2025 15:48 —

👍 1

🔁 0

💬 1

📌 0

TFA is designed to capture context-sensitive information. Consider parsing: SAEs often assign each word to its most frequent syntactic category, regardless of context.

Meanwhile, TFA recovers the correct parse given the context!

14.11.2025 15:48 —

👍 1

🔁 0

💬 1

📌 0

In LLMs, concepts aren’t static: they evolve through time and have rich temporal dependencies.

We introduce Temporal Feature Analysis (TFA) to separate what's inferred from context vs. novel information. A big effort led by @ekdeepl.bsky.social, @sumedh-hindupur.bsky.social, @canrager.bsky.social!

14.11.2025 15:48 —

👍 21

🔁 4

💬 1

📌 0

Schedule for the INTERPLAY workshop at COLM on October 10th, Room 518C.

09:00 am: Opening

09:10 am: Invited Talks by Sarah Wiegreffe and John Hewitt

10:20 am: Paper Presentations

Lunch Break

01:00 pm: Invited Talks by Aaron Mueller and Kyle Mahowald

02:10 pm: Poster Session

03:20 pm: Roundtable Discussion

04:50 pm: Closing

✨ The schedule for our INTERPLAY workshop at COLM is live! ✨

🗓️ October 10th, Room 518C

🔹 Invited talks from @sarah-nlp.bsky.social John Hewitt @amuuueller.bsky.social @kmahowald.bsky.social

🔹 Paper presentations and posters

🔹 Closing roundtable discussion.

Join us in Montréal! @colmweb.org

09.10.2025 17:30 —

👍 3

🔁 4

💬 0

📌 0

Aruna Sankaranarayanan, @arnabsensharma.bsky.social @ericwtodd.bsky.social @davidbau.bsky.social @boknilev.bsky.social (2/2)

01.10.2025 14:03 —

👍 3

🔁 0

💬 0

📌 0

Thanks again to the co-authors! Such a wide survey required a lot of perspectives. @jannikbrinkmann.bsky.social Millicent Li, Samuel Marks, @koyena.bsky.social @nikhil07prakash.bsky.social @canrager.bsky.social (1/2)

01.10.2025 14:03 —

👍 4

🔁 0

💬 1

📌 0

We also made the causal graph formalism more precise. Interpretability and causality are intimately linked; the latter makes the former more trustworthy and rigorous. This formal link should be strengthened in future work.

01.10.2025 14:03 —

👍 3

🔁 0

💬 1

📌 0

One of the bigger changes was establishing criteria for success in interpretability. What units of analysis should you use if you know what you’re looking for? If you *don’t* know what you’re looking for?

01.10.2025 14:03 —

👍 2

🔁 0

💬 1

📌 0

What's the right unit of analysis for understanding LLM internals? We explore this in our mech interp survey (a major update from our 2024 ms).

We’ve added more recent work and more immediately actionable directions for future work. Now published in Computational Linguistics!

01.10.2025 14:03 —

👍 41

🔁 14

💬 2

📌 2

![What do representations tell us about a system? Image of a mouse with a scope showing a vector of activity patterns, and a neural network with a vector of unit activity patterns

Common analyses of neural representations: Encoding models (relating activity to task features) drawing of an arrow from a trace saying [on_____on____] to a neuron and spike train. Comparing models via neural predictivity: comparing two neural networks by their R^2 to mouse brain activity. RSA: assessing brain-brain or model-brain correspondence using representational dissimilarity matrices](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:e6ewzleebkdi2y2bxhjxoknt/bafkreiav2io2ska33o4kizf57co5bboqyyfdpnozo2gxsicrfr5l7qzjcq@jpeg)

In neuroscience, we often try to understand systems by analyzing their representations — using tools like regression or RSA. But are these analyses biased towards discovering a subset of what a system represents? If you're interested in this question, check out our new commentary! Thread:
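For readers unfamiliar with RSA, here is a minimal sketch of the basic computation, not the analysis from the commentary. It assumes correlation distance for the dissimilarity matrices and a Pearson comparison of their upper triangles (Spearman is also common). All function names and the toy responses are made up for illustration.

```python
import math

def pearson(x, y):
    """Pearson correlation of two equal-length, non-constant sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def rdm(responses):
    """Representational dissimilarity matrix: 1 - correlation between the
    response patterns of every pair of conditions."""
    n = len(responses)
    return [[1.0 - pearson(responses[i], responses[j]) for j in range(n)]
            for i in range(n)]

def upper_triangle(matrix):
    """Off-diagonal entries above the diagonal, flattened row by row."""
    n = len(matrix)
    return [matrix[i][j] for i in range(n) for j in range(i + 1, n)]

def rsa_score(responses_a, responses_b):
    """Correlate the two systems' RDMs (same conditions, in the same order)."""
    return pearson(upper_triangle(rdm(responses_a)),
                   upper_triangle(rdm(responses_b)))

# Toy data: 4 conditions x 3 units per system (values are invented).
SYSTEM_A = [[1, 2, 3], [1, 2, 4], [3, 2, 1], [2, 5, 1]]
# System B rescales and shifts every response of system A.
SYSTEM_B = [[2 * v + 1 for v in row] for row in SYSTEM_A]

score = rsa_score(SYSTEM_A, SYSTEM_B)
```

Because correlation distance ignores a uniform scale and offset, the two toy systems get matching RDMs even though their raw responses differ. This is a small reminder that the choice of dissimilarity measure decides which differences the analysis can see.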

05.08.2025 14:36 —

👍 170

🔁 53

💬 5

📌 0

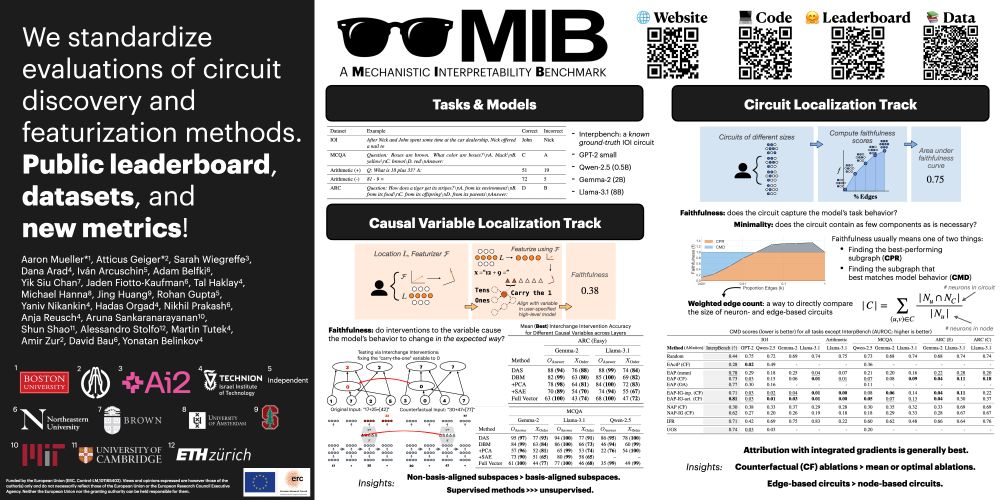

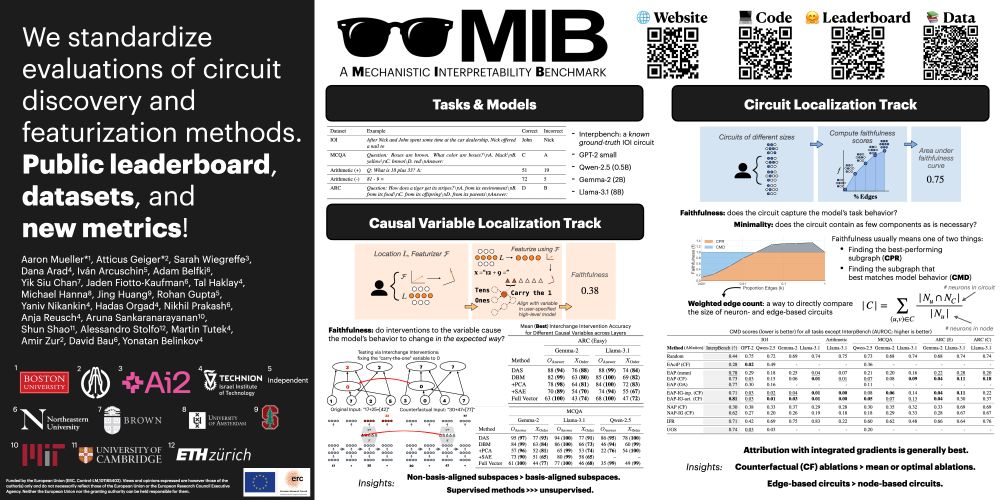

If you're at #ICML2025, chat with me, @sarah-nlp.bsky.social, Atticus, and others at our poster 11am - 1:30pm at East #1205! We're establishing a 𝗠echanistic 𝗜nterpretability 𝗕enchmark.

We're planning to keep this a living benchmark; come by and share your ideas/hot takes!

17.07.2025 17:45 —

👍 13

🔁 3

💬 0

📌 0

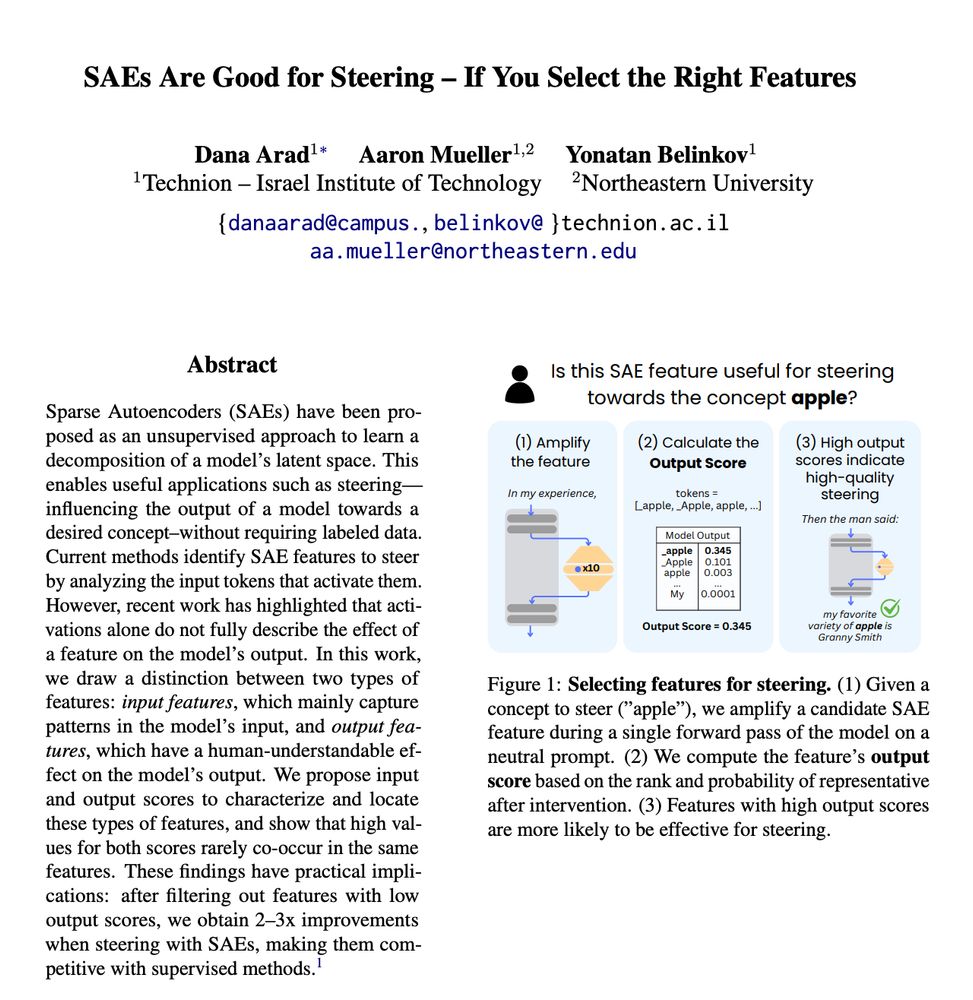

We still have a lot to learn in editing NN representations.

To edit or steer, we cannot simply choose semantically relevant representations; we must choose the ones that will have the intended impact. As @peterbhase.bsky.social found, these are often distinct.

27.05.2025 17:07 —

👍 4

🔁 0

💬 0

📌 0

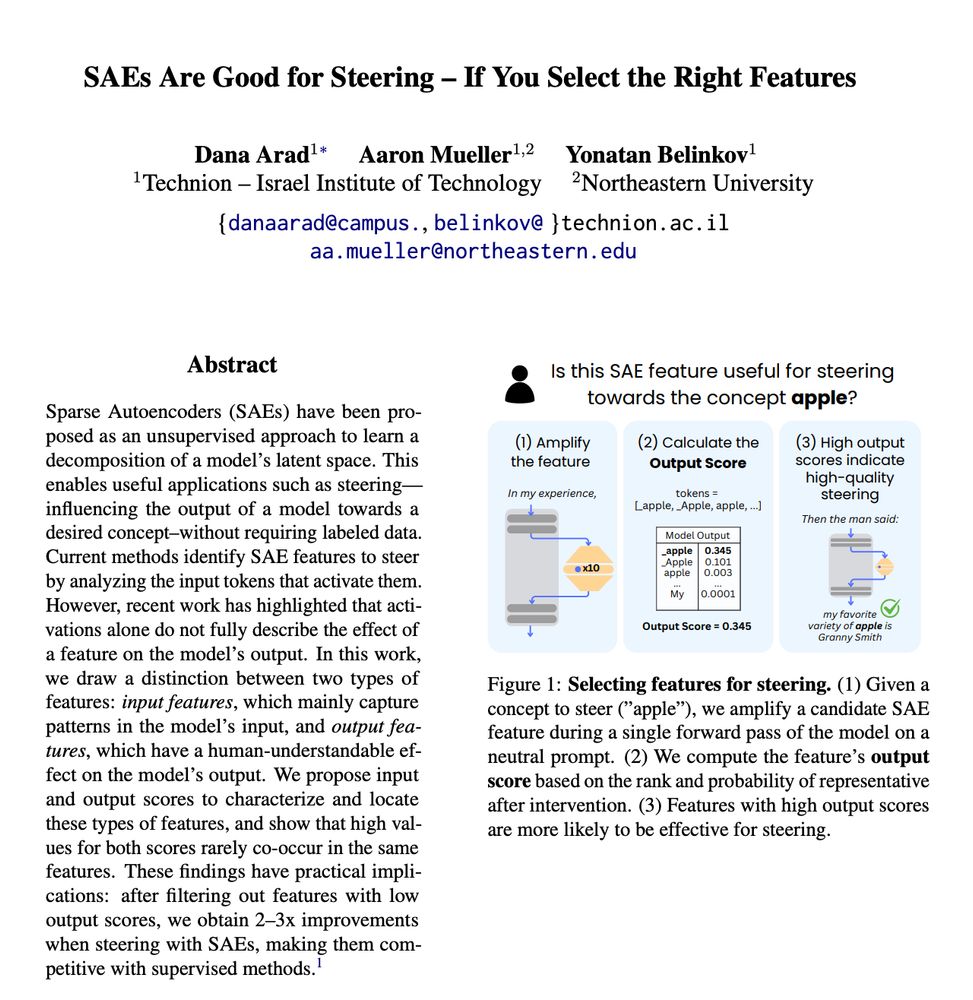

By limiting steering to output features, we recover >90% of the performance of the best supervised representation-based steering methods—and at some locations, we outperform them!

27.05.2025 17:07 —

👍 2

🔁 0

💬 1

📌 0

We define the notion of an “output feature”, whose role is to increase p(some token(s)). Steering these gives better results than steering “input features”, whose role is to attend to concepts in the input. We propose fast methods to sort features into these categories.
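To make the "output feature" idea concrete, here is a toy sketch and not the preprint's actual sorting method. It assumes a feature acts by adding a fixed direction to the residual stream, which an unembedding matrix then reads out. The function names, the 3-token vocabulary, and all numbers are hypothetical.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(matrix, vector):
    """Multiply a (rows x dims) matrix by a dims-length vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

def output_effect(unembed, residual, direction, alpha=1.0):
    """Per-token change in probability after adding alpha * direction
    to the residual vector."""
    base = softmax(matvec(unembed, residual))
    steered_residual = [r + alpha * d for r, d in zip(residual, direction)]
    steered = softmax(matvec(unembed, steered_residual))
    return [s - b for s, b in zip(steered, base)]

# Toy setup: 3-token vocabulary, 2-dimensional residual stream.
UNEMBED = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
RESIDUAL = [0.0, 0.0]
FEATURE_DIRECTION = [1.0, 0.0]  # aligned with token 0's unembedding row

delta = output_effect(UNEMBED, RESIDUAL, FEATURE_DIRECTION, alpha=2.0)
promoted = max(range(len(delta)), key=lambda i: delta[i])
```

In this toy case the direction raises the probability of token 0 at the expense of the others, which is the behavior the post attributes to output features. A direction with little effect on any token's probability would be a poor steering candidate under this view.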

27.05.2025 17:07 —

👍 1

🔁 0

💬 1

📌 0

SAEs have been found to massively underperform supervised methods for steering neural networks.

In new work led by @danaarad.bsky.social, we find that this problem largely disappears if you select the right features!

27.05.2025 17:07 —

👍 16

🔁 1

💬 1

📌 0

Tried steering with SAEs and found that not all features behave as expected?

Check out our new preprint - "SAEs Are Good for Steering - If You Select the Right Features" 🧵

27.05.2025 16:06 —

👍 18

🔁 6

💬 2

📌 3

Couldn’t be happier to have co-authored this with a stellar team, including: Michael Hu, @amuuueller.bsky.social, @alexwarstadt.bsky.social, @lchoshen.bsky.social, Chengxu Zhuang, @adinawilliams.bsky.social, Ryan Cotterell, @tallinzen.bsky.social

12.05.2025 15:48 —

👍 3

🔁 1

💬 1

📌 0

... Jing Huang, Rohan Gupta, Yaniv Nikankin, @hadasorgad.bsky.social, Nikhil Prakash, @anja.re, Aruna Sankaranarayanan, Shun Shao, @alestolfo.bsky.social, @mtutek.bsky.social, @amirzur, @davidbau.bsky.social, and @boknilev.bsky.social!

23.04.2025 18:15 —

👍 6

🔁 0

💬 0

📌 0

This was a huge collaboration with many great folks! If you get a chance, be sure to talk to Atticus Geiger, @sarah-nlp.bsky.social, @danaarad.bsky.social, Iván Arcuschin, @adambelfki.bsky.social, @yiksiu.bsky.social, Jaden Fiotto-Kaufmann, @talhaklay.bsky.social, @michaelwhanna.bsky.social, ...

23.04.2025 18:15 —

👍 8

🔁 1

💬 1

📌 1

MIB – Project Page

We release many public resources, including:

🌐 Website: mib-bench.github.io

📄 Data: huggingface.co/collections/...

💻 Code: github.com/aaronmueller...

📊 Leaderboard: Coming very soon!

23.04.2025 18:15 —

👍 3

🔁 1

💬 1

📌 0

These results highlight that there has been real progress in the field! We also recovered known findings, like that integrated gradients improves attribution quality. This is a sanity check verifying that our benchmark is capturing something real.

23.04.2025 18:15 —

👍 2

🔁 0

💬 1

📌 0
