It looks like they updated the article with a correction!

03.11.2025 22:32 — 👍 2 🔁 0 💬 0 📌 0

Attention Authors: Updated Practice for Review Articles and Position Papers in arXiv CS Category – arXiv blog

This is not true (and I'm surprised by the bad reporting here from 404). arXiv is no longer accepting *review papers* unless they are peer reviewed. This has no effect on the submission of research articles. See the original post: blog.arxiv.org/2025/10/31/a....

03.11.2025 18:32 — 👍 9 🔁 1 💬 1 📌 0

This work was a collaboration with Natalie Harris, Chirag Nagpal, David Madras, Vishwali Mhasawade, Olawale Salaudeen, @adoubleva.bsky.social, Shannon Sequeira, Santiago Arciniegas, Lillian Sung, Nnamdi Ezeanochie, Heather Cole-Lewis, @kat-heller.bsky.social, Sanmi Koyejo, Alexander D'Amour.

28.10.2025 00:36 — 👍 2 🔁 0 💬 0 📌 0

2. downstream context (the fairness or equity implications that a model has when used as a component of a policy/intervention in a specific context).

28.10.2025 00:36 — 👍 0 🔁 0 💬 1 📌 0

1. upstream context (e.g., understanding the role of social and structural determinants of disparities and their impact on selection, measurement, and problem formulation)

28.10.2025 00:36 — 👍 0 🔁 0 💬 1 📌 0

We advocate for an approach that uses interdisciplinary expertise and domain knowledge to ground the analytic approach to model evaluation in both:

28.10.2025 00:36 — 👍 0 🔁 0 💬 1 📌 0

Beyond characterization of modeling implications, we argue that fairness (as well as related concepts such as equity or justice) is best understood not as a property of a model, but rather as a property of a policy or intervention that leverages the model in a specific sociotechnical context.

28.10.2025 00:36 — 👍 1 🔁 0 💬 1 📌 0

3. We provide evaluation methodology for confounding control and conditional independence testing. These methods complement standard disaggregated evaluation to provide insight into why model performance differs across subgroups.

28.10.2025 00:36 — 👍 1 🔁 0 💬 1 📌 0

2. Observing model performance differences thus motivates deeper investigation to understand the causes of distributional differences across subgroups and to disambiguate them from observational biases (e.g., selection bias) and from model estimation error.

28.10.2025 00:36 — 👍 0 🔁 0 💬 1 📌 0

A few concrete practical takeaways:

1. Our results show that if the goal is to accurately model outcomes that may be disparate across subgroups, we should not, in general, expect parity in model performance across subgroups.

28.10.2025 00:36 — 👍 0 🔁 0 💬 1 📌 0
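A minimal sketch of the first takeaway, under assumptions that are not from the paper: the subgroup risk values below are made up, and `expected_brier` is a hypothetical helper. It shows that a predictor that outputs each person's true risk (so it cannot be improved) still has a different expected Brier score in each subgroup when the underlying risks differ.

```python
def expected_brier(true_risks):
    """Expected Brier score of a predictor that outputs each person's true risk."""
    # For an outcome Y ~ Bernoulli(p) and a prediction equal to p,
    # E[(Y - p)^2] = p * (1 - p), the irreducible outcome variance.
    return sum(p * (1 - p) for p in true_risks) / len(true_risks)


# Hypothetical subgroups; the risk values are illustrative assumptions only.
risks_a = [0.05, 0.10, 0.15]  # lower-risk subgroup
risks_b = [0.30, 0.40, 0.50]  # higher-risk subgroup

brier_a = expected_brier(risks_a)
brier_b = expected_brier(risks_b)

# Same (optimal) predictor, different expected error in each subgroup.
print(f"group A: {brier_a:.4f}, group B: {brier_b:.4f}")
```

The gap here comes entirely from the difference in outcome distributions, not from estimation error, which is why a performance difference on its own does not say where the disparity originates.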

3. How do model performance and fairness properties change under different assumptions on the data generating process (reflecting different causal processes and structural causes of disparity) and mechanisms of selection bias (rendering data misrepresentative of the ideal target population)?

28.10.2025 00:36 — 👍 0 🔁 0 💬 1 📌 0

2. When and why do models that explicitly use subgroup membership information for prediction behave differently from those that do not?

28.10.2025 00:36 — 👍 0 🔁 0 💬 1 📌 0

A few of the key questions that we grappled with in this work included:

1. Why do models that predict outcomes well (even optimally) for all subgroups still exhibit systematic differences in performance across subgroups?

28.10.2025 00:36 — 👍 0 🔁 0 💬 1 📌 0

To summarize, we conducted a deep dive into some of the more challenging conceptual issues when it comes to evaluating machine learning models across subgroups, as is typically done to evaluate fairness or robustness.

28.10.2025 00:36 — 👍 1 🔁 0 💬 1 📌 0

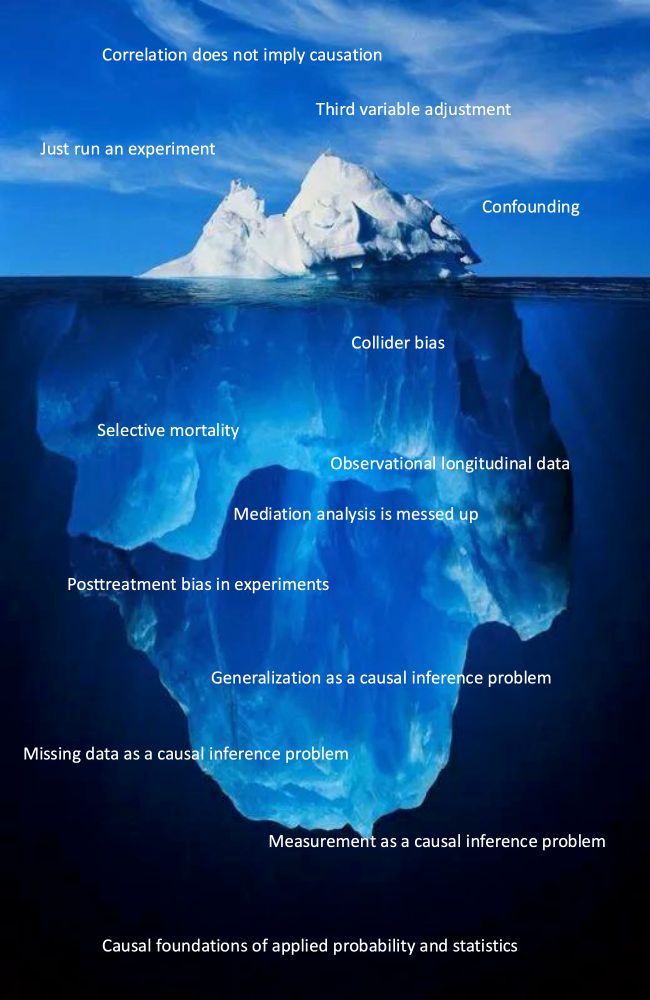

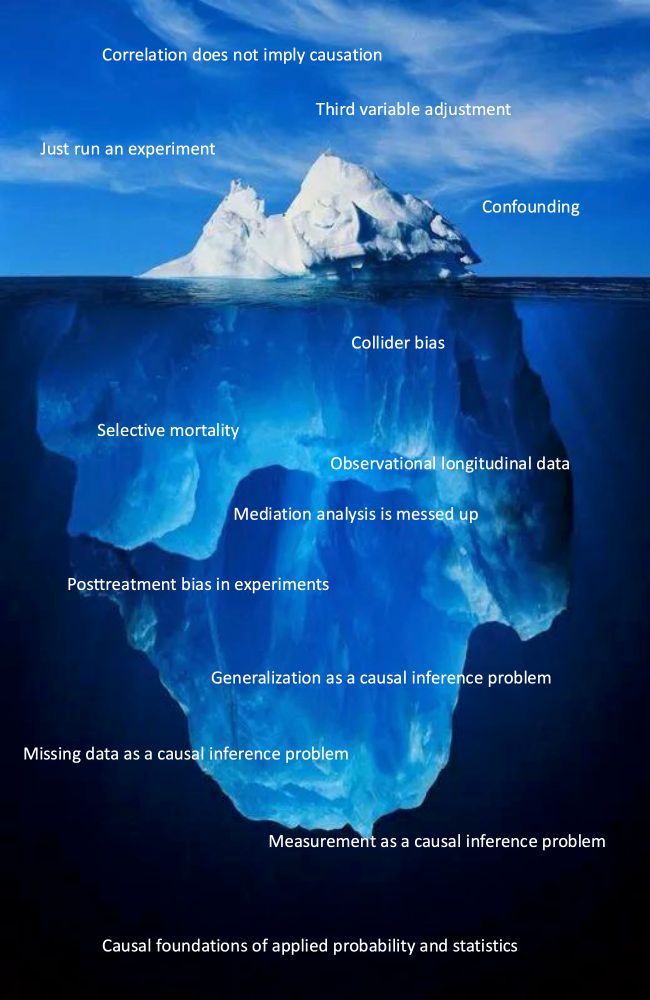

Iceberg meme template. From top to bottom:

Correlation does not imply causation

Third variable adjustment

Just run an experiment

Confounding

Collider bias

Selective mortality

Observational longitudinal data

Mediation analysis is messed up

Posttreatment bias in experiments

Generalization as a causal inference problem

Missing data as a causal inference problem

Measurement as a causal inference problem

Causal foundations of applied probability and statistics

Causal inference iceberg!

What's missing?

26.02.2025 13:40 — 👍 191 🔁 32 💬 45 📌 1

This is (unfortunately) the required style for Nature journals

20.12.2024 15:11 — 👍 3 🔁 0 💬 0 📌 0

Check out our new paper "Tackling Algorithmic Bias and Promoting Transparency in Health Datasets: The STANDING Together Consensus Recommendations" jointly published in NEJM AI and The Lancet Digital Health, led by @jaldmn.bsky.social @xiaoliu.bsky.social

18.12.2024 18:51 — 👍 10 🔁 3 💬 0 📌 0
