After a hiatus, the AI Now Landscape Report is back: Artificial Power examines the fallout from the recent AI hype cycle and maps out another path available to us - one that puts the public, not profits, at the center.

Read more here: ainowinstitute.org/publications...

03.06.2025 14:44

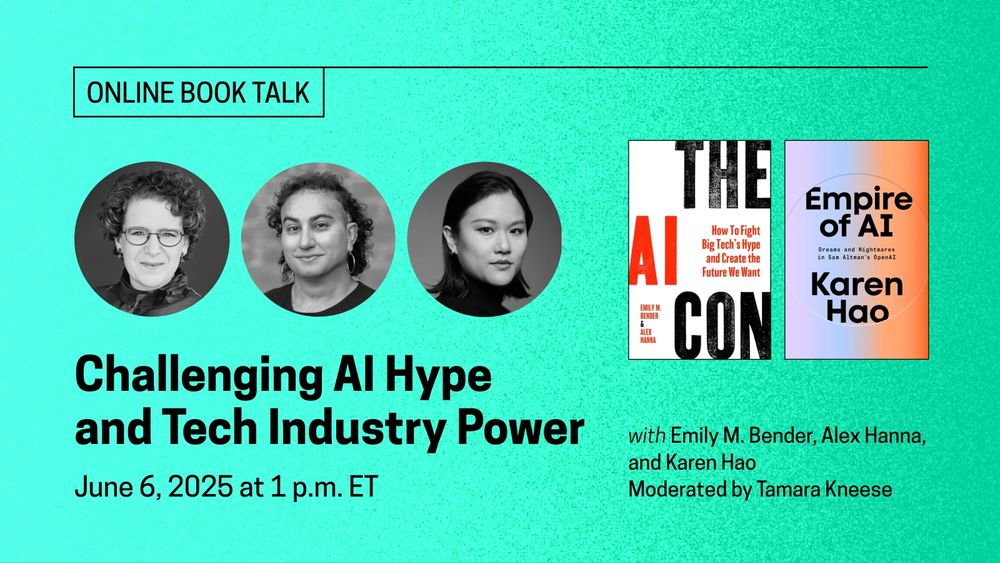

For more, don’t miss this Friday’s conversation on the consequences of AI hype with @karenhao.bsky.social, @emilymbender.bsky.social, & @alexhanna.bsky.social! RSVP and join us June 6 @ 1 pm ET. datasociety.net/events/chall...

02.06.2025 14:24

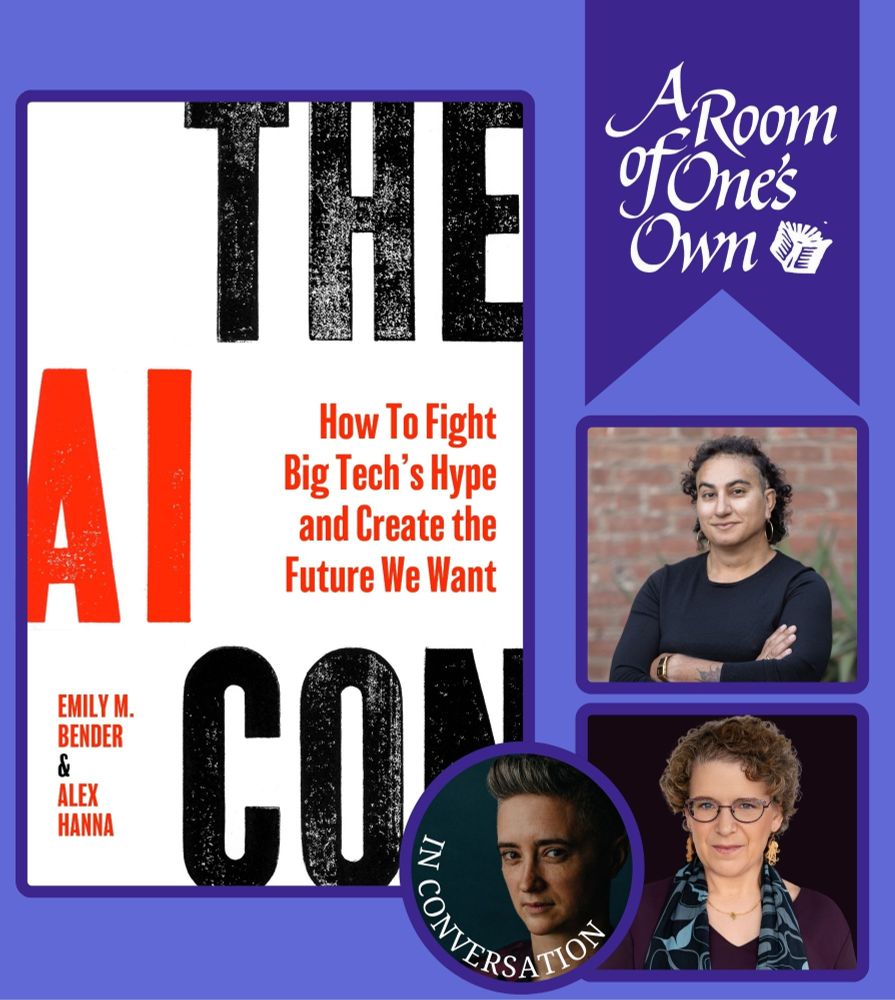

The AI Con by Emily M. Bender and Alex Hanna in Conversation with Emily Mills

Madison! Tonight, @emilymbender.bsky.social and I will be at @roomofonesownbooks.bsky.social, in conversation with the great @millbot.bsky.social about our book! Come through!

roomofonesown.com/event/2025-0...

02.06.2025 13:46

Shoutout to my wonderful collaborators - Mariana Castro, Diego Roman, Cynthia Baeza, and WI teachers - whose work on translanguaging theory and bilingual pedagogy lays the foundation needed to begin envisioning ethical uses of multilingual and multicultural AI in classrooms.

02.04.2025 20:33

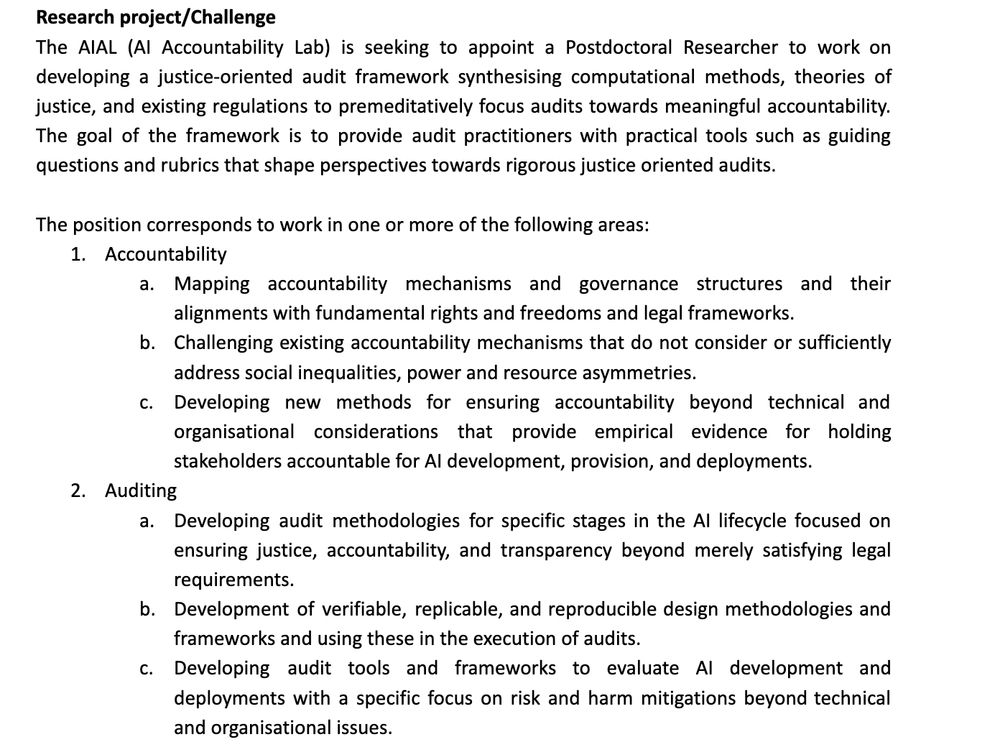

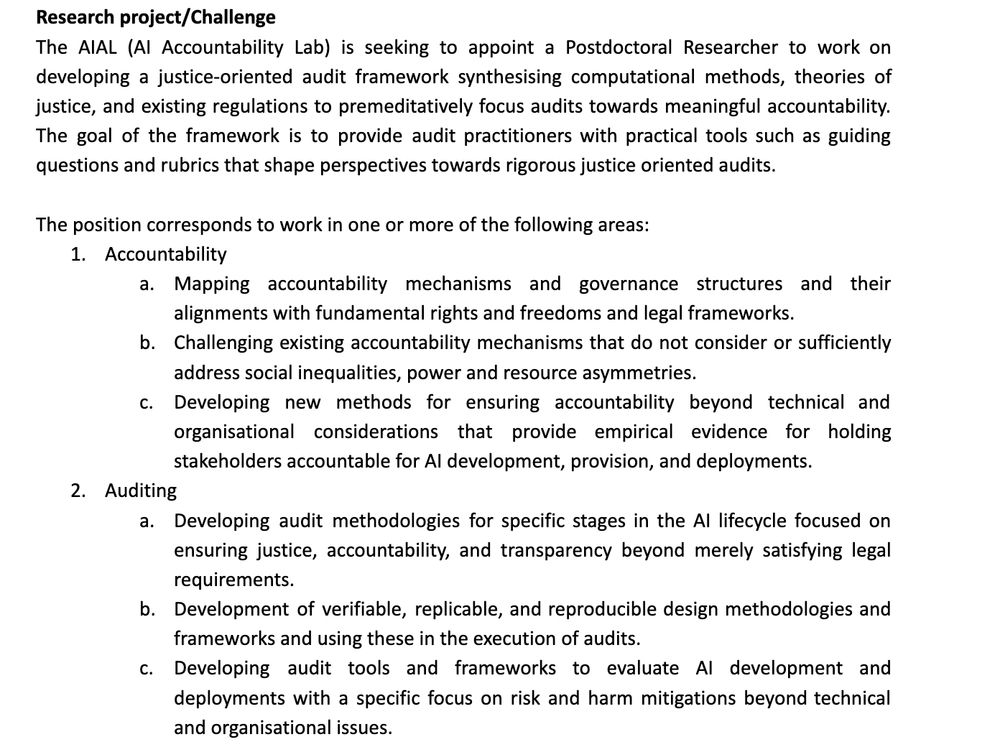

Research project/Challenge

The AIAL (AI Accountability Lab) is seeking to appoint a Postdoctoral Researcher to work on developing a justice-oriented audit framework synthesising computational methods, theories of justice, and existing regulations to premeditatively focus audits towards meaningful accountability. The goal of the framework is to provide audit practitioners with practical tools, such as guiding questions and rubrics, that shape perspectives towards rigorous justice-oriented audits.

The position corresponds to work in one or more of the following areas:

Accountability

Mapping accountability mechanisms and governance structures and their alignment with fundamental rights and freedoms and with legal frameworks.

Challenging existing accountability mechanisms that do not consider or sufficiently address social inequalities and power and resource asymmetries.

Developing new methods for ensuring accountability, beyond technical and organisational considerations, that provide empirical evidence for holding stakeholders accountable for AI development, provision, and deployment.

Auditing

Developing audit methodologies for specific stages in the AI lifecycle focused on ensuring justice, accountability, and transparency beyond merely satisfying legal requirements.

Developing verifiable, replicable, and reproducible design methodologies and frameworks, and using them in the execution of audits.

Developing audit tools and frameworks to evaluate AI development and deployments, with a specific focus on risk and harm mitigation beyond technical and organisational issues.

We are seeking to appoint a Postdoctoral Researcher to work on developing a justice-oriented audit framework synthesising computational methods, theories of justice, and existing regulations to premeditatively focus audits towards meaningful accountability. www.adaptcentre.ie/careers/post...

02.04.2025 15:26

Scientific Consensus on AI Bias

We might be moving into a new era of AI "DOGE-ness," but the science of AI hasn't changed. AI can still perpetuate bias and amplify discrimination, and we can't executive-order that away no matter how hard we try. Join the statement by hundreds of scientists here.

www.aibiasconsensus.org

21.03.2025 13:30

Just shared this with the data visualization class I’m teaching this semester — it’s a really great demonstration of how little things change the entire visualization and the narratives we can tell with it!

14.02.2025 14:05

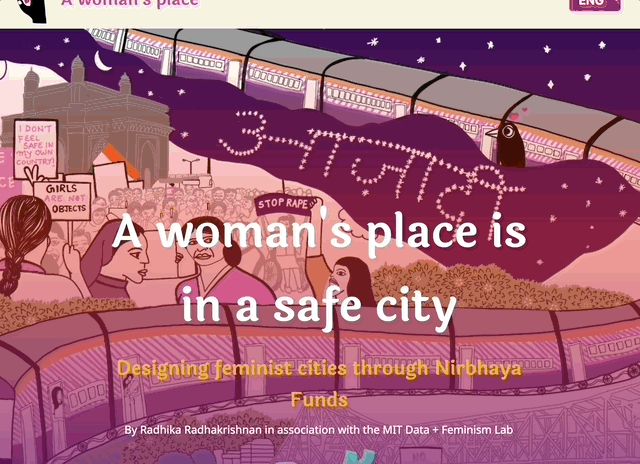

“A woman’s place is in a safe city: Designing feminist cities through Nirbhaya Funds” by Radhika Radhakrishnan in association with the MIT Data+Feminism Lab. In the backdrop, a train runs between Mumbai and Kolkata, with protesters holding up placards demanding justice and safety for cis-women, trans, and queer persons in both cities.

We are excited to launch "A Woman's Place is in a Safe City," a data story on the use of #NirbhayaFunds for digital surveillance in India, in collaboration with @mitdusp.bsky.social Data+Feminism Lab, POV Mumbai @thesafecityapp.bsky.social & 3 anonymised Kolkata-based NGOs. bit.ly/3EvqV3R 🧵Read on:

14.02.2025 13:57

Computery guy. HCI, ML, AI, privacy, security, fairness, political economy of tech. He/him. London/Oxford. Football alt at nofoolingreu.bsky.social

Info sci prof @ Drexel, trying to keep the machines (esp. RecSys & IR) from learning bigotry and discrimination. ADHDS9. Usually self-propelled. Opinions those of the Vulcan Science Academy. 🐰x2.

🏡 https://md.ekstrandom.net

🧪 https://inertial.science

Associate Professor @Harvard SEAS. Information theorist, but only asymptotically.

Associate Professor, CMU. Researcher, Google. Evaluation and design of information retrieval and recommendation systems, including their societal impacts.

Postdoc researcher @MicrosoftResearch, previously @TUDelft.

Interested in the intricacies of AI production and their social and political-economic impacts; the gap between policies and practices (AI fairness, explainability, transparency, assessments)

researcher of learning, emotion, politics, and design within movements and communities. trying to move with care through these institutions. personal account.

Promoting Cognitive Science as a discipline and fostering scientific interchange among researchers in various areas.

🌐 https://cognitivesciencesociety.org

Internet person. Community tech, careful innovation, socially progressive tech policy.

https://www.careful.industries

https://buttondown.email/justenoughinternet

DMs don't work but hello [at] careful.industries will find me eventually

Trinity College Dublin’s Artificial Intelligence Accountability Lab (https://aial.ie/) was founded & is led by Dr Abeba Birhane. The lab studies AI technologies & their downstream societal impact, with the aim of fostering a greater ecology of AI accountability.

The William T. Grant Foundation supports research on reducing inequality in youth outcomes and improving the use of research evidence in decisions that affect young people in the United States.

A podcast that's enthusiastic about linguistics! By @gretchenmcc.bsky.social and @superlinguo.bsky.social

"Fascinating" -NYT

"Joyously nerdy" -Buzzfeed

lingthusiasm.com

Not sure where to start? Try our silly personality quiz: bit.ly/lingthusiasmquiz

Design-based education researcher. Embodied cognition in math learning, sensory neurodiversity, & multimodal education technologies. Postdoc at UW-Madison. She/her

Associate Prof & Director of MILES Lab at @mcgillu.ca

Interested in motivational support & motivational climate, riding my bike, and books

kristyarobinson.com

❤️ Professor Insider-Outsider 🖤 Writer 💚Dis/Embodied Ethnographer 🪷 Complex Trauma Clinical Therapist 🧘🏽♀️ Founder: www.venusevanswinters.com

Doctoral student, Quantitative Methods in Educational Psychology at UW-Madison | Grounding brisks to explain our gaze skyward.

Asst Prof at @UWMadEducation studying mental health, race, and disability.

Open University professor emerita, making the shift over to podcasting, audio drama and fiction.

Learning Scientist of Higher Ed // Asst Prof at UW-Madison // Educator. Consultant. Freedom Dreamer.