YouTube video by Tiera Tanksley

Howard University AI Panel

I need everyone, esp anyone working in education or tech (but really everyone) to WATCH THIS CLIP of @drtanksley.bsky.social discussing the technologies infiltrating our schools & psyches and how she is addressing it with our young people. youtu.be/5mtcSL4S3HQ

22.11.2025 13:43 — 👍 72 🔁 40 💬 3 📌 3

Good idea! Surely some opportunity for fieldwork...

C&E claimed that Hawkeye redistributed epistemological privilege, also happening with CricViz models but challenging players/coaches rather than umpires. But it's also interesting to consider how these models/data are being put to work in public

22.11.2025 14:10 — 👍 1 🔁 0 💬 0 📌 0

Agreed, although it’s wider than Wisden types and the wider internet nostalgia boom.

CricViz now have an “expected leave” model which is being used to criticise England’s batting, based on how many times similar balls are played or left in previous games.

22.11.2025 13:00 — 👍 0 🔁 0 💬 1 📌 0

Also some very funny replies

21.11.2025 23:22 — 👍 1 🔁 0 💬 0 📌 0

steve smith, you scored 17 on your specialist subject, "getting hit by jofra archer"

21.11.2025 07:52 — 👍 269 🔁 75 💬 7 📌 9

Day 3 Sheffield Uni UCU strike against threat of extensive redundancies. 400 academic staff gone. Nearly 100 more now confirmed. They want another £10m staff saving this year, despite being in a strong position financially. #SheffieldUCU @sheffielducu.bsky.social www.ucu.org.uk/article/1423...

19.11.2025 10:33 — 👍 39 🔁 19 💬 0 📌 1

A UCU placard that reads “too burnt out to think of a slogan” in front of a tower block and another placard reading “trade unionists for trans rights”

Good rally today with @sheffielducu.bsky.social and Sheffield Hallam

17.11.2025 19:23 — 👍 2 🔁 0 💬 0 📌 0

Sign the Petition

SAVE AMERICAN STUDIES TEACHING AT THE UNIVERSITY OF NOTTINGHAM

RED ALERT: the University of Nottingham is threatening to close its Department of American Studies, putting all staff at risk of redundancy, and ending any American specialist knowledge in the Faculty of Arts.

Sign the petition here to save jobs:

www.change.org/p/save-ameri...

11.11.2025 20:21 — 👍 18 🔁 12 💬 1 📌 3

crazy

IG em_clarkson

14.11.2025 02:54 — 👍 10996 🔁 2474 💬 154 📌 232

A large stage with two large screens showing the words "I'm back by popular demand" in large red capital letters on a light blue background. Pusha T and Malice from Clipse are standing in front, with a large crowd in the foreground.

A large stage with two large screens showing a young black woman wearing a cap sleeved red and white top, with her right arm held out in front of her. Pusha T and Malice from Clipse are standing in front, with a large crowd in the foreground. Pusha T is holding a microphone to his mouth.

Monday night's elite-level Clipse gig in Manchester. Almost all the new album and a back cat of certified bangers.

12.11.2025 12:56 — 👍 1 🔁 0 💬 0 📌 0

That's it for today, come back tomorrow when our topic is 'famous geographers'.

05.11.2025 20:32 — 👍 4 🔁 0 💬 1 📌 0

score one for "the pundits are projecting their own preoccupations onto the electorate"

05.11.2025 01:15 — 👍 5718 🔁 818 💬 20 📌 3

Just putting this here as another IPXX example. A useful blueprint or epistemic envy? bsky.app/profile/adam...

04.11.2025 09:41 — 👍 1 🔁 0 💬 0 📌 0

Aerial image of a roundabout, a small, grassy park area, and a roadside hotel. Credit: Shutterstock

Today I did something I should have done months ago. I went to an 'asylum seeker hotel protest' to speak to all involved. Protestors, live stream vlogger, police, counter-protestors. My main take away is that anyone wishing to understand this movement should do the same. Chat. Listen. Reflections🧵

01.11.2025 21:02 — 👍 2 🔁 1 💬 1 📌 0

I'm excited to finally have a preprint of this paper up, a few years in the making.

In it we argue that industry-driven manipulation of social media research is well underway and that norms and institutions in the field are ill-prepared to resist tech's influence.

arxiv.org/abs/2510.19894

24.10.2025 00:12 — 👍 148 🔁 56 💬 4 📌 11

Training and Events Calendar – White Rose DTP

Early train->Skegness (with some keen sunseekers ☀️ ) en route to York for the White Rose DTP Welcome Event

Reconnecting with our fantastic students, hearing their hopes & fears for the coming year and unveiling our Digital Tech, Communication & AI training programme wrdtp.ac.uk/training/tra... 🤓

29.10.2025 06:58 — 👍 0 🔁 0 💬 0 📌 0

Glad to hear it.

Being online has taught me (the hard way) that electronic media’s affordance of instant response is never useful, often exacerbating existing problems or creating entirely new ones.

27.10.2025 22:33 — 👍 1 🔁 0 💬 1 📌 0

"We focus on Israel during 2023, a period marked by intense political polarization...We find that exposure to toxic behavior by ingroup members is the primary driver of contagious toxicity, compared to smaller, less consistent associations with outgroup toxicity."

22.10.2025 17:36 — 👍 63 🔁 14 💬 1 📌 1

3 days in Milan in summer and I was like “where do I sign”

18.10.2025 19:36 — 👍 2 🔁 0 💬 0 📌 0

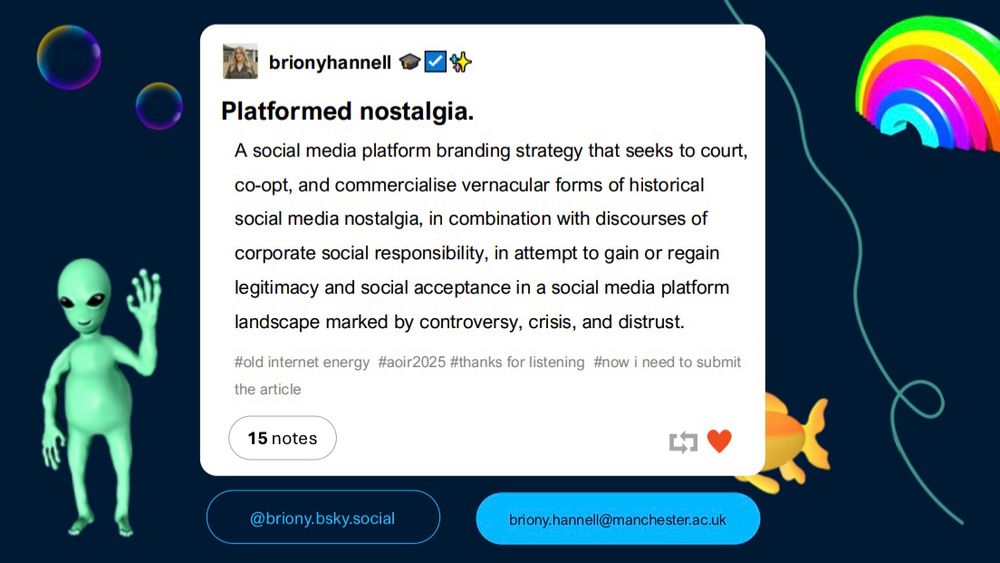

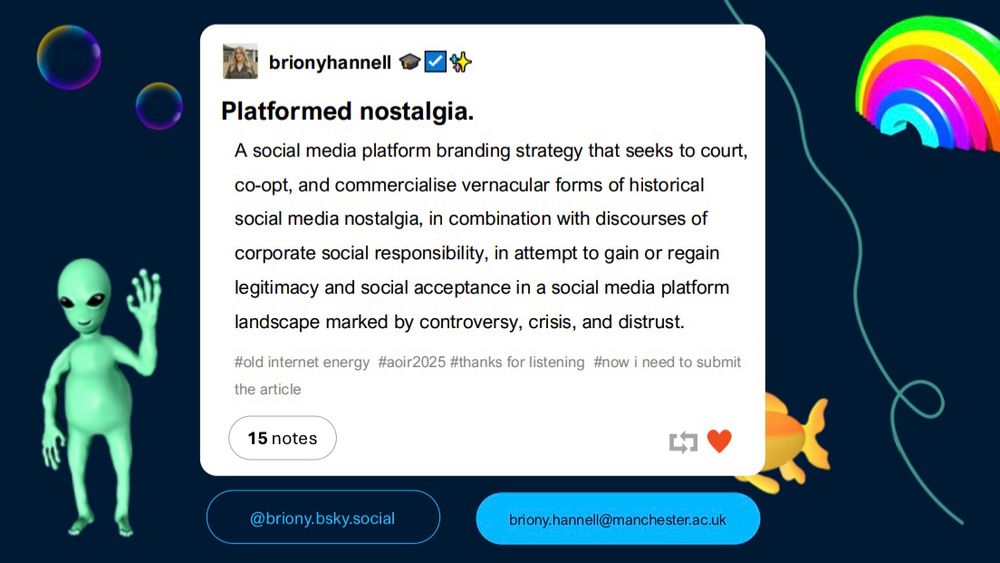

Presentation slide defining platformed nostalgia as a social media platform branding strategy that commercialises historical social media nostalgia in an attempt to (re)gain legitimacy and social acceptance in a landscape marked by controversy, crisis, and distrust.

At #aoir2025 I’m presenting on what I term “platformed nostalgia”, which I explore via an analysis of Automattic-era Tumblr’s efforts to commercialise historical social media nostalgia in its marketing (particularly in response to the X-odus). I’m in the Memes panel at 9am tomorrow. Sneak peek 👀

16.10.2025 19:51 — 👍 12 🔁 3 💬 0 📌 0

Trailer for Synthetic Sincerity, which I wrote, directed by Marc Isaacs, which will have its world premiere in competition at IDFA in November

16.10.2025 07:02 — 👍 1 🔁 1 💬 0 📌 0

Great evening of knowledge, wordplay and truths from Chuck D at Leeds International Festival.

“They treating the masses like them asses” 🫡

#LIFI25

14.10.2025 21:27 — 👍 0 🔁 0 💬 0 📌 0

Twice as many full stops in the top post as in the whole of his last book.

09.10.2025 21:45 — 👍 2 🔁 0 💬 1 📌 0

Entrance of Graz train station with Graz Hauptbahnhof written in capital letters on a light coloured stone building against clear blue sky

On way home from superb Cultural Climate Models meeting in Graz with @davidhiggins.bsky.social @caroschwegler.bsky.social et al. Looking forward to seeing in print the new approach to modelling from this truly interdisciplinary international collab

gewi.uni-graz.at/en/unsere-fo...

08.10.2025 10:34 — 👍 6 🔁 1 💬 0 📌 1

100% pure nuance.

https://www.theguardian.com/profile/jonathan-liew

Autistic

I make spreadsheets that help people navigate the FPL schedule - on a run of six consecutive top 10k finishes - Partnered with @FFH_HQ

Assistant Professor in Computational Social Science at University of Amsterdam

Studying the intersection of AI, social media, and politics.

Polarization, misinformation, radicalization, digital platforms, social complexity.

Senior Lecturer Sociology & Co-Director Migration Research Group, University of Sheffield, UK.

www.lucymayblin.com

www.sheffield.ac.uk/migration-research-group

www.Channelcrossings.org

Open access peer-reviewed journal connecting debates on emerging Big Data practices & how they reconfigure academic, social, industry, & government relations.

I'm not a geographer but I'd like to think like geographers | Ambivalence & ambiguity is my intellectual gravity | Doing #climate social science at NIES, Japan | Views my own | https://researchmap.jp/shinichiro.asayama/?lang=en

hi this is @annierau.bsky.social! my DMs are open

How the administration is breaking the government, and what that means for all of us. All of our content is free to republish and remix. https://unbreaking.org/

Posting random classic STS papers or books. What are the classics, you ask?? Let us know your suggestions or thoughts in the replies!

Department of Science and Technology Studies

60 year old nerdy guy with a job researching AI and the science of online life at the University of Southampton, and a side hustle in standup comedy.

Green Party Leader (England & Wales)

London Assembly Member.

Chair of London's Fire Committee.

🏳️‍🌈

https://podcasts.apple.com/podcast/id1837201724?i=1000724643828

Princeton Sociology Prof; ethnographer of NASA; tech policy; digitalSTS; ethics and critical technical practice; mobile Linux. Conscientious objector to personal data economy.

https://janet.vertesi.com

https://www.optoutproject.net

@cyberlyra@hachyderm.io

Senior Lecturer in Data, AI, & Society.

AI & In/equality Lead for Centre for Machine Intelligence.

Social, cultural & digital policy.

Author of Understanding Well-being Data: https://link.springer.com/book/10.1007/978-3-030-72937-0

Sisseton-Wahpeton Oyate citizen & Professor of American Indian Studies, University of Minnesota, Summer internship for INdigenous peoples in Genomics (SING) Canada. MSP/LAX/YEG.

https://kimtallbear.substack.com; https://www.youtube.com/@ktallbear

UMass Amherst, Initiative for Digital Public Infrastructure, Global Voices, Berkman Klein Center. Formerly Center for Civic Media, MIT Media Lab.

climate *zeitgeist* reporter for @washingtonpost.com. DM for Signal.

▪︎ Reading, writing & thinking about the moral foundation of scholarship

▪︎ Author of Doing Good Social Science: http://bit.ly/3EgFA2z

▪︎ More about my work: immersiveresearch.co.uk

▪︎ DM for speaking/workshop requests