#acl2025 Did anyone get a good quote of Phil Resnik's last comment?

Context: he and some (all?) of the panelists agree that the field needs more deep, careful research on smaller models to do better science. Everyone is frustrated by the impossibility of large-scale pretraining experiments.

28.07.2025 15:24 — 👍 7 🔁 1 💬 1 📌 0

@kennyjoseph.bsky.social, Kenny, check this thread out.

24.07.2025 11:38 — 👍 2 🔁 0 💬 0 📌 0

aclanthology.org/2023.emnlp-m... For active learning, I really liked this paper: it uses LLMs as annotators for knowledge distillation into small LMs.

24.07.2025 09:46 — 👍 1 🔁 0 💬 0 📌 0

What are your favorite recent papers on using LMs for annotation (especially in a loop with human annotators), synthetic data for task-specific prediction, active learning, and similar?

Looking for practical methods for settings where human annotations are costly.

A few examples in thread ↴

23.07.2025 08:10 — 👍 74 🔁 23 💬 14 📌 3

This is so mean!!!

21.07.2025 06:51 — 👍 0 🔁 0 💬 0 📌 0

Check out our take on Chain-of-Thought.

I really like this paper as a survey of the current literature on what CoT is, but more importantly, on what it's not.

It also serves as a cautionary tale against the (apparently quite common) misuse of CoT as an interpretability method.

01.07.2025 17:45 — 👍 14 🔁 4 💬 1 📌 1

These are just the battle scars of doing data science and ML engineering in industry!!

01.07.2025 17:28 — 👍 0 🔁 0 💬 0 📌 0


The Georgia Tech School of Interactive Computing is hosting the 2025 Summit on Responsible Computing, AI, and Society, October 27-29, 2025.

Overview

The Summit on Responsible Computing, AI, and Society aims to explore the future of computing for health, sustainability, human-centered AI, and policy. The summit will bring together luminary researchers in computing for health, sustainability, human-centered AI, and tech policy to lay out the frontiers of these critical fields, and to plot out how they must evolve.

Hi everyone. I'm excited to announce that I will be organizing the 2nd Summit on Responsible Computing, AI, and Society (rcais.github.io), October 27-29, 2025.

We will explore the future of computing for health, sustainability, human-centered AI, and policy.

Please consider submitting a 1-page abstract.

01.07.2025 16:41 — 👍 17 🔁 4 💬 1 📌 2

Send him a note of appreciation 😊

27.06.2025 10:50 — 👍 0 🔁 0 💬 0 📌 0

To @pcarragher.bsky.social, @lleibm.bsky.social, @jmendelsohn2.bsky.social, Evan, Catherine, and others, for some really fruitful and nice convos. Hope to see you all soon.

26.06.2025 21:45 — 👍 2 🔁 0 💬 0 📌 0

A huge shoutout to the organizing team, and to the web chair

@andersgiovanni.com

for updating the schedule in such an easy-to-follow manner. Hope you get some well-deserved rest (as an ex-web chair, I know the pain).

26.06.2025 21:44 — 👍 2 🔁 0 💬 2 📌 0

Measuring Dimensions of Self-Presentation in Twitter Bios and their Links to Misinformation Sharing

So ICWSM concluded today, and it was a blast. It was a great honor to attend @icwsm.bsky.social in Copenhagen and present my work with @kennyjoseph.bsky.social and other colleagues. The paper link is here:

ojs.aaai.org/index.php/IC...

26.06.2025 21:39 — 👍 9 🔁 2 💬 1 📌 0

This is great! The idea is somewhat obvious (good!), and I'm sure many have toyed with the connection to learning-to-rank. However, no work had developed it. This should be relevant for constructing valid PIs from just preferential feedback. openreview.net/pdf?id=ENJd3...

26.06.2025 11:17 — 👍 2 🔁 1 💬 0 📌 0

Me too, we were in the same session :) (Session 8)

26.06.2025 09:36 — 👍 0 🔁 0 💬 1 📌 0

🚨 New preprint! 🚨

Phase transitions! We love to see them during LM training. Syntactic attention structure, induction heads, grokking; they seem to suggest the model has learned a discrete, interpretable concept. Unfortunately, they’re pretty rare—or are they?

24.06.2025 18:29 — 👍 49 🔁 10 💬 3 📌 0

Generative language systems are everywhere, and many of them stereotype, demean, or erase particular social groups.

16.06.2025 21:49 — 👍 9 🔁 2 💬 1 📌 0

Alright, people, let's be honest: GenAI systems are everywhere, and figuring out whether they're any good is a total mess. Should we use them? Where? How? Do they need a total overhaul?

(1/6)

15.06.2025 00:20 — 👍 33 🔁 11 💬 1 📌 0

🧵 1/ Social media is full of hate. Can artificial intelligence help us detect it…

⚖️ without discriminating,

🚫 without reinforcing stereotypes,

🔁 and without learning to hate?

That is the big question of my thesis.

👇 I'll tell you about it in this #HiloTesis (thesis thread) @crueuniversidades.bsky.social @filarramendi.bsky.social

10.06.2025 09:20 — 👍 15 🔁 9 💬 1 📌 1

Amid all the recent excitement about RL, with lots of cool work and results, here is a reminder that RL with a reverse-KL regularizer to the base model cannot learn any new skills that were not already present in the base model. It can only amplify weak skills.

👇

27.05.2025 17:40 — 👍 8 🔁 1 💬 2 📌 0
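The reminder above can be illustrated with a standard result about KL-regularized reward maximization (a sketch under stated assumptions, not necessarily the author's own argument). Assume a fixed prompt $x$, a finite set of outputs $y$, a reward $r(x, y)$, a base model $\pi_{\mathrm{ref}}$, and a coefficient $\beta > 0$. The exact maximizer of the reverse-KL-regularized objective is the base model reweighted by $\exp(r/\beta)$, so any output the base model gives zero probability keeps zero probability.

```latex
% Objective: expected reward minus reverse KL to the base model (beta > 0)
\max_{\pi}\; \mathbb{E}_{y \sim \pi(\cdot \mid x)}\big[r(x, y)\big]
  \;-\; \beta\, \mathrm{KL}\big(\pi(\cdot \mid x)\,\|\,\pi_{\mathrm{ref}}(\cdot \mid x)\big)

% Closed-form maximizer: reweight the base model by exp(r / beta) and renormalize
\pi^{*}(y \mid x) = \frac{1}{Z(x)}\, \pi_{\mathrm{ref}}(y \mid x)\, \exp\!\big(r(x, y)/\beta\big),
\qquad Z(x) = \sum_{y} \pi_{\mathrm{ref}}(y \mid x)\, \exp\!\big(r(x, y)/\beta\big)

% Consequence: support cannot grow
\pi_{\mathrm{ref}}(y \mid x) = 0 \;\Longrightarrow\; \pi^{*}(y \mid x) = 0
```

This describes only the idealized optimum of the objective. It says nothing about what a practical optimizer reaches, so treat it as intuition for the post's claim rather than a proof about any specific training run.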

My path into AI

How I got here. Building a career brick by brick over 8 years.


The sort of small wins that accumulate into a real career in AI.

When I started grad school, AI profs didn't have space for me in their groups, and when I finished, I had no papers at NeurIPS/ICLR/ICML; yet the process can still work.

www.interconnects.ai/p/my-path-in...

14.05.2025 14:29 — 👍 29 🔁 6 💬 1 📌 0

I posted this on LinkedIn too and it has over 600 reactions there, with the caveat that I don't know how many are from bots.

12.05.2025 20:03 — 👍 0 🔁 1 💬 0 📌 0

Out of all the mountains of work on "debiasing" word2vec, BERT, and LLMs, what anecdotes or evidence do we have that debiasing techniques have *actually been used in practice*, in industry or research?

(not referring to "cultural alignment" techniques)

(bonus if used and *to good effect*)

05.05.2025 21:17 — 👍 44 🔁 7 💬 9 📌 0

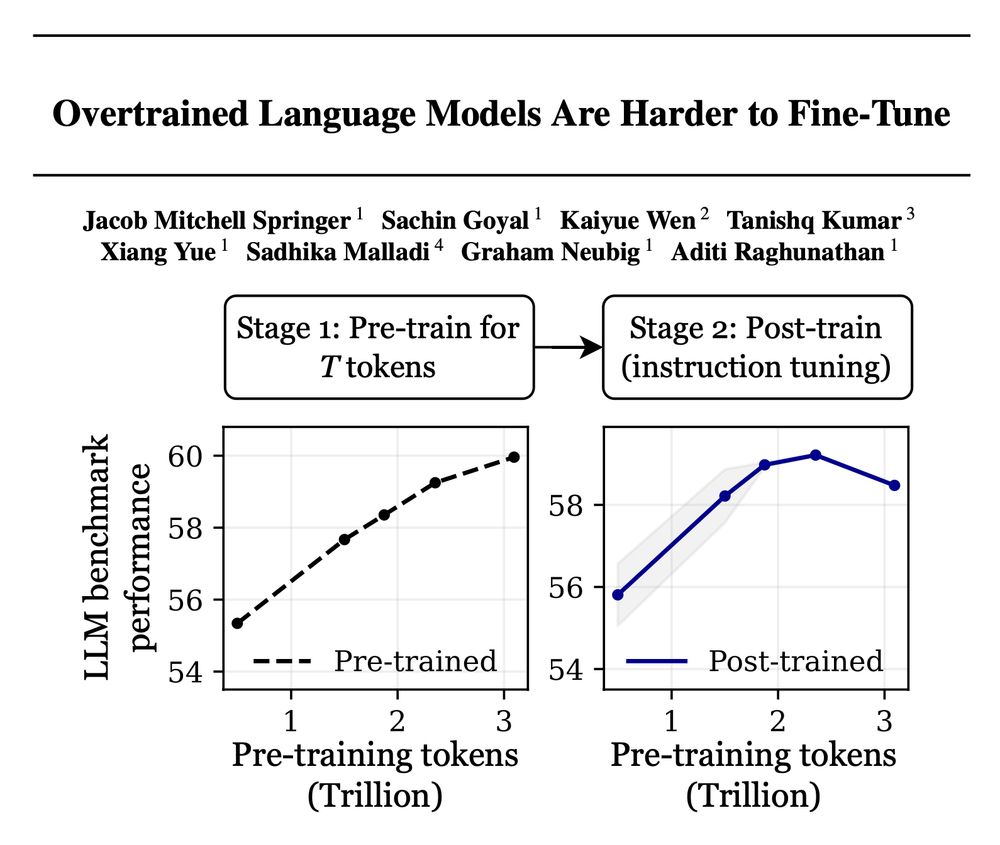

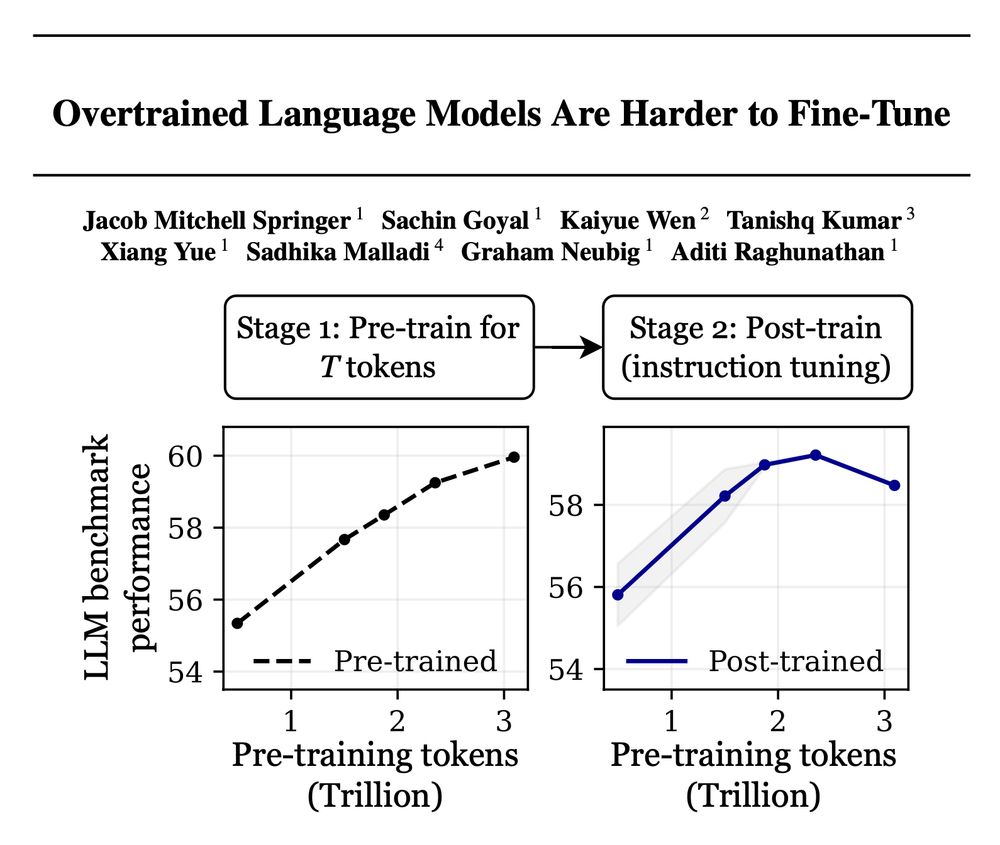

Training with more data = better LLMs, right? 🚨

False! Scaling language models by adding more pre-training data can decrease your performance after post-training!

Introducing "catastrophic overtraining." 🥁🧵👇

arxiv.org/abs/2503.19206

1/10

26.03.2025 18:35 — 👍 34 🔁 14 💬 1 📌 1

US tech firms (IME) rely heavily on graduates of higher ed in general, and of US higher ed in particular...

And yet I've not heard much noise from the Pichais, Nadellas, Zuckerbergs, Cooks, Benioffs, Sus, Jassys, Huangs, etc. about attacks on higher education...

Am I missing it or does it not exist?

13.03.2025 13:53 — 👍 114 🔁 14 💬 12 📌 1

Not a bad turnout for a Friday afternoon

07.03.2025 17:47 — 👍 86 🔁 8 💬 1 📌 0

Measuring Faithfulness of Chains of Thought by Unlearning Reasoning Steps

When prompted to think step-by-step, language models (LMs) produce a chain of thought (CoT), a sequence of reasoning steps that the model supposedly used to produce its prediction. However, despite mu...

🚨🚨 New preprint 🚨🚨

Ever wonder whether verbalized CoTs correspond to the internal reasoning process of the model?

We propose a novel parametric faithfulness approach, which erases information contained in CoT steps from the model parameters to assess CoT faithfulness.

arxiv.org/abs/2502.14829

21.02.2025 12:42 — 👍 44 🔁 13 💬 2 📌 1

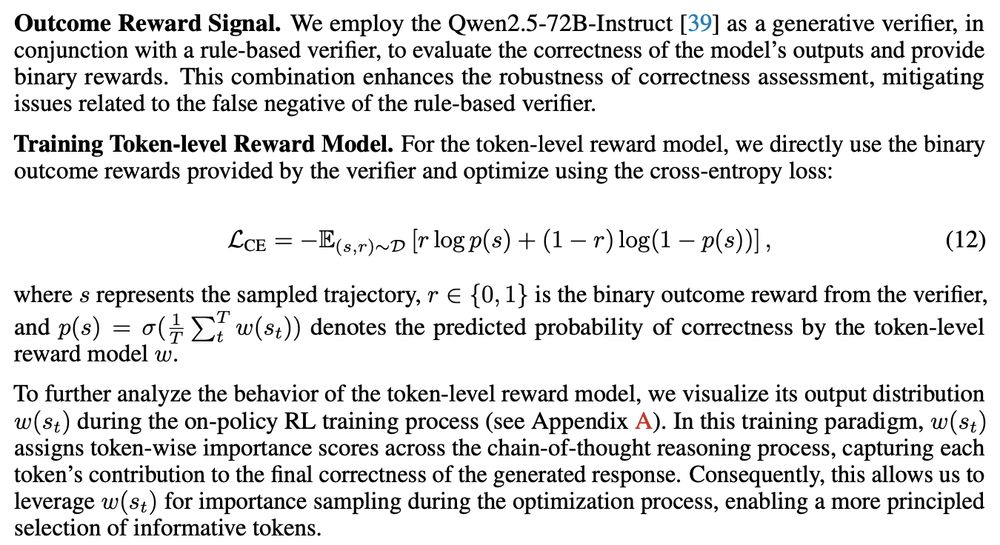

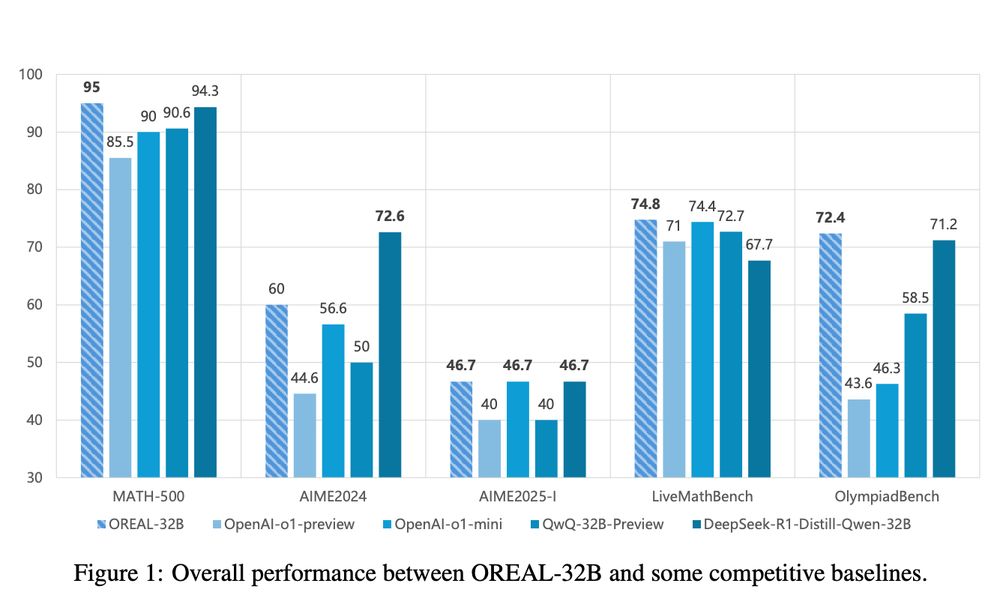

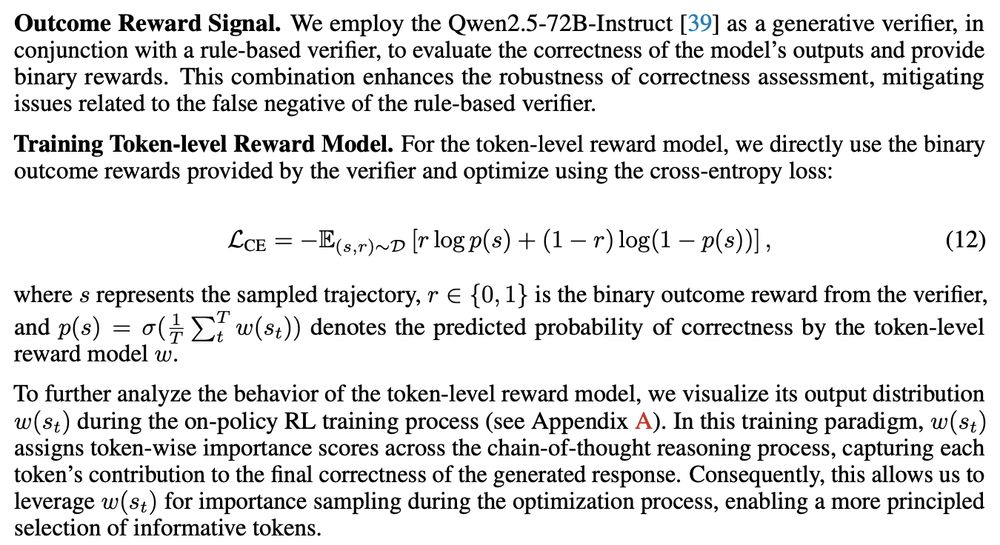

It's rare these days for a paper to build on the original "outcome reward model" literature rather than just fitting a Bradley-Terry model on right/wrong labels.

Nature is healing.

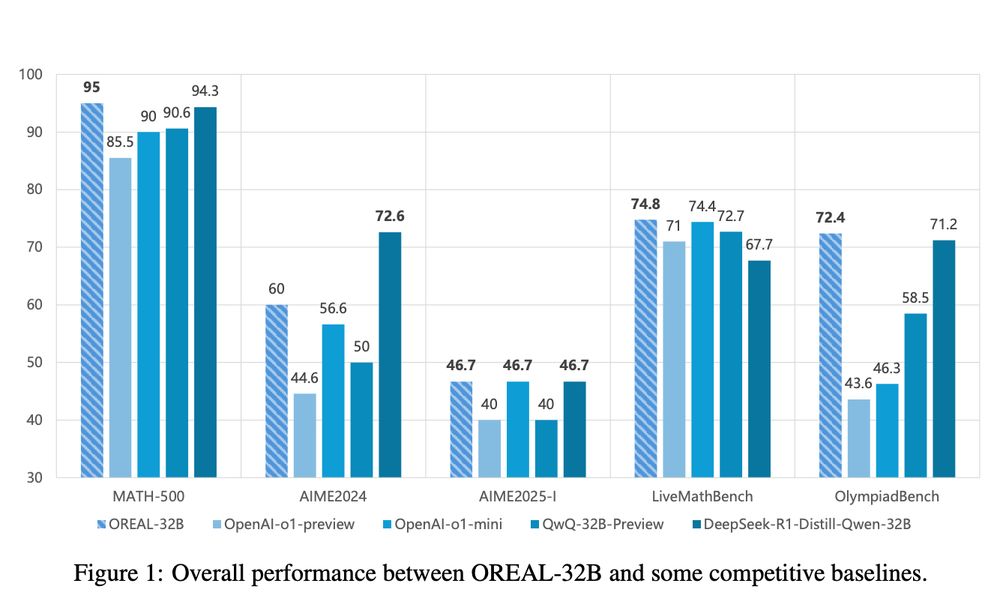

Exploring the Limit of Outcome Reward for Learning Mathematical Reasoning

Lyu et al

arxiv.org/abs/2502.06781

15.02.2025 15:50 — 👍 14 🔁 4 💬 2 📌 0
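To make the distinction in the post above concrete, here is a minimal sketch of the two training losses. It is our own illustration, not code from Lyu et al., and it assumes a hypothetical reward model that outputs one scalar score per solution. An outcome reward model, roughly in its original sense, is trained pointwise with binary cross-entropy against a correct/incorrect label for each sampled solution. A Bradley-Terry model is trained on pairs and only ever sees score differences.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function mapping a raw score to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def orm_loss(score: float, is_correct: bool) -> float:
    """Pointwise outcome-reward-model loss for one solution.

    Binary cross-entropy of sigmoid(score) against a 0/1 correctness label,
    so sigmoid(score) is pushed toward P(solution is correct).
    """
    p = sigmoid(score)
    return -math.log(p) if is_correct else -math.log(1.0 - p)


def bradley_terry_loss(score_chosen: float, score_rejected: float) -> float:
    """Pairwise Bradley-Terry loss: -log P(chosen beats rejected).

    P = sigmoid(score_chosen - score_rejected), so only the difference
    between the two scores matters, never their absolute level.
    """
    return -math.log(sigmoid(score_chosen - score_rejected))


if __name__ == "__main__":
    # A correct solution scored 2.0 and an incorrect one scored 1.0.
    print(orm_loss(2.0, True), orm_loss(1.0, False))
    print(bradley_terry_loss(2.0, 1.0))
    # Shifting both scores by +10 leaves the Bradley-Terry loss unchanged...
    print(bradley_terry_loss(12.0, 11.0))
    # ...but the pointwise ORM loss heavily penalizes a high score on a wrong answer.
    print(orm_loss(11.0, False))
```

The shift-invariance check at the end shows the design difference: a Bradley-Terry score is only meaningful relative to other scores, while a pointwise ORM score is trained to read as a probability of correctness for a single solution.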

Association for Uncertainty in AI.

Upcoming conference: #uai2025 July 21-25th in Rio de Janeiro, Brazil 🇧🇷 !

https://auai.org/uai2025

Research in NLP (mostly LM interpretability & explainability).

Assistant prof at UMD CS + CLIP.

Previously @ai2.bsky.social @uwnlp.bsky.social

Views my own.

sarahwie.github.io

PhD Candidate @uwcse.bsky.social studying online community governance and moderation, with a focus on Reddit.

AI researcher & teacher at SCAI, ASU. Former President of AAAI & Chair of AAAS Sec T. Here to tweach #AI. YouTube Ch: http://bit.ly/38twrAV Twitter: rao2z

Assistant Professor at the Department of Computer Science, University of Liverpool.

https://lutzoe.github.io/

Associate Professor, CMU. Researcher, Google. Evaluation and design of information retrieval and recommendation systems, including their societal impacts.

School of Engineering and Applied Sciences at the University at Buffalo | #UBSEAS supports big thinking and creative freedom at #UBuffalo, NYS flagship university.

engineering.buffalo.edu

clicklinkin.bio/ubengineering

Assistant Professor @ UChicago CS & DSI

Leading Conceptualization Lab http://conceptualization.ai

Minting new vocabulary to conceptualize generative models.

Assistant Prof of CS at the University of Waterloo, Faculty and Canada CIFAR AI Chair at the Vector Institute. Joining NYU Courant in September 2026. Co-EiC of TMLR. My group is The Salon. Privacy, robustness, machine learning.

http://www.gautamkamath.com

Uses machine learning to study literary imagination, and vice-versa. Likely to share news about AI with sentient space crabs.

Information Sciences and English, UIUC. Distant Horizons (Chicago, 2019). tedunderwood.com

Visiting Professor at TU Graz

Postdoc Complexity Science Hub Vienna &

Postdoc Central European University

https://open.spotify.com/show/6tlDTnZ00diZQM9xVYi8zq

#NetworkFairness #NetworkInequalities

#ScienceOfScience #Poverty #SDGs

Wellesley College Professor | Computer & Data Sciences

PhD candidate at MPI MiS, Lattice and SciencesPo médialab | Computational Social Science, Narratives, NLP, Complex Networks | https://pournaki.com

CS PhD student at CMU.

geometric deep learning | network science | reliability detection

For now aiming to entertain people with my remodeling mistakes.

Research Assistant at the University of Trento in Computational Social Science

Site: https://jordicondom.github.io/

Google Scholar: https://scholar.google.com/citations?user=FmSvyV8AAAAJ&hl=en&authuser=1

Computer science doctorand at Uppsala University, @uuinfolab.bsky.social.

Mining social networks, discovering new music, occasionally playing baseball and softball.

Probably not George Michael, but search engines believe otherwise.

PhD student in Natural Language Processing and Computational Social Science at the University of Edinburgh 🏴