Our new paper in #PNAS (bit.ly/4fcWfma) presents a surprising finding—when words change meaning, older speakers rapidly adopt the new usage; inter-generational differences are often minor.

w/ Michelle Yang, @sivareddyg.bsky.social, @msonderegger.bsky.social and @dallascard.bsky.social 👇 (1/12)

29.07.2025 12:05 —

👍 34

🔁 17

💬 3

📌 2

Age doesn't matter when it comes to picking up new word usages. Pronunciation may sound odd across generations, but not the semantics 👴👵👨👩

29.07.2025 16:52 —

👍 5

🔁 0

💬 0

📌 0

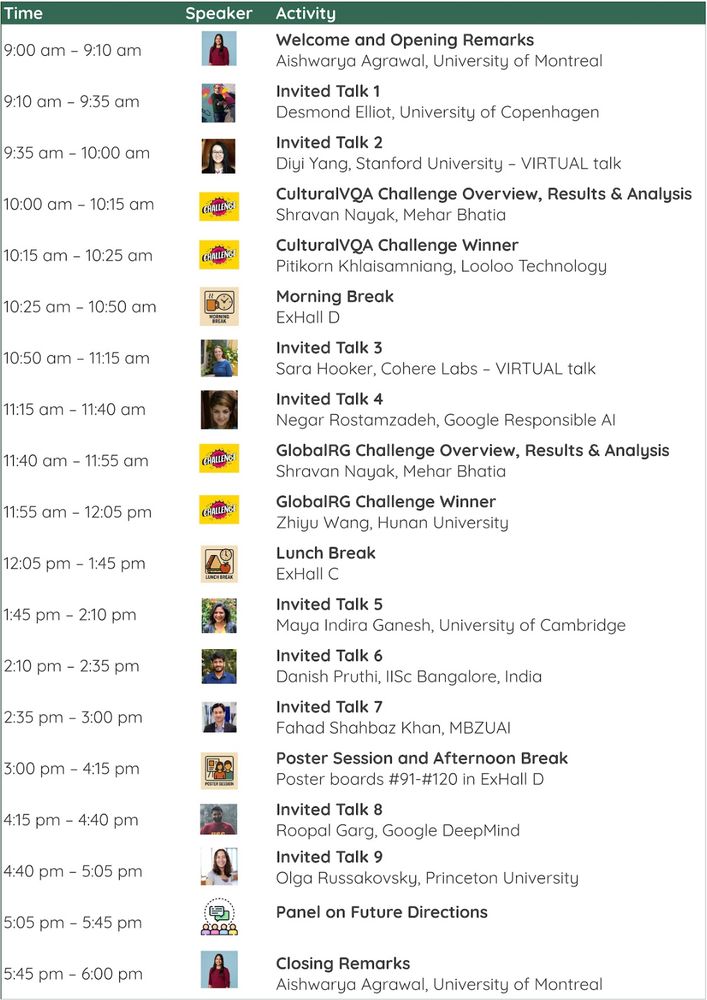

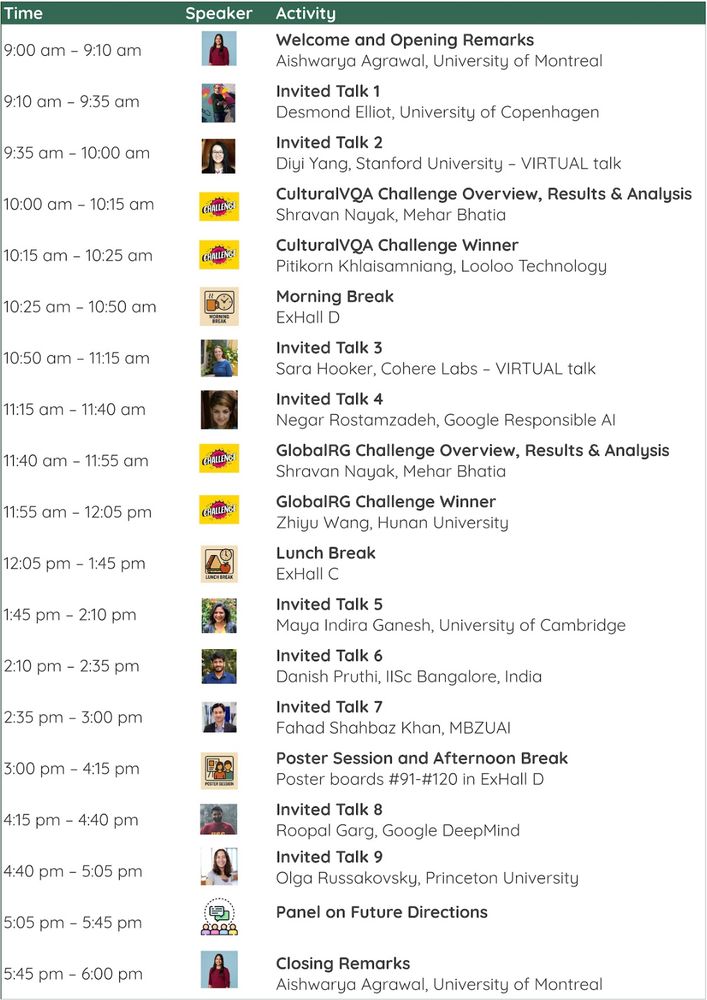

🗓️ Save the date! It's official: The VLMs4All Workshop at #CVPR2025 will be held on June 12th!

Get ready for a full day of speakers, posters, and a panel discussion on making VLMs more geo-diverse and culturally aware 🌐

Check out the schedule below!

06.06.2025 09:19 —

👍 4

🔁 3

💬 0

📌 1

Language Models Largely Exhibit Human-like Constituent Ordering Preferences

Though English sentences are typically inflexible vis-à-vis word order, constituents often show far more variability in ordering. One prominent theory presents the notion that constituent ordering is ...

Ada is an undergrad and will soon be looking for PhDs. Gaurav is a PhD student looking for intellectually stimulating internships/visiting positions. They did most of the work without much of my help. Highly recommend them. Please reach out to them if you have any positions.

01.05.2025 15:14 —

👍 6

🔁 2

💬 1

📌 0

Humans have a tendency to move heavier constituents to the end of the sentence. While LLMs show similar behaviour, what's surprising is that pretrained models behave closer to humans than instruction-tuned models. And syllables, rather than tokens, provide a better metric for heaviness.

01.05.2025 15:13 —

👍 1

🔁 0

💬 1

📌 0

Incredibly proud of my students @adadtur.bsky.social and Gaurav Kamath for winning a SAC award at #NAACL2025 for their work on assessing how LLMs model constituent shifts.

01.05.2025 15:11 —

👍 17

🔁 5

💬 1

📌 0

Great work from labmates on LLMs vs humans regarding linguistic preferences: You know when a sentence kind of feels off e.g. "I met at the park the man". So in what ways do LLMs follow these human intuitions?

01.05.2025 15:04 —

👍 7

🔁 3

💬 0

📌 0

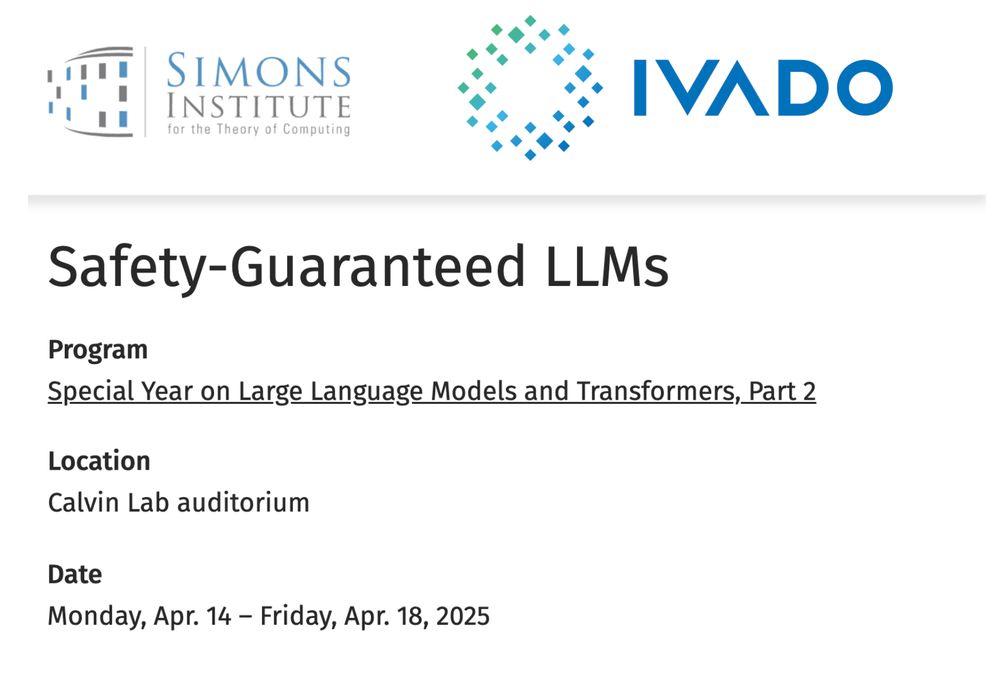

List of #SafetyGuaranteedLLMs talks on Monday Apr 14 2025 PDT. Speakers @rogergrosse.bsky.social Boaz Barak, Ethan Perez, Georgios Piliouras

14.04.2025 05:44 —

👍 4

🔁 0

💬 0

📌 0

The most exciting event on LLM safety is happening this week at @simonsinstitute.bsky.social with many excellent speakers. Organized by @yoshuabengio.bsky.social et al. Join us in person or virtually. In collaboration with @ivado.bsky.social. More details here:

simons.berkeley.edu/workshops/sa...

14.04.2025 05:41 —

👍 7

🔁 2

💬 0

📌 1

Though in-person registration is now full, you can still register to view the private livestream for next week's workshop on Safety-Guaranteed LLMs, co-organized with @ivado.bsky.social. We'll be posting live here as well.

simons.berkeley.edu/workshops/sa...

11.04.2025 04:43 —

👍 4

🔁 2

💬 0

📌 0

Sorry to hear that, but please don't boycott us. We are having a tough time with the US already :). I hate the new system too. Earlier it was just a PDF. You can just send the report to the supervisor with pass/fail and feedback, and perhaps they can take care of it from there.

03.04.2025 21:05 —

👍 1

🔁 0

💬 1

📌 0

Never been part of a project like this before - it was a very rewarding+unique experience!

Everyone in the lab contributed different chapters and it was much more exploratory than your average phd project.

My chapter studied R1's reasoning on "image generation/editing" (via ASCII) 🧵👇

1/N

01.04.2025 21:19 —

👍 13

🔁 2

💬 1

📌 1

I will be giving a talk about this work at @SimonsInstitute tomorrow (Apr 2nd, 3 PM PT). Join us either in person or virtually.

simons.berkeley.edu/workshops/fu...

01.04.2025 20:16 —

👍 6

🔁 0

💬 0

📌 0

Introducing the DeepSeek-R1 Thoughtology -- the most comprehensive study of R1 reasoning chains/thoughts ✨. Probably everything you need to know about R1 thoughts. If we missed something, please let us know.

01.04.2025 20:12 —

👍 17

🔁 4

💬 0

📌 1

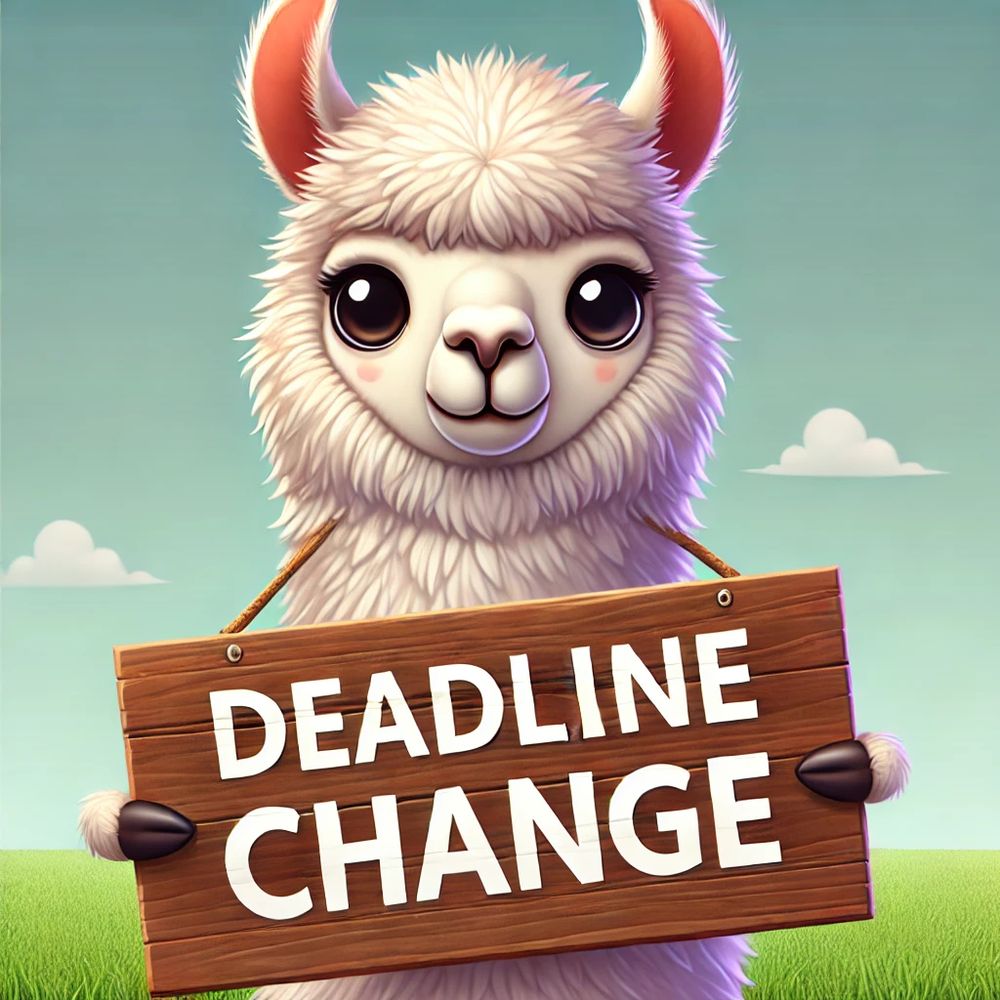

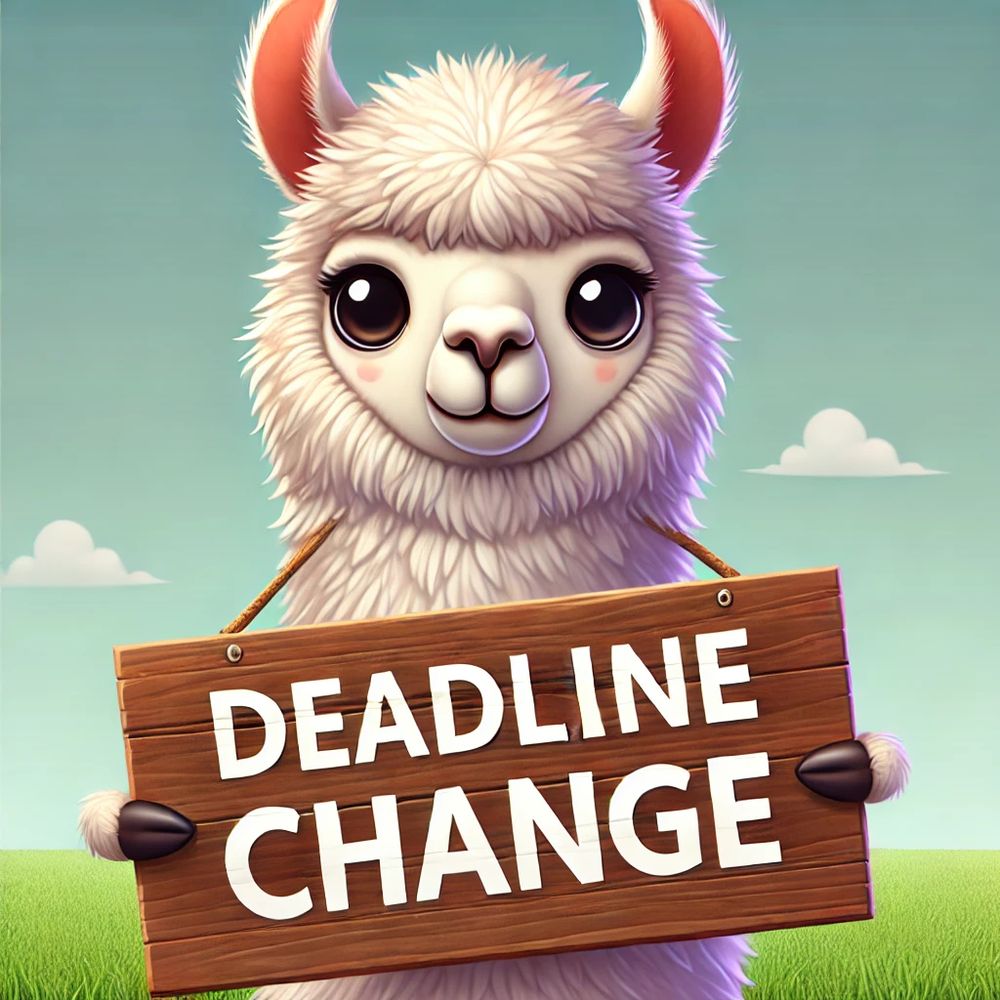

A bit of a mess around the conflict of COLM with the ARR (and to a lesser degree ICML) reviews release. We feel this is creating a lot of pressure and uncertainty. So, we are pushing our deadlines:

Abstracts due March 22 AoE (+48hr)

Full papers due March 28 AoE (+24hr)

Plz RT 🙏

20.03.2025 18:20 —

👍 37

🔁 31

💬 3

📌 2

As someone who has tried to make even basic image editing work in my research (e.g. "move cup to left of table"):

Gemini's new editing capabilities are seriously impressive!

Playing around with it is quite fun...

Edit 1: "edit the image to contain 3 more people"

18.03.2025 15:48 —

👍 9

🔁 1

💬 3

📌 0

Why do LLMs have a hard time aligning, while humans are better at it? 🌟The answer lies in the lack of a societal alignment framework for LLMs 🌍.

Incredible effort by @karstanczak.bsky.social in pulling views from multiple disciplines and experts in these fields.

arxiv.org/abs/2503.00069

04.03.2025 17:22 —

👍 7

🔁 0

💬 0

📌 0

How to Get Your LLM to Generate Challenging Problems for Evaluation? 🤔 Check out our CHASE recipe. A highly relevant problem given that most human-curated datasets are crushed within days.

21.02.2025 18:53 —

👍 4

🔁 2

💬 0

📌 0

Finally it's handy that all my twitter posts got migrated here to bsky:

I'll be presenting AURORA at @neuripsconf.bsky.social on Wednesday!

Come by to discuss text-guided editing (and why imo it is more interesting than image generation), world modeling, evals and vision-and-language reasoning

08.12.2024 18:13 —

👍 23

🔁 2

💬 1

📌 0

Congratulations @andreasmadsen.bsky.social on successfully defending your PhD ⚔️ 🎉🎉 Grateful to you for stretching my interests into interpretability and engaging me with exciting ideas. Good luck with your mission of building faithfully interpretable models.

29.11.2024 18:25 —

👍 9

🔁 0

💬 0

📌 0

Stages of #ICLR reviewing:

Stage 1: 😍 I hope I learn something new

Stage 2: 🤗 I hope I am constructive enough while being critical. Submits review

Stage 3: 🤯 Receives 5 page response + revision with many new pages

Stage 4: 😱 Crap, how do I get out of this?

Stage 5: 😵‍💫 What year is it?

26.11.2024 05:08 —

👍 17

🔁 0

💬 0

📌 0

@sivareddyg.bsky.social Which platforms? Maybe consider @buffer.com

24.11.2024 01:40 —

👍 1

🔁 1

💬 0

📌 0

Nice! Hello friend. Long time!

24.11.2024 02:21 —

👍 1

🔁 0

💬 1

📌 0

It's beautiful to start from scratch sometimes 😇

24.11.2024 01:28 —

👍 40

🔁 2

💬 1

📌 0

Creating a 🦋 starter pack for people working in IR/RAG: go.bsky.app/88ULgwY

I can’t seem to find everyone though, help definitely appreciated to fill this out (DM or comment)!

23.11.2024 21:19 —

👍 86

🔁 23

💬 32

📌 1

I am a lazy bsky :) or whatever you call it now.

23.11.2024 16:57 —

👍 0

🔁 0

💬 0

📌 0