Epic collage of Bionic Vision Lab activities. From top to bottom, left to right:

A) Up-to-date group picture

B) BVL at Dr. Beyeler's Plous Award celebration (2025)

C) BVL at The Eye & The Chip (2023)

D/F) Dr. Aiwen Xu and Justin Kasowski getting hooded at the UCSB commencement ceremony

E) BVL logo cake created by Tori LeVier

G) Dr. Beyeler with symposium speakers at Optica FVM (2023)

H, I, M, N) Students presenting conference posters/talks

J) Participant scanning a food item (ominous pizza study)

K) Galen Pogoncheff in VR

L) Argus II user drawing a phosphene

O) Prof. Beyeler demoing BionicVisionXR

P) First lab hike (ca. 2021)

Q) Statue for winner of the Mac'n'Cheese competition (ca. 2022)

R) BVL at Club Vision

S) Students drifting off into the sunset on a floating couch after a hard day's work

Excited to share that I’ve been promoted to Associate Professor with tenure at UCSB!

Grateful to my mentors, students, and funders who shaped this journey and to @ucsantabarbara.bsky.social for giving the Bionic Vision Lab a home!

Full post: www.linkedin.com/posts/michae...

02.08.2025 18:12 — 👍 18 🔁 4 💬 1 📌 0

Program – EMBC 2025

At #EMBC2025? Come check out two talks from my lab in tomorrow’s Sensory Neuroprostheses session!

🗓️ Thurs July 17 · 8-10AM · Room B3 M3-4

🧠 Efficient threshold estimation

🧑‍🔬 Deep human-in-the-loop optimization

🔗 embc.embs.org/2025/program/

#BionicVision #NeuroTech #IEEE #EMBS

16.07.2025 16:54 — 👍 3 🔁 1 💬 0 📌 0

Program – EMBC 2025

👁️⚡ Headed to #EMBC2025? Catch two of our lab’s talks on optimizing retinal implants!

📍 Sensory Neuroprostheses

🗓️ Thurs July 17 · 8-10AM · Room B3 M3-4

🧠 Efficient threshold estimation

🧑‍🔬 Deep human-in-the-loop optimization

🔗 embc.embs.org/2025/program/

#BionicVision #NeuroTech #IEEE #EMBS #Retina

13.07.2025 17:24 — 👍 0 🔁 2 💬 1 📌 0

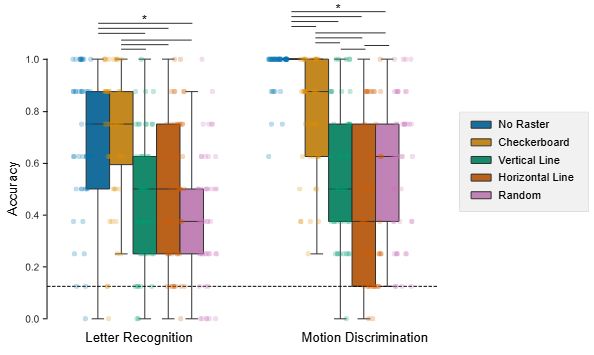

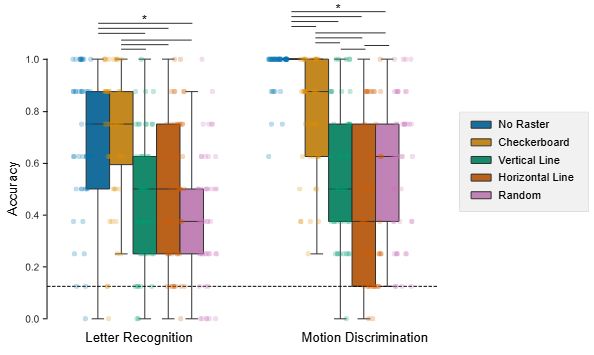

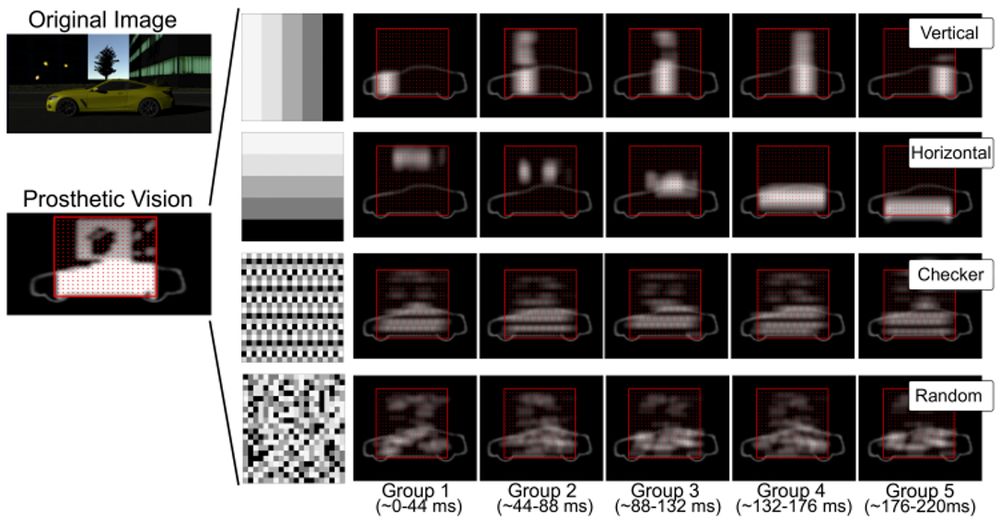

This matters. Checkerboard rastering:

✔️ works across tasks

✔️ requires no fancy calibration

✔️ is hardware-agnostic

A low-cost, high-impact tweak that could make future visual prostheses more usable and more intuitive.

#BionicVision #BCI #NeuroTech

09.07.2025 16:55 — 👍 1 🔁 0 💬 0 📌 0

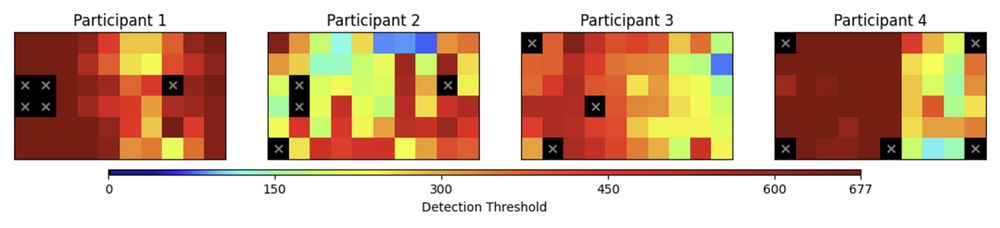

Boxplots showing task accuracy for two experimental tasks—Letter Recognition and Motion Discrimination—grouped by five raster patterns: No Raster (blue), Checkerboard (orange), Vertical (green), Horizontal (brown), and Random (pink). Each colored boxplot shows the median, interquartile range, and individual participant data points.

In both tasks, Checkerboard and No Raster yield the highest median accuracy.

Horizontal and Random patterns perform the worst, with more variability and lower scores.

Significant pairwise differences (p < .05) are indicated by horizontal bars above the plots, showing that Checkerboard significantly outperforms Random and Horizontal in both tasks.

A dashed line at 0.125 marks chance-level performance (1 out of 8).

These results suggest Checkerboard rastering improves perceptual performance compared to conventional or unstructured patterns.

✅ Checkerboard consistently outperformed the other patterns—higher accuracy, lower difficulty, fewer motion artifacts.

💡 Why? More spatial separation between activations = less perceptual interference.

It even matched performance of the ideal “no raster” condition, without breaking safety rules.

09.07.2025 16:55 — 👍 1 🔁 0 💬 1 📌 0

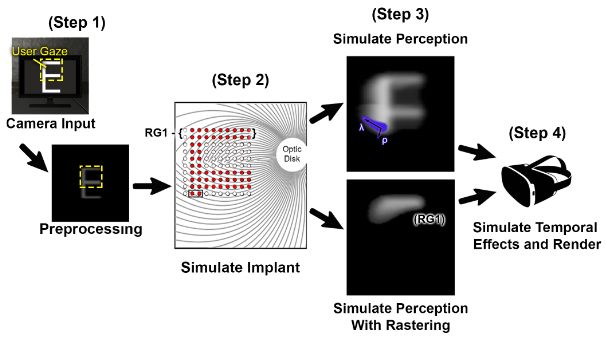

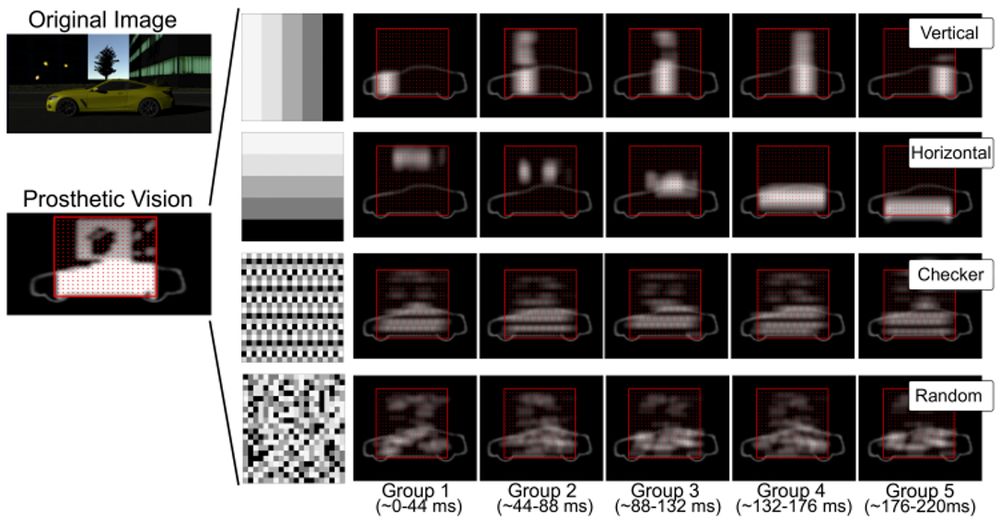

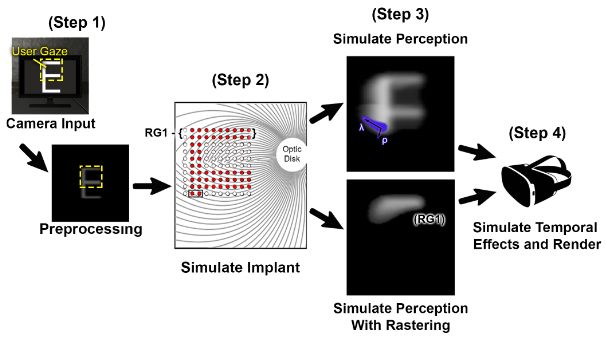

Diagram showing the four-step pipeline for simulating prosthetic vision in VR.

Step 1: A virtual camera captures the user’s view, guided by eye gaze. The image is converted to grayscale and blurred for preprocessing.

Step 2: The preprocessed image is mapped onto a simulated retinal implant with 100 electrodes. Electrodes are activated based on local image intensity and grouped into raster groups. Raster Group 1 is highlighted.

Step 3: Simulated perception is shown with and without rastering. Without rastering (top), all electrodes are active, producing a more complete but unrealistic percept. With rastering (bottom), only 20 electrodes are active per frame, resulting in a temporally fragmented percept. Phosphene shape depends on parameters for spatial spread (ρ) and elongation (λ).

Step 4: The rendered percept is updated with temporal effects and presented through a virtual reality headset.
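The four steps above can be sketched roughly as follows. This is a minimal illustration, not the BionicVisionXR implementation: the function names, the box blur, the block-averaged electrode amplitudes, the group layout, and the exponential decay used for "temporal effects" are all assumptions made for the example. Gaze tracking and phosphene elongation (λ) are left out, and only the spread parameter ρ (`rho`) is modeled.

```python
import numpy as np

GRID = 10       # 10x10 electrodes (100 total), as in the diagram
N_GROUPS = 5    # five raster groups, so 20 electrodes active per frame


def preprocess(rgb, k=3):
    """Step 1: grayscale conversion followed by a k x k box blur."""
    gray = rgb @ np.array([0.299, 0.587, 0.114])
    pad = k // 2
    padded = np.pad(gray, pad, mode="edge")
    out = np.zeros_like(gray)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + gray.shape[0], dx:dx + gray.shape[1]]
    return out / (k * k)


def electrode_amplitudes(gray):
    """Step 2: one amplitude per electrode from local image intensity."""
    h, w = gray.shape
    bh, bw = h // GRID, w // GRID
    blocks = gray[:bh * GRID, :bw * GRID].reshape(GRID, bh, GRID, bw)
    return blocks.mean(axis=(1, 3))


def raster_groups():
    """Hypothetical group layout (labels 0..4), chosen for illustration."""
    r, c = np.indices((GRID, GRID))
    return (r + 2 * c) % N_GROUPS


def render(amps, rho=4.0, size=100):
    """Step 3: draw each active electrode as an isotropic Gaussian blob."""
    yy, xx = np.mgrid[0:size, 0:size]
    pitch = size / GRID
    percept = np.zeros((size, size))
    for r, c in zip(*np.nonzero(amps)):
        cy, cx = (r + 0.5) * pitch, (c + 0.5) * pitch
        dist2 = (yy - cy) ** 2 + (xx - cx) ** 2
        percept += amps[r, c] * np.exp(-dist2 / (2 * rho ** 2))
    return percept


def simulate(rgb, n_frames=5, decay=0.5):
    """Steps 1-4: one raster group per frame, with a simple decay
    standing in for the temporal effects (an assumption)."""
    amps = electrode_amplitudes(preprocess(rgb))
    groups = raster_groups()
    percept = np.zeros((100, 100))
    frames = []
    for t in range(n_frames):
        active = np.where(groups == t % N_GROUPS, amps, 0.0)
        percept = decay * percept + render(active)
        frames.append(percept.copy())
    return frames
```

Calling `simulate` on an `(H, W, 3)` float image with values in `[0, 1]` returns one percept per frame, each built from a single raster group on top of the decayed previous frame.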

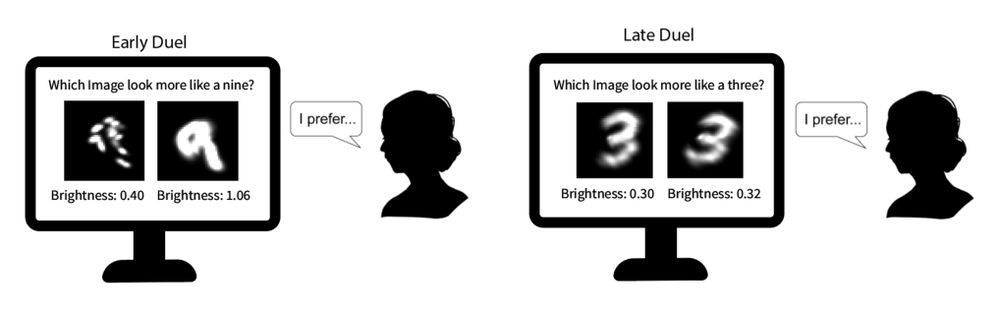

We ran a simulated prosthetic vision study in immersive VR using gaze-contingent, psychophysically grounded models of epiretinal implants.

🧪 Powered by BionicVisionXR.

📐 Modeled 100-electrode Argus-like array.

👀 Realistic phosphene appearance, eye/head tracking.

09.07.2025 16:55 — 👍 0 🔁 0 💬 1 📌 0

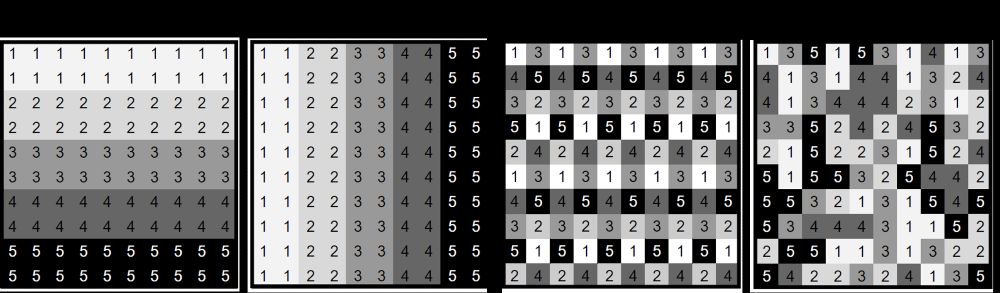

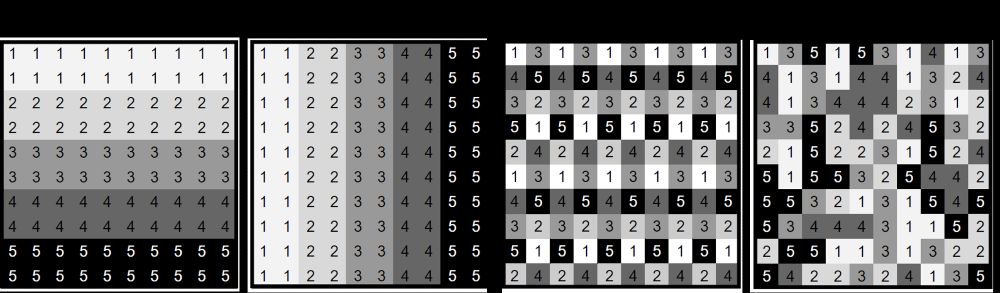

Raster pattern configurations used in the study, shown as 10×10 electrode grids labeled with numbers 1 through 5, representing five sequentially activated timing groups.

1. Horizontal: Each row of electrodes belongs to one group, with activation proceeding top to bottom.

2. Vertical: Each column is a group, activated left to right.

3. Checkerboard: Electrode groups are arranged to maximize spatial separation, forming a checkerboard-like layout.

4. Random: Group assignments are randomly distributed across the grid, with no spatial structure. This pattern was re-randomized every five frames to test unstructured activation.

Each group is represented with different shades of gray and labeled numerically to indicate activation order.
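As a rough sketch of how such group assignments could be generated and compared, the snippet below builds four layouts on a 10×10 grid and measures the smallest distance between two electrodes that fire in the same group. The exact layouts used in the study are not given here, so every rule is an assumption: rows and columns are paired into bands of two, the "checkerboard" is approximated by the interleaved rule `(r + 2 * c) % 5`, and the random layout is a balanced shuffle (the study re-randomizes it every five frames, which a new seed would imitate).

```python
import math
import random

GRID = 10      # 10x10 electrode grid, as described above
N_GROUPS = 5   # five sequentially activated timing groups


def horizontal(r, c):
    # Assumption: two adjacent rows per group, activated top to bottom.
    return r // (GRID // N_GROUPS)


def vertical(r, c):
    # Assumption: two adjacent columns per group, activated left to right.
    return c // (GRID // N_GROUPS)


def checkerboard(r, c):
    # Assumption: an interleaved lattice in which same-group electrodes
    # sit a knight's move apart, so none share an edge or a corner.
    return (r + 2 * c) % N_GROUPS


def make_pattern(rule):
    return [[rule(r, c) for c in range(GRID)] for r in range(GRID)]


def random_pattern(seed=0):
    # Balanced random assignment: 20 electrodes per group, shuffled.
    rng = random.Random(seed)
    per_group = GRID * GRID // N_GROUPS
    labels = [g for g in range(N_GROUPS) for _ in range(per_group)]
    rng.shuffle(labels)
    return [labels[r * GRID:(r + 1) * GRID] for r in range(GRID)]


def min_same_group_distance(pattern):
    """Smallest distance (in electrode pitches) within any one group."""
    best = math.inf
    cells = [(r, c) for r in range(GRID) for c in range(GRID)]
    for i, (r1, c1) in enumerate(cells):
        for r2, c2 in cells[i + 1:]:
            if pattern[r1][c1] == pattern[r2][c2]:
                best = min(best, math.hypot(r1 - r2, c1 - c2))
    return best
```

Under these assumed layouts, simultaneously active electrodes in the row and column patterns are direct neighbors (distance 1), while the interleaved layout keeps them at least √5 ≈ 2.24 pitches apart.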

Checkerboard rastering has been used in #BCI and #NeuroTech applications, often based on intuition.

But is it actually better, or just tradition?

No one had rigorously tested how these patterns impact perception in visual prostheses.

So we did.

09.07.2025 16:55 — 👍 0 🔁 0 💬 1 📌 0

Raster patterns in simulated prosthetic vision. On the left, a natural scene of a yellow car is shown, followed by its transformation into a prosthetic vision simulation using a 10×10 grid of electrodes (red dots). Below this, a zoomed-in example shows the resulting phosphene pattern. To comply with safety constraints, electrodes are divided into five spatial groups activated sequentially across ~220 milliseconds. Each row represents a different raster pattern: vertical (columns activated left to right), horizontal (rows top to bottom), checkerboard (spatially maximized separation), and random (reshuffled every five frames). For each pattern, five panels show how the scene is progressively built across the five raster groups. Vertical and horizontal patterns show strong directional streaking. Checkerboard shows more uniform activation and perceptual clarity. Random appears spatially noisy and inconsistent.

👁️🧠 New paper alert!

We show that checkerboard-style electrode activation improves perceptual clarity in simulated prosthetic vision—outperforming other patterns in both letter and motion tasks.

Less bias, more function, same safety.

🔗 doi.org/10.1088/1741...

#BionicVision #NeuroTech

09.07.2025 16:55 — 👍 1 🔁 0 💬 1 📌 1

Assistive Technology Use In The Home and AI and Adaptive Optics Ophthalmoscopes

Lily Turkstra (University of California - Santa Barbara) Dr. Johnny Tam (National Eye Institute - Bethesda, MD) Lily Turkstra, PhD Student,...

🎙️Our very own Lily Turkstra was featured on WYPL-FM’s Eye on Vision podcast to discuss how blind individuals use assistive tech at home, from tactile labels to digital tools.

📻 Listen: eyeonvision.blogspot.com/2025/05/assi...

📰 Read: bionicvisionlab.org/publications...

#BlindTech #Accessibility

24.06.2025 20:14 — 👍 2 🔁 1 💬 0 📌 0

VSS Presentation – Vision Sciences Society

Last but not least is Lily Turkstra, whose poster is assessing the efficacy of visual augmentations for high-stress navigation:

Tue, 2:45 - 6:45pm, Pavilion: Poster #56.472

www.visionsciences.org/presentation...

👁️🧪 #XR #VirtualReality #Unity3D #VSS2025

20.05.2025 14:10 — 👍 3 🔁 2 💬 0 📌 0

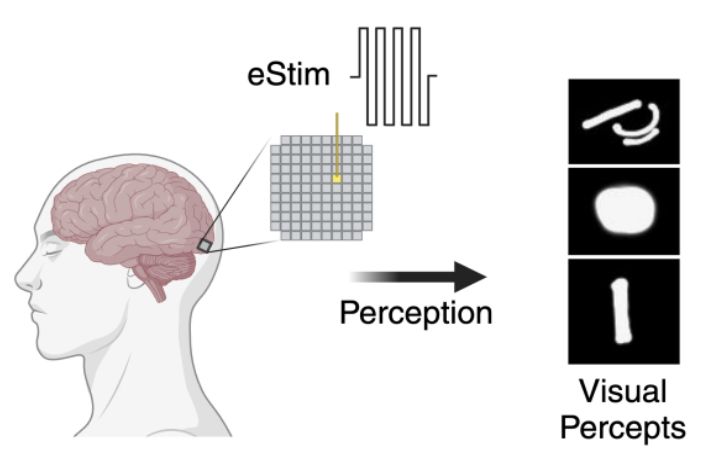

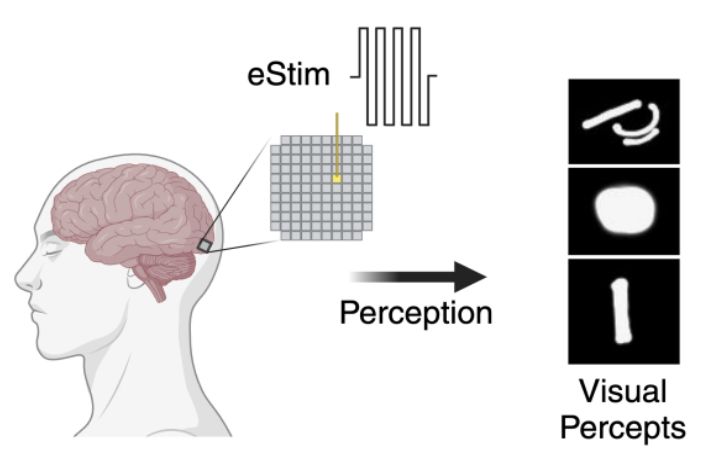

Schematic illustrating the phosphenes elicited by an intracortical prosthesis. A 96-channel Utah array is shown, stimulated with biphasic pulse trains. An arrow points to drawings of visual percepts elicited by electrical stimulation

Jacob Granley headshot

Coming up: Jacob Granley on whether V1 maintains working memory via spiking activity. Prior evidence from fMRI and LFPs - now, rare intracortical recordings in a blind human offer a chance to test it directly. 👁️ #VSS2025

🕥 Sun 10:45pm · Talk Room 1

🧠 www.visionsciences.org/presentation...

18.05.2025 12:26 — 👍 5 🔁 2 💬 1 📌 0

Example stimulus from Byron's image dataset: an unobscured version (left) depicting a man on a bike approaching a woman trying to cross the bike lane; a simulation of peripheral vision loss (center), where the woman is clearly visible but the man on the bike is obscured; and a simulation of central vision loss (right), where the man on the bike is apparent but the woman is obscured

Byron Johnson headshot

Our @bionicvisionlab.org is at #VSS2025 with 2 talks and a poster!

First up is PhD Candidate Byron A. Johnson:

Fri, 4:30pm, Talk Room 1: Differential Effects of Peripheral and Central Vision Loss on Scene Perception and Eye Movement Patterns

www.visionsciences.org/presentation...

16.05.2025 19:27 — 👍 8 🔁 3 💬 1 📌 0

Michael Beyeler smiles while receiving the framed Plous Award at UC Santa Barbara. College of Letters & Science's Dean Shelly Gable presents the award in front of a slide thanking collaborators and funders, with photos of colleagues and logos from NIH and the Institute for Collaborative Biotechnologies. The audience watches the moment from their seats.

The lecture hall of Mosher Alumni House is packed as Prof. Beyeler gets started with his lecture titled "Learning to See Again: Building a Smarter Bionic Eye"

Michael Beyeler stands with members of the Bionic Vision Lab in front of a congratulatory banner celebrating his 2024–25 UCSB Plous Award. Everyone is smiling, with some holding drinks, and Michael is holding his young son. The group is gathered outdoors under string lights, with tall eucalyptus trees in the background.

Not usually one to post personal pics, but let’s take a break from doomscrolling, yeah?

Some joyful moments from the Plous Award Ceremony: Honored to give the lecture, receive the framed award & celebrate with the people who made it all possible!

@bionicvisionlab.org @ucsantabarbara.bsky.social

17.04.2025 16:16 — 👍 4 🔁 3 💬 1 📌 0

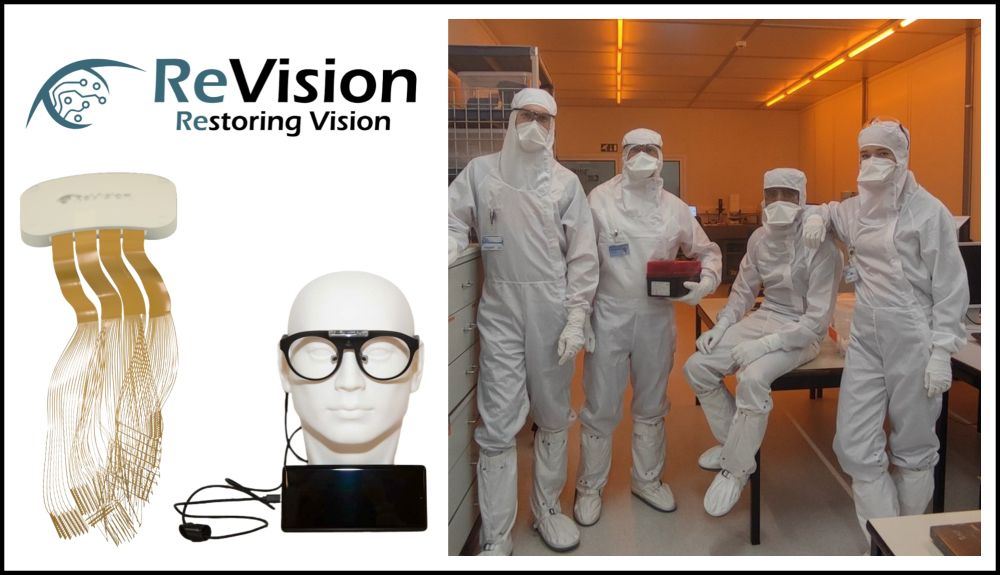

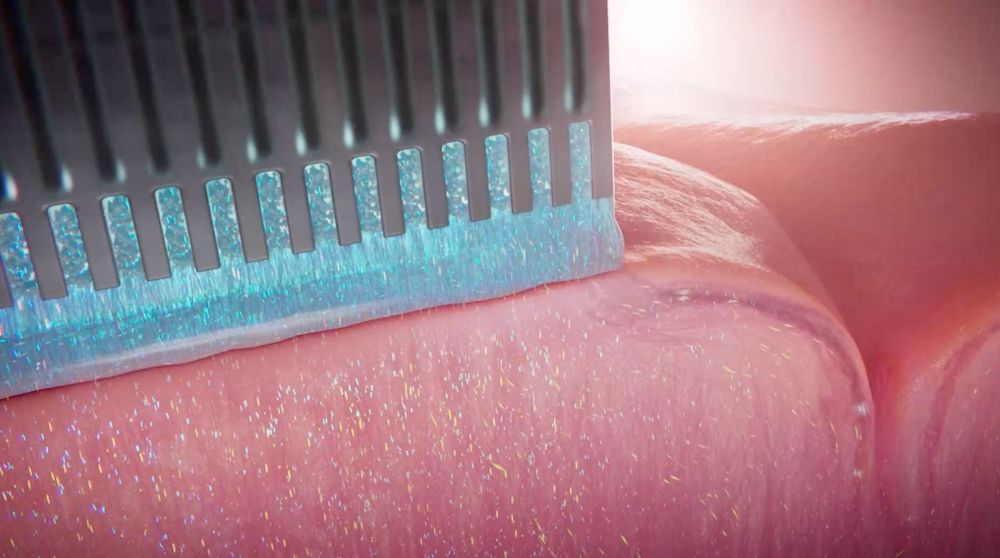

bionic eye glasses

Modern visual prosthetics can generate flashes of light but don’t restore natural vision. What might bionic vision look like?

In CS asst. prof Michael Beyeler's @bionicvisionlab.org, his team explores how smarter, more adaptive tech could move toward a bionic eye that's functional & usable.👁️

11.04.2025 18:00 — 👍 1 🔁 1 💬 1 📌 0

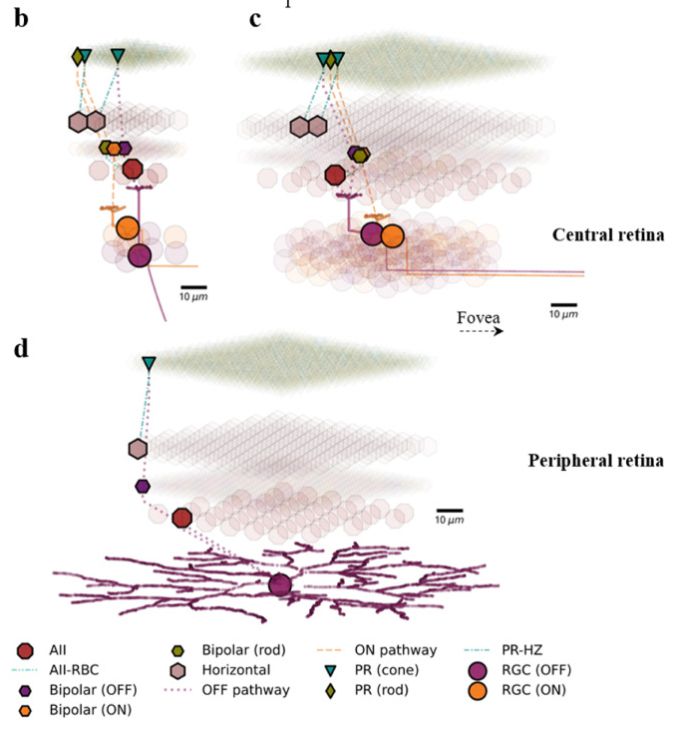

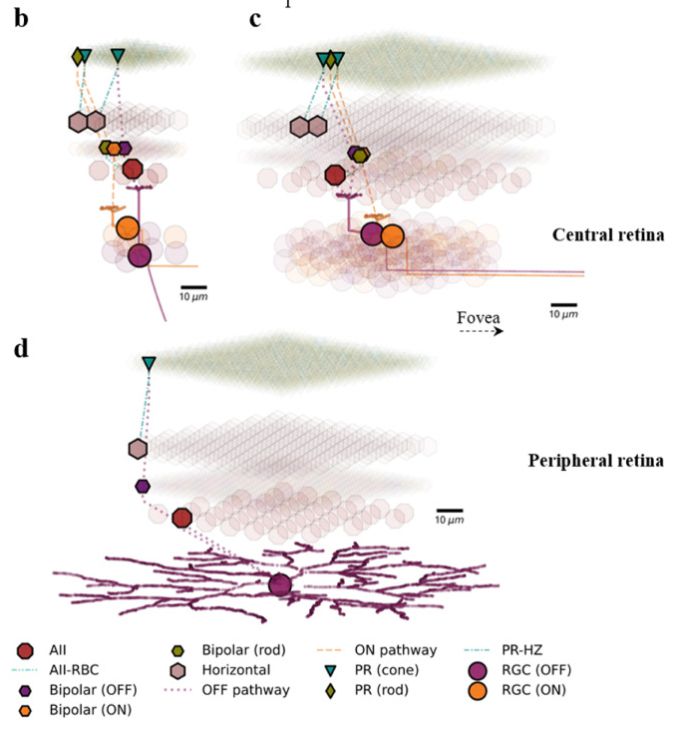

Examples of discrete neuronal network models of the human retina, including the central (top) and peripheral retina (bottom). Photoreceptors, bipolar cells, and ganglion cells are shown.

Virtual Human Retina: A simulation platform designed for studying human retinal degeneration and optimizing stimulation strategies for retinal implants 👁️🧠🧪

doi.org/10.1016/j.br...

23.01.2025 21:17 — 👍 3 🔁 3 💬 1 📌 1

Outstanding Contributions

Assistant professor Michael Beyeler is selected for the highly regarded Harold J. Plous Memorial Award.

Our PI @mbeyeler.bsky.social has received the 2024-’25 Harold J. Plous Memorial Award from @ucsb.bsky.social, in recognition of his “outstanding contributions in research, teaching, and service.”

New article by @ucsbengineering.bsky.social:

engineering.ucsb.edu/news/outstan...

13.12.2024 19:26 — 👍 4 🔁 3 💬 0 📌 0

Biohybrid neural interfaces: an old idea enabling a completely new space of possibilities | Science Corporation

Science Corporation is a clinical-stage medical technology company.

I had an idea way back in college which I've long thought could be, in many ways, the ultimate BCI technology. What if instead of using electrodes, we used biological neurons embedded in electronics to communicate with the brain?

Enter biohybrid neural interfaces: science.xyz/news/biohybr...

23.11.2024 19:49 — 👍 84 🔁 18 💬 4 📌 9

Aligning visual prosthetic development with implantee needs: Now out in TVST!

#BionicVision #NeuroTech #BCI #Blindness #Accessibility

21.11.2024 17:31 — 👍 1 🔁 2 💬 0 📌 0

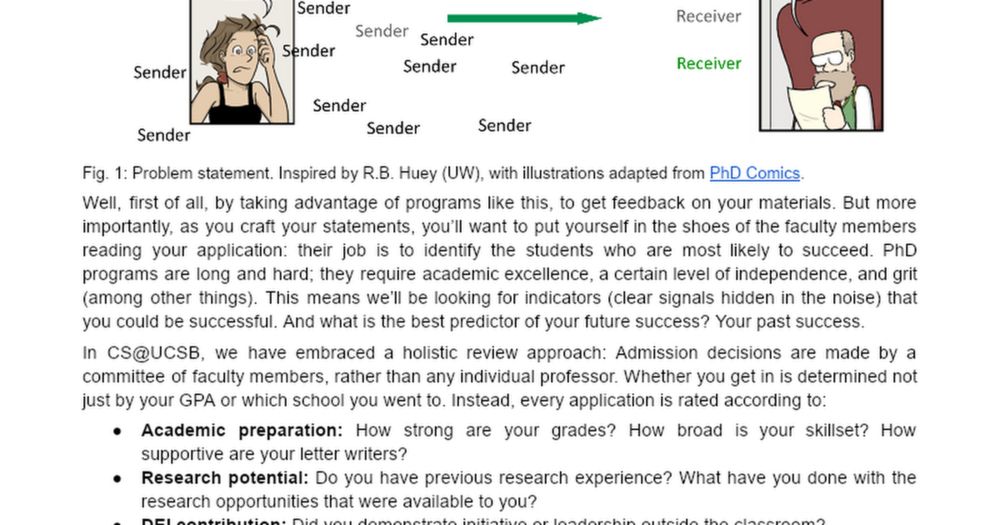

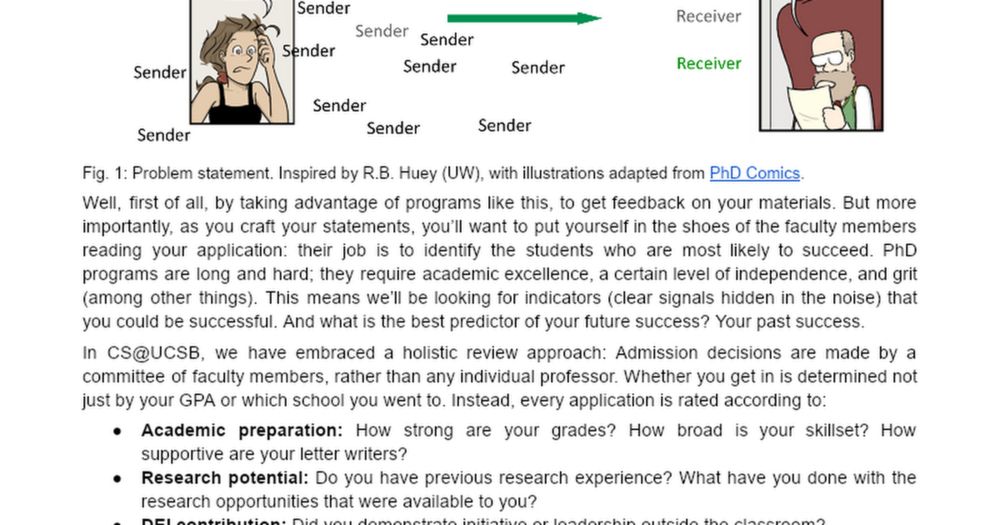

The Road to a Successful PhD Application

The Road to a Successful PhD Application Disclaimer: This guide was written by Prof. Michael Beyeler, with input from faculty colleagues. It does not necessarily reflect the views of all CS faculty me...

Starting your #PhD journey? Crafting a standout Statement of Purpose is key. Here's a guide with tips & common pitfalls to help you succeed (tailored to #CompSky at #UCSB, but much of it should apply to most depts):

docs.google.com/document/d/1...

#AcademicSky #AcademicChatter #FirstGen #CSforALL

20.11.2024 19:24 — 👍 10 🔁 5 💬 0 📌 0

Applying to the PhD program in #CompSky at #UCSB? It's not too late to get expert feedback on your application materials! 🧪

New deadline: Nov 22 Anywhere on Earth

cs.ucsb.edu/education/gr...

#Diversity #FirstGen #STEM #WomenInSTEM #CSforALL #AcademicSky

19.11.2024 02:04 — 👍 4 🔁 2 💬 0 📌 0

Startups Like Science and Musk's Neuralink Aim to Help Blind People See

Technological advances have spurred an "explosion of interest" in restoring vision.

New Bloomberg article putting recent developments in #BionicVision into perspective:

www.bloomberg.com/news/article...

with quotes from Max Hodak (Science), Xing Chen (Pitt), Yağmur Güçlütürk (Radboud), @mbeyeler.bsky.social (UCSB), others

#NeuroTech #BCI #Neuralink #PRIMA #Phosphoenix #ReVision

13.11.2024 20:56 — 👍 2 🔁 2 💬 0 📌 0

Jacob Granley from the Bionic Vision Lab at the podium, presenting his talk on control of electrically evoked neural activity via deep neural networks in human visual cortex

The 2nd Brain & The Chip is underway in Elche, Spain!

Leading #BionicVision and #BCI researchers are gathering to discuss latest developments in intracortical neural interfaces.

www.bionic-vision.org/events/brain...

12.11.2024 16:51 — 👍 1 🔁 1 💬 2 📌 1

Folks please do your best to include "alt text" for any images you post. When blind users use bluesky, this enables them to engage with the images you post. #Accessibility #AltText

11.11.2024 05:04 — 👍 8 🔁 4 💬 0 📌 0

YouTube video by What is The Future for Cities?

What is accessibility and integration in urban futures? Lucas Gil Nadolskis (270I)

“When you say someone is disabled, there’s an assumption they’re not functional–which couldn’t be more wrong!”

Our PhD student Lucas Nadolskis shares insights on #Accessibility, #Inclusion vs. #Integration, and reimagining cities for all:

www.youtube.com/watch?v=KDSn...

12.11.2024 05:04 — 👍 1 🔁 1 💬 0 📌 0
