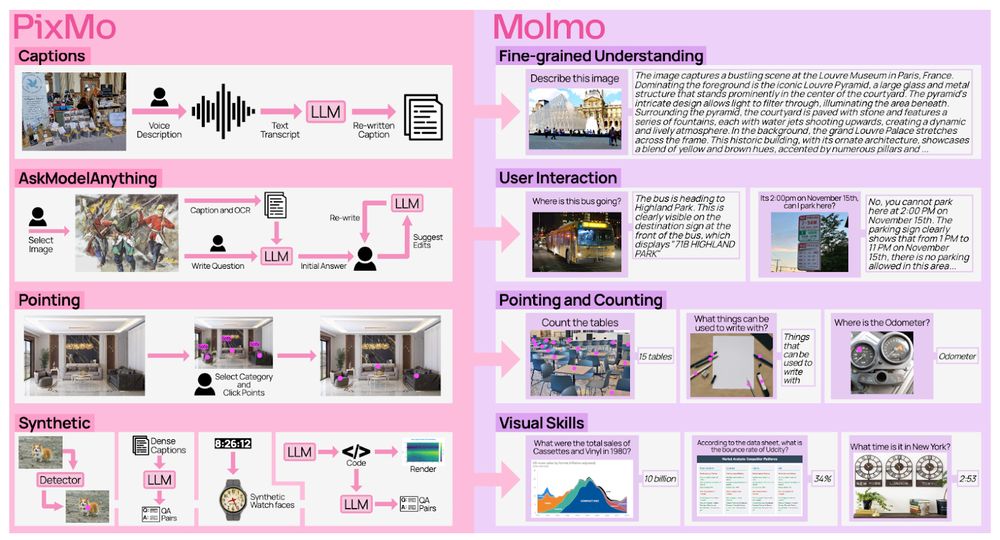

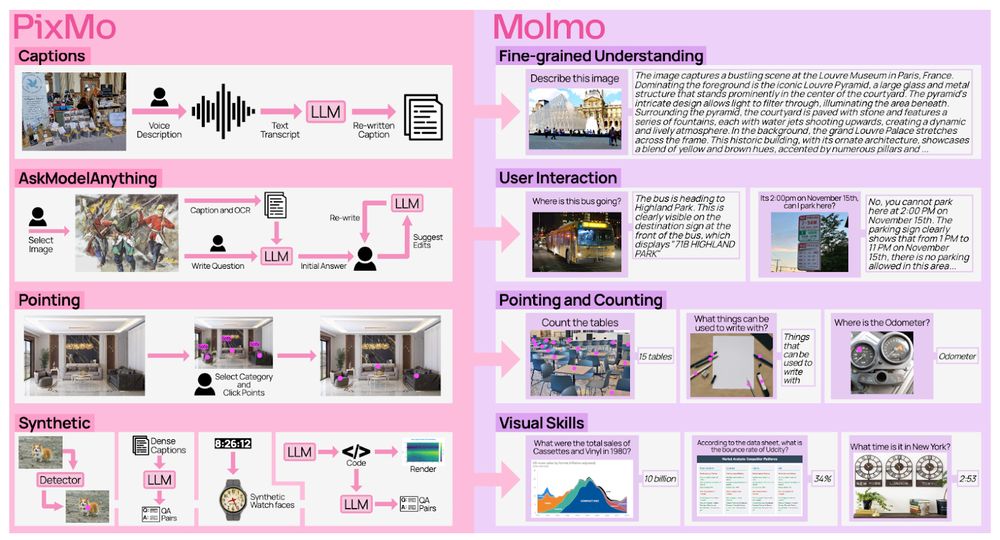

Overview of PixMo and its relation to Molmo's ability. PixMo's captions data enables Molmo's fine-grained understanding; PixMo's AskModelAnything enables Molmo's user interaction; PixMo's pointing data enables Molmo's pointing and counting; PixMo's synthetic data enables Molmo's visual skills.

Remember Molmo? The full recipe is finally out!

Training code, data, and everything you need to reproduce our models. Oh, and we have updated our tech report too!

Links in thread 👇

09.12.2024 18:33 —

👍 78

🔁 14

💬 1

📌 1

The OLMo 2 models sit at the Pareto frontier of training FLOPs vs model average performance.

Meet OLMo 2, the best fully open language model to date, including a family of 7B and 13B models trained up to 5T tokens. OLMo 2 outperforms other fully open models and competes with open-weight models like Llama 3.1 8B — As always, we released our data, code, recipes and more 🎁

26.11.2024 20:51 —

👍 151

🔁 36

💬 5

📌 12

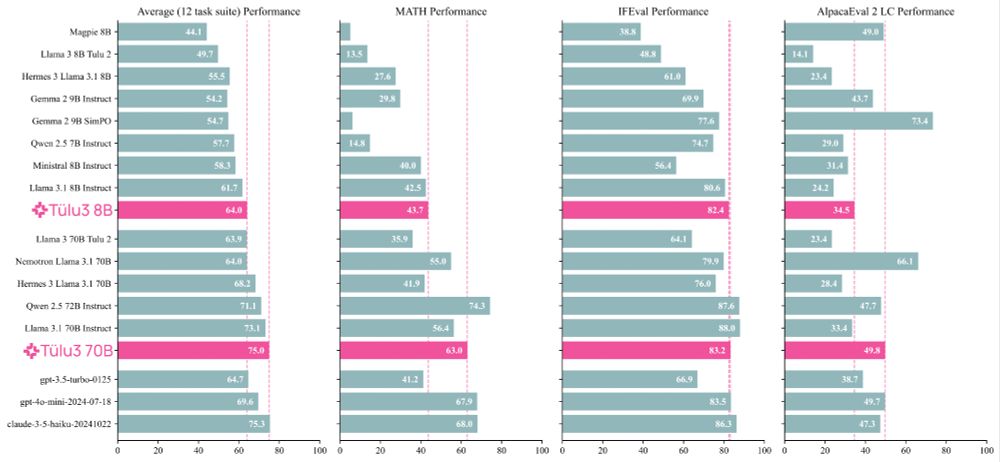

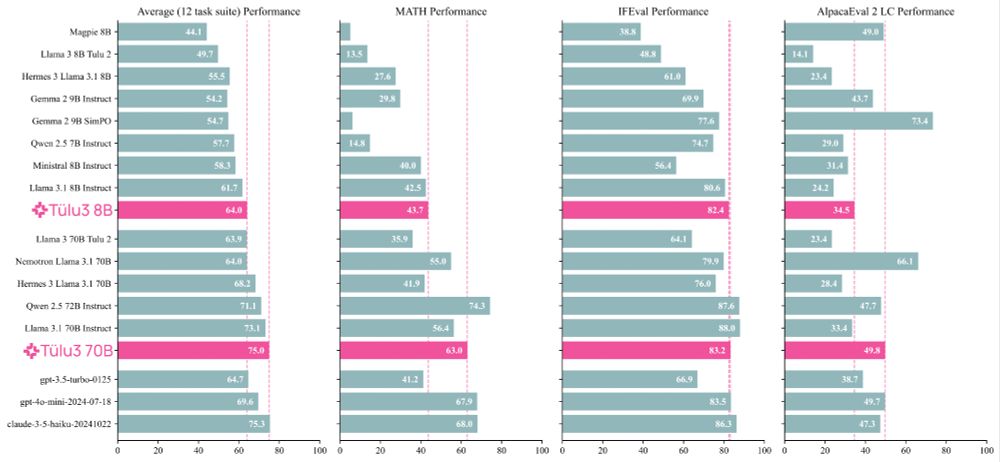

Meet Tülu 3, a set of state-of-the-art instruct models with fully open data, eval code, and training algorithms.

We invented new methods for fine-tuning language models with RL and built upon best practices to scale synthetic instruction and preference data.

Demo, GitHub, paper, and models 👇

21.11.2024 17:15 —

👍 111

🔁 31

💬 2

📌 7

🙋♂️

21.11.2024 16:26 —

👍 2

🔁 0

💬 0

📌 0