How can we use models of cognition to help LLMs interpret figurative language (irony, hyperbole) in a more human-like manner? Come to our #ACL2025NLP poster on Wednesday at 11AM (exhibit hall - exact location TBA) to find out! @mcgill-nlp.bsky.social @mila-quebec.bsky.social @aclmeeting.bsky.social

28.07.2025 09:16 —

👍 3

🔁 2

💬 0

📌 0

What do systematic hallucinations in LLMs tell us about their generalization abilities?

Come to our poster at #ACL2025 on July 29th at 4 PM in Level 0, Halls X4/X5. Would love to chat about interpretability, hallucinations, and reasoning :)

@mcgill-nlp.bsky.social @mila-quebec.bsky.social

28.07.2025 09:18 —

👍 2

🔁 2

💬 0

📌 0

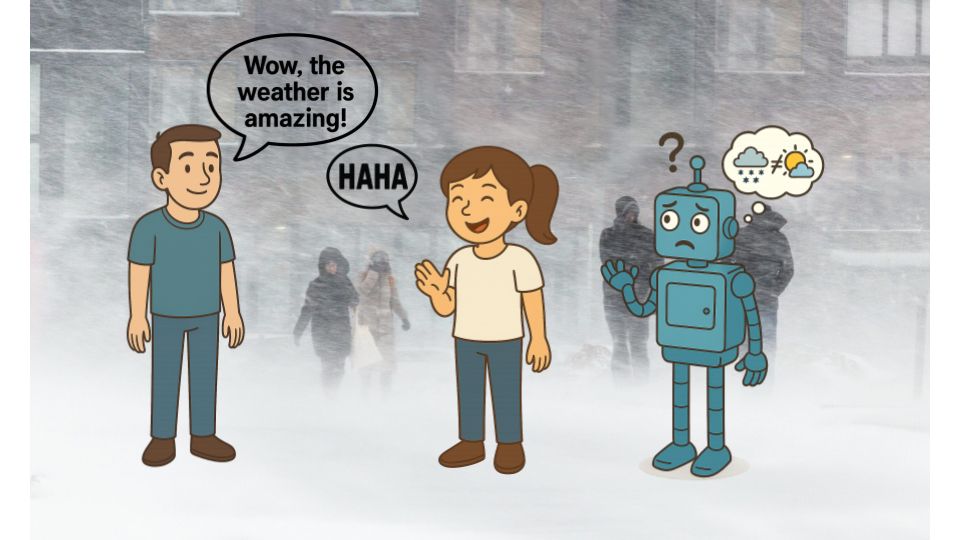

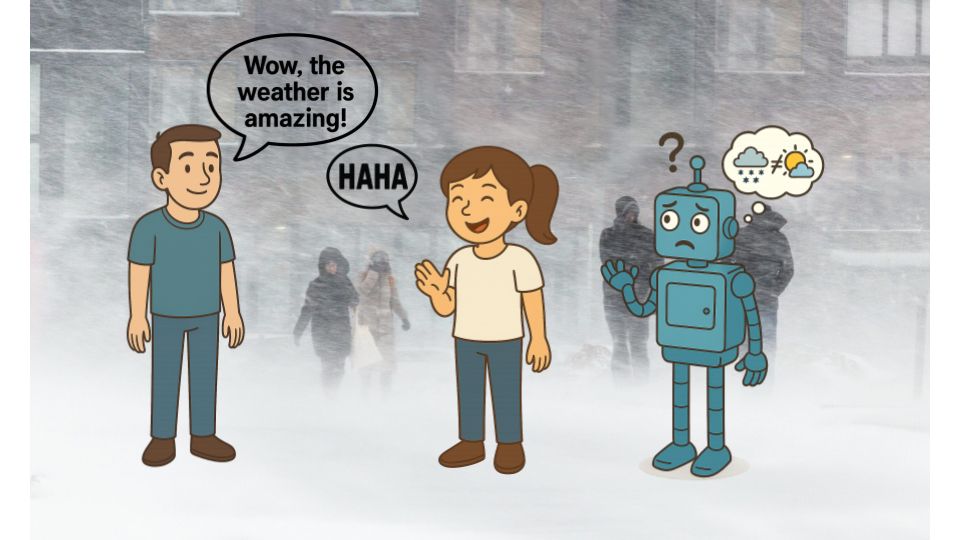

A blizzard is raging through Montreal when your friend says “Looks like Florida out there!” Humans easily interpret irony, while LLMs struggle with it. We propose a 𝘳𝘩𝘦𝘵𝘰𝘳𝘪𝘤𝘢𝘭-𝘴𝘵𝘳𝘢𝘵𝘦𝘨𝘺-𝘢𝘸𝘢𝘳𝘦 probabilistic framework as a solution.

Paper: arxiv.org/abs/2506.09301 to appear @ #ACL2025 (Main)

26.06.2025 15:52 —

👍 15

🔁 7

💬 1

📌 4

🙏 Huge thanks to my collaborators @mengcao.bsky.social, Marc-Antoine Rondeau, and my advisor Jackie Cheung for their invaluable guidance and support throughout this work, and to friends at @mila-quebec.bsky.social and @mcgill-nlp.bsky.social 💙 7/n

06.06.2025 18:12 —

👍 3

🔁 0

💬 0

📌 0

🧠 TL;DR: These irrelevant context hallucinations show that LLMs go beyond mere parroting 🦜 — they do generalize, based on contextual cues and abstract classes. But not reliably. They're more like chameleons 🦎 — blending with the context, even when they shouldn’t. 6/n

06.06.2025 18:11 —

👍 8

🔁 0

💬 1

📌 1

🔍 What’s going on inside?

With mechanistic interpretability, we found:

- LLMs first compute abstract classes (like “language”) before narrowing to specific answers

- Competing circuits inside the model: one based on context, one based on query. Whichever is stronger wins. 5/n

06.06.2025 18:11 —

👍 3

🔁 0

💬 1

📌 0

Sometimes this yields the right answer for the wrong reasoning (“Portuguese” from “Brazil”), other times, it produces confident errors (“Japanese” from “Honda”). 4/n

06.06.2025 18:11 —

👍 1

🔁 0

💬 1

📌 0

Turns out, we can. They follow a systematic failure mode we call class-based (mis)generalization: the model abstracts the class from the query (e.g., languages) and generalizes based on features from the irrelevant context (e.g., Honda → Japan). 3/n

06.06.2025 18:10 —

👍 6

🔁 0

💬 1

📌 0

These examples show answers — even to the same query — can shift under different irrelevant contexts. Can we predict these shifts? 2/n

06.06.2025 18:10 —

👍 9

🔁 0

💬 1

📌 0

Do LLMs hallucinate randomly? Not quite.

Our #ACL2025 (Main) paper shows that hallucinations under irrelevant contexts follow a systematic failure mode — revealing how LLMs generalize using abstract classes + context cues, albeit unreliably.

📎 Paper: arxiv.org/abs/2505.22630 1/n

06.06.2025 18:09 —

👍 46

🔁 18

💬 1

📌 3