As Andy thoughtfully explains, certain technologies may be new, but our psychology isn't, and decades of psychology research can help answer questions like what goes viral online and why people enjoy sycophantic chatbots.

05.02.2026 21:13

Really enjoyed speaking with @andyluttrell.bsky.social about the psychology of technology. I first appeared on his podcast a few years ago to discuss science communication.

05.02.2026 21:13

This month on Opinion Science, I talk with @steverathje.bsky.social about his research on the "psychology of technology." We cover the predictors of what goes viral online and the allure and influence of agreeable AI chatbots.

03.02.2026 18:17

The Psychology of Virality in the Age of AI

Steve Rathje joins me to discuss why conflict goes viral, how social media shapes polarization, and what AI chatbots mean for belief and bias.

In my latest podcast episode, I discuss the psychology of virality with @steverathje.bsky.social, explore how agreeable AI chatbots may influence our beliefs, and examine how scientists can communicate effectively in a noisy, polarized media environment.

matthewfacciani.substack.com/p/the-psycho...

20.01.2026 23:03

Enjoyed talking with @sudkrc.bsky.social on one of my favorite podcasts, the Stanford Psychology Podcast! We discuss how I got into psychology (it all began at Stanford), my recent work on the psychology of virality and sycophantic AI, and much more.

20.12.2025 22:53

166 - Steve Rathje: The Psychology of Virality

NEW EPISODE OUT🗣️!! In this episode, Su @sudkrc.bsky.social chats with Dr. Steve Rathje @steverathje.bsky.social on why certain content spreads rapidly online and offline! LISTEN NOW🎧: open.spotify.com/episode/7CoK...

19.12.2025 22:54

"Using 'virality' as the main way to decide the information people see every day will (like actual viruses) make us sick."

@jayvanbavel.bsky.social and I wrote a column on our recent paper on the psychology of virality. Check it out here: www.powerofusnewsletter.com/p/why-some-i...

17.12.2025 19:49

Why Some Ideas Go Viral—and Most Don’t

What decades of research reveal about why certain content spreads—and how social forces shape what we all see.

While studies find that moral outrage & negativity go viral on social media, this is also true of the offline world. Gossip is also mostly negative & about people we dislike.

With @steverathje.bsky.social, I explain why some ideas go viral, but most don't.

www.powerofusnewsletter.com/p/why-some-i...

16.12.2025 18:20

🚨 New working paper 🚨

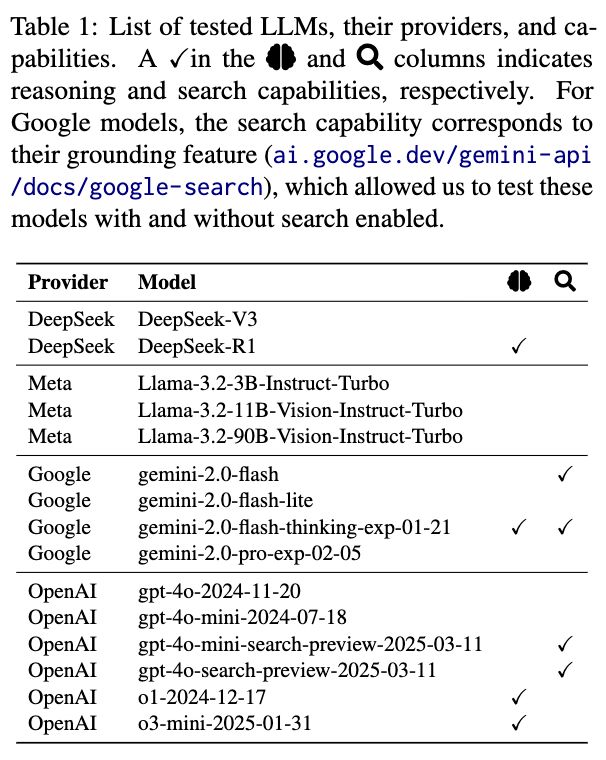

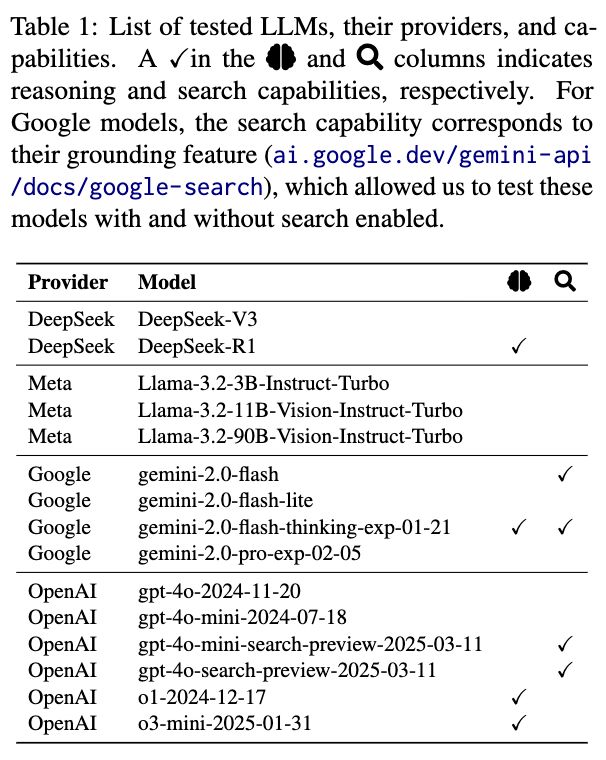

Can LLMs with reasoning + web search reliably fact-check political claims?

We evaluated 15 models from OpenAI, Google, Meta, and DeepSeek on 6,000+ PolitiFact claims (2007–2024).

Short answer: Not reliably—unless you give them curated evidence.

arxiv.org/abs/2511.18749

29.11.2025 22:06

New research by @steverathje.bsky.social et al

Epistemic Fragility in Large Language Models: Prompt Framing Systematically Modulates Misinformation Correction

Gemini 2.5 Pro had 74% lower odds of strong correction than Claude Sonnet 4.5, highlighting epistemic fragility.

arxiv.org/pdf/2511.22746

04.12.2025 13:47


Are you curious about the results of our #wisdomturingtest? Want to find out who was the AI? If so, tune-in to the second part of the ON WISDOM podcast on the "Wisdom Turing Test," with the amazing @steverathje.bsky.social : onwisdompodcast.fireside.fm/67

#TuringTest #ChineseRoom #AIsycophancy

17.11.2025 22:53

Abstract and results summary

🚨 New preprint 🚨

Across 3 experiments (n = 3,285), we found that interacting with sycophantic (or overly agreeable) AI chatbots entrenched attitudes and led to inflated self-perceptions.

Yet, people preferred sycophantic chatbots and viewed them as unbiased!

osf.io/preprints/ps...

Thread 🧵

01.10.2025 15:16

✨New preprint! Why do people express outrage online? In 4 studies we develop a taxonomy of online outrage motives, test what motives people report, what they infer for in- vs. out-partisans, and how motive inferences shape downstream intergroup consequences. Led by @felix-chenwei.bsky.social 🧵👇

11.11.2025 16:34

Really enjoyed speaking with tech ethicist Tristan Harris, who you might know from the Netflix documentary "The Social Dilemma" or his work with the Center for Humane Technology.

🎥 Watch here on YouTube: www.youtube.com/watch?v=TFm3...

🎧 Listen on Spotify: open.spotify.com/episode/0Oi6...

22.10.2025 15:36

The Science Behind Why Social Media Makes Us Miserable

I was on the @andrew-yang.bsky.social podcast to discuss the impact of social media.

We discussed what goes viral online, how it impacts our lives, and what we can do about it (with @steverathje.bsky.social):

www.youtube.com/watch?v=YrDV...

21.10.2025 20:37

The Science of Smartphones


What do 92% of scientists agree on regarding social media and smartphone use? blog.andrewyang.com/p/the-scienc...

21.10.2025 12:17

Really enjoyed talking with @andrew-yang.bsky.social and @jayvanbavel.bsky.social about the science of social media. Thanks for having us on your podcast, @andrew-yang.bsky.social!

20.10.2025 14:35

Why does online content seem so angry and emotional? Professors @jayvanbavel.bsky.social and @steverathje.bsky.social join andrewyang.com/podcast to talk about the science of social media, including why polarization gets revved up by a tiny percentage of accounts.

20.10.2025 13:56

Why do some ideas spread widely, while others fail to catch on?

Our new review paper on the PSYCHOLOGY OF VIRALITY is now out in @cp-trendscognsci.bsky.social (it was led by @steverathje.bsky.social)

Read the full paper here: www.cell.com/trends/cogni...

07.10.2025 21:49

Issue: Trends in Cognitive Sciences

📚 Read the full issue here: cell.com/trends/cogni...

📖 Our article here: doi.org/10.1016/j.ti...

📝 And the pre-print here: osf.io/preprints/ps...

07.10.2025 18:28

Our recent review article "The Psychology of Virality" with @jayvanbavel.bsky.social is on the front cover of this month's issue of @cp-trendscognsci.bsky.social.

07.10.2025 18:28

Would you notice if Gemini or ChatGPT was just flattering you?

Read @steverathje.bsky.social's new preprint to learn about how people actually feel towards overly agreeable chatbots.

OSF: osf.io/preprints/ps...

(summary in thread below!)

06.10.2025 19:37

Screenshot of paper title: Sycophantic AI Decreases Prosocial Intentions and Promotes Dependence

AI always calling your ideas “fantastic” can feel inauthentic, but what are sycophancy’s deeper harms? We find that in the common use case of seeking AI advice on interpersonal situations—specifically conflicts—sycophancy makes people feel more right & less willing to apologize.

03.10.2025 22:53

Cool new study by @joelleforestier.bsky.social @page-gould.bsky.social & Alison Chasteen

Can social media contact reduce prejudice?

#PrejudiceResearch

psycnet.apa.org/fulltext/202...

03.10.2025 13:34

In a new paper, we find that sycophantic #AI chatbots make people more extreme--operating like an echo chamber

Yet, people prefer sycophantic chatbots and see them as less biased

Only open-minded people prefer disagreeable chatbots: osf.io/preprints/ps...

Led by @steverathje.bsky.social

02.10.2025 15:55

Thanks! Excited to read your book.

02.10.2025 04:25

So excited to see this research! My students just learned the word “sycophantic” today, for exactly this reason! We talked about the types and qualities of conversations you can have with a sycophant, and why this matters for how we process the output of LLMs.

01.10.2025 17:44

Cool! Thank you for sharing!

01.10.2025 18:24

Thank you!!

01.10.2025 16:56

This is still a working paper, so please let us know if you have any feedback!

01.10.2025 15:16

Senior writer at @chronicle.com, writing about scholarship, scholars, and society. stephanie.lee@chronicle.com / Signal: stephaniemlee.07 / stephaniemlee.com / San Francisco

i am a cognitive scientist working on auditory perception at the University of Auckland and the Yale Child Study Center 🇳🇿🇺🇸🇫🇷🇨🇦

lab: themusiclab.org

personal: mehr.nz

intro to my research: youtu.be/-vJ7Jygr1eg

The official Stanford Psychology podcast!

Founded by Eric Neumann & Anjie Cao; co-led by Enna Chen and Adani Abutto. Every episode features a leading researcher discussing their most recent work.

Website: stanfordpsychologypodcast.com

Social Psychology PhD candidate at UPitt

Studying #conflictresolution, #apologies, #forgiveness, #selfforgiveness, and #moralpsychology.

Studying how digital tech reshape political communication and democratic accountability. Postdoctoral Fellow at CAPT/GRAIL, Purdue University. Background in international journalism, globetrotting and contact improvisation dance.

Philosopher and applied mathematician at UC Irvine. Author of The Misinformation Age and Origins of Unfairness. Irish dancer. Mother. Mother of Chickens.

Entrepreneur who wants good things for people. Putting money in people’s hands is the best thing we can do in the age of AI. Let’s make it happen. CEO Noble Mobile. Andrewyang.com

Social psychologist | Goal pursuit, political behavior, well-being | The psychology of maintaining what we have

Professor of Creative Pedagogies | Poet | Game Designer | Slow AI

#SciComm #HigherEd #Poetry #GenAI

https://theslowai.substack.com/

https://linktr.ee/sam.illingworth

We conduct cutting-edge research to understand what divides and what unites us.

Website: https://www.centerconflictcooperation.com/

https://olivia.science

assistant professor of computational cognitive science · she/they · cypriot/kıbrıslı/κυπραία · σὺν Ἀθηνᾷ καὶ χεῖρα κίνει

PhD candidate @ Stanford NLP

https://myracheng.github.io/

Director of Education for MediaSmarts, Canada's centre for digital media literacy. He/him. Open to correction.

Educational researcher focused on EdTech x AI, student engagement and motivation. Associate professor at USC Rossier. Associate Director of USC Center for Generative AI and Society

I am an assistant professor at Tilburg University's Department of Social Psychology.

I have a website at https://vuorre.com.

All posts are posts.

Co-founder, Survey 160. Loves survey and voter participation. Researcher. Democrat. YIMBY. Husband and Dad. Opinion haver and measurer. Outdoors and cooking enthusiast. He/Him

Assoc. Prof of Linguistics at Berkeley. Language, identity & prosody, esp. in politics & human-computer interaction. Trivia person, marathoner, coach. LA & the Bay! No one is illegal on stolen land. Views are my own.

https://nicolerholliday.wordpress.com

Assistant Professor at UCLA | Alum @MIT @Princeton @UC Berkeley | AI+Cognitive Science+Climate Policy | https://ucla-cocopol.github.io/

asst prof @Stanford linguistics | director of social interaction lab 🌱 | bluskies about computational cognitive science & language