Our work establishes a framework for modeling neural data under interventions. Our paper and code are available here:

Paper: openreview.net/pdf?id=n7qKt...

Code: github.com/amin-nejat/i...

Finally, a huge thanks to my co-author Yixin Wang for her contributions (n/n).

16.07.2025 18:13 — 👍 1 🔁 0 💬 0 📌 0

In electrophysiological recordings from the monkey prefrontal cortex during electrical micro-stimulation, we show that iSSM generalizes to unseen test interventions, an important property of identifiable models (8/n).

16.07.2025 18:12 — 👍 0 🔁 0 💬 1 📌 0

We apply iSSM to calcium recordings with photostimulation from the anterior lateral motor cortex (ALM) of mice performing a short-term memory task. We show that the latent variables inferred by iSSM distinguish between correct and incorrect trials, indicating their behavioral relevance (7/n).

16.07.2025 18:11 — 👍 0 🔁 0 💬 1 📌 0

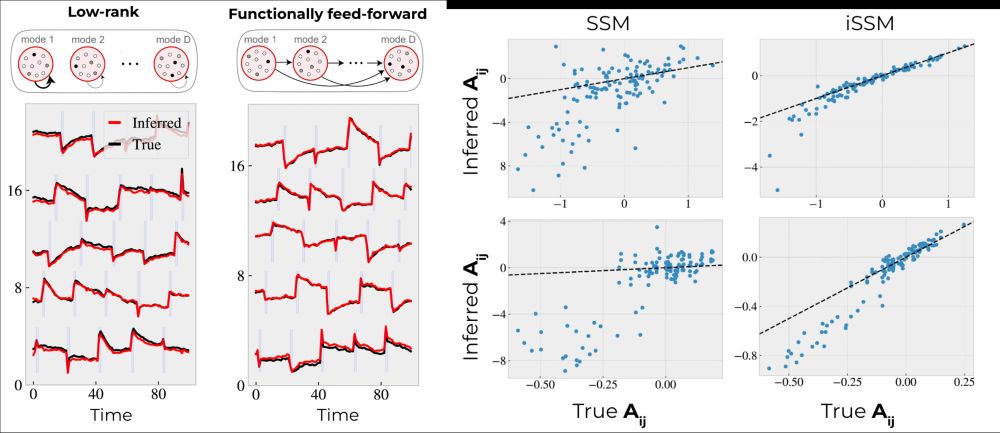

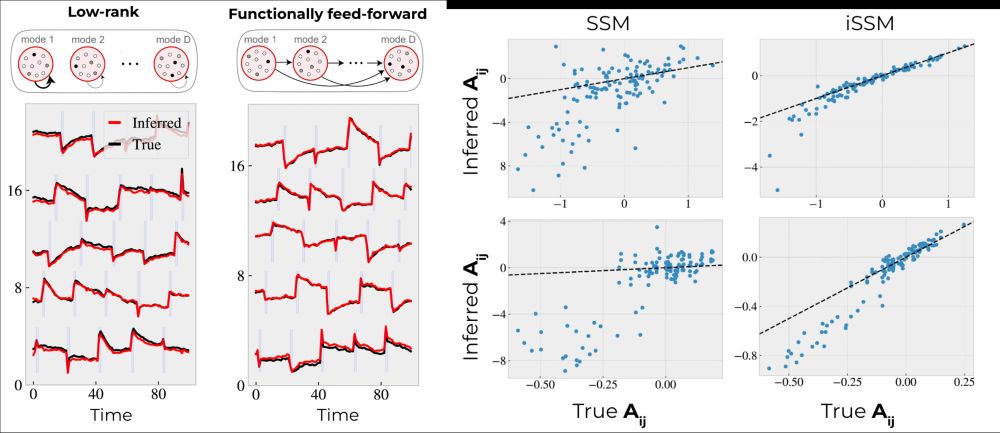

In linear models of persistent activity in working memory, we show that iSSM can recover the true connectivity matrix with high precision. Under partial observations, this identification problem had been posed as an open problem in the literature (Qian et al. 2024) (6/n).

16.07.2025 18:11 — 👍 0 🔁 0 💬 1 📌 0

In models of motor cortex with linear latent dynamics and nonlinear observations, we show that iSSM can identify the underlying dynamics and emissions and recover the true latent variables. Recovery accuracy improves as more interventions are applied (5/n).

16.07.2025 18:11 — 👍 1 🔁 0 💬 1 📌 0

Under certain assumptions (bounded completeness of the observation noise, injectivity of the mixing function, and faithfulness), we prove that with sufficiently diverse interventions, iSSM can recover the true latents, dynamics, emissions, and noise parameters (4/n).

16.07.2025 18:11 — 👍 0 🔁 0 💬 1 📌 0

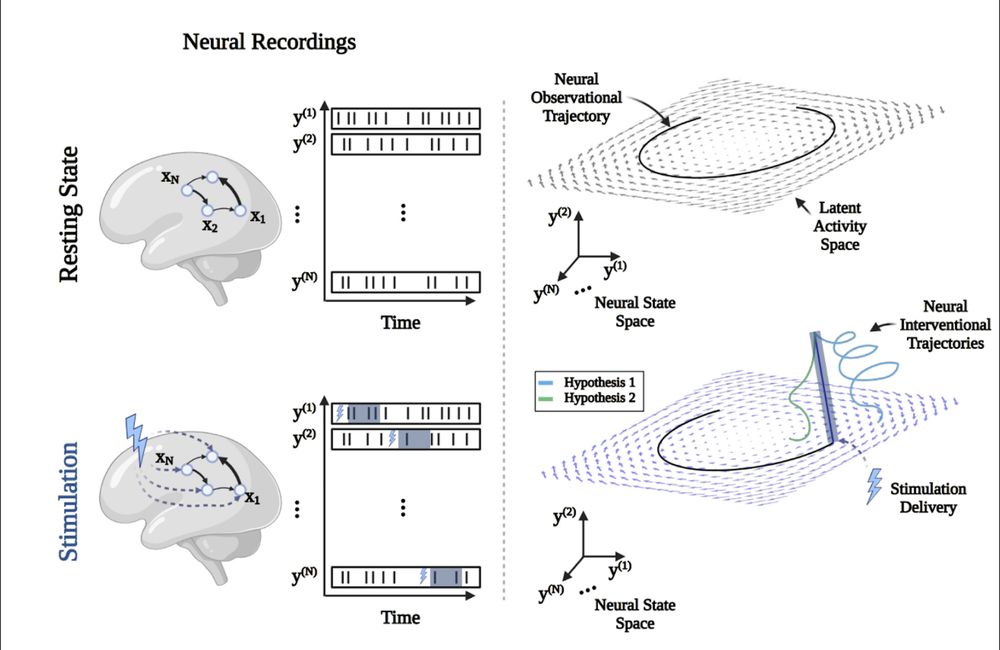

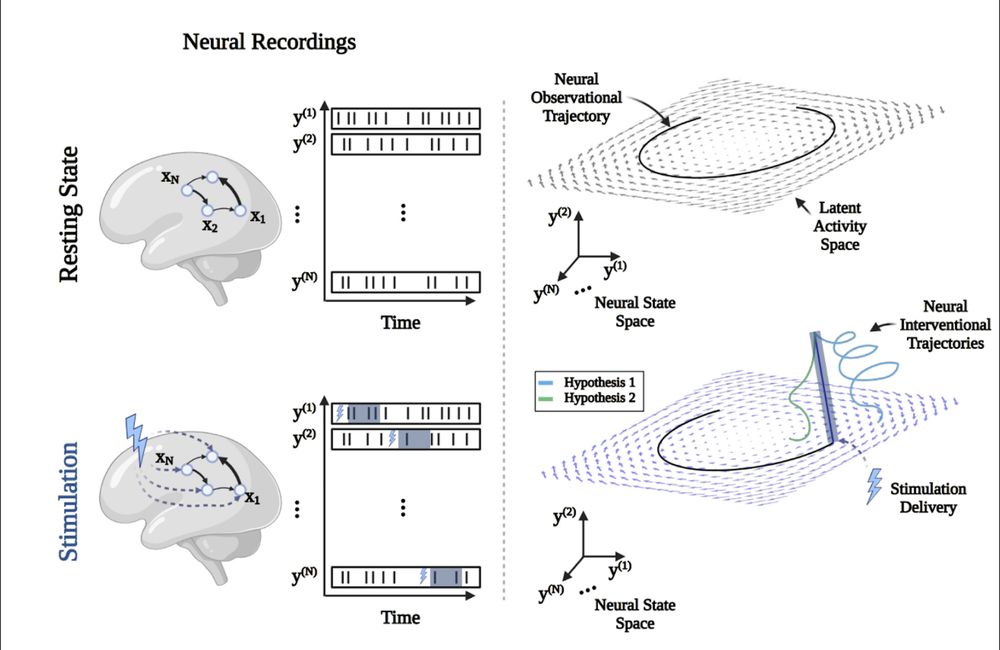

We propose interventional state space models (iSSM), a statistical framework for jointly modeling observational and interventional data. Unlike standard state space models (SSMs), iSSM treats interventions causally: an intervention decouples the intervened nodes from their causal parents (3/n).

16.07.2025 18:11 — 👍 0 🔁 0 💬 1 📌 0
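A minimal sketch of what "decoupling nodes from their causal parents" means, assuming linear latent dynamics x_{t+1} = A x_t (plus optional Gaussian noise) and a linear readout y_t = C x_t. This is not the iSSM code from our repository: the helper `simulate_intervened_ssm`, the hard (do-style) clamping, and the `mask`/`u` layout are hypothetical choices for illustration.

```python
import numpy as np

def simulate_intervened_ssm(A, C, x0, u, mask, noise_std=0.0, seed=0):
    """Simulate a linear SSM under hard (do-style) interventions.

    Hypothetical helper for illustration only.
    A: (d, d) latent dynamics, C: (n, d) readout, x0: (d,) initial state,
    u: (T, d) intervention values, mask: (T, d) booleans marking which
    latent units are intervened on at each step.
    """
    rng = np.random.default_rng(seed)
    T, d = u.shape
    x = np.zeros((T, d))
    x_prev = np.asarray(x0, dtype=float)
    for t in range(T):
        # Observational update: each unit is driven by its causal parents through A.
        x_t = A @ x_prev + noise_std * rng.standard_normal(d)
        # Intervention: clamped units are cut off from their parents and
        # set directly to the intervention value.
        x_t = np.where(mask[t], u[t], x_t)
        x[t] = x_t
        x_prev = x_t
    y = x @ C.T  # noiseless linear readout, y_t = C x_t
    return x, y

# Usage: unit 0 drives unit 1; we clamp unit 0 to 2.0 at every step.
A = np.array([[0.9, 0.0],
              [0.5, 0.8]])
C = np.eye(2)
T = 5
u = np.zeros((T, 2))
u[:, 0] = 2.0
mask = np.zeros((T, 2), dtype=bool)
mask[:, 0] = True
x, y = simulate_intervened_ssm(A, C, [0.0, 0.0], u, mask)
# Unit 0 stays at 2.0 regardless of its own recurrence; unit 1 still follows A.
```

Setting `mask` to all `False` recovers the purely observational model.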

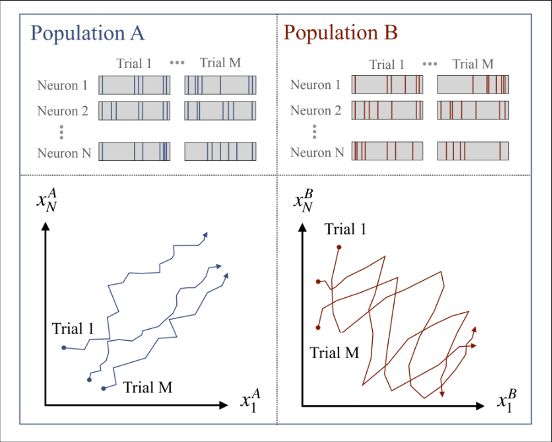

Can we use interventional data to identify the dynamics? Intuitively, interventions kick the state of the system off its attractor manifold, allowing it to explore the state space (Jazayeri et al. 2017) (2/n).

16.07.2025 18:10 — 👍 0 🔁 0 💬 1 📌 0
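To illustrate this intuition, here is a toy sketch with a hypothetical 2-d line attractor, in which known additive kicks u_t stand in for interventions (a simplification, not the iSSM intervention model). With purely observational data started on the attractor, the state never leaves the line, so least squares cannot pin down the dynamics off the manifold; with kicks, the same regression recovers A. The helpers `fit_dynamics` and `rollout` are illustrative names.

```python
import numpy as np

def fit_dynamics(X, U):
    """Least-squares estimate of A from x_{t+1} = A x_t + u_t with known kicks u_t.

    X: (T+1, d) states, U: (T, d) perturbations applied at steps 0..T-1.
    Returns the estimate and the rank of the regressor (past-state) matrix.
    """
    past, future = X[:-1], X[1:]
    # Solve past @ A.T ~= future - U in the least-squares sense.
    A_T, _, rank, _ = np.linalg.lstsq(past, future - U, rcond=None)
    return A_T.T, rank

def rollout(A, x0, U):
    """Noiseless rollout x_{t+1} = A x_t + u_t."""
    X = [np.asarray(x0, dtype=float)]
    for u in U:
        X.append(A @ X[-1] + u)
    return np.array(X)

# Hypothetical line attractor: the x-axis is neutrally stable (eigenvalue 1),
# the y-axis decays (eigenvalue 0.5).
A_true = np.array([[1.0, 0.0],
                   [0.0, 0.5]])
T = 50
rng = np.random.default_rng(0)

# Observational data: the state starts on the attractor and never leaves it.
U_obs = np.zeros((T, 2))
A_obs, rank_obs = fit_dynamics(rollout(A_true, [1.0, 0.0], U_obs), U_obs)

# Interventional data: known random kicks push the state off the attractor.
U_int = rng.standard_normal((T, 2))
A_int, rank_int = fit_dynamics(rollout(A_true, [1.0, 0.0], U_int), U_int)

print(rank_obs, rank_int)          # 1 2: only the kicked data span the state space
print(np.allclose(A_int, A_true))  # True (noiseless data)
```

In the observational fit, the decay rate along the y-axis is simply never exercised by the data, so `A_obs` leaves it at the minimum-norm value of 0 rather than the true 0.5.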

In neuroscience, we often ask: which dynamical system generated the data? However, our ability to distinguish between dynamical hypotheses from data is limited by model non-identifiability. For example, the two systems below are indistinguishable from observational data alone (1/n).

16.07.2025 18:10 — 👍 1 🔁 0 💬 1 📌 0
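A classic instance of this non-identifiability, sketched under the assumption of a noiseless linear SSM (x_{t+1} = A x_t, y_t = C x_t; not necessarily the two systems in our figure): any invertible change of latent basis S gives a model (S A S^{-1}, C S^{-1}, S x_0) with different parameters but identical observations. The `simulate` helper and the random parameters are hypothetical.

```python
import numpy as np

def simulate(A, C, x0, T):
    """Noiseless linear SSM: x_{t+1} = A x_t, y_t = C x_t."""
    x = np.asarray(x0, dtype=float)
    ys = []
    for _ in range(T):
        ys.append(C @ x)
        x = A @ x
    return np.array(ys)

rng = np.random.default_rng(1)
d, n, T = 3, 2, 20
A = 0.9 * np.linalg.qr(rng.standard_normal((d, d)))[0]  # stable, rotation-like dynamics
C = rng.standard_normal((n, d))
x0 = rng.standard_normal(d)

# Any invertible change of latent coordinates S yields a different-looking model...
S = rng.standard_normal((d, d)) + 3.0 * np.eye(d)
S_inv = np.linalg.inv(S)
A2, C2, x02 = S @ A @ S_inv, C @ S_inv, S @ x0

# ...that produces exactly the same observations.
y1 = simulate(A, C, x0, T)
y2 = simulate(A2, C2, x02, T)
print(np.allclose(y1, y2))  # True
```

Interventions that act on specific latent units break this symmetry, because a generic S mixes the intervened units with the others.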

Pleased to announce that our paper "Identifying Neural Dynamics Using Interventional State Space Models" has been selected for a poster presentation at #ICML2025. Please check the thread for paper details (0/n).

Presentation info: icml.cc/virtual/2025....

16.07.2025 18:09 — 👍 12 🔁 3 💬 1 📌 0

We compute pairwise distances for 2 pretrained models and 10 input prompts. Our results suggest that Causal OT (and SSD) distances depend mainly on the prompt rather than the model. This is reflected in the similar pattern across the four quadrants of the distance matrices (11/n).

22.04.2025 18:14 — 👍 1 🔁 0 💬 1 📌 0
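A hedged sketch of how a 20 x 20 distance matrix for 2 models and 10 prompts splits into four quadrants. The `euclidean` metric and the random `responses` are placeholders (assumptions, not our data or our metric); in the paper the entries come from Causal OT or SSD. The helper `pairwise_distance_matrix` is a hypothetical name.

```python
import numpy as np

def pairwise_distance_matrix(responses, dist):
    """Symmetric matrix of dist(responses[i], responses[j]) over all pairs."""
    N = len(responses)
    D = np.zeros((N, N))
    for i in range(N):
        for j in range(i + 1, N):
            D[i, j] = D[j, i] = dist(responses[i], responses[j])
    return D

def euclidean(a, b):
    # Stand-in metric for illustration; the thread uses Causal OT and SSD instead.
    return float(np.linalg.norm(a - b))

n_models, n_prompts, dim = 2, 10, 8
rng = np.random.default_rng(0)
# Synthetic placeholder data indexed [model, prompt]; not results from the paper.
responses = rng.standard_normal((n_models, n_prompts, dim))
# Model-major ordering: rows/columns 0-9 are model 0, 10-19 are model 1.
flat = [responses[m, p] for m in range(n_models) for p in range(n_prompts)]
D = pairwise_distance_matrix(flat, euclidean)

# Four quadrants: within-model blocks on the diagonal, cross-model blocks off it.
quadrants = {
    (a, b): D[a * n_prompts:(a + 1) * n_prompts, b * n_prompts:(b + 1) * n_prompts]
    for a in range(n_models)
    for b in range(n_models)
}
```

With this ordering, a prompt-driven distance shows up as the off-diagonal quadrants repeating the pattern of the within-model quadrants.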

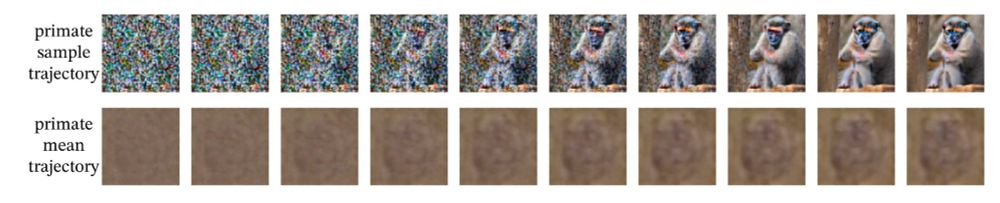

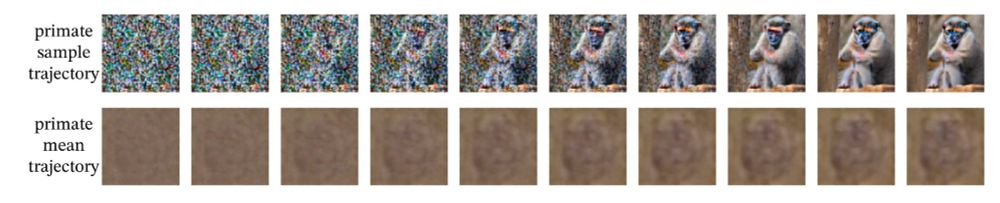

Our final result is on latent text-to-image diffusion models. We took pretrained models, generated text-conditional samples, and decoded both the samples and the mean trajectories into images. The lack of structure in the decoded means suggests that stochasticity and dynamics are critical for image generation (10/n).

22.04.2025 18:14 — 👍 0 🔁 0 💬 1 📌 0

We show that Causal OT can use across-time correlations to distinguish between the three systems (9/n).

22.04.2025 18:13 — 👍 0 🔁 0 💬 1 📌 0

Our second example focuses on distinguishing between flow fields. We generated data from three dynamical systems (saddle, point attractor, and line attractor) and adversarially tuned their parameters so that the marginal distributions of all three systems are the same (8/n).

22.04.2025 18:12 — 👍 0 🔁 0 💬 1 📌 0

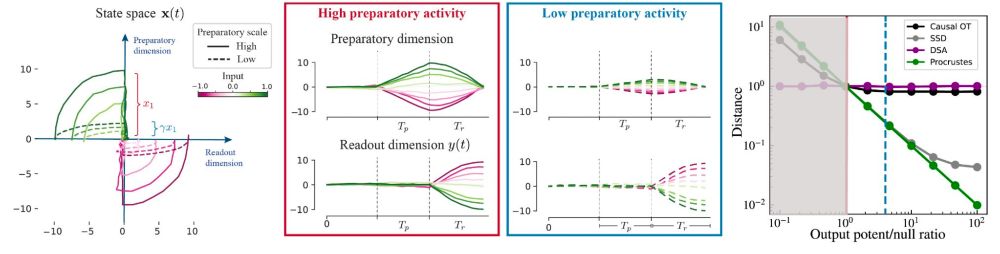

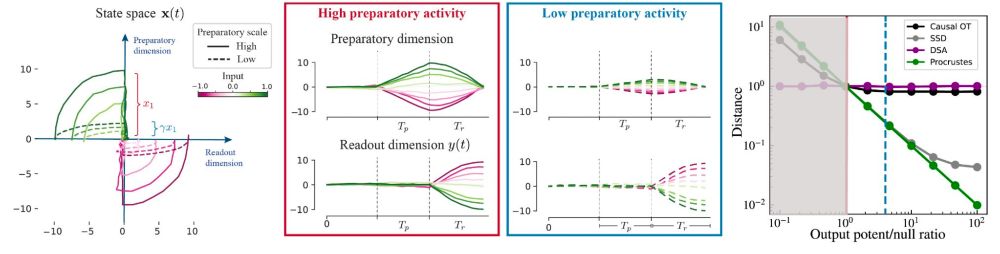

We apply this intuition to the leading model of preparatory dynamics in the motor cortex. We show that Causal OT can distinguish between the readout (i.e., muscle activity) and motor dynamics, even when preparatory activity lies in low-variance dimensions of the population dynamics (7/n).

22.04.2025 18:12 — 👍 1 🔁 0 💬 1 📌 0

Our first experiment, a toy 1-D example, builds an important intuition: Causal OT can distinguish between systems in which the past is more or less predictive of the future, even when their marginal statistics are exactly the same (6/n).

22.04.2025 18:12 — 👍 0 🔁 0 💬 1 📌 0
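One way to build such a pair, sketched with hypothetical stationary AR(1) processes (not necessarily the toy example in the paper): both have N(0, 1) marginals at every time point, yet the one-step prediction variance Var[x_t | x_{<t}] is 1 - rho^2 for one and 1 for the other. The helper names are illustrative.

```python
import numpy as np

def ar1_covariance(rho, T):
    """Covariance of a stationary, unit-variance AR(1) process: Cov[x_i, x_j] = rho ** |i - j|."""
    idx = np.arange(T)
    return rho ** np.abs(idx[:, None] - idx[None, :])

def one_step_prediction_var(S, t):
    """Var[x_t | x_0, ..., x_{t-1}] for a zero-mean Gaussian with covariance S (t >= 1)."""
    S_pp = S[:t, :t]  # covariance of the past
    S_tp = S[t, :t]   # covariance between x_t and the past
    return float(S[t, t] - S_tp @ np.linalg.solve(S_pp, S_tp))

T = 5
S_pred = ar1_covariance(0.9, T)  # the past is highly predictive of the future
S_iid = ar1_covariance(0.0, T)   # i.i.d.: the past carries no information

# Same marginals: every time point is N(0, 1) in both processes.
same_marginals = np.allclose(np.diag(S_pred), np.diag(S_iid))  # True
# Different predictability: residual variance after seeing the past.
v_pred = one_step_prediction_var(S_pred, 3)  # 1 - 0.9**2 = 0.19 (up to rounding)
v_iid = one_step_prediction_var(S_iid, 3)    # 1.0
```

Any distance computed only from the per-time marginals returns zero for this pair, even though one process is far more predictable than the other.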

We then introduce Causal OT, a distance metric that respects both stochasticity and dynamics. Causal OT admits a closed-form solution for Gaussian processes and, importantly, respects temporal causality, a property that matters when comparing processes with different predictability characteristics (5/n).

22.04.2025 18:11 — 👍 0 🔁 0 💬 1 📌 0
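The thread does not spell out the closed form, so we do not reproduce it here. As a hedged illustration of what "respecting time causality" means for Gaussian processes, the sketch below assumes scalar-valued processes on T time points, writes each as x = m + L xi with L the lower-triangular Cholesky factor, and evaluates one family of causal couplings: drive both processes with the same innovations xi_t, up to a per-time sign flip. Because L is lower triangular and invertible, y[:t] depends only on x[:t] and vice versa, so the coupling is causal in both directions. Its cost is therefore an upper bound on a causal OT distance and never below the unconstrained (Bures) 2-Wasserstein distance. All function names are hypothetical.

```python
import numpy as np

def psd_sqrt(M):
    """Symmetric PSD square root via eigendecomposition."""
    w, V = np.linalg.eigh(M)
    return (V * np.sqrt(np.clip(w, 0.0, None))) @ V.T

def w2_squared(m1, S1, m2, S2):
    """Squared 2-Wasserstein (Bures) distance between N(m1, S1) and N(m2, S2).

    Treats each trajectory as a single vector, so it ignores the order of time.
    """
    r1 = psd_sqrt(S1)
    return float(np.sum((m1 - m2) ** 2) + np.trace(S1) + np.trace(S2)
                 - 2.0 * np.trace(psd_sqrt(r1 @ S2 @ r1)))

def synchronous_causal_cost(m1, S1, m2, S2):
    """Squared cost of the coupling x = m1 + L1 xi, y = m2 + L2 D xi.

    L1, L2 are lower-triangular Cholesky factors and D is a diagonal sign
    matrix chosen per time step. The coupling is causal in both directions,
    so this cost upper-bounds a causal OT distance between the processes.
    """
    L1 = np.linalg.cholesky(S1)
    L2 = np.linalg.cholesky(S2)
    align = np.diag(L1.T @ L2)  # <L1[:, t], L2[:, t]> for each innovation t
    # E||x - y||^2 = ||m1 - m2||^2 + tr S1 + tr S2 - 2 sum_t D_tt * align_t,
    # minimized by choosing D_tt = sign(align_t).
    return float(np.sum((m1 - m2) ** 2) + np.trace(S1) + np.trace(S2)
                 - 2.0 * np.sum(np.abs(align)))

def ar1_covariance(rho, T):
    """Hypothetical example process: Cov[x_i, x_j] = rho ** |i - j|."""
    idx = np.arange(T)
    return rho ** np.abs(idx[:, None] - idx[None, :])

T = 6
m = np.zeros(T)
S_a, S_b = ar1_covariance(0.9, T), ar1_covariance(0.2, T)
w2 = w2_squared(m, S_a, m, S_b)
causal = synchronous_causal_cost(m, S_a, m, S_b)
print(w2, causal)  # a specific causal coupling costs at least the unconstrained optimum
```

The gap between the two numbers is one way to see that restricting couplings to respect time order changes the geometry, which is the property the post refers to.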

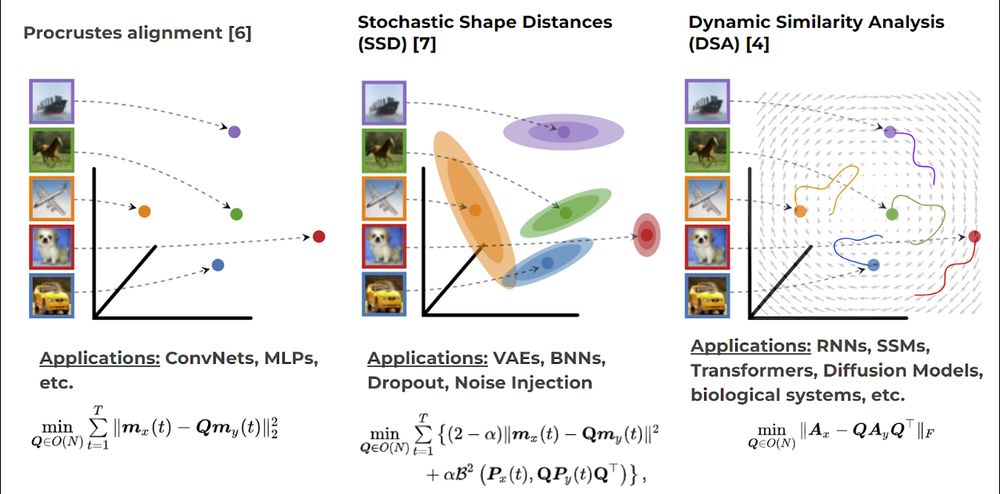

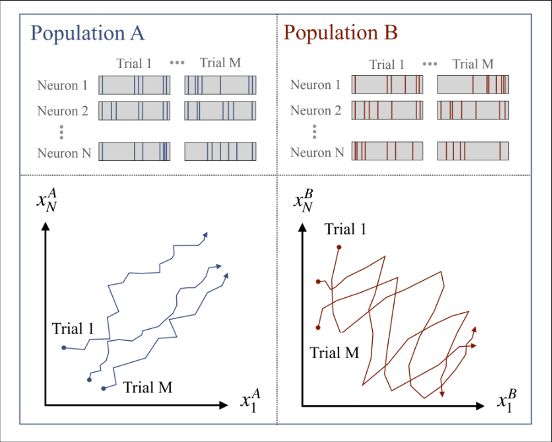

We argue that neither stochasticity nor dynamics alone is sufficient to capture similarities in noisy dynamical systems. This is important because many recent models have both of these components (e.g. diffusion models and biological systems) (4/n).

22.04.2025 18:08 — 👍 0 🔁 0 💬 1 📌 0

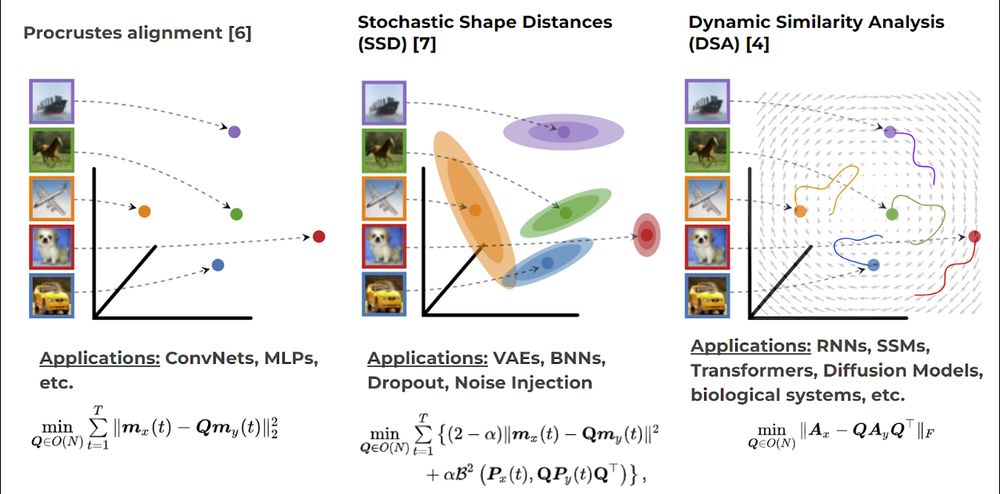

Several distance metrics have been proposed to measure representational similarity, each under different assumptions. Most of them assume deterministic responses to the inputs, while more recent methods account for stochastic or dynamic responses (3/n).

22.04.2025 18:08 — 👍 0 🔁 0 💬 1 📌 0
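To see why the deterministic assumption matters, here is a minimal sketch assuming Gaussian response distributions, with the Bures 2-Wasserstein distance as one example of a stochastic metric (not the specific metric of any method in the figure). Two hypothetical networks with identical mean responses but different trial-to-trial noise look identical to a mean-based distance. The helper names are illustrative.

```python
import numpy as np

def psd_sqrt(M):
    """Symmetric PSD square root via eigendecomposition."""
    w, V = np.linalg.eigh(M)
    return (V * np.sqrt(np.clip(w, 0.0, None))) @ V.T

def gaussian_w2(m1, S1, m2, S2):
    """2-Wasserstein distance between N(m1, S1) and N(m2, S2) (Bures form)."""
    r1 = psd_sqrt(S1)
    d2 = (np.sum((m1 - m2) ** 2) + np.trace(S1) + np.trace(S2)
          - 2.0 * np.trace(psd_sqrt(r1 @ S2 @ r1)))
    return float(np.sqrt(max(d2, 0.0)))

def mean_distance(m1, m2):
    """Deterministic comparison: only the mean (trial-averaged) responses matter."""
    return float(np.linalg.norm(m1 - m2))

# Hypothetical responses of two networks to the same input: equal means, different noise.
m = np.array([1.0, -1.0])
S_a = np.diag([1.0, 1.0])
S_b = np.diag([4.0, 0.25])
d_mean = mean_distance(m, m)           # 0.0: the deterministic view sees no difference
d_w2 = gaussian_w2(m, S_a, m, S_b)     # sqrt(1.25): the stochastic view separates them
```

For diagonal covariances the Bures term reduces to the sum of squared differences of standard deviations, here (1 - 2)^2 + (1 - 0.5)^2 = 1.25.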

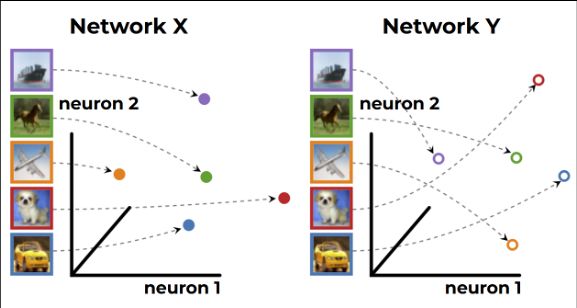

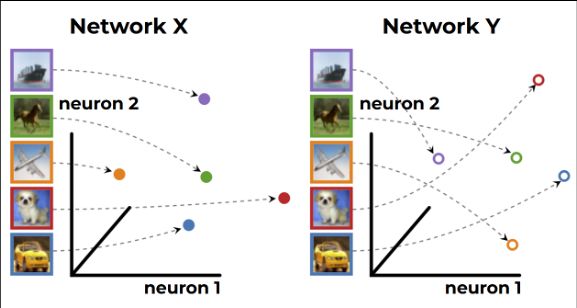

This paradigm lets us analyze shape space, a space in which each point corresponds to a network and distances reflect the (dis)similarity between representations. Williams et al. (2021) showed that analyzing shape space helps us understand how representations vary across models (2/n).

22.04.2025 18:07 — 👍 0 🔁 0 💬 1 📌 0
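A minimal sketch of one shape distance of this kind, assuming both networks have the same number of units and using the orthogonal Procrustes distance after centering and unit-norm scaling. The function name and the random data are hypothetical; this is an illustration, not the implementation from Williams et al. (2021).

```python
import numpy as np

def procrustes_distance(X, Y):
    """Orthogonal Procrustes shape distance between two response matrices.

    X, Y: (n_inputs, n_units) responses of two networks to the same inputs,
    with equal numbers of units. Computes min_Q ||Xc - Yc Q||_F over
    orthogonal Q, after centering each matrix and scaling it to unit norm.
    """
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    Xc /= np.linalg.norm(Xc)
    Yc /= np.linalg.norm(Yc)
    # With unit norms, min_Q ||Xc - Yc Q||_F^2 = 2 - 2 * nuclear_norm(Xc.T @ Yc).
    nuc = np.linalg.norm(Xc.T @ Yc, ord="nuc")
    return float(np.sqrt(max(2.0 - 2.0 * nuc, 0.0)))

rng = np.random.default_rng(0)
X = rng.standard_normal((50, 4))                  # hypothetical: 50 inputs x 4 units
Q, _ = np.linalg.qr(rng.standard_normal((4, 4)))  # random orthogonal matrix
Y = 3.0 * X @ Q + 1.0                             # rotated, scaled, shifted copy of X
Z = rng.standard_normal((50, 4))                  # unrelated responses
d_same = procrustes_distance(X, Y)  # ~0: same "shape"
d_diff = procrustes_distance(X, Z)  # clearly > 0
```

Each network becomes a point in shape space, and this distance is invariant to rotations, translations, and rescaling of the unit axes.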

A central question in AI is understanding how models shape their hidden representations. A useful paradigm is to define metric spaces that quantify differences in representations across networks: given two networks, the goal is to compare their high-dimensional responses to the same inputs (1/n).

22.04.2025 18:07 — 👍 0 🔁 0 💬 1 📌 0

ICLR 2025: Comparing noisy neural population dynamics using optimal transport distances (Oral)

Excited to announce that our paper "Comparing noisy neural population dynamics using optimal transport distances" has been selected for an oral presentation at #ICLR2025 (top 1.8% of papers). Check the thread for paper details (0/n).

Presentation info: iclr.cc/virtual/2025....

22.04.2025 18:06 — 👍 23 🔁 7 💬 1 📌 0

