The curriculum effect in visual learning: the role of readout dimensionality

Generalization of visual perceptual learning (VPL) to unseen conditions varies across tasks. Previous work suggests that training curriculum may be integral to generalization, yet a theoretical explan...

🚨 New preprint alert!

🧠🤖

We propose a theory of how learning curriculum affects generalization through neural population dimensionality. Learning curriculum is a determining factor of neural dimensionality - where you start from determines where you end up.

🧠📈

A 🧵:

tinyurl.com/yr8tawj3

30.09.2025 14:25 — 👍 79 🔁 25 💬 1 📌 2

Excited to share that POSSM has been accepted to #NeurIPS2025! See you in San Diego 🏖️

20.09.2025 15:40 — 👍 11 🔁 3 💬 1 📌 1

Neural Interfaces

Neural Interfaces is a comprehensive book on the foundations, major breakthroughs, and most promising future developments of neural interfaces. The bo

I'm very excited to announce our new book, Neural Interfaces, published by Elsevier. The book is a comprehensive resource for anyone interested in or working on neural interfaces and brain-computer interfaces (BCIs).

shop.elsevier.com/books/neural...

19.08.2025 20:18 — 👍 6 🔁 1 💬 1 📌 0

🐐

12.07.2025 20:23 — 👍 1 🔁 0 💬 0 📌 0

Step 1: Understand how scaling improves LLMs.

Step 2: Directly target underlying mechanism.

Step 3: Improve LLMs independent of scale. Profit.

In our ACL 2025 paper we look at Step 1 in terms of training dynamics.

Project: mirandrom.github.io/zsl

Paper: arxiv.org/pdf/2506.05447

12.07.2025 18:44 — 👍 4 🔁 1 💬 2 📌 0

(1/n)🚨Train a model solving DFT for any geometry with almost no training data

Introducing Self-Refining Training for Amortized DFT: a variational method that predicts ground-state solutions across geometries and generates its own training data!

📜 arxiv.org/abs/2506.01225

💻 github.com/majhas/self-...

10.06.2025 19:49 — 👍 12 🔁 4 💬 1 📌 1

Manitokan are images set up where one can bring a gift or receive a gift. 1930s Rocky Boy Reservation, Montana, Montana State University photograph. Colourized with AI

Preprint Alert 🚀

Multi-agent reinforcement learning (MARL) often assumes that agents know when other agents cooperate with them. But for humans, this isn’t always the case. For example, Plains Indigenous groups used to leave resources for others to use at effigies called Manitokan.

1/8

05.06.2025 15:32 — 👍 35 🔁 13 💬 1 📌 3

Finally, we show POSSM's performance on speech decoding, a long-context task that can quickly grow expensive for Transformers. In the unidirectional setting, POSSM beats the GRU baseline, achieving a phoneme error rate (PER) of 27.3 while being more robust to variation in preprocessing.

🧵6/7

06.06.2025 17:40 — 👍 3 🔁 0 💬 1 📌 0

Cross-species transfer! 🐵➡️🧑

Excitingly, we find that POSSM pretrained solely on monkey reaching data achieves SOTA performance when decoding imagined handwriting in human subjects! This shows the potential of leveraging NHP data to bootstrap human BCI decoding in low-data clinical settings.

🧵5/7

06.06.2025 17:40 — 👍 4 🔁 0 💬 2 📌 0

By pretraining on 140 monkey reaching sessions, POSSM effectively transfers to new subjects and tasks, matching or outperforming several baselines (e.g., GRU, POYO, Mamba) across sessions.

✅ High R² across the board

✅ 9× faster inference than Transformers

✅ <5ms latency per prediction

🧵4/7

06.06.2025 17:40 — 👍 3 🔁 0 💬 1 📌 0

POSSM combines the real-time inference of an RNN with the tokenization, pretraining, and finetuning abilities of a Transformer!

Using POYO-style tokenization, we encode spikes in 50ms windows and stream them to a recurrent model (e.g., Mamba, GRU) for fast, frequent predictions over time.

🧵3/7

06.06.2025 17:40 — 👍 3 🔁 0 💬 1 📌 0

The problem with existing decoders?

😔 RNNs offer efficient, causal inference, but rely on rigid, binned input formats - limiting generalization to new neurons or sessions.

😔 Transformers enable generalization via tokenization, but have high computational costs due to the attention mechanism.

🧵2/7

06.06.2025 17:40 — 👍 4 🔁 0 💬 1 📌 0

New preprint! 🧠🤖

How do we build neural decoders that are:

⚡️ fast enough for real-time use

🎯 accurate across diverse tasks

🌍 generalizable to new sessions, subjects, and even species?

We present POSSM, a hybrid SSM architecture that optimizes for all three of these axes!

🧵1/7

06.06.2025 17:40 — 👍 53 🔁 24 💬 2 📌 8

I am joining @ualberta.bsky.social as a faculty member and @amiithinks.bsky.social!

My research group is recruiting MSc and PhD students at the University of Alberta in Canada. Research topics include generative modeling, representation learning, interpretability, inverse problems, and neuroAI.

29.05.2025 18:53 — 👍 11 🔁 2 💬 1 📌 0

POYO+

POYO+: Multi-session, multi-task neural decoding from distinct cell-types and brain regions

Scaling models across multiple animals was a major step toward building neuro-foundation models; the next frontier is enabling multi-task decoding to expand the scope of training data we can leverage.

Excited to share our #ICLR2025 Spotlight paper introducing POYO+ 🧠

poyo-plus.github.io

🧵

25.04.2025 22:14 — 👍 44 🔁 10 💬 1 📌 1

Interested in foundation models for #neuroscience? Want to contribute to the development of the next-generation of multi-modal models? Come join us at IVADO in Montreal!

We're hiring a full-time machine learning specialist for this work.

Please share widely!

#NeuroAI 🧠📈 🧪

11.04.2025 16:17 — 👍 57 🔁 31 💬 1 📌 1

📽️ Recordings from our @cosynemeeting.bsky.social #COSYNE2025 workshop on “Agent-Based Models in Neuroscience: Complex Planning, Embodiment, and Beyond” are now online: neuro-agent-models.github.io

🧠🤖

07.04.2025 20:57 — 👍 36 🔁 11 💬 1 📌 0

Talk recordings from our COSYNE Workshop on Neuro-foundation Models 🌐🧠 are now up on the workshop website!

neurofm-workshop.github.io

05.04.2025 00:41 — 👍 34 🔁 10 💬 1 📌 1

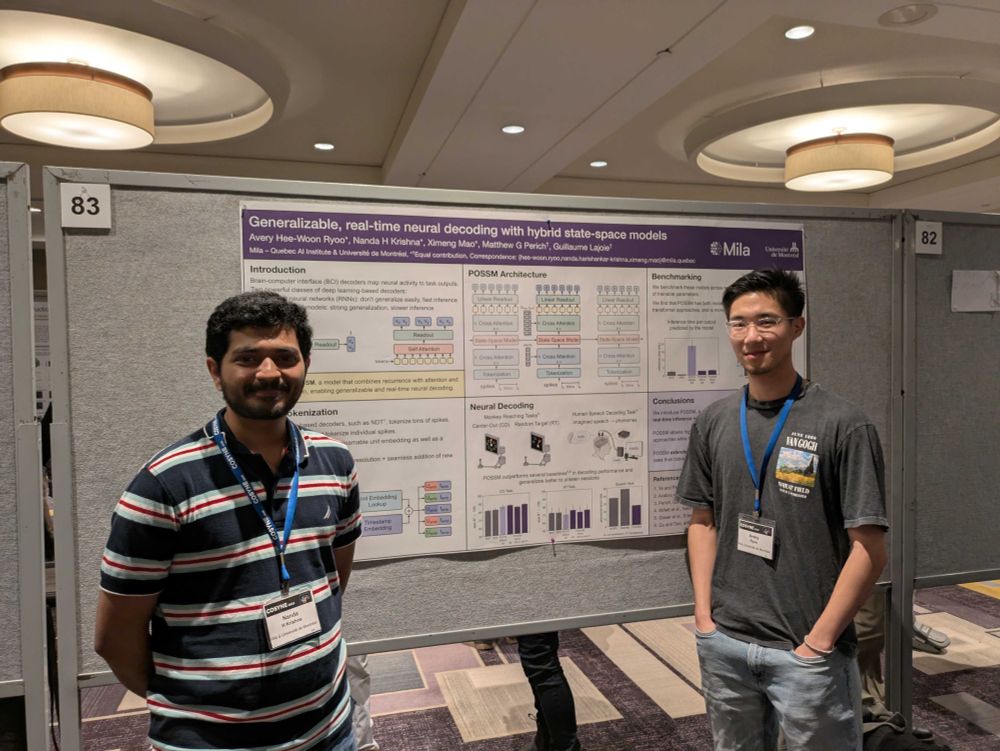

Very late, but had a 🔥 time at my first Cosyne presenting my work with @nandahkrishna.bsky.social, Ximeng Mao, @mattperich.bsky.social, and @glajoie.bsky.social on real-time neural decoding with hybrid SSMs. Keep an eye out for a preprint (hopefully) soon 👀

#Cosyne2025 @cosynemeeting.bsky.social

04.04.2025 05:21 — 👍 31 🔁 6 💬 2 📌 0

Excited to be at #Cosyne2025 for the first time! I'll be presenting my poster [2-104] during the Friday session. E-poster here: www.world-wide.org/cosyne-25/se...

27.03.2025 19:53 — 👍 8 🔁 3 💬 0 📌 0

We'll be presenting two projects at #Cosyne2025, representing two main research directions in our lab:

🧠🤖 🧠📈

1/3

27.03.2025 19:13 — 👍 46 🔁 9 💬 1 📌 1

@oliviercodol.bsky.social my opportunity to lose to scientists in a different field

27.03.2025 02:06 — 👍 2 🔁 0 💬 1 📌 0

Just a couple days until Cosyne - stop by [3-083] this Saturday and say hi! @nandahkrishna.bsky.social

24.03.2025 18:19 — 👍 6 🔁 3 💬 0 📌 0

This will be a more difficult Cosyne than normal, due to both the travel restrictions for people coming from the US and the strike that may be happening at the hotel in Montreal.

But, we can still make this an awesome meeting as usual, y'all. Let's pull together and make it happen!

🧠📈

#Cosyne2025

23.03.2025 21:26 — 👍 44 🔁 5 💬 2 📌 0

Hi! Currently there are no plans to livestream, but we may *potentially* post recordings in the future (contingent on speaker permission)

11.03.2025 01:54 — 👍 2 🔁 0 💬 1 📌 0

Join us at #COSYNE2025 to explore recent advancements in large-scale training and analysis of brain data! 🧠🟦

We also made a starter pack with (most of) our speakers: go.bsky.app/Ss6RaEF

10.03.2025 21:21 — 👍 17 🔁 4 💬 0 📌 0

COSYNE 2025 Workshop - Building a foundation model for the brain

Join us to explore neuro-foundation models. March 31-April 1, 2025 in Mont Tremblant, Canada.

We have a great lineup of speakers and panelists, you can check out our schedule here: neurofm-workshop.github.io. Co-organized with: @mehdiazabou.bsky.social, @nandahkrishna.bsky.social, @colehurwitz.bsky.social, Eva Dyer, and @tyrellturing.bsky.social. We hope to see you there!

10.03.2025 19:55 — 👍 5 🔁 0 💬 1 📌 2

How can large-scale models + datasets revolutionize neuroscience 🧠🤖🌐? We are excited to announce our workshop: “Building a foundation model for the brain: datasets, theory, and models” at @cosynemeeting.bsky.social #COSYNE2025. Join us in Mont-Tremblant, Canada from March 31 – April 1!

10.03.2025 19:55 — 👍 44 🔁 17 💬 2 📌 9

forms.gle/1DPPVe8KLRWD...

here's a google form for ease!

01.02.2025 22:48 — 👍 0 🔁 0 💬 0 📌 0

Staff Reporter at The Transmitter. Science Journalist.

PhD student at McGill University working on the intersection of Neuroscience and AI

Mathematician, writer, Cornell professor. All cards on the table, face up, all the time. www.stevenstrogatz.com

I lead Cohere For AI. Formerly Research Google Brain. ML Efficiency, LLMs, @trustworthy_ml.

machine learning asst prof at @cs.ubc.ca and amii

statistical testing, kernels, learning theory, graphs, active learning…

she/her, 🏳️⚧️, @queerinai.com

Mathematician/informatician thinking probabilistically, expecting the same of you.

Edinburgh 🏴 / they≥she>he≥0 🏳️⚧️

It is the categories in the mind and the guns in their hands which keep us enslaved.

👩🏻🏫 asst teaching prof @ uc san diego

🧠 cognitive scientist

🤖 Machine Learning & 🌊 Simulation | 📺 YouTuber | 🧑🎓 PhD student @ Thuerey Group

The Cognitive Computational Neuroscience Conference is an annual forum for discussion among researchers in cognitive science, neuroscience, and AI, dedicated to understanding the computations that underlie complex behavior.

https://2025.ccneuro.org

So far I have not found the science, but the numbers keep on circling me.

Views my own, unfortunately.

neuromantic - ML and cognitive computational neuroscience - PhD student at Kietzmann Lab, Osnabrück University.

⛓️ https://init-self.com

neuro and AI (they/she)

Allen Institute for Neural Dynamics, theory lead | UW affiliate asst prof

AI, Neuroscience and Music

Assistant Professor of Psychology at Carnegie Mellon University | Studying how we acquire, adapt, and retain skilled movements | Physical Intelligence Lab: www.tsaylab.com

PhD student at Université de Montréal and Mila

https://mj10.github.io/

Interested in iBCIs and the software that drives them. Most recently working on www.ezmsg.org

Computational cog neuro | PhD candidate @ Yale | NSFGRFP | Dartmouth ‘20

ericabusch.github.io

PhD student at Mila – Quebec AI Institute and University of Montreal. Neural-AI / Brain-computer interface.

Scientific AI/ machine learning, dynamical systems (reconstruction), generative surrogate models of brains & behavior, applications in neuroscience & mental health