@felix-m-koehler.bsky.social

🤖 Machine Learning & 🌊 Simulation | 📺 YouTuber | 🧑🎓 PhD student @ Thuerey Group

Last week, I had the honor to speak about this at the RISEof speaking ML seminar, hosted by Olof Mogren. You can find the recording here www.youtube.com/watch?v=olpX...

14.11.2025 08:44 — 👍 2 🔁 0 💬 0 📌 0

🧵 Project Page: tum-pbs.github.io/emulator-sup...

📃 Paper: arxiv.org/pdf/2510.23111

Feel free to stop by at NeurIPS in San Diego during the poster session on Friday evening, 4:30 p.m. to 7:30 p.m. PST, at poster #2106. 😊 I am looking forward to the exchange!

Proven theoretically for linear PDEs, validated experimentally on nonlinear ones like Burgers' equation. Time to rethink data-driven ML benchmarks for higher physical fidelity!

14.11.2025 08:44 — 👍 1 🔁 0 💬 1 📌 0

We show that neural emulators trained on low-fidelity data can outperform their source simulators when both are compared against high-fidelity references, thanks to inductive biases and smarter error accumulation.

14.11.2025 08:44 — 👍 1 🔁 0 💬 1 📌 0

Can your AI surpass the simulator that taught it? What if the key to more accurate PDE modeling lies in questioning your training data's origins? 🤔

Excited to share my #NeurIPS 2025 paper with @thuereygroup.bsky.social: "Neural Emulator Superiority"!

📢 Calling for (4-page) workshop papers on #differentiable programming and #SciML @euripsconf.bsky.social.

19.09.2025 12:37 — 👍 1 🔁 0 💬 0 📌 0

It may only be a band-aid, but we have just announced our new "Salon des Refusés" sessions for papers rejected due to space constraints: bsky.app/profile/euri...

19.09.2025 09:35 — 👍 14 🔁 5 💬 4 📌 0

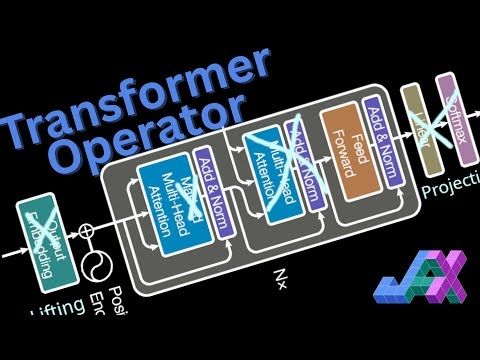

We will use APEBench to train, test and benchmark it in an advection scenario against a feedforward ConvNet.

arxiv.org/abs/2411.00180

Check out my latest video on implementing an attention-based neural operator/emulator (i.e. a Transformer) in JAX:

youtu.be/GVVWpyvXq_s

Travelling to Singapore next week for #ICLR2025 to present this paper (Sat 3 pm, no. 538): arxiv.org/abs/2502.19611

DM me (Whova, Email or bsky) if you want to chat about (autoregressive) neural emulators/operators for PDE, autodiff, differentiable physics, numerical solvers etc. 😊

Notebook: github.com/Ceyron/machi...

04.04.2025 14:36 — 👍 0 🔁 0 💬 0 📌 0

Check out my latest video on approximating the full Lyapunov spectrum for the Lorenz system: youtu.be/Enves8MDwms

Nice showcase of #JAX's features:

- `jax.lax.scan` for autoregressive rollout

- `jax.linearize`: repeated JVPs at the same linearization point

- `jax.vmap`: automatic vectorization
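To make the three features concrete, here is a minimal sketch of how they can fit together on the Lorenz system. It is not the code from the video: the RK4 stepper, the step size `dt=0.01`, and the helper names `lorenz_rhs`, `rk4_step` and `rollout` are assumptions chosen for illustration.

```python
import jax
import jax.numpy as jnp


def lorenz_rhs(u, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    # Right-hand side of the Lorenz system with the classical parameters
    x, y, z = u
    return jnp.array([sigma * (y - x), x * (rho - z) - y, x * y - beta * z])


def rk4_step(u, dt=0.01):
    # One explicit Runge-Kutta 4 step (assumed integrator for this sketch)
    k1 = lorenz_rhs(u)
    k2 = lorenz_rhs(u + 0.5 * dt * k1)
    k3 = lorenz_rhs(u + 0.5 * dt * k2)
    k4 = lorenz_rhs(u + dt * k3)
    return u + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)


def rollout(u0, num_steps):
    # jax.lax.scan: autoregressive rollout that stacks every visited state
    def body(u, _):
        u_next = rk4_step(u)
        return u_next, u_next

    _, trajectory = jax.lax.scan(body, u0, None, length=num_steps)
    return trajectory  # shape (num_steps, 3)


u0 = jnp.array([1.0, 1.0, 1.0])
trajectory = rollout(u0, 100)

# jax.vmap: the same rollout for a small ensemble of initial conditions
u0_batch = u0 + 1e-3 * jnp.arange(4.0)[:, None]  # shape (4, 3)
ensemble = jax.vmap(lambda u: rollout(u, 100))(u0_batch)  # shape (4, 100, 3)

# jax.linearize: linearize the step once, then push several tangents through it
u1, step_jvp = jax.linearize(rk4_step, u0)
pushed = jax.vmap(step_jvp)(jnp.eye(3))  # pushed[i] is the i-th Jacobian column
```

Pushing the three unit tangents recovers the full Jacobian of one step, so `pushed.T` should agree with `jax.jacfwd(rk4_step)(u0)`.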

Art.

28.03.2025 13:24 — 👍 0 🔁 0 💬 0 📌 0

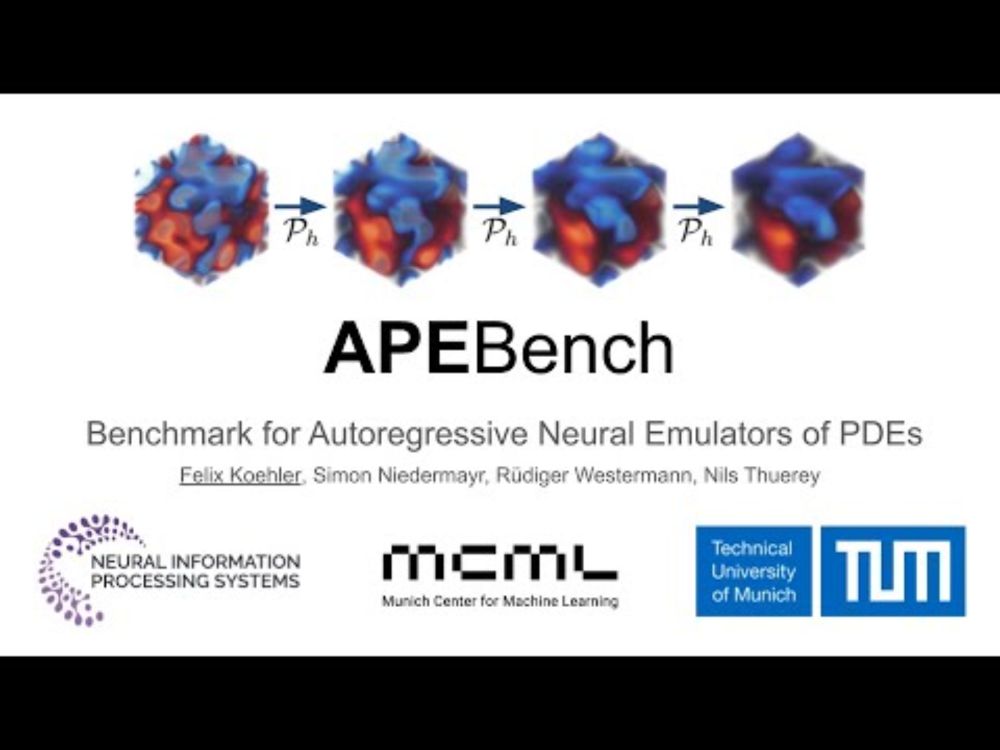

Today, I had the chance to present my #NeurIPS paper "APEBench" @SimAI4Science. You can find the recording on YouTube: youtu.be/wie-SzD6AJE

18.02.2025 18:47 — 👍 5 🔁 1 💬 0 📌 0

To get started with APEBench, install it via `pip install apebench` and check out the public documentation: tum-pbs.github.io/apebench/

12.02.2025 16:08 — 👍 0 🔁 0 💬 0 📌 0

Finally, there are so many cool experiments we did to gain insights into neural emulators, to highlight limitations they inherit from their numerical simulator counterparts, etc. You can find all the details in the paper: arxiv.org/pdf/2411.00180

12.02.2025 16:08 — 👍 0 🔁 0 💬 1 📌 0

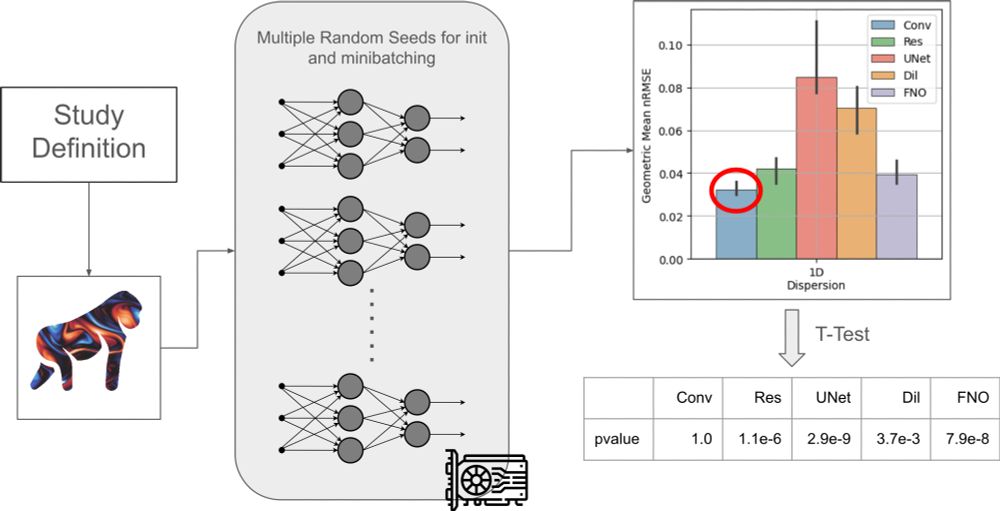

And to enforce good practices, APEBench is designed around controllable, deterministic pseudo-randomness. This makes it straightforward to collect statistics over multiple seeds, which can then be used to perform hypothesis tests.
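As a rough illustration of seed statistics feeding a hypothesis test, the following sketch uses a toy stand-in for a training run. It does not use the APEBench API: `toy_run`, `run_seeds` and `welch_t_statistic` are hypothetical helpers, and the noise level and base errors are made-up numbers.

```python
import jax
import jax.numpy as jnp


def toy_run(key, base_error):
    # Hypothetical stand-in for one training run: returns a noisy test error
    return base_error + 0.1 * jax.random.normal(key)


def run_seeds(base_error, seeds):
    # Explicit integer seeds make every run exactly reproducible
    keys = jnp.stack([jax.random.PRNGKey(s) for s in seeds])
    return jax.vmap(toy_run, in_axes=(0, None))(keys, base_error)


def welch_t_statistic(a, b):
    # Welch's t-statistic for two samples with possibly unequal variances
    var_a = jnp.var(a, ddof=1)
    var_b = jnp.var(b, ddof=1)
    return (jnp.mean(a) - jnp.mean(b)) / jnp.sqrt(var_a / a.size + var_b / b.size)


errors_a = run_seeds(1.0, range(10))        # hypothetical architecture A
errors_b = run_seeds(0.5, range(100, 110))  # hypothetical architecture B
t_stat = welch_t_statistic(errors_a, errors_b)
```

A large positive `t_stat` indicates that the error of A is higher than that of B beyond what seed-to-seed variation would explain. Turning it into a p-value requires the Student t distribution, which is left out here.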

12.02.2025 16:08 — 👍 0 🔁 0 💬 1 📌 0

Another important contribution is that APEBench defines most of its PDEs via a new parameterization that we call "difficulties". Those allow for expressing a wide range of different dynamics with a reduced and interpretable set of numbers.

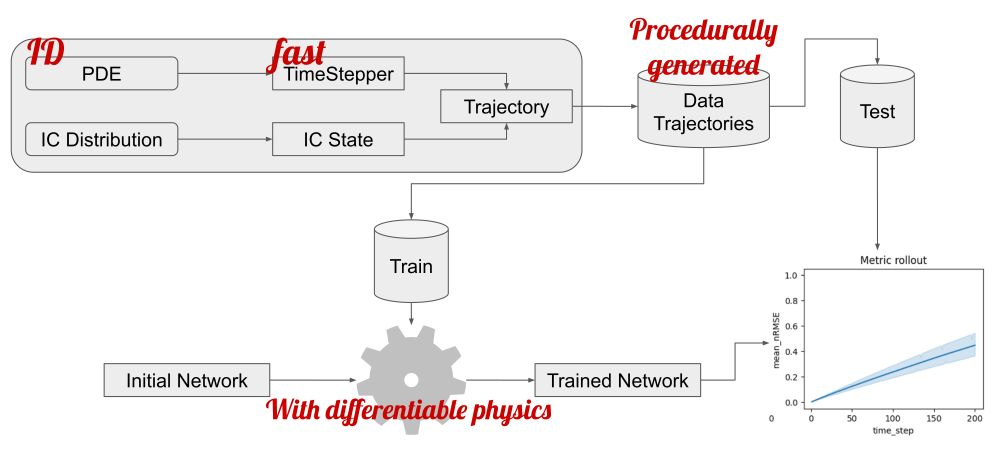

12.02.2025 16:08 — 👍 0 🔁 0 💬 1 📌 0

This allows for investigating how unrolled training helps with long-term accuracy.

12.02.2025 16:08 — 👍 0 🔁 0 💬 1 📌 0

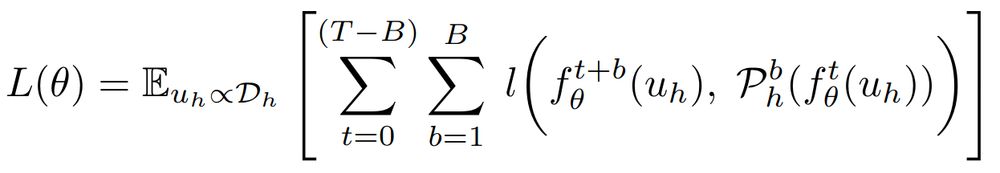

The temporal axis also covers the various ways in which emulator and simulator interact during training, for example, in supervised unrolled training. We generalize many approaches seen in the literature in terms of unrolled steps T and branch steps B.
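A minimal sketch of a supervised unrolled loss over T steps could look as follows. It is an illustration under assumptions, not APEBench's implementation: `reference_step` and `emulator_step` are hypothetical toy models, the reference trajectory is simply rolled out from the same initial state, and the branch steps B are not modeled.

```python
import jax
import jax.numpy as jnp


def reference_step(u):
    # Hypothetical reference simulator: shift a periodic 1D state by one cell
    return jnp.roll(u, 1)


def emulator_step(params, u):
    # Hypothetical linear emulator: a periodic three-point stencil
    left, center, right = params
    return left * jnp.roll(u, 1) + center * u + right * jnp.roll(u, -1)


def unrolled_loss(params, u0, num_unrolled_steps):
    # Roll emulator and reference forward together and average the
    # per-step mean squared error over all T unrolled steps
    def body(carry, _):
        u_emu, u_ref = carry
        u_emu = emulator_step(params, u_emu)
        u_ref = reference_step(u_ref)
        return (u_emu, u_ref), jnp.mean((u_emu - u_ref) ** 2)

    _, step_errors = jax.lax.scan(body, (u0, u0), None, length=num_unrolled_steps)
    return jnp.mean(step_errors)


x = jnp.linspace(0.0, 1.0, 32, endpoint=False)
u0 = jnp.sin(2 * jnp.pi * x)
params = jnp.array([0.0, 1.0, 0.0])  # starts out as the identity map

# Gradients flow through all unrolled emulator steps
grads = jax.grad(unrolled_loss)(params, u0, 5)
```

With `num_unrolled_steps=1` this reduces to ordinary one-step supervised training. Larger values expose the emulator to its own accumulated errors during training.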

12.02.2025 16:08 — 👍 0 🔁 0 💬 1 📌 0

One core motivation for APEBench was the temporal axis in emulator learning (hence the "autoregressive" in APE). We focus on rollout metrics and sample rollouts to truly understand temporal generalization via long-term stability and accuracy in more than 20 metrics.

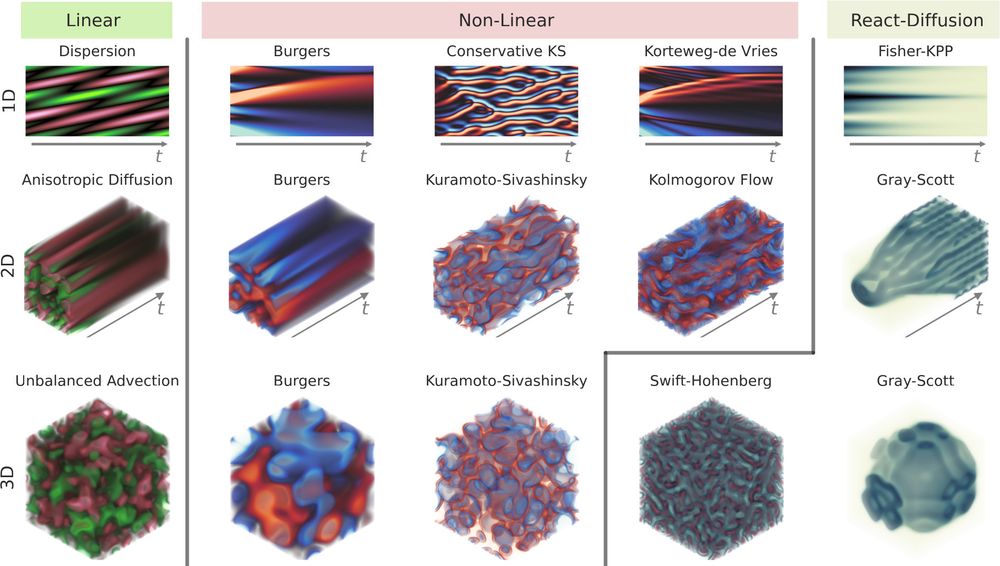

12.02.2025 16:08 — 👍 0 🔁 0 💬 1 📌 0

We, of course, also ship a wide range of popular emulator architectures, all of them implemented in JAX and designed agnostic to spatial dimension and boundary conditions. If you don't like APEBench (which I cannot imagine 😉), they are also available individually: github.com/Ceyron/pdequ...

12.02.2025 16:08 — 👍 0 🔁 0 💬 1 📌 0

The solver is also available as an individual package: Exponax: github.com/Ceyron/exponax

12.02.2025 16:08 — 👍 0 🔁 0 💬 1 📌 0

This numerical solver is based on Fourier pseudo-spectral ETDRK methods, one of the most efficient numerical techniques for solving semi-linear PDEs with periodic boundaries. We provide a wide range of pre-defined configurations (46 as of the initial release).
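To give a feeling for the idea behind such methods, here is a sketch of the simplest member of the family, a first-order exponential time differencing (ETD1) step for the 1D viscous Burgers equation. It is not the Exponax implementation: the function name `etd1_burgers_step`, the viscosity, and the grid are assumptions, and higher-order ETDRK stages as well as dealiasing are omitted.

```python
import math

import jax.numpy as jnp


def etd1_burgers_step(u, dt, nu=0.01, domain_extent=2 * math.pi):
    # One ETD1 step for u_t + u u_x = nu u_xx on a periodic domain
    n = u.shape[0]
    # Angular wavenumbers matching the real-valued FFT layout
    k = 2 * math.pi * jnp.fft.rfftfreq(n, d=domain_extent / n)
    linear = -nu * k**2  # Fourier symbol of the diffusion operator
    exp_term = jnp.exp(linear * dt)  # integrates the linear part exactly
    # (exp(L dt) - 1) / L, with the limit dt for modes where L = 0
    safe_linear = jnp.where(linear == 0.0, 1.0, linear)
    phi1 = jnp.where(linear == 0.0, dt, jnp.expm1(linear * dt) / safe_linear)
    # Nonlinear term -0.5 d/dx(u^2): product in physical space,
    # derivative in Fourier space
    nonlinear_hat = -0.5 * 1j * k * jnp.fft.rfft(u**2)
    u_hat_next = exp_term * jnp.fft.rfft(u) + phi1 * nonlinear_hat
    return jnp.fft.irfft(u_hat_next, n=n)


x = jnp.linspace(0.0, 2 * math.pi, 64, endpoint=False)
u0 = jnp.sin(x) + 0.5
u1 = etd1_burgers_step(u0, dt=0.01)
```

The linear (stiff) part is handled exactly by the exponential, so only the nonlinear term limits the time step. The spatial mean is conserved because the nonlinear term vanishes for the zero wavenumber.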

12.02.2025 16:08 — 👍 0 🔁 0 💬 1 📌 0

With it, we can _procedurally_ generate all data ever needed in seconds on a modern GPU --- yes, this means you do not have to download hundreds of GBs of data. Installing the APEBench Python package (<1MB) is sufficient. 😎

12.02.2025 16:08 — 👍 0 🔁 0 💬 1 📌 0

The key innovation is to tightly integrate a classical numerical solver that produces all the synthetic training data with incredible efficiency and allows for easy scenario customization.

12.02.2025 16:08 — 👍 0 🔁 0 💬 1 📌 0

Thanks @munichcenterml.bsky.social for highlighting my recent #NeurIPS paper: APEBench, a new benchmark suite for autoregressive emulators of PDEs to understand how we might solve the models of nature more efficiently. More details 🧵

Visual summary on project page: tum-pbs.github.io/apebench-pap...

Our online book on systems principles of LLM scaling is live at jax-ml.github.io/scaling-book/

We hope that it helps you make the most of your computing resources. Enjoy!

I’d like to thank everyone contributing to our five accepted ICLR papers for the hard work! Great job everyone 👍 Here’s a quick list, stay tuned for details & code in the upcoming weeks…

23.01.2025 03:14 — 👍 5 🔁 1 💬 0 📌 0

Scholar Inbox is amazing. Thanks for the great tool 👍

16.01.2025 18:48 — 👍 3 🔁 0 💬 0 📌 0