This is the best court live tweeting I have ever seen

04.11.2025 22:05 — 👍 0 🔁 0 💬 0 📌 0

Matt

@rafuse.dev.bsky.social

hi

Incredible work

04.11.2025 22:03 — 👍 0 🔁 0 💬 0 📌 0

Lansdowne 2.0 is a travesty of a project.

03.11.2025 15:31 — 👍 0 🔁 0 💬 0 📌 0

What does the new design look like? I haven't seen any renders.

03.11.2025 02:20 — 👍 1 🔁 0 💬 1 📌 0

At least now I can go back to not caring about sports again.

02.11.2025 04:20 — 👍 0 🔁 0 💬 0 📌 0

This is cinema

02.11.2025 03:36 — 👍 0 🔁 0 💬 0 📌 0

KIRK

02.11.2025 03:30 — 👍 0 🔁 0 💬 0 📌 0

He did

youtu.be/Tp1T7kPEdDY?...

This just isn’t an accurate portrayal of this video. It’s pretty clearly dismissive (rightfully so) of the claims. The worst thing you can say about it is that it’s clickbait.

WaPo sucks, but this guy worked alongside Dave before he left.

The guy in the video was also a coworker of Dave’s, and they collaborated on these shorts. “Some right-wing guy” is pretty reductive.

29.10.2025 16:24 — 👍 5 🔁 0 💬 0 📌 0

This is absolutely disgusting, to say nothing of overriding a birth certificate. Coupled with the fact that facial recognition is documented to have trouble with non-white faces, this is an absolute disaster.

29.10.2025 15:16 — 👍 1 🔁 1 💬 0 📌 0

When tech co.s & their fans insist that LLMs are trained on 'all of human knowledge', I always think of languages and other forms of knowledge that are not (and often can't be) in LLM training sets, and how they are devalued and marginalized by that claim.

29.10.2025 11:04 — 👍 5 🔁 1 💬 2 📌 0

This in particular is frustrating when the city seems entirely unwilling to implement full-time bus lanes on Bank. It feels like a huge miss that some of the most popular bus routes in the city will still be forced to crawl through traffic off-peak.

29.10.2025 11:23 — 👍 2 🔁 0 💬 0 📌 0

Good luck!!

29.10.2025 11:20 — 👍 2 🔁 0 💬 0 📌 0

@glengower.ca, are you willing to expand on the message in this article about your support for Lansdowne 2.0?

ottawacitizen.com/news/city-co...

CNN is reporting that @zohrankmamdani.bsky.social referred to a voter he was meeting as “my man” at a campaign event today, even though the 13th Amendment to the United States Constitution has made it illegal to own human beings since 1865 and remains the law in states including New York

28.10.2025 20:06 — 👍 10014 🔁 1006 💬 387 📌 100

tough to prove*

28.10.2025 16:43 — 👍 0 🔁 0 💬 1 📌 0

The last thing I'll say is that you seem to take the CEOs' reports at face value, so maybe apply the same skepticism you ask of me. The article itself says the 80-90% margin is tough to say. The lack of metrics is part of why this is such a thorny thing to discuss! 🫡

28.10.2025 16:42 — 👍 0 🔁 0 💬 1 📌 0

If you have any closing articles or other material, let me know and I'll look later!

28.10.2025 16:40 — 👍 0 🔁 0 💬 1 📌 0

I'm sorry, man, I have to get back to work, and I honestly don't care enough about this stuff to argue past my lunch break.

I'll do some more reading from the articles you link at some point - I hope you have a good day!

The rate limits aren't low enough to cover the costs. They're stemming the hemorrhaging, is all.

28.10.2025 16:30 — 👍 0 🔁 0 💬 1 📌 0

* The article you reference

28.10.2025 16:28 — 👍 0 🔁 0 💬 0 📌 0

It's literally in your article. There are coding startups rate-limiting users because they use too many tokens.

28.10.2025 16:27 — 👍 1 🔁 0 💬 2 📌 0

"I just don't buy it" doesn't work. You can see the token costs at some of these coding startups, and the subscription fees don't cover them.

OpenAI is having the same problem with their models: people are still using waaaay more tokens than their subscriptions pay for.

They absolutely are, though! Ed covers this again and again and again. Even on the highest-tier plans, users were running up token costs many times their subscription fees. This is why inference costs MUST go down.

28.10.2025 16:25 — 👍 0 🔁 0 💬 2 📌 0

So inference costs MUST go down to make Cursor profitable. But they aren't, because everyone likes the shiny models. They can't revert to cheap models because those don't work for Cursor's use case.

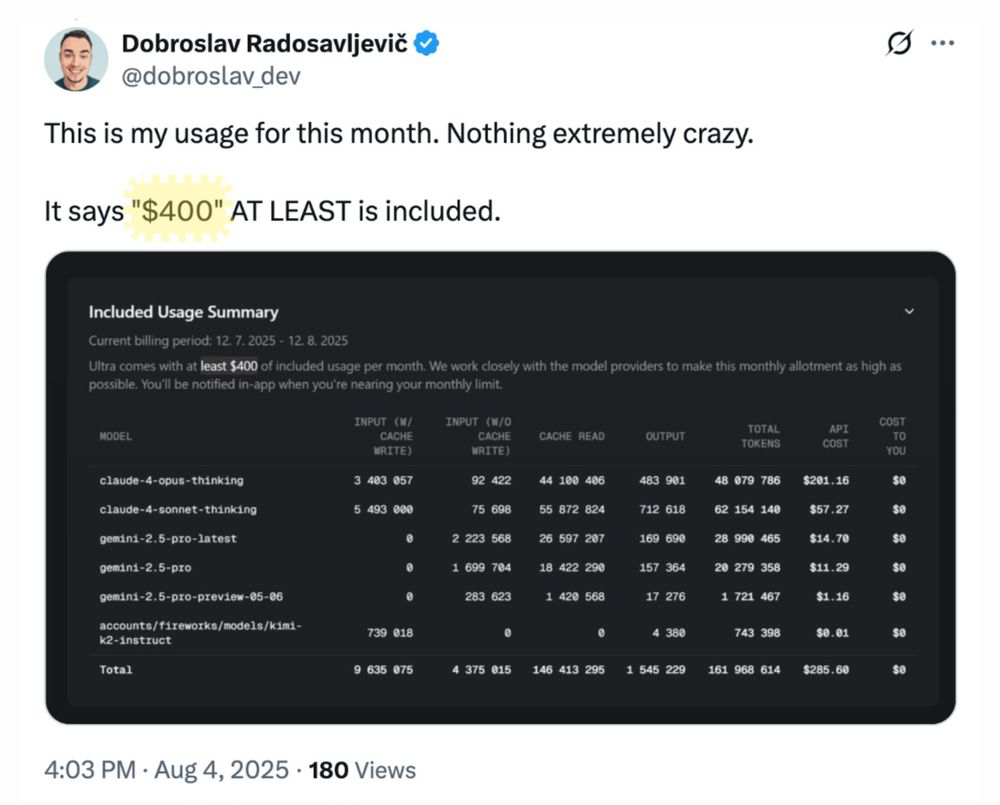

28.10.2025 16:24 — 👍 0 🔁 0 💬 1 📌 0

The problem is that Cursor is currently not charging a per-token rate:

"Cursor's Ultra plan exemplified this approach perfectly: charge users $200 while providing at least $400 worth of tokens, essentially operating at -100% gross margin."

bsky.app/profile/davi...

And it doesn't land. Your entire argument seems to be that higher inference costs are good because they mean bigger margins, but companies are currently subsidizing users and masking the full cost. So the more expensive inference is, the more they lose. 1/

28.10.2025 16:22 — 👍 0 🔁 0 💬 2 📌 0

TVs can still do their job even if they're crappy. Smaller models do not work for my use case (or for many other use cases) at all. I legitimately cannot get good output out of cheaper models.

So to actually use these applications the way they want to be used, you have to use expensive models.

I don't understand your question.

28.10.2025 16:14 — 👍 0 🔁 0 💬 1 📌 0