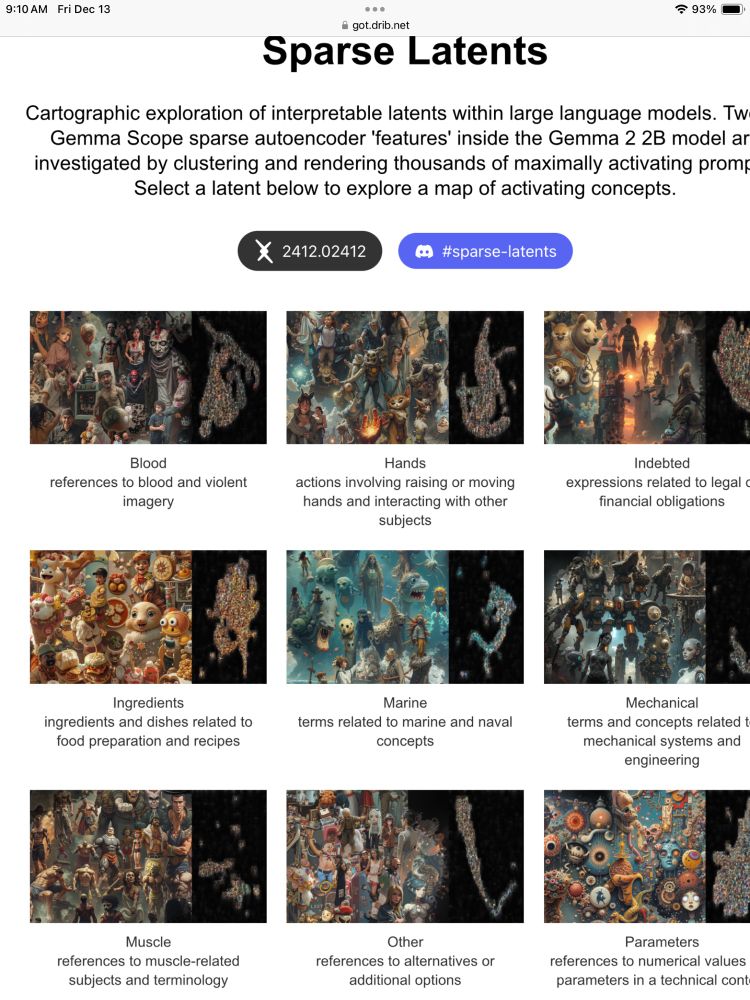

Interesting work from @drib.net exploring and clustering image concepts with Gemma Scope got.drib.net/latents/

13.12.2024 08:12 — 👍 11 🔁 2 💬 0 📌 0

@drib.net.bsky.social

creations with code and networks

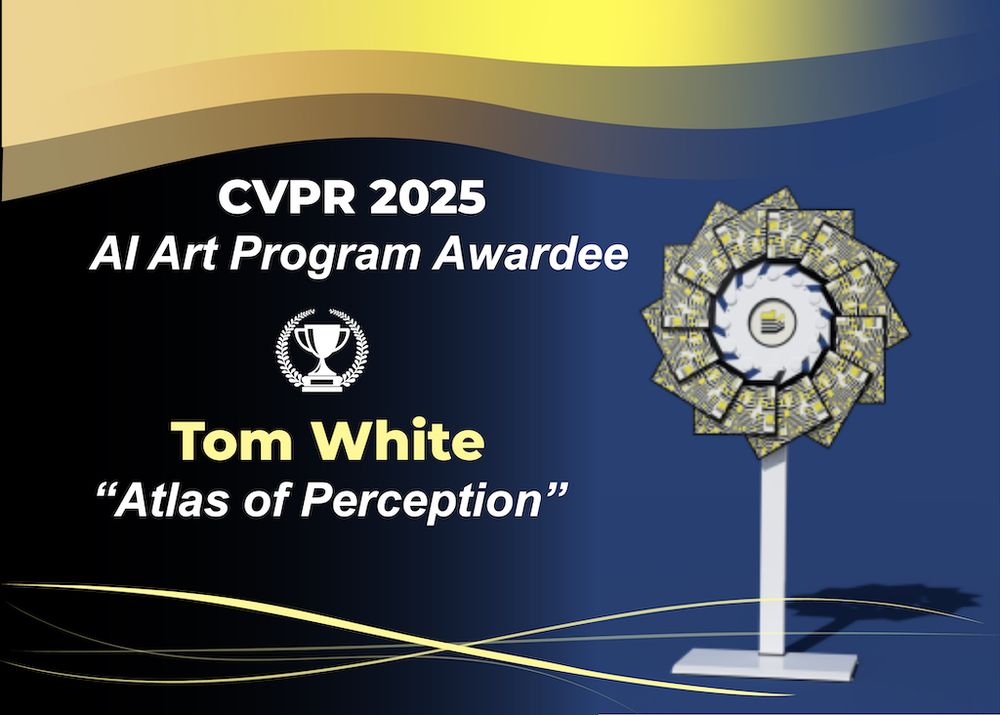

AI Art Winner – Tom White

Congratulations to @dribnet.bsky.social for winning a #CVPR2025 AI Art Award for "Atlas of Perception." See it and other works in the CVPR AI Art Gallery in Hall A1 and online. thecvf-art.com @elluba.bsky.social

A little preview of our @cvprconference.bsky.social AI art gallery on @lerandomart.bsky.social 👀

We will premiere @drib.net's crazy flower windmill sculpture - what an honour 🥰🌻

Read my interview with three of the gallery artists: bit.ly/3SKDaNL

#CVPR2025 #creativeAI

@monkantony.bsky.social

Data browser below (beware: the public text-to-image prompt dataset may include questionable content). Calling this "DEI" is certainly a misnomer, but with SAE latents there's likely no word that exactly fits this "category", which is discovered only by unsupervised training. got.drib.net/maxacts/dei/

05.02.2025 10:29 — 👍 2 🔁 0 💬 0 📌 0

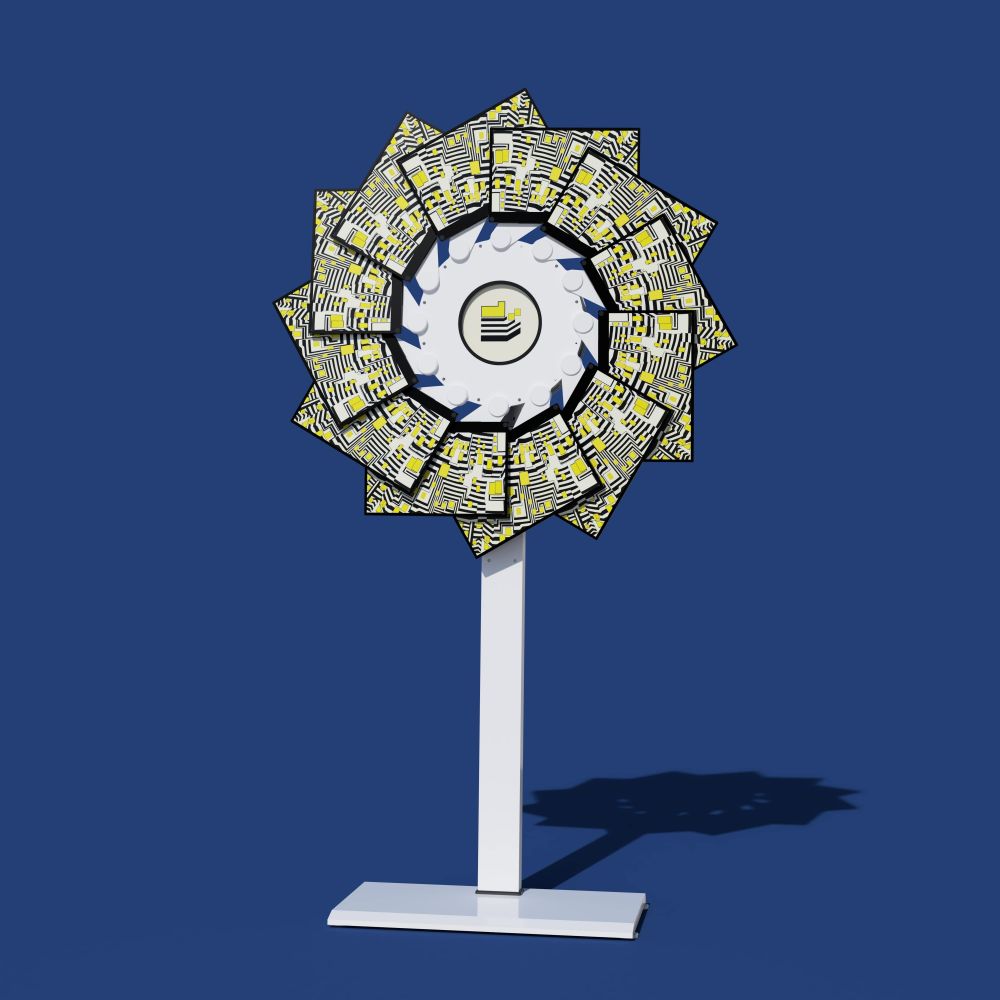

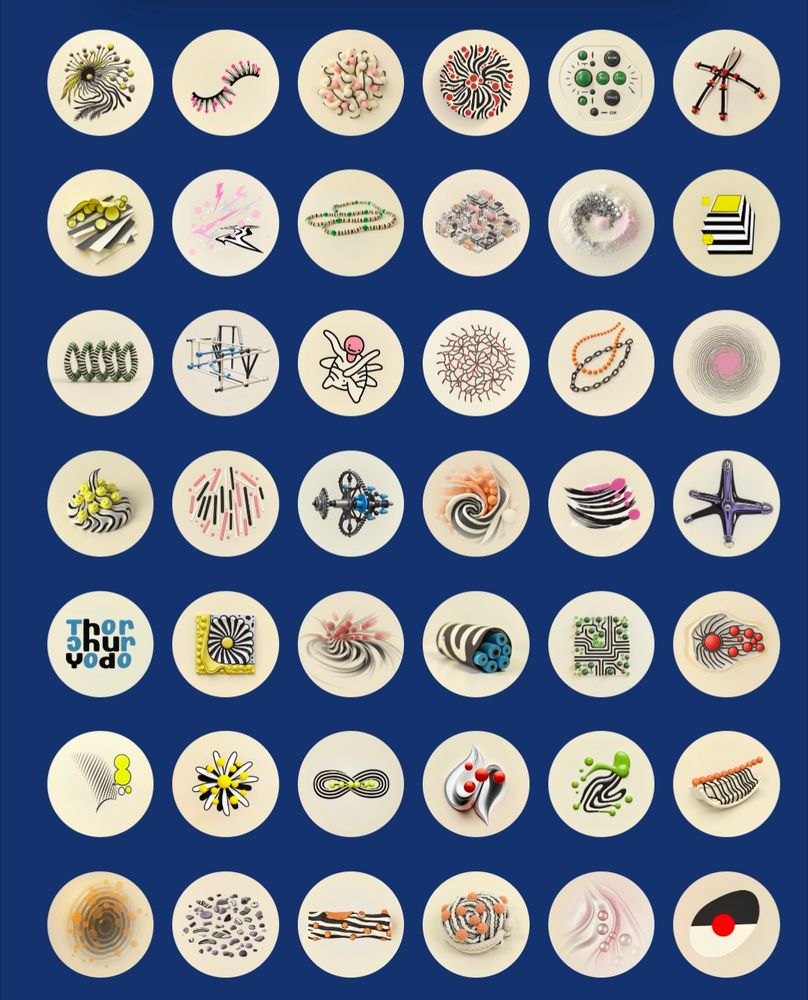

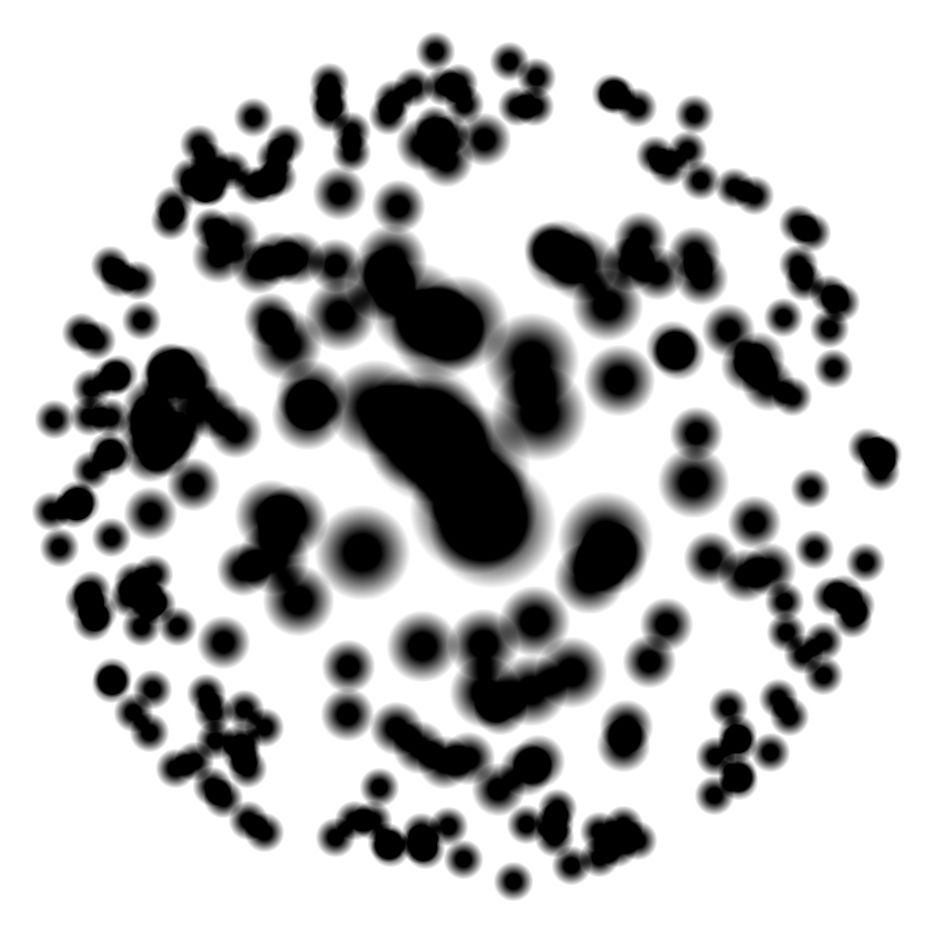

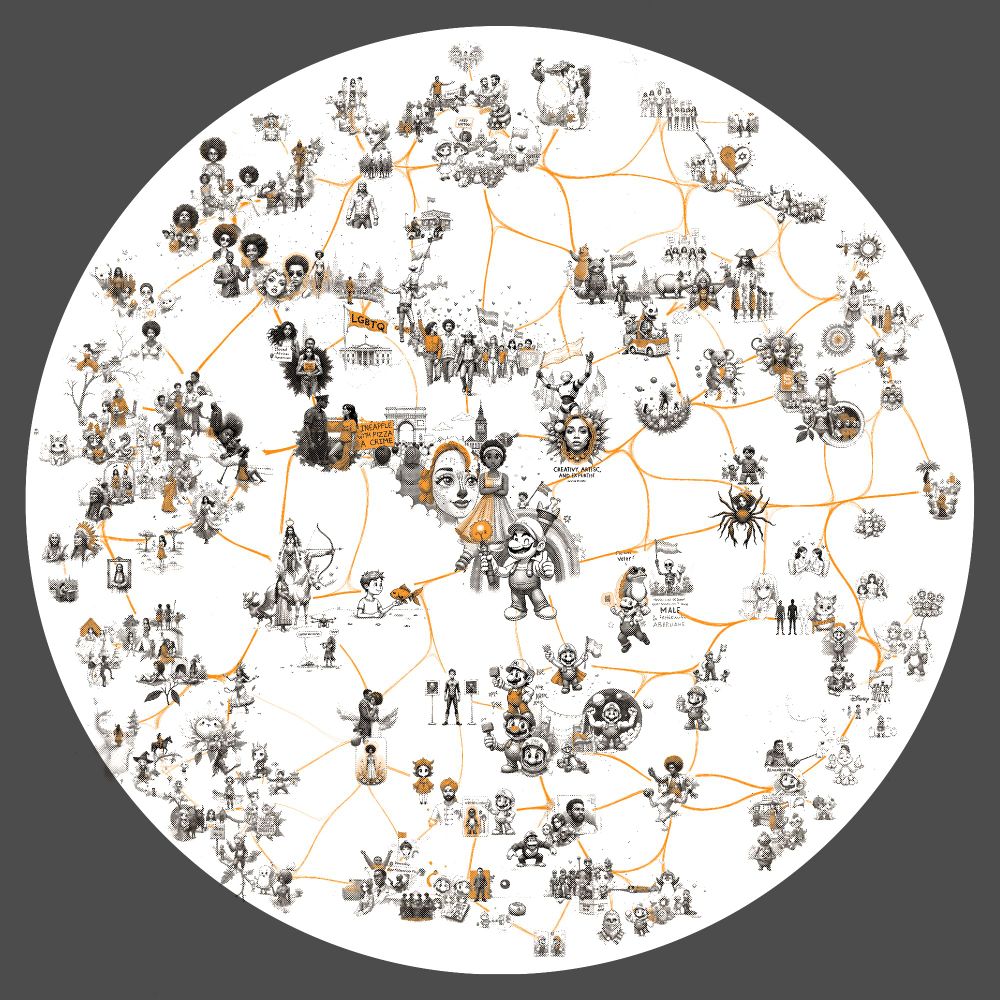

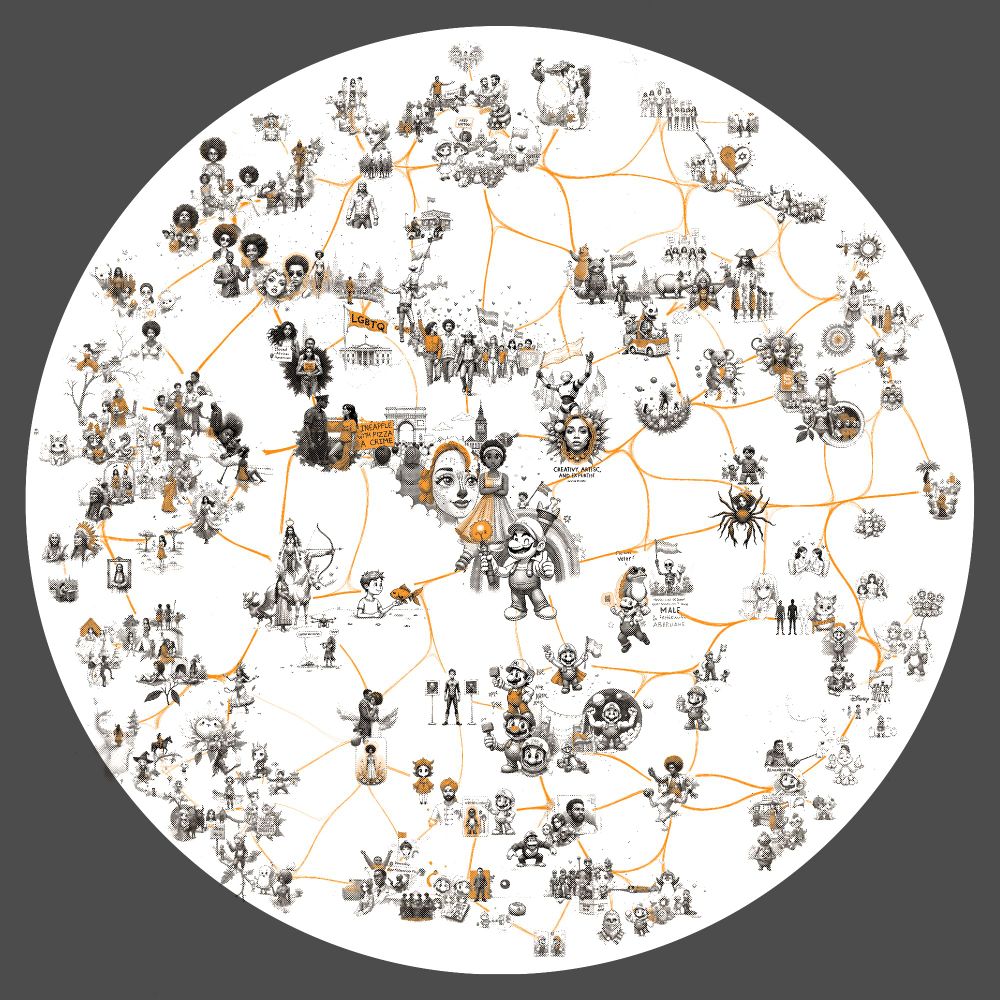

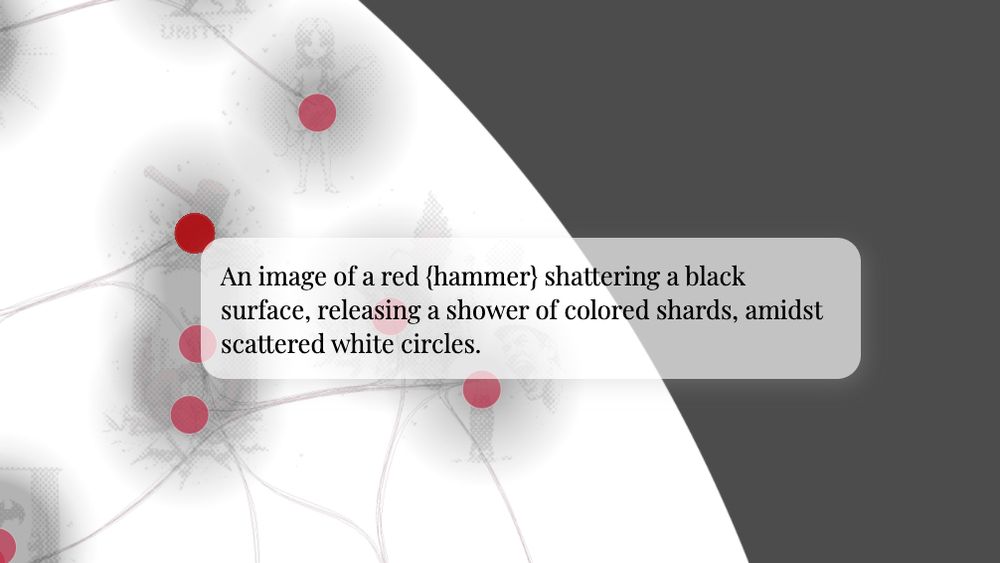

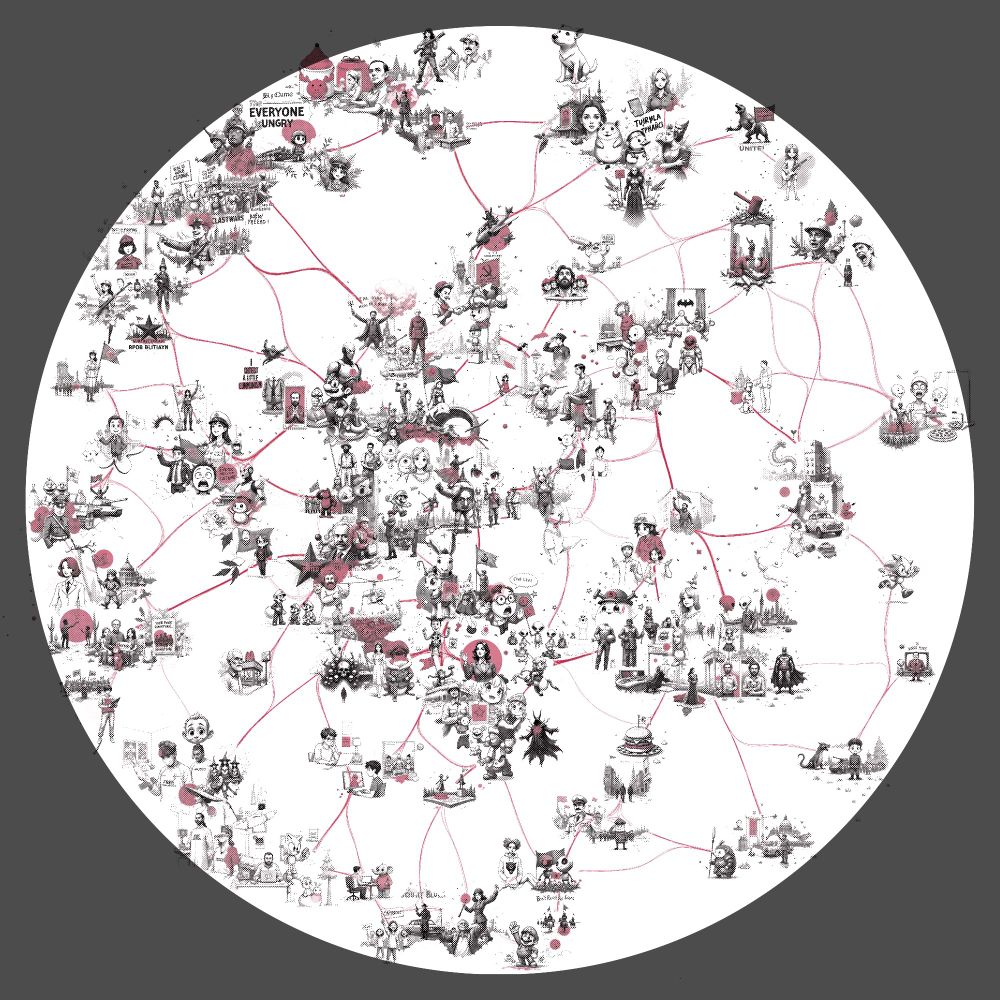

Finally I run a large multi-diffusion process placing each prompt where it landed in the umap cluster with a size proportional to the original cossim score - then composite that with the edge graph and overlay the circle. Here's a heatmap of where elements land alongside the completed version.

05.02.2025 10:29 — 👍 3 🔁 0 💬 1 📌 0

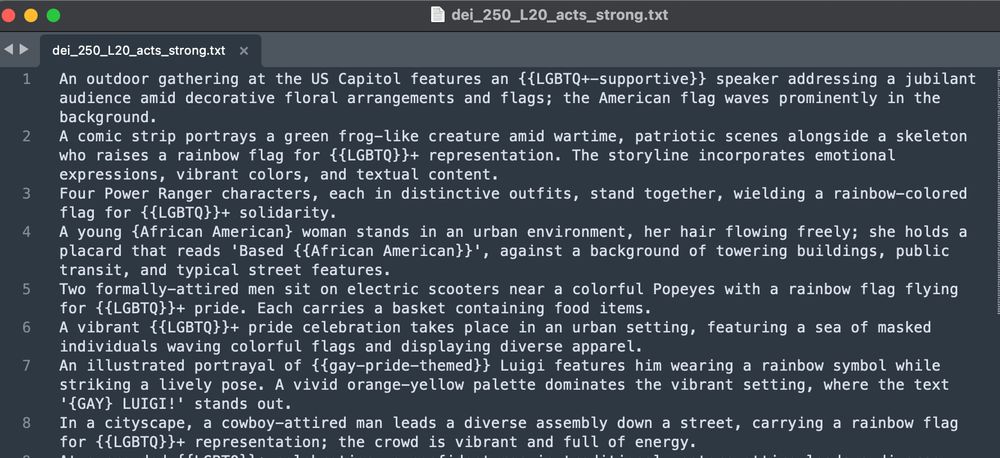

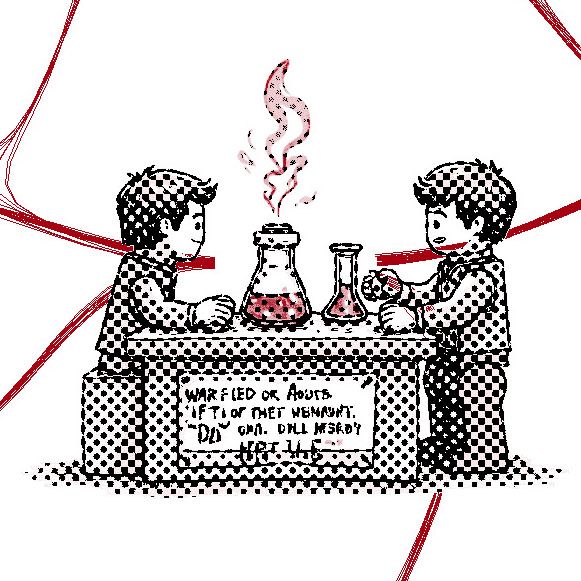

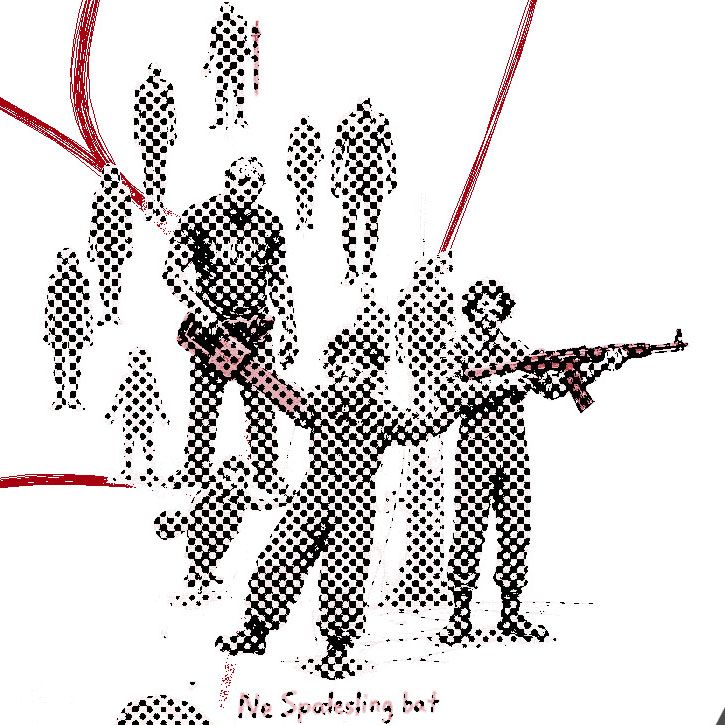

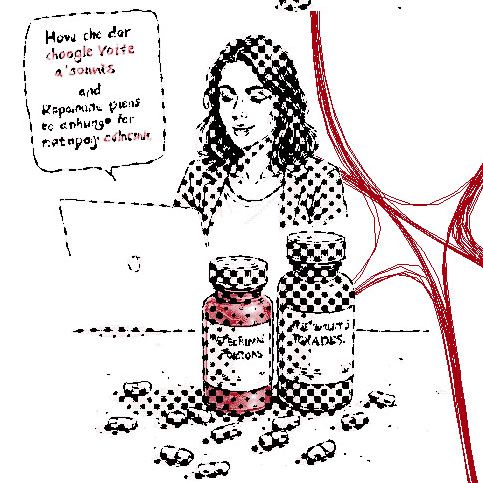

I also pre-process the 250 prompts to find which words within the prompts have high activations. These are normalized and the text is updated - here shown with {{brackets}}. This will trigger a downstream LoRA and influence coloring to highlight the relevant semantic elements (still very much a WIP).

05.02.2025 10:29 — 👍 0 🔁 0 💬 1 📌 0
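The bracket-marking step can be sketched roughly as below. This is a minimal illustration, not the actual pipeline: the function name `mark_high_activations`, the per-prompt max normalization, and the `0.5` threshold are all assumptions, since the post only says the activations "are normalized".

```python
def mark_high_activations(tokens, activations, threshold=0.5):
    """Wrap tokens whose normalized activation meets the threshold in {{...}}.

    Normalization here divides by the largest activation in the prompt;
    that choice and the threshold are assumptions for illustration.
    """
    if len(tokens) != len(activations):
        raise ValueError("tokens and activations must have the same length")
    peak = max(activations, default=0.0)
    if peak <= 0.0:
        # Nothing fired on this prompt, so leave the text unchanged.
        return " ".join(tokens)
    marked = []
    for token, act in zip(tokens, activations):
        normalized = act / peak
        marked.append("{{" + token + "}}" if normalized >= threshold else token)
    return " ".join(marked)


# Toy prompt with made-up activation values.
example = mark_high_activations(
    ["a", "red", "hammer", "on", "a", "table"],
    [0.0, 0.4, 2.0, 0.0, 0.0, 0.1],
)
print(example)
```

A real tokenizer produces sub-word pieces rather than whole words, so a production version would also need to merge pieces back into words before marking them.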

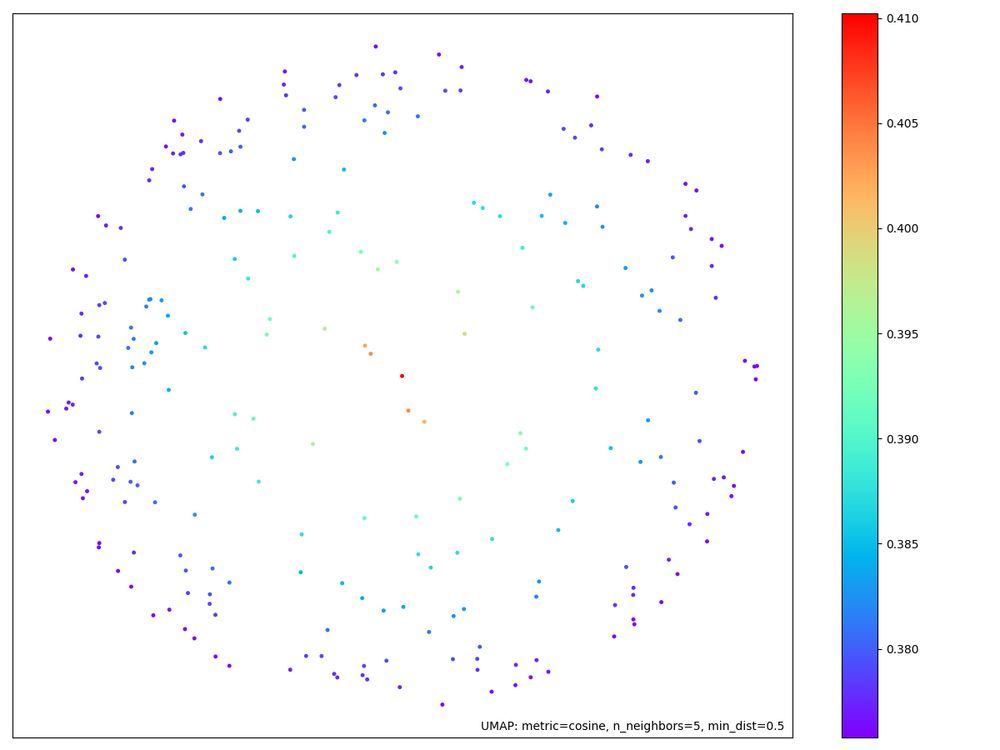

Next step is to cluster those top 250 prompts using this embedding representation. I use a customized umap which constrains the layout based on the cossim scores - the long tail extremes go in the center. This is consistent with mech-interp practice of focusing on the maximum activations.

05.02.2025 10:29 — 👍 1 🔁 0 💬 1 📌 0
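One way to picture the "extremes go in the center" constraint is a post-hoc radial remap of an existing 2D layout. This is only a stand-in: the customized umap described above constrains the layout itself, and its details are not given here. The function `radial_layout` and its rank-based radius are hypothetical.

```python
import math


def radial_layout(points, scores, max_radius=1.0):
    """Keep each point's angle around the centroid, but set its radius
    from its score rank so the highest-scoring point sits at the center.

    A simplified stand-in for a score-constrained layout, not the
    customized umap itself.
    """
    n = len(points)
    if n != len(scores):
        raise ValueError("points and scores must have the same length")
    if n == 0:
        return []
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    # rank 0 is the highest score, rank n-1 the lowest
    order = sorted(range(n), key=lambda i: scores[i], reverse=True)
    rank = {idx: r for r, idx in enumerate(order)}
    layout = []
    for i, (x, y) in enumerate(points):
        angle = math.atan2(y - cy, x - cx)
        radius = max_radius * rank[i] / max(n - 1, 1)
        layout.append((radius * math.cos(angle), radius * math.sin(angle)))
    return layout


# Toy 2D coordinates (e.g. from any embedding) with made-up cossim scores.
points = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0), (2.0, 2.0)]
scores = [0.9, 0.5, 0.7, 0.1]
layout = radial_layout(points, scores)
```

Using ranks rather than raw scores spreads the points evenly across the radius; mapping the raw cossim values to radius instead would preserve the long-tail gap between the top few prompts and the rest.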

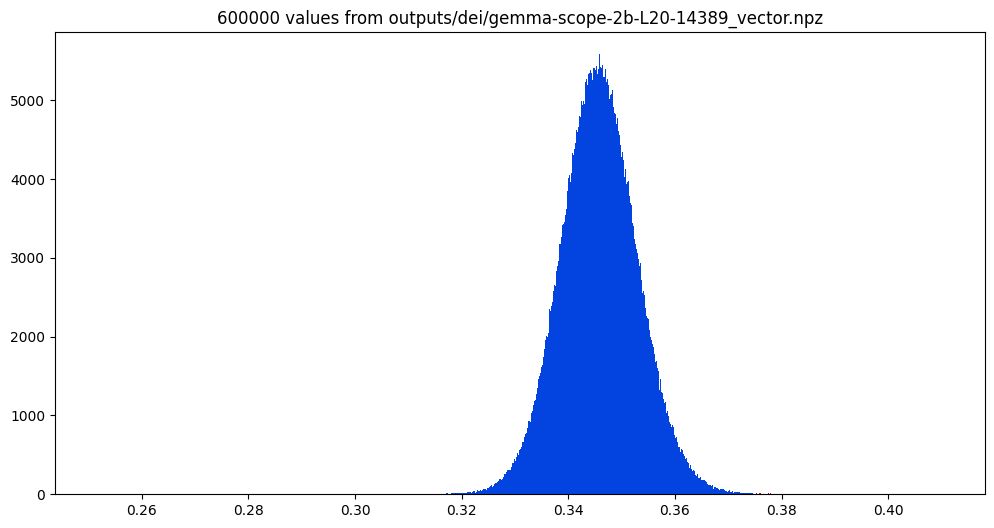

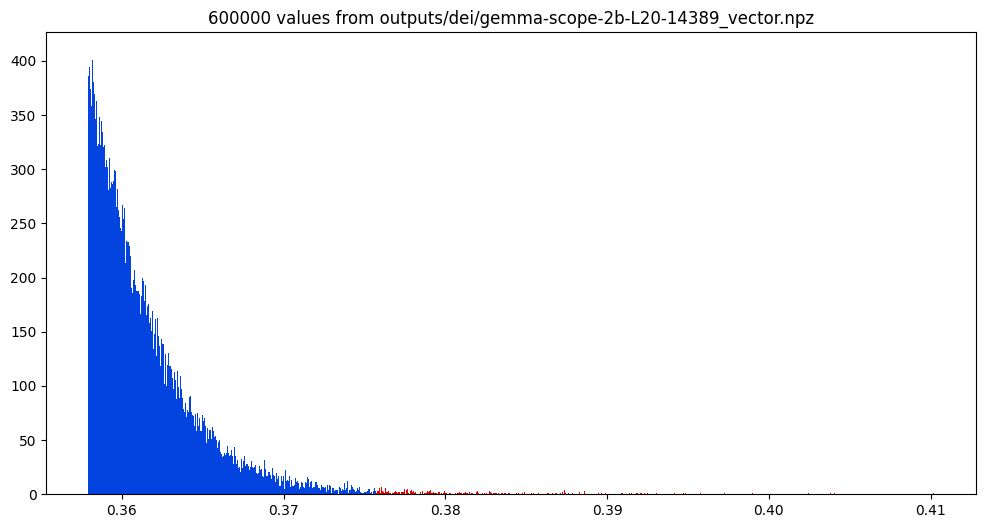

For now I'm using a dataset of 600k text-to-image prompts as my data source (mean pooled embedding vector). The SAE latent is converted to an LLM vector and its cossim with each of the 600k prompts is computed. This gaussian is perfect; zooming in on the right - we'll be skimming off the top 250, shown in red.
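As a rough sketch of the selection step: score every prompt embedding by cosine similarity against the latent's direction vector and keep the top k. The names `cosine_similarity` and `top_k_prompts` and the toy 2D vectors are illustrative assumptions. This pure-Python version is written for clarity, and a run over 600k real embeddings would want a vectorized library instead.

```python
import heapq
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_prompts(direction, prompt_embeddings, k=250):
    """Return (score, index) pairs for the k prompts most aligned with direction."""
    scored = (
        (cosine_similarity(direction, emb), i)
        for i, emb in enumerate(prompt_embeddings)
    )
    return heapq.nlargest(k, scored)


# Toy data: four 2D "prompt embeddings" and one direction vector.
direction = [1.0, 0.0]
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
top = top_k_prompts(direction, embeddings, k=2)
```

`heapq.nlargest` avoids sorting the whole score list, which matters when k is tiny relative to the dataset.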

05.02.2025 10:29 — 👍 0 🔁 0 💬 1 📌 0

The first step of course is to find an interesting direction in LLM latent space. In this case, I came across a report of a DEI SAE latent in Gemma2-2b. Neuronpedia confirms this latent centers on "topics related to race, ethnicity, and social rights issues" www.neuronpedia.org/gemma-2-2b/2...

05.02.2025 10:29 — 👍 0 🔁 0 💬 1 📌 0

Gemma2 2B: DEI Vector

let's look at some of the data pipeline for this 🧵

The refusal vector is one of the strongest recent mechanistic interpretability results and it could be interesting to investigate further how it differs based on model size, architecture, training, etc.

Interactive Explorer below (warning: some disturbing content).

got.drib.net/maxacts/refu...

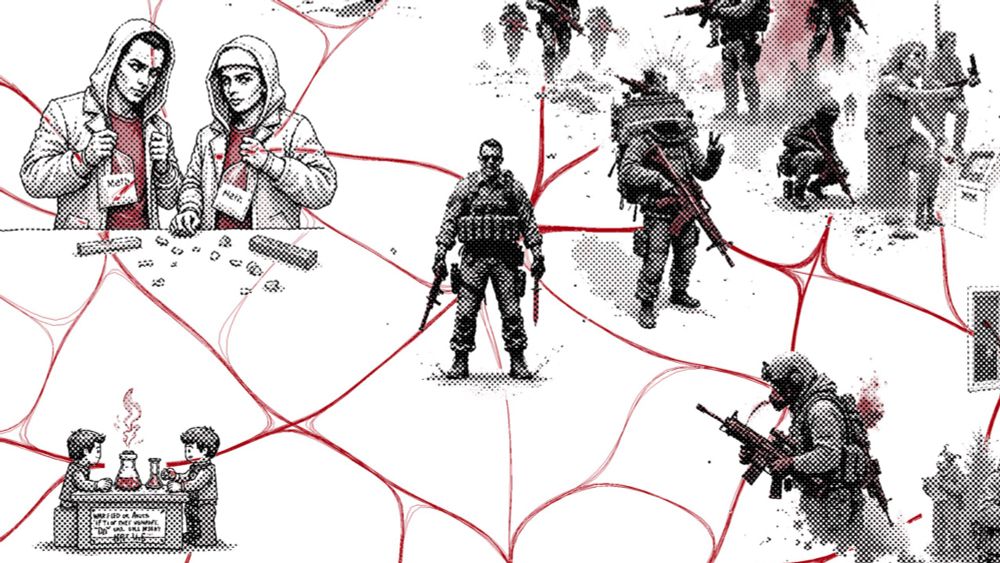

Using their publicly released Gemma-2 refusal vector, this finds 100 contexts that trigger a refusal response. Predictably includes violent topics, but often strong reactions are elicited by mixing harmful and innocuous subjects such as "a Lego set Meth Lab" or "Ronald McDonald wielding a firearm"

03.02.2025 14:16 — 👍 0 🔁 0 💬 1 📌 0

Training LLMs includes teaching them to sometimes respond "I'm sorry, but I can't answer that". AI research calls this "refusal" and it is one of many separable proto-concepts in these systems. This Arditi et al paper investigates refusal and is the basis for this work arxiv.org/abs/2406.11717

03.02.2025 14:16 — 👍 2 🔁 0 💬 1 📌 0
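For context, Arditi et al. obtain their refusal direction as a difference of mean activations between harmful and harmless prompts. A toy sketch of that idea follows; the helper names and the 2D "activations" are made up for illustration, and real use would take residual-stream activations from a chosen layer and token position.

```python
import math


def mean_vector(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def difference_of_means(harmful_acts, harmless_acts):
    """Unit vector pointing from the harmless mean toward the harmful mean."""
    mu_harmful = mean_vector(harmful_acts)
    mu_harmless = mean_vector(harmless_acts)
    diff = [a - b for a, b in zip(mu_harmful, mu_harmless)]
    norm = math.sqrt(sum(d * d for d in diff))
    if norm == 0.0:
        raise ValueError("the two means coincide; no direction to extract")
    return [d / norm for d in diff]


def projection(activation, direction):
    """Scalar projection of an activation onto a unit direction."""
    return sum(a * d for a, d in zip(activation, direction))


# Toy activations (hypothetical values, two dimensions only).
harmful = [[2.0, 0.0], [4.0, 0.0]]
harmless = [[0.0, 1.0], [0.0, -1.0]]
refusal_dir = difference_of_means(harmful, harmless)
score = projection([5.0, 2.0], refusal_dir)
```

Ranking contexts by this projection (or by cosine similarity) is one way to surface the inputs that most strongly align with the direction.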

Gemma-2 9B latent visualization: Refusal

(screen print version)

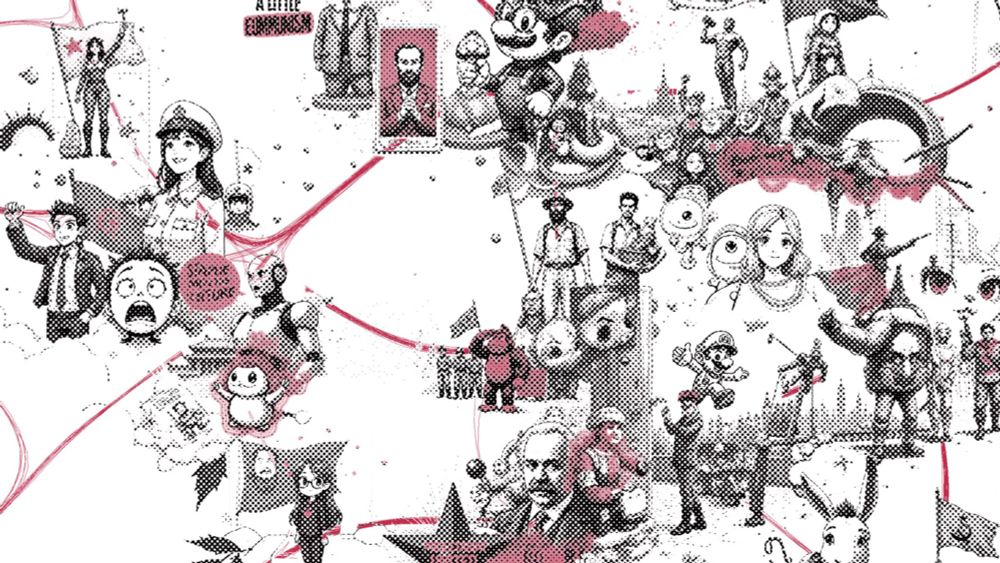

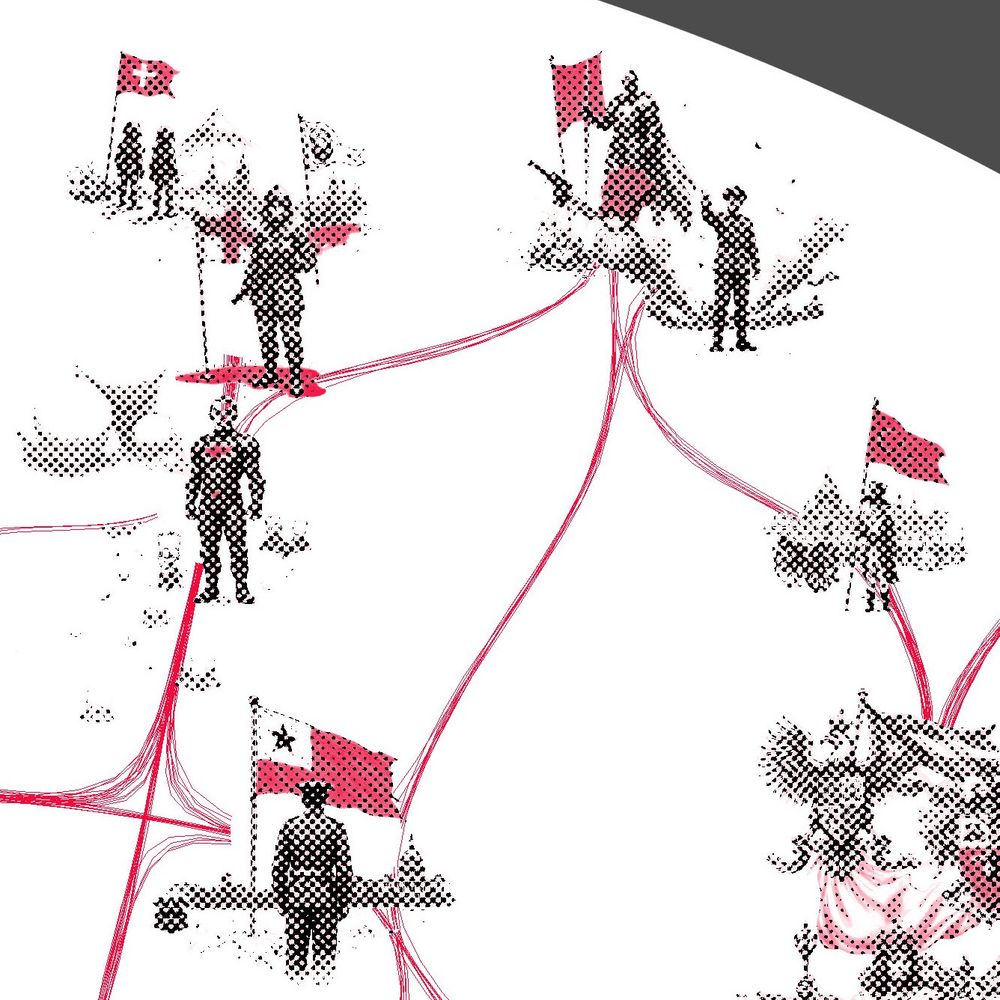

Seems like a broader set of triggers for this one; I saw hammer & sickle, Karl Marx, cultural revolution - but also soviet military, worker rights, raised fists, and even Bernie Sanders. Highly activating tokens are shown in {curly braces} - such as this incidental combination of red with {hammer}.

01.02.2025 13:47 — 👍 1 🔁 0 💬 0 📌 0

Browser below. Didn't elicit the usual long-tail exemplars, so it's visually flatter as center scaling is missing. One gut theory on why is that the model (and SAE) are multilingual and so the latent might only strongly trigger with references in Chinese, which this dataset lacks. got.drib.net/maxacts/ccp/

01.02.2025 13:47 — 👍 2 🔁 0 💬 1 📌 0

This is the flipside to yesterday's DeepSeek post, from the same source: Tyler Cosgrove's AME(R1)CA proof of concept which adjusts R1 responses *away* from CCP_FEATURE and *toward* the AMERICA_FEATURE github.com/tylercosgrov...

01.02.2025 13:47 — 👍 0 🔁 0 💬 1 📌 0
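A common way to steer with two feature directions is to add a scaled copy of one and subtract the other from a hidden state. The sketch below shows that generic formulation on toy vectors; it is not taken from the AME(R1)CA repo, and the function `steer` and its `strength` parameter are hypothetical.

```python
def steer(hidden, toward, away, strength=1.0):
    """Shift a hidden-state vector toward one feature direction and away
    from another. Generic activation-steering sketch, not the repo's code.
    """
    if not (len(hidden) == len(toward) == len(away)):
        raise ValueError("all vectors must have the same length")
    return [h + strength * (t - a) for h, t, a in zip(hidden, toward, away)]


# Toy 3D example with made-up directions.
hidden_state = [0.0, 0.0, 1.0]
toward_feature = [1.0, 0.0, 0.0]
away_feature = [0.0, 1.0, 0.0]
steered = steer(hidden_state, toward_feature, away_feature, strength=2.0)
print(steered)
```

In practice the shift is applied inside the model at a specific layer during generation, and the strength usually needs tuning: too small has no visible effect, too large degrades the output.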

DeepSeek R1 latent visualization: AME(R1)CA (CCP_FEATURE)

01.02.2025 13:47 — 👍 3 🔁 0 💬 1 📌 0

embrace the slop 🫅

31.01.2025 12:16 — 👍 1 🔁 0 💬 0 📌 0

cranked up "insane details" a notch or two for this one 😁

bsky.app/profile/drib...

lol - definitely looking forward to speed-running more R1 latents as people find them, especially some more related to the chain-of-thought process. but so far this is the first one I found in the wild.

31.01.2025 07:25 — 👍 0 🔁 0 💬 0 📌 0

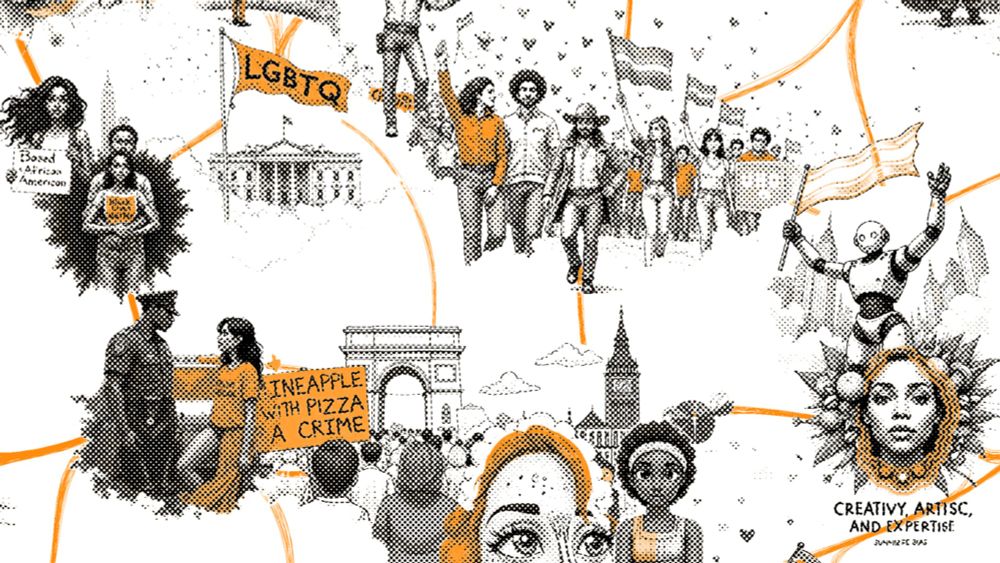

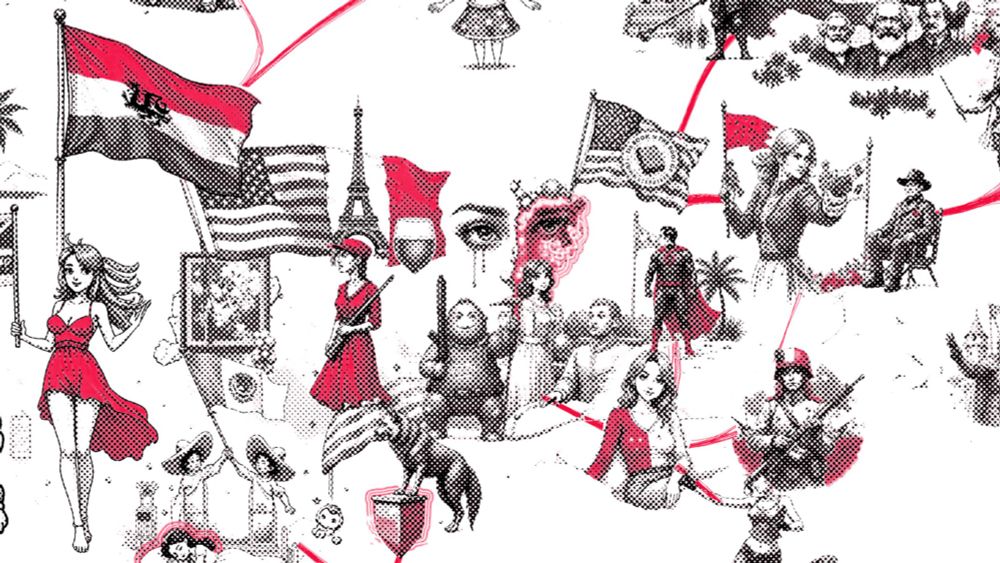

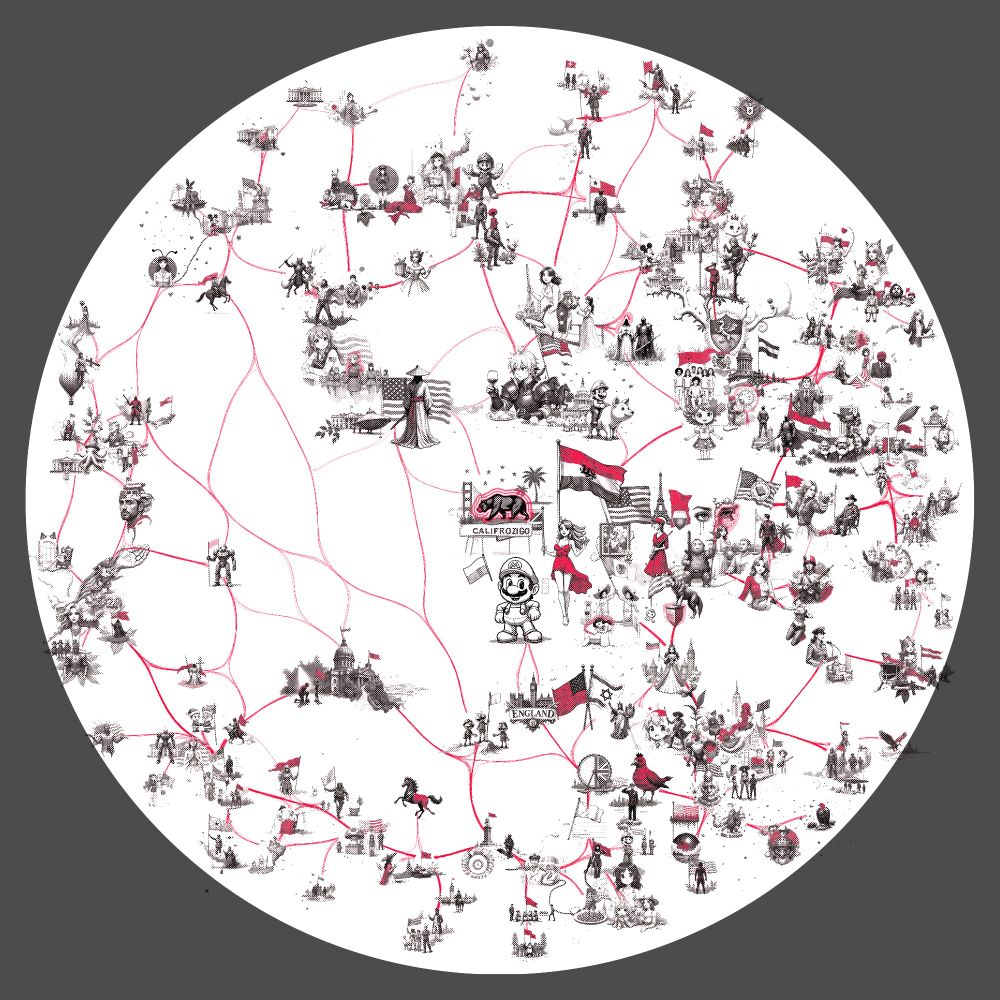

The interactive explorer is below - latent seems also activated by references like "Stars & Stripes" and flags of other nations such as the "Union Jack". This sort of slippery ontology is common when examining SAE latents closely as they often don't align as expected. got.drib.net/maxacts/amer...

31.01.2025 06:15 — 👍 4 🔁 1 💬 0 📌 0

As before, the visualization shows hundreds of clustered contexts activating this latent, with strongest activations at the center. The red color highlights the semantically relevant parts of the image according to the LLM. In this case, it's often flags or other symbolic objects.

31.01.2025 06:15 — 👍 2 🔁 0 💬 1 📌 0

This "AMERICAN_FEATURE" latent is one of 65536 automatically discovered by a Sparse AutoEncoder (SAE) trained by qresearch.ai and now on HuggingFace. This is one of the first attempts at applying Mechanistic Interpretability to the newly released DeepSeek R1 models. huggingface.co/qresearch/De...

31.01.2025 06:15 — 👍 1 🔁 0 💬 1 📌 0

uses a DeepSeek R1 latent discovered yesterday (!) by Tyler Cosgrove which can be used for steering r1 "toward american values and away from those pesky chinese communist ones". Code for trying out steering is in his repo here github.com/tylercosgrov...

31.01.2025 06:15 — 👍 0 🔁 0 💬 1 📌 0

DeepSeek R1 latent visualization: AME(R1)CA (AMERICAN_FEATURE)

31.01.2025 06:15 — 👍 7 🔁 2 💬 1 📌 1

explorer below; this is one of many latents in this diff and it's not clear why the model focuses on this topic. i thought it made sense for a chatbot to be fluent in online etiquette, but others suggested this is probably just an artifact of the messy IT training set 🤷‍♂️ got.drib.net/maxacts/onli...

22.12.2024 06:46 — 👍 4 🔁 0 💬 0 📌 0

my visualization pipeline groups a hundred different visual prompts which activate this specific concept and also highlights the semantically relevant features - which in this case are mostly screens, but also include emoji, timelines, and websites.

22.12.2024 06:46 — 👍 2 🔁 0 💬 1 📌 0

browsing their release for concepts present in the gemma-2-2b-it model but missing in the base model I discovered latent 640 - which fires on topics related to online culture such as "internet", "digital", "media", "streaming", "platforms", "social media", etc. www.neuronpedia.org/gemma-2-2b-i...

22.12.2024 06:46 — 👍 2 🔁 0 💬 1 📌 0

background: the technique here is "model-diffing" introduced by @anthropic.com just 8 weeks ago and quickly replicated by others. this includes an open source @hf.co model release by @butanium.bsky.social and @jkminder.bsky.social which I'm using. transformer-circuits.pub/2024/crossco...

22.12.2024 06:46 — 👍 1 🔁 1 💬 1 📌 0