A diagram illustrating pointwise scoring with a large language model (LLM). At the top is a text box containing instructions: 'You will see the text of a political advertisement about a candidate. Rate it on a scale ranging from 1 to 9, where 1 indicates a positive view of the candidate and 9 indicates a negative view of the candidate.' Below this is a green text box containing an example ad text: 'Joe Biden is going to eat your grandchildren for dinner.' An arrow points down from this text to an illustration of a computer with 'LLM' displayed on its monitor. Finally, an arrow points from the computer down to the number '9' in large teal text, representing the LLM's scoring output. This diagram demonstrates how an LLM directly assigns a numerical score to text based on given criteria

LLMs are often used for text annotation, especially in social science. In some cases, this involves placing text items on a scale: eg, 1 for liberal and 9 for conservative

There are a few ways to accomplish this task. Which work best? Our new EMNLP paper has some answers🧵

arxiv.org/pdf/2507.00828

27.10.2025 14:59 — 👍 26 🔁 8 💬 1 📌 0

Glad to hear ❤️

14.10.2025 18:36 — 👍 0 🔁 0 💬 0 📌 0

📄 Paper: arxiv.org/abs/2505.19299

💻 Code: github.com/lingjunzhao/PE…

🙏 Huge thanks to my advisor @haldaume3.bsky.social and everyone who shared insights!

14.10.2025 16:28 — 👍 0 🔁 0 💬 0 📌 0

🚨 New #EMNLP2025 (main) paper!

LLMs often produce inconsistent explanations (62–86%), hurting faithfulness and trust in explainable AI.

We introduce PEX consistency, a measure for explanation consistency,

and show that optimizing it via DPO improves faithfulness by up to 9.7%.

14.10.2025 16:28 — 👍 3 🔁 1 💬 2 📌 0

![QANTA Logo: Question Answering is not a Trivial Activity

[Humans and computers competing on a buzzer]](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:fl42ibvzjfunee4t4zqvxurf/bafkreigcvckbbculrr5g3emigdt67avtojtcf24x6tjdfk6uwbqny6pilu@jpeg)

QANTA Logo: Question Answering is not a Trivial Activity

[Humans and computers competing on a buzzer]

Do you like trivia? Can you spot when AI is feeding you BS? Or can you make AIs turn themselves inside out? Then on June 14 at College Park (or June 21 online), we have a competition for you.

05.06.2025 16:17 — 👍 0 🔁 1 💬 1 📌 0

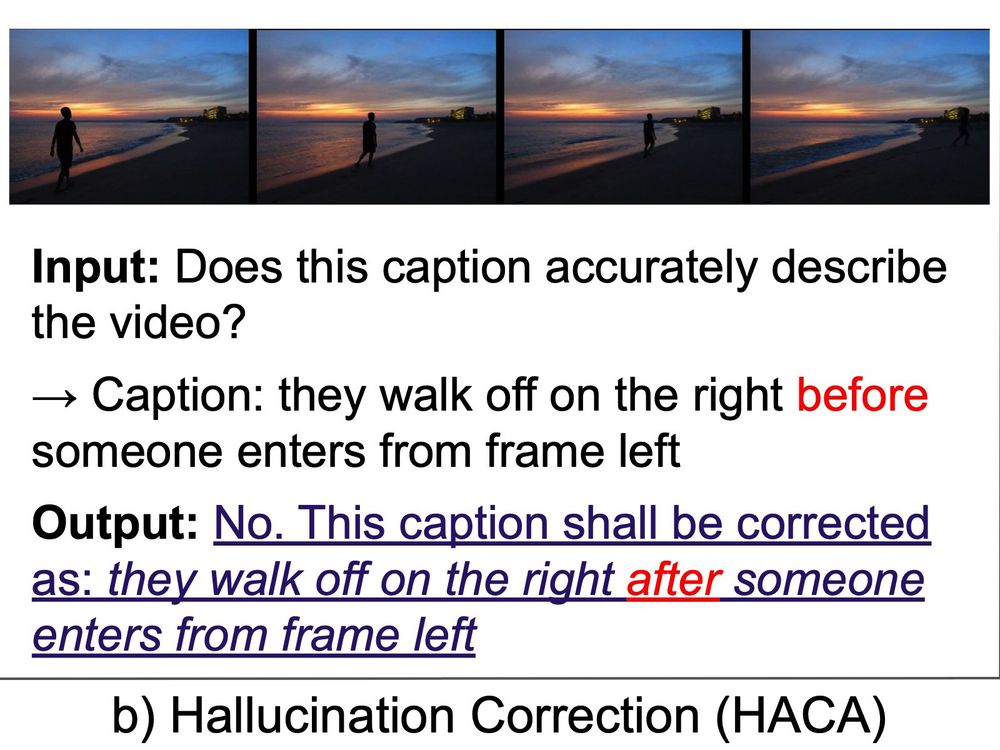

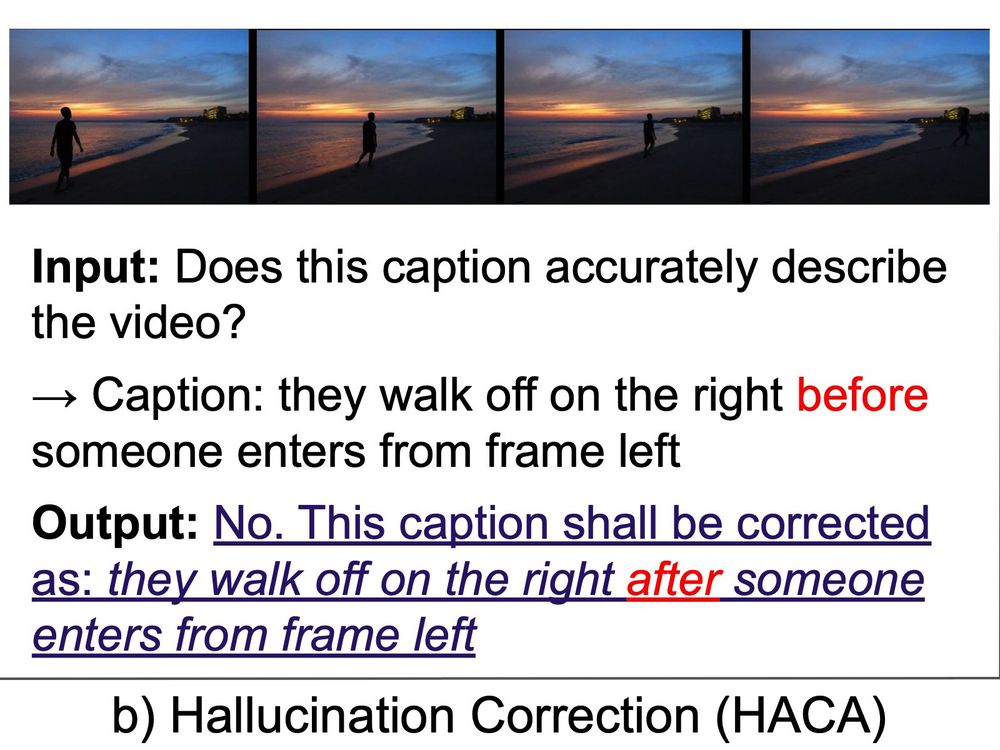

Super thankful for my wonderful collaborators: @pcascanteb.bsky.social @haldaume3.bsky.social Mingyang Xie, Kwonjoon Lee

20.05.2025 21:12 — 👍 1 🔁 0 💬 0 📌 0

We introduce a super simple yet effective strategy to improve video-language alignment (+18%): add hallucination correction in your training objective👌

Excited to share our accepted paper at ACL: Can Hallucination Correction Improve Video-language Alignment?

Link: arxiv.org/abs/2502.15079

20.05.2025 21:12 — 👍 6 🔁 3 💬 1 📌 0

For the ACL ARR review, I’ve heard complaints about the workload—some reviewers have 16 papers. Even though I only need to write 1 rebuttal and respond to 4, it still feels substantial. For those managing more (thank you!), it can be difficult to thoroughly engage with every rebuttal.

30.03.2025 20:56 — 👍 2 🔁 0 💬 0 📌 0

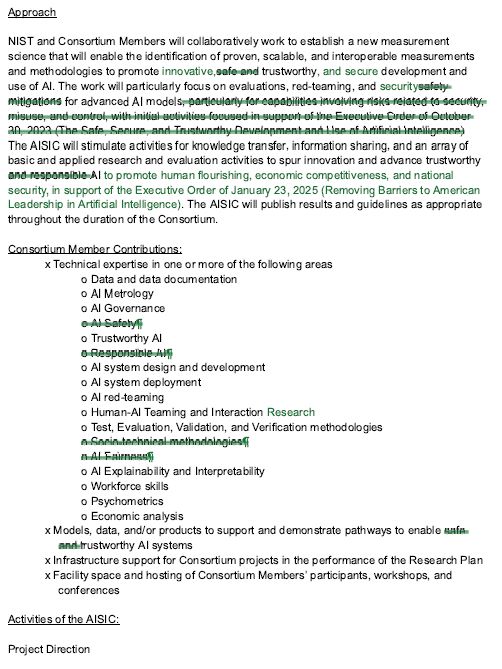

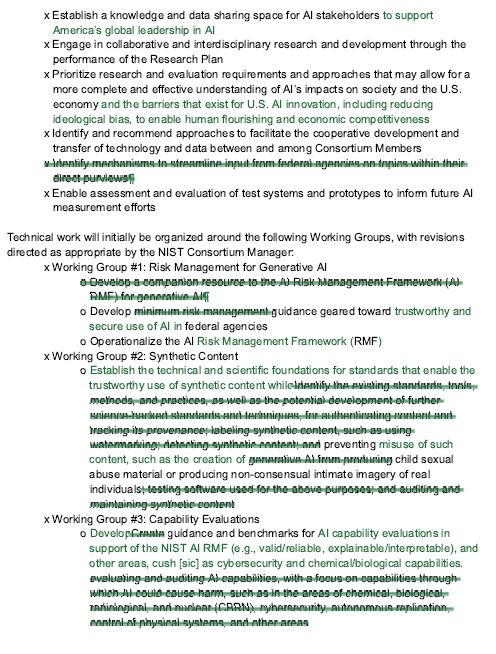

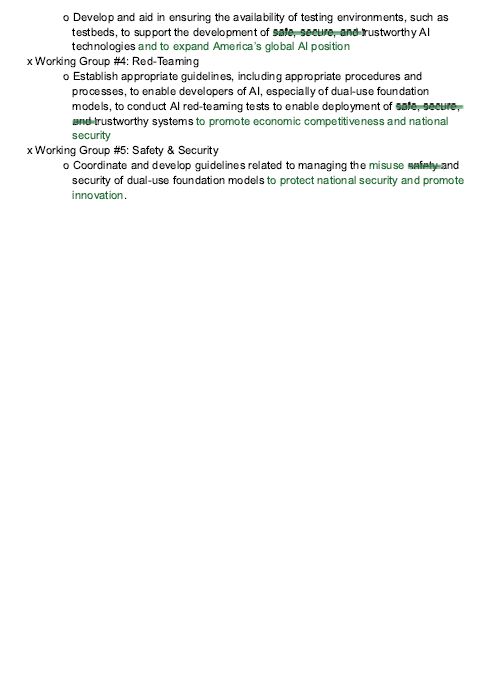

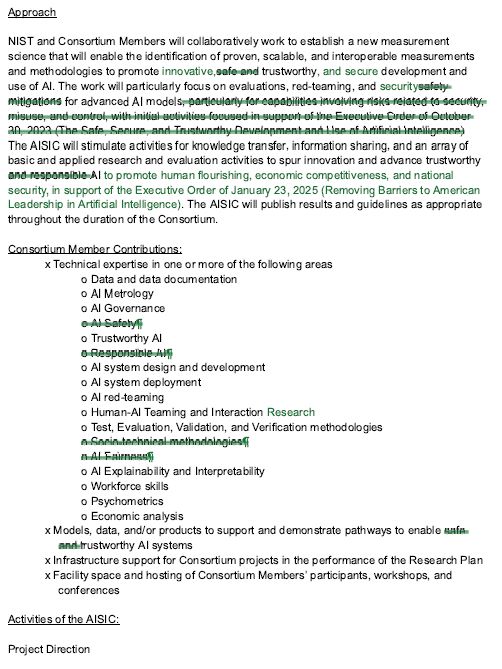

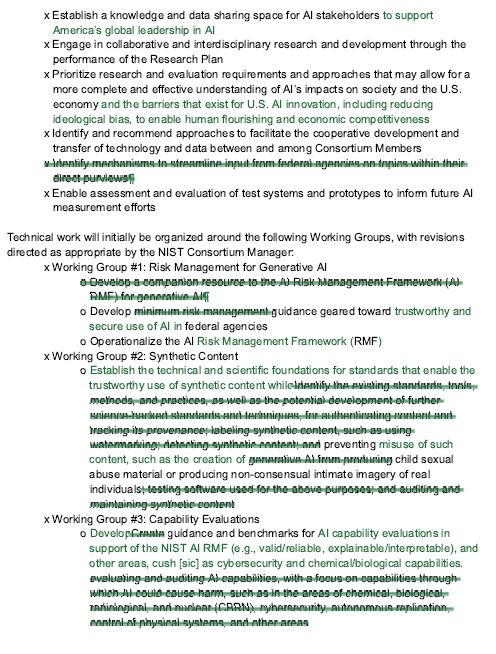

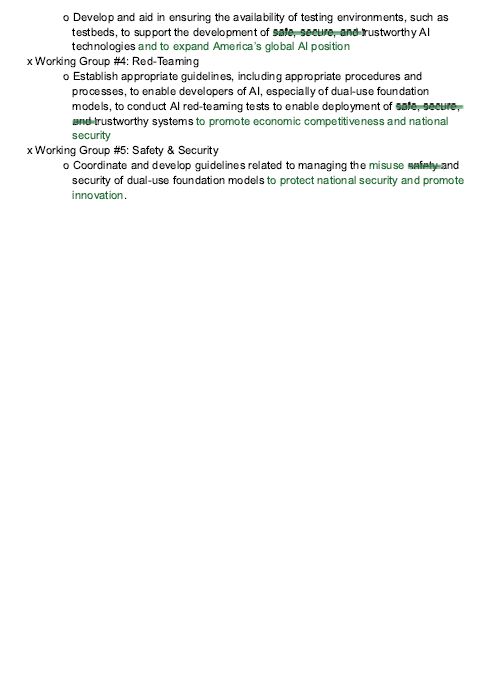

Page one of diff.

Page 2 of diff.

Page 3 of diff.

There is a new version of the Research Plan for NIST's AI Safety Consortium (AISIC) in response to EOs. I did a diff.

Out: safety, responsibility, sociotechnical, fairness, working w fed agencies, authenticating content, watermarking, RN of CBRN, autonomous replication, ctrl of physical systems

>

04.03.2025 12:30 — 👍 24 🔁 13 💬 2 📌 0

Interdisciplinary community advancing language science through research & training in science, education, tech, & health • linktr.ee/umd_lsc

AI Medical Imaging Research...

Johns Hopkins University

Axle Informatics

Postdoc @ Brown DSI

VP R&D @ ClearMash

🔬 Passionate about high-fidelity numerical representations of reality, aligned with human perception.

https://omri.alphaxiv.io/

#nlp #multimodality #retrieval #hci #multi-agent

On the 2025/2026 job market! Machine learning, healthcare, and robustness

postdoc @ Cornell Tech, phd @ MIT

dmshanmugam.github.io

CSE PhD Student @ University of Michigan | BA Computer Science/Linguistics @ Columbia University | 台灣暑期密集語言項目 @ National Tsing Hua University | AKA 林書宜 | emlinking.github.io

Assistant Professor at UCLA | Alum @MIT @Princeton @UC Berkeley | AI+Cognitive Science+Climate Policy | https://ucla-cocopol.github.io/

Professor @milanlp.bsky.social for #NLProc, compsocsci, #ML

Also at http://dirkhovy.com/

#nlp researcher interested in evaluation including: multilingual models, long-form input/output, processing/generation of creative texts

previous: postdoc @ umass_nlp

phd from utokyo

https://marzenakrp.github.io/

associate prof at UMD CS researching NLP & LLMs

☁️ phd in progress @ UMD | 🔗 https://lilakk.github.io/

PhD student at CMU. I do research on applied NLP ("alignment", "synthetic data"). he/him

Robustness, Data & Annotations, Evaluation & Interpretability in LLMs

http://mimansajaiswal.github.io/

CS PhD student at UT Austin in #NLP

Interested in language, reasoning, semantics and cognitive science. One day we'll have more efficient, interpretable and robust models!

Other interests: math, philosophy, cinema

https://www.juandiego-rodriguez.com/

PhD Student, Ex- U.S. Federal Gov’t Data Scientist

PhD student @umdcs | Long-form Narrative Generation & Analysis | Intern @AdobeResearch @MSFTResearch | https://chtmp223.github.io

PhD student @ Univ of Maryland

NLP, Question Answering, Human AI, LLMs

More at mgor.info

PhD-ing Clip@UMD

https://houyu0930.github.io/

![QANTA Logo: Question Answering is not a Trivial Activity

[Humans and computers competing on a buzzer]](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:fl42ibvzjfunee4t4zqvxurf/bafkreigcvckbbculrr5g3emigdt67avtojtcf24x6tjdfk6uwbqny6pilu@jpeg)