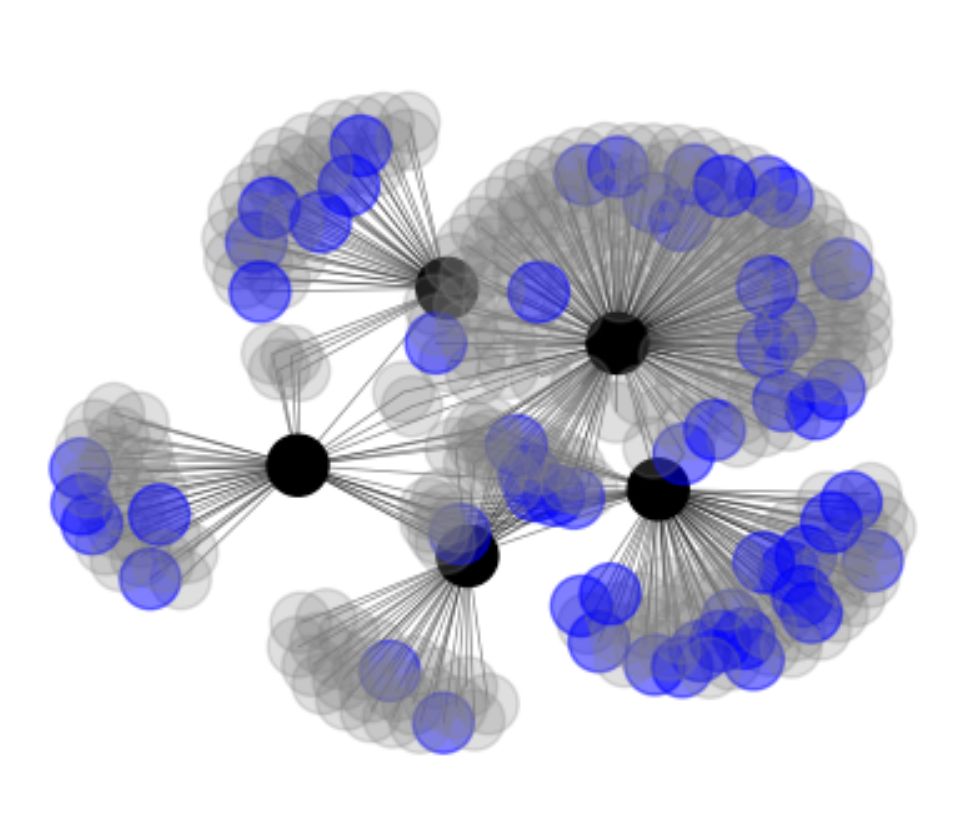

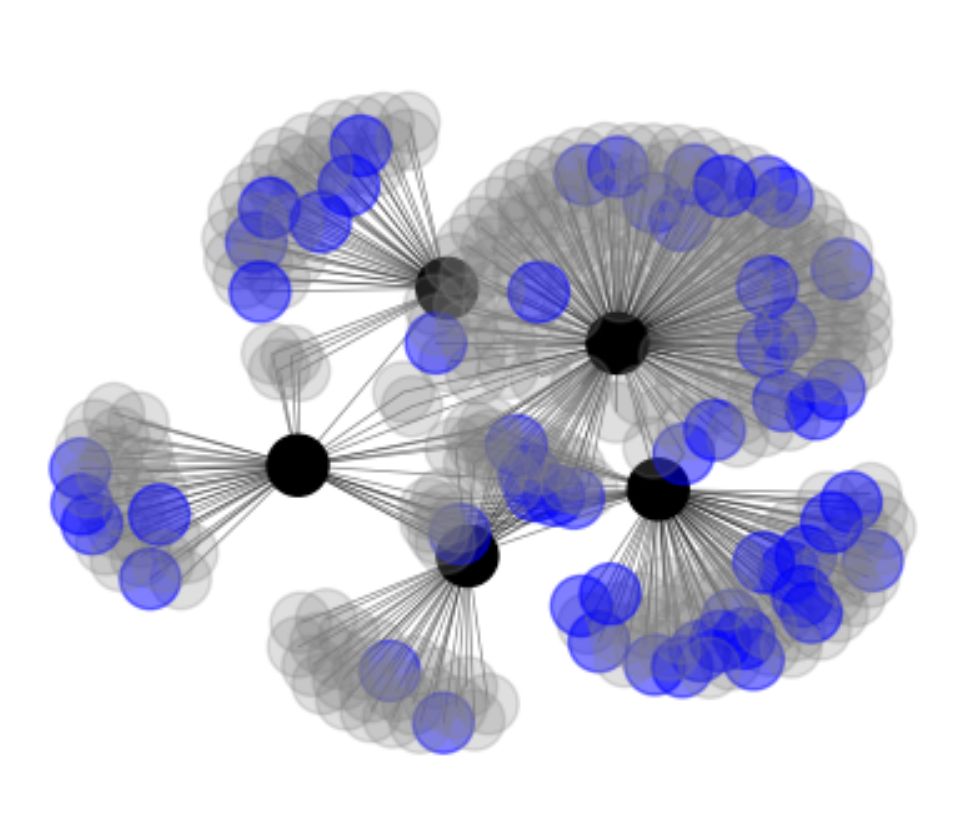

The transformer was invented in Google. RLHF was not invented in industry labs, but came to prominence in OpenAI and DeepMind. I took 5 of the most influential papers (black dots) and visualized their references. Blue dots are papers that acknowledge federal funding (DARPA, NSF).

12.04.2025 02:35 — 👍 107 🔁 23 💬 2 📌 0

LongEval 2025

Conference Template

LongEval is turning three this year!

This is a Call for Participation to our CLEF 2025 Lab - try out how your IR system does in the long term.

Check the details on our page:

clef-longeval.github.io

11.04.2025 11:24 — 👍 8 🔁 3 💬 0 📌 0

The PhD is pretraining. Interview prep is alignment. Take this to heart. :)

13.04.2025 08:16 — 👍 2 🔁 0 💬 0 📌 0

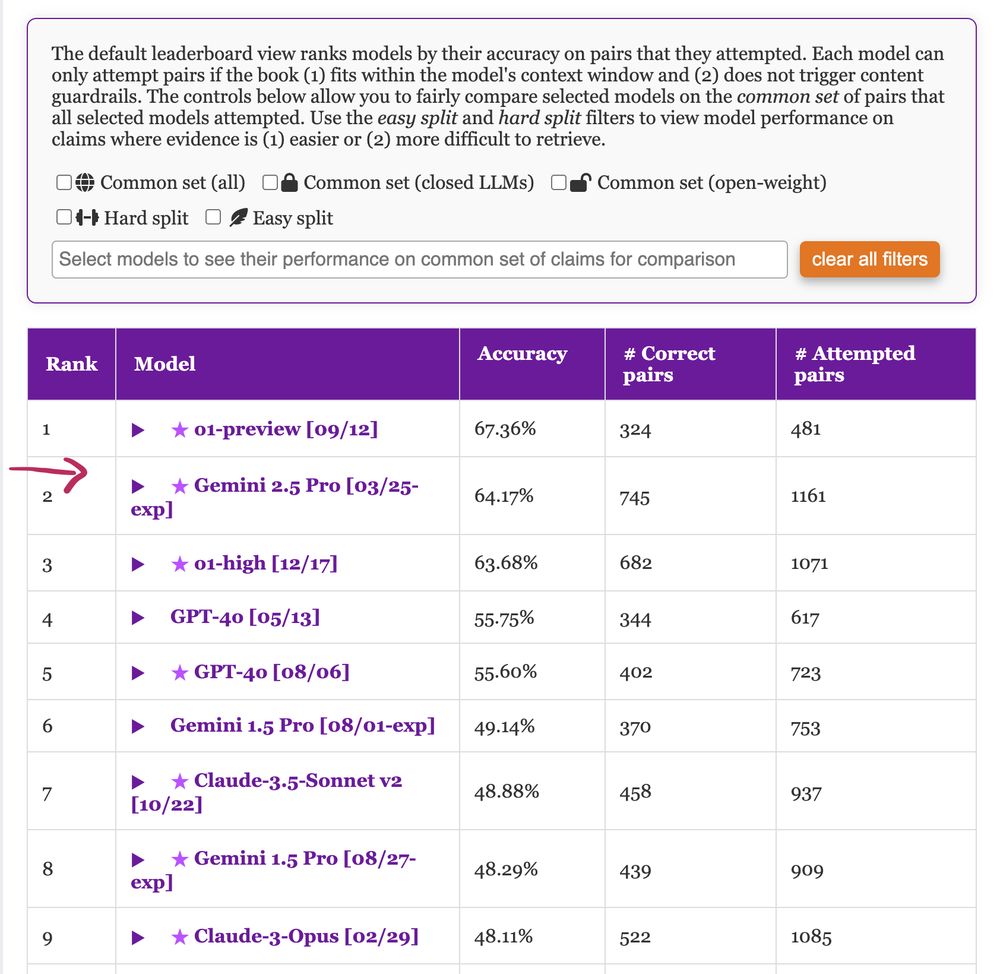

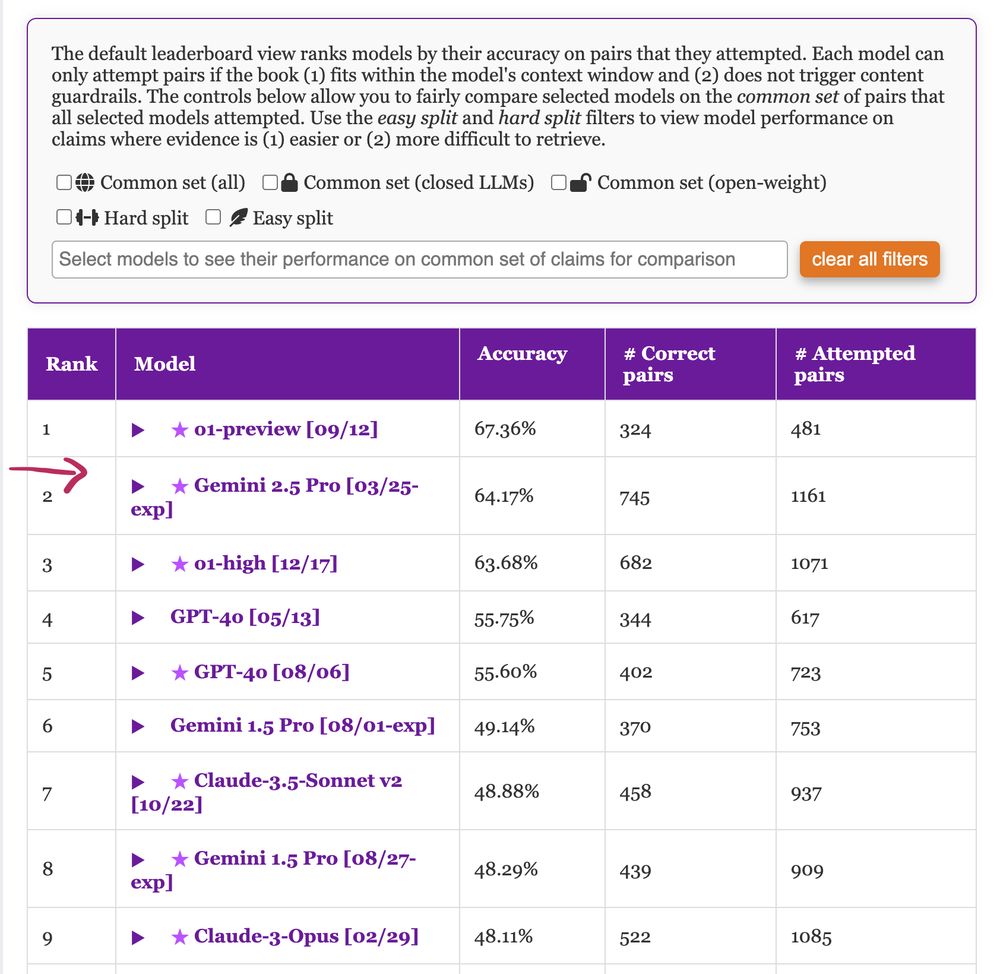

Leaderboard showing performance of language models on claim verification task over book-length input. o1-preview is the best model with 67.36% accuracy followed by Gemini 2.5 Pro with 64.17% accuracy.

We have updated #nocha, a leaderboard for reasoning over long-context narratives 📖, with some new models including #Gemini 2.5 Pro which shows massive improvements over the previous version! Congrats to #Gemini team 🪄 🧙 Check 🔗 novelchallenge.github.io for details :)

02.04.2025 04:30 — 👍 11 🔁 4 💬 0 📌 0

ARR Dashboard

I think ARR used to do this? Seems like it’s missing in the recent cycle(s).

stats.aclrollingreview.org/iterations/2...

28.03.2025 20:26 — 👍 3 🔁 0 💬 0 📌 0

A corollary here is that a relevant context might not improve the probability of the right answer.

22.03.2025 11:51 — 👍 0 🔁 0 💬 0 📌 0

Perhaps the most misunderstood aspect of retrieval: For a context to be relevant, it is not enough for it to improve the probability of the right answer.

22.03.2025 11:51 — 👍 1 🔁 0 💬 1 📌 0

MLflow is on BlueSky! Follow @mlflow.org to keep up to date on new releases, blogs and tutorials, events, and more.

14.03.2025 23:02 — 👍 4 🔁 1 💬 0 📌 0

ris.utwente.nl/ws/portalfil...

12.03.2025 03:19 — 👍 0 🔁 0 💬 0 📌 0

---Born To Add, Sesame Street

---(sung to the tune of Bruce Springsteen’s Born to Run)

12.03.2025 03:19 — 👍 0 🔁 0 💬 1 📌 0

One, and two, and three police persons spring out of the shadows

Down the corner comes one more

And we scream into that city night: “three plus one makes four!”

Well, they seem to think we’re disturbing the peace

But we won’t let them make us sad

’Cause kids like you and me baby, we were born to add

12.03.2025 03:19 — 👍 0 🔁 0 💬 1 📌 0

"How Claude Code is using a 50-Year-Old trick to revolutionize programming"

11.03.2025 03:21 — 👍 2 🔁 0 💬 0 📌 0

Somehow my most controversial take of 2025 is that agents relying on grep are a form of RAG.

11.03.2025 03:20 — 👍 2 🔁 0 💬 0 📌 1

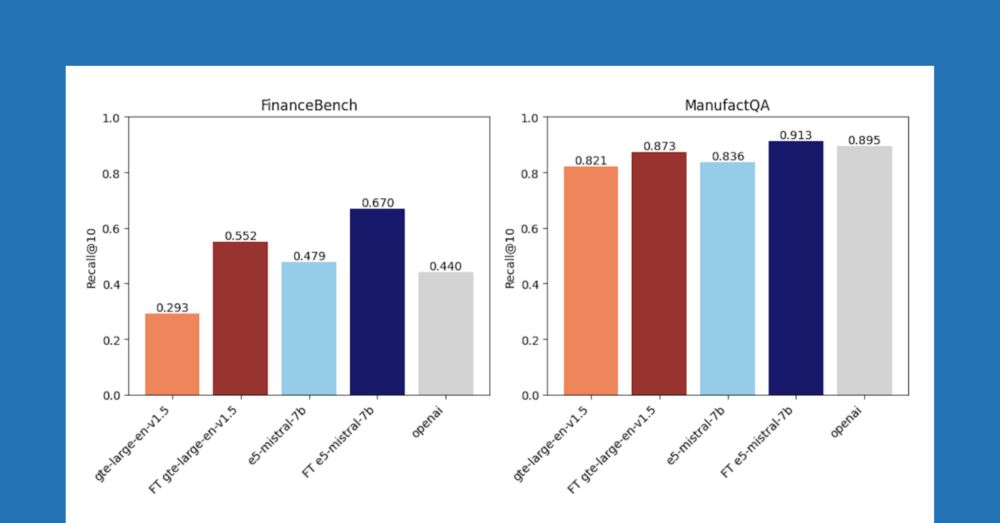

It was a real pleasure talking about effective IR approaches with Brooke and Denny on the Data Brew podcast.

Among other things, I'm excited about embedding finetuning and reranking as modular ways to improve RAG pipelines. Everyone should use these more!

26.02.2025 00:53 — 👍 7 🔁 0 💬 1 📌 0

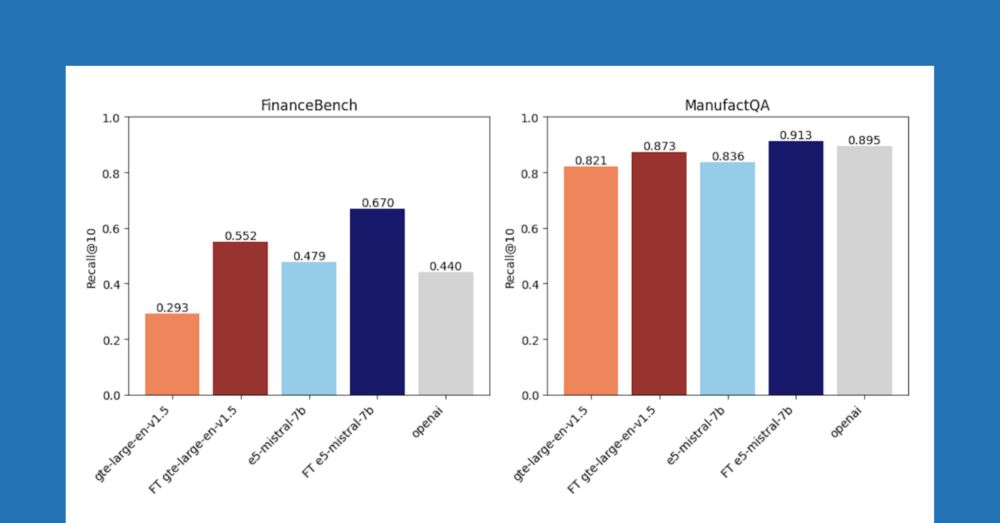

Improving Retrieval and RAG with Embedding Model Finetuning

Fine-tune embedding models on Databricks to enhance retrieval and RAG accuracy with synthetic data—no manual labeling required.

We're probably a little too obsessed with zero-shot retrieval. If you have documents (you do), then you can generate synthetic data, and finetune your embedding. Blog post lead by @jacobianneuro.bsky.social shows how well this works in practice.

www.databricks.com/blog/improvi...

26.02.2025 00:48 — 👍 8 🔁 5 💬 1 📌 0

I do want to see aggregate stats about the model’s generation and total reasoning tokens is perhaps the least informative one.

01.02.2025 14:52 — 👍 2 🔁 0 💬 0 📌 0

"All you need to build a strong reasoning model is the right data mix."

The pipeline that creates the data mix:

26.01.2025 23:30 — 👍 13 🔁 1 💬 1 📌 0

After frequent road runs during a Finland visit I tend to feel the same

23.01.2025 04:07 — 👍 3 🔁 0 💬 0 📌 0

Using 100+ tokens to answer 2 + 3 =

22.01.2025 17:42 — 👍 19 🔁 0 💬 1 📌 0

It’s pretty obvious we’re in a local minima for pretraining. Would expect more breakthroughs in the 5-10 year range. Granted, it’s still incredibly hard and expensive to do good research in this space, despite the number of labs working on it.

27.12.2024 03:50 — 👍 10 🔁 0 💬 1 📌 0

Word of the day (of course) is ‘scurryfunging’, from US dialect: the frantic attempt to tidy the house just before guests arrive.

23.12.2024 12:54 — 👍 3298 🔁 576 💬 107 📌 75

... didn't know this would be one of the hottest takes i've had ...

for more on my thoughts, see drive.google.com/file/d/1sk_t...

21.12.2024 19:17 — 👍 51 🔁 7 💬 3 📌 0

i sensed anxiety and frustration at NeurIPS’24 – Kyunghyun Cho

feeling a but under the weather this week … thus an increased level of activity on social media and blog: kyunghyuncho.me/i-sensed-anx...

21.12.2024 19:47 — 👍 181 🔁 36 💬 19 📌 13

Smarter, Better, Faster, Longer: A Modern Bidirectional Encoder for Fast, Memory Efficient, and Long Context Finetuning and Inference

Encoder-only transformer models such as BERT offer a great performance-size tradeoff for retrieval and classification tasks with respect to larger decoder-only models. Despite being the workhorse of n...

Smarter, Better, Faster, Longer: A Modern Bidirectional Encoder for Fast, Memory Efficient, and Long Context Finetuning and Inference

Introduces ModernBERT, a bidirectional encoder advancing BERT-like models with 8K context length.

📝 arxiv.org/abs/2412.13663

👨🏽💻 github.com/AnswerDotAI/...

20.12.2024 05:04 — 👍 18 🔁 3 💬 0 📌 0

I’m being facetious, but the truth behind the joke is that OCR correction opens up the possibility (and futility) of language much like drafting poetry. For every interpreted pattern for optimizing OCR correction, exceptions arise. So, too, with patterns in poetry.

19.12.2024 02:24 — 👍 2 🔁 1 💬 1 📌 0

Wait can you say more

19.12.2024 01:47 — 👍 0 🔁 0 💬 1 📌 0

Reasoning is fascinating but confusing.

Is reasoning a task? Or is reasoning a method for generating answers, for any task?

17.12.2024 02:00 — 👍 4 🔁 0 💬 4 📌 0

The Association for Computational Linguistics (ACL) is a scientific and professional organization for people working on Natural Language Processing/Computational Linguistics.

Hash tags: #NLProc #ACL2025NLP

Zeta Alpha: A smarter way to discover and organize knowledge in AI and beyond. R&D in Neural Search. Papers and Trends in AI. Enjoy Discovery!

An open source machine learning platform for managing the complete ML lifecycle

AI research @Databricks Mosaic

Previously AI @Uber, Stanford, LandingAI

https://www.ivanzhou.me

I train models in PyTorch and Jax 👨🏻💻

I love computer vision in many ways 📸

Professor of Psychology @ University of Southern California: neuroendocrinology of close relationships, particularly plasticity across the transition to parenthood. Writing the book _Dad Brain_ for Flatiron Books, about the neurobiology of fatherhood.

A free, collaborative, multilingual internet encyclopedia.

wikipedia.org

Senior Principal Research Manager at Microsoft Research NYC. Economics and Computation Group. Distinguished Scholar at Wharton.

Science News from Academic Journals etc.

Associate Professor @ UBC

computational sociology

machine learning is feminist

You only have to look at the Medusa straight on to see her. And she’s not deadly. She’s beautiful and she’s laughing.

www.lauraknelson.com

SEO consultant, hobby baker, runner, fells, road, cross-country and marathon runner. IR, NLP, LLM intrigued. MSc digital strategy, now studying MSc computer science with data science (lifelong learner). Pomeranian pooch Mum. Search Awards Judge

A business analyst at heart who enjoys delving into AI, ML, data engineering, data science, data analytics, and modeling. My views are my own.

Machine Learning PhD Student

@ Blei Lab & Columbia University.

Working on probabilistic ML | uncertainty quantification | LLM interpretability.

Excited about everything ML, AI and engineering!

epistemology of science and artificial intelligence // asst prof purdue university // argonne national lab // phd, university of chicago

www.eamonduede.com

Stats Postdoc at Columbia, @bleilab.bsky.social

Statistical ML, Generalization, Uncertainty, Empirical Bayes

https://yulisl.github.io/

NLP Masters Student @stanfordnlp || Working on DSPy 🧩 || Prev @GeorgiaTech @Microsoft @SnowflakeDB

NLP, CSS & Multimodality💫 Graduate Researcher @Stanford NLP | Research Affiliate @Georgia Tech | Data Scientist @Bombora

📍New York, NY

👩💻 https://j-kruk.github.io/

Assistant Professor at UCLA. Alum @StanfordNLP. NLP, Cognitive Science, Accessibility. https://www.coalas-lab.com/elisakreiss