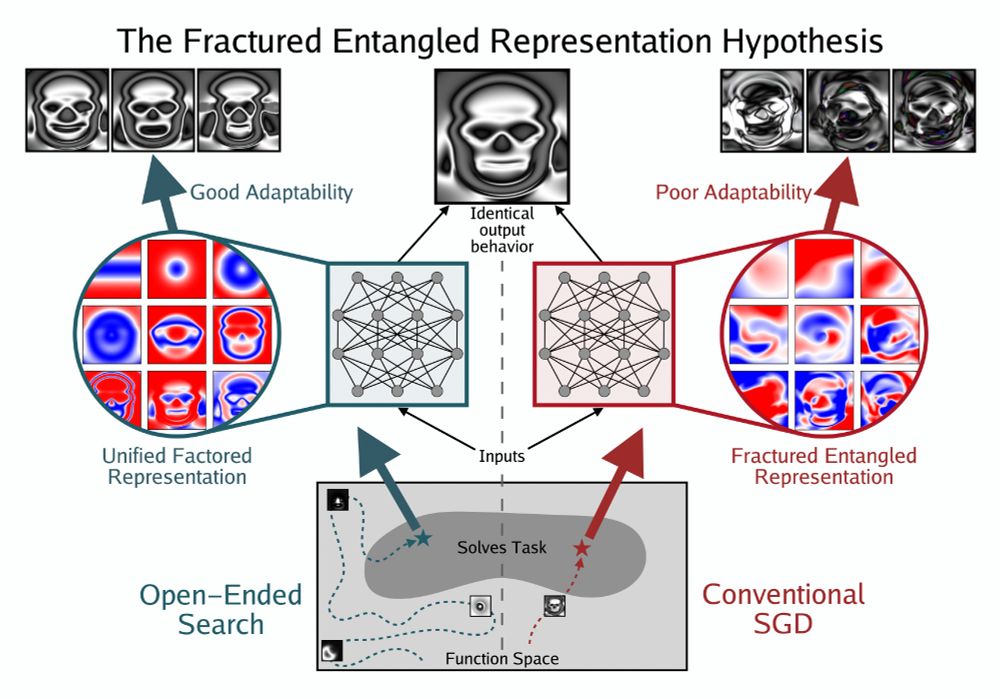

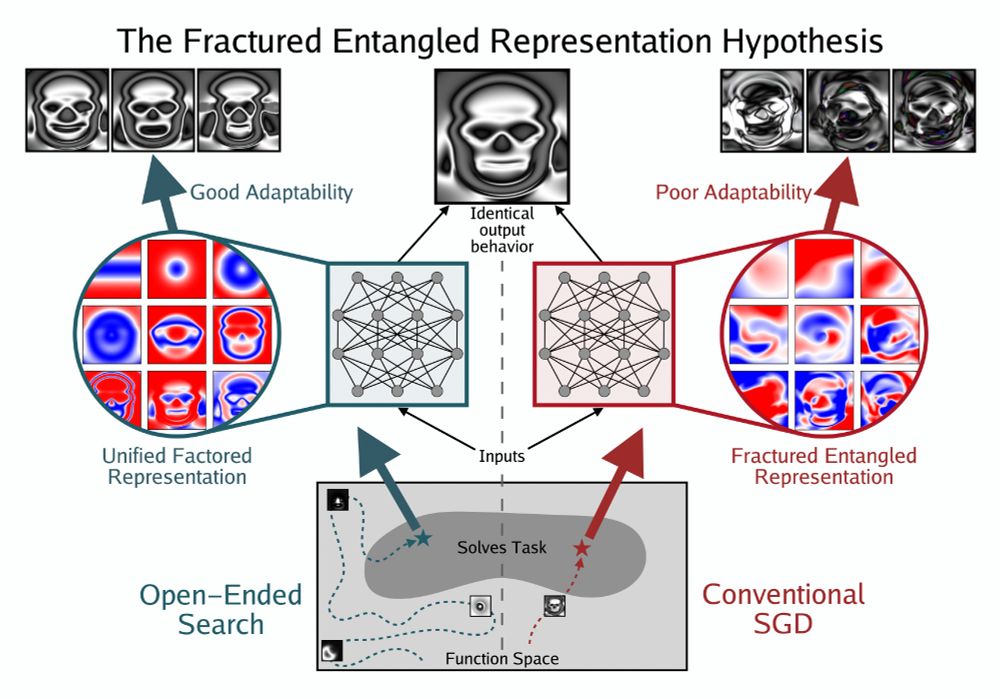

Could a major opportunity to improve representation in deep learning be hiding in plain sight? Check out our new position paper: Questioning Representational Optimism in Deep Learning: The Fractured Entangled Representation Hypothesis.

Paper: arxiv.org/abs/2505.11581

20.05.2025 17:52

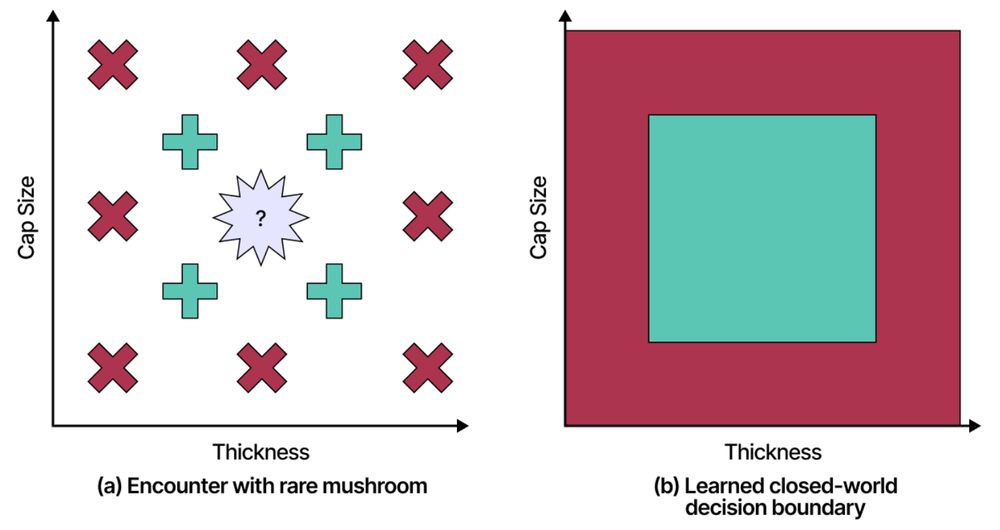

"Corner cases".

"Long tails".

You can play whack-a-mole with them.

Or, you can confront their root causes directly.

Our new paper "Evolution and The Knightian Blindspot of Machine Learning" argues we should do the latter.

24.01.2025 16:09

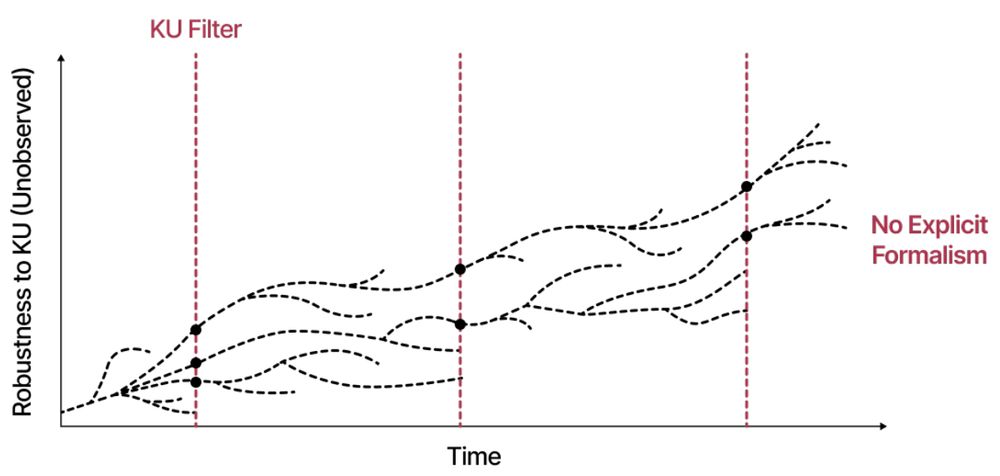

3) ML and RL have a rich history of imaginative new formalisms, like @dhadfieldmenell's CIRL, @marcgbellemare's distributional RL, etc. Highlighting this potential blindspot may unleash the field's substantial creativity, either in refuting it or in usefully encompassing it.

24.01.2025 16:00

2) Open-endedness: the field that rhymes most w/ unknown unknowns, since it explicitly aims to generate them endlessly. We believe OE algos can simultaneously aim toward robustness to them.

Related to @jeffclune's AI-GAs, @_rockt, @kenneth0stanley, @err_more, @MichaelD1729, @pyoudeyer

24.01.2025 16:00

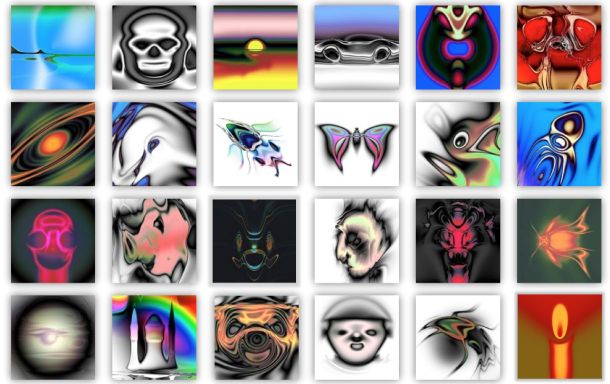

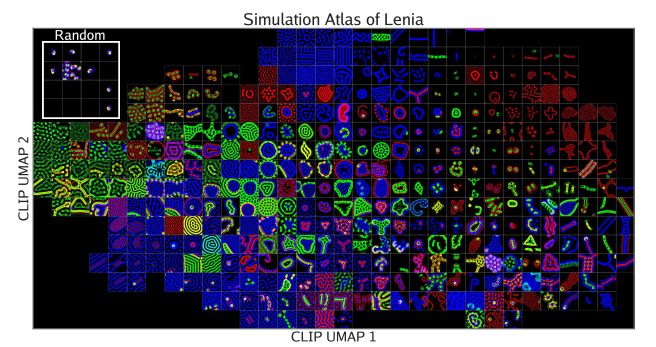

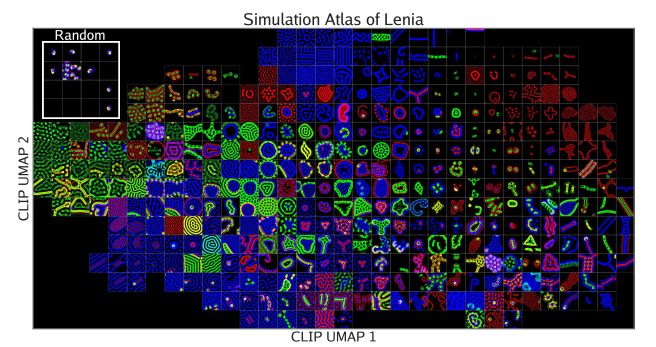

1) Artificial Life: Relative to its grand aspiration of digitally recreating life's tapestry, ALife is underappreciated. Scaling + creativity may uncover novel, robust neural architectures.

See work by @risi1979, @drmichaellevin, @hardmaru, @BertChakovsky, @sina_lana + many others.

24.01.2025 16:00

So what to do? The message could seem negative, but we're optimistic there are many possible avenues for dealing w/ unknown unknowns. Some involve fields currently more peripheral to ML, like Artificial Life or Open-endedness; others involve imagining new ML formalisms & algos.

24.01.2025 16:00

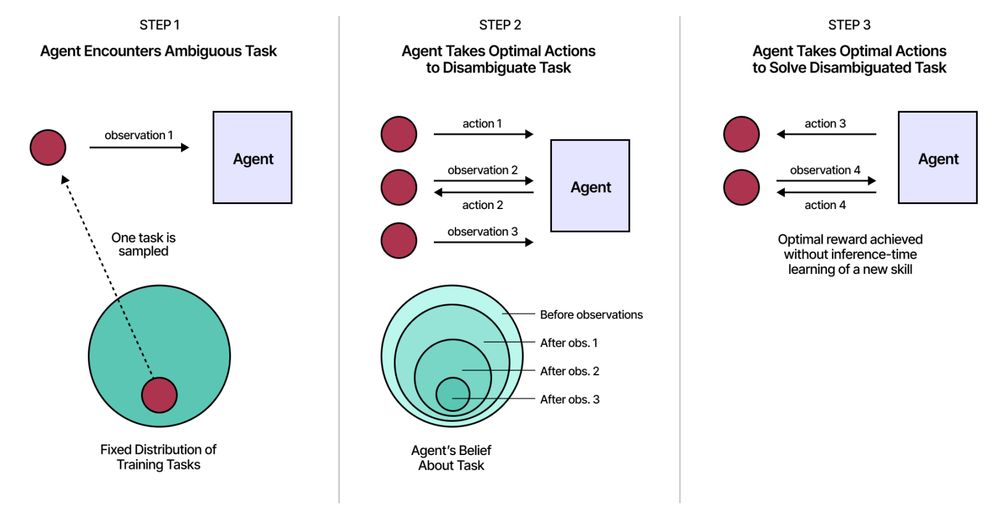

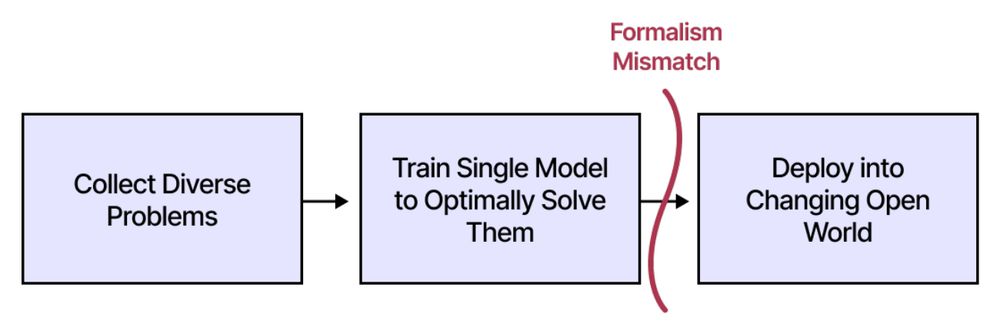

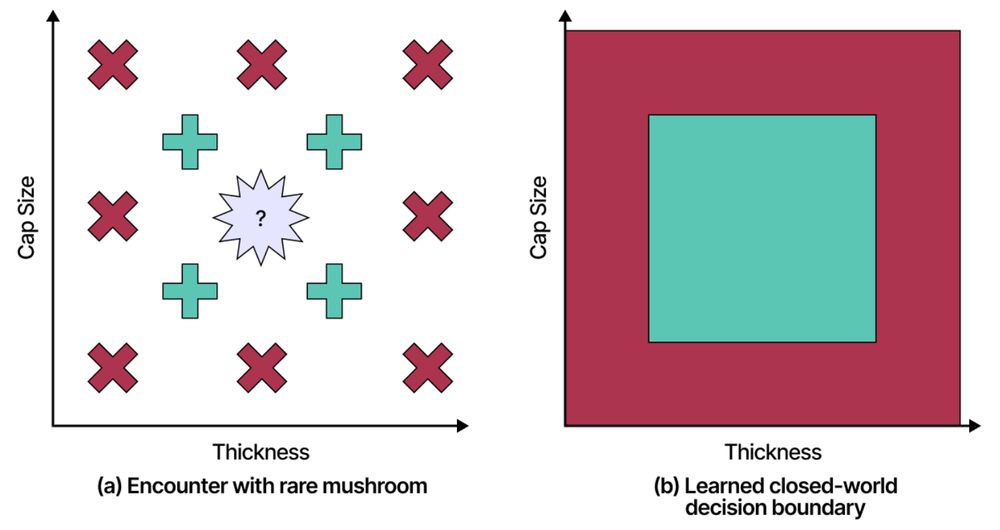

Paradigms like meta-learning ("learning how to learn") are exciting and seem like potential solutions. But they still assume a (meta-)frozen world, and need not incentivize learning how to deal w/ the unknown (the paper has more on other paradigms).

24.01.2025 16:00

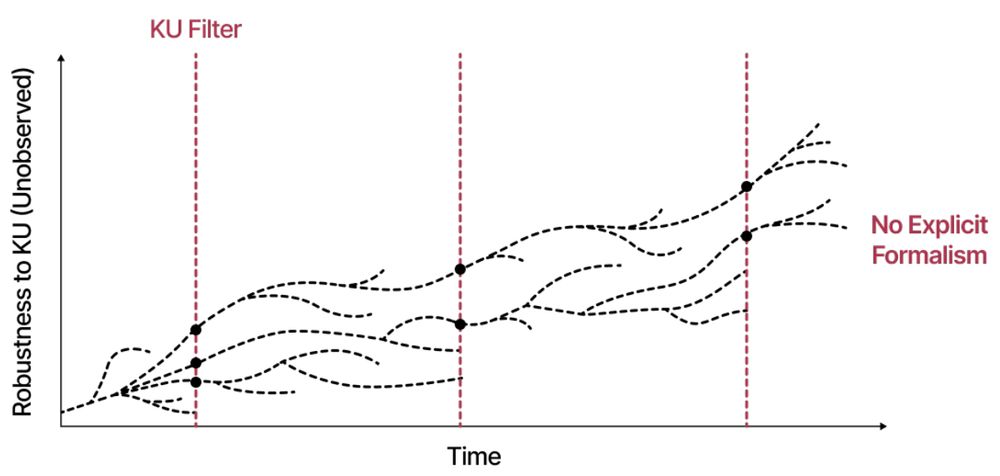

E.g., given one additional edge-case example, it's sometimes more effective to 1) filter many divergent models through it, b/c that better reflects "face a novel problem 0-shot", than to 2) just train on it, which helps generalize to similar situations but not to further unknown unknowns.

24.01.2025 16:00
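A toy sketch of the contrast in the post above, under loudly stated assumptions: the "models" here are hypothetical 1-D lines `y = a*x + b`, and the helper names (`make_divergent_models`, `filter_through_edge_case`, `train_on_edge_case`) are illustrative only, not the paper's method or code. Option 1 keeps only the models that already pass the edge case zero-shot; option 2 fits a single model to that one point by gradient descent.

```python
import random
from typing import List, Tuple

# Hypothetical toy "model": a line y = a*x + b, stored as (a, b).
Params = Tuple[float, float]


def predict(params: Params, x: float) -> float:
    a, b = params
    return a * x + b


def make_divergent_models(n: int, seed: int = 0) -> List[Params]:
    """Diversify: sample n independent, deliberately varied models."""
    rng = random.Random(seed)
    return [(rng.uniform(-3.0, 3.0), rng.uniform(-3.0, 3.0)) for _ in range(n)]


def filter_through_edge_case(models: List[Params], edge_x: float,
                             edge_y: float, tol: float) -> List[Params]:
    """Option 1 (filter): keep only models that already handle the edge
    case zero-shot, i.e. without ever being trained on it."""
    return [m for m in models if abs(predict(m, edge_x) - edge_y) <= tol]


def train_on_edge_case(params: Params, edge_x: float, edge_y: float,
                       lr: float = 0.25, steps: int = 100) -> Params:
    """Option 2 (train): fit one model to the edge case by gradient
    descent on squared error."""
    a, b = params
    # Scale the step by the input's magnitude; with lr=0.25 each step
    # halves the error on the edge case.
    step = lr / (edge_x ** 2 + 1.0)
    for _ in range(steps):
        err = predict((a, b), edge_x) - edge_y
        a -= step * 2.0 * err * edge_x
        b -= step * 2.0 * err
    return a, b


if __name__ == "__main__":
    pool = make_divergent_models(1000)
    survivors = filter_through_edge_case(pool, edge_x=2.0, edge_y=2.0, tol=0.1)
    print(f"{len(survivors)} of {len(pool)} models pass the edge case zero-shot")
    print("trained:", train_on_edge_case((0.0, 0.0), 2.0, 2.0))
```

The trained model is guaranteed to fit the edge case, but only because it saw it; each survivor of the filter passed a test it was never optimized for, which is the "novel problem 0-shot" signal the post argues is more informative about further unknown unknowns.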

Rather than rely only on IID-aimed generalization, evolution takes the bitter lesson to its logical extreme: it learns specialized architectures / learning algos that help organisms generalize to unforeseen situations, tested over time by shocks in a constantly changing world.

24.01.2025 16:00

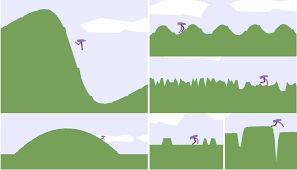

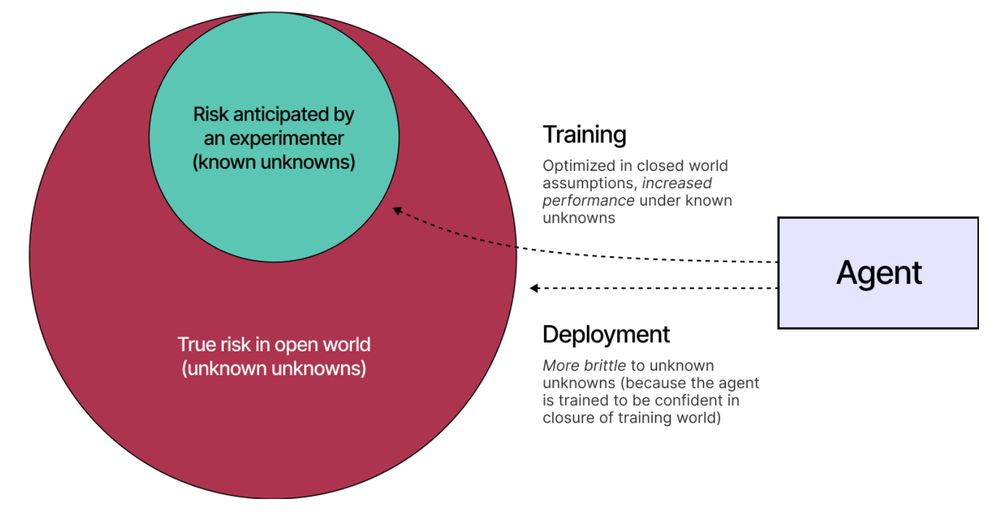

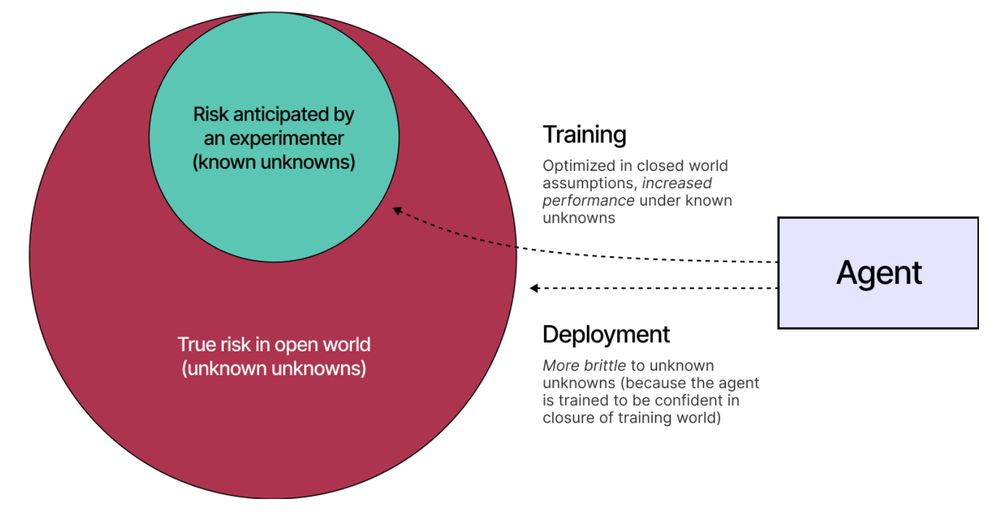

This isn't a dig at LLMs, which are amazing but still interestingly fragile at times. Generalization of big NNs is great, but the underlying assumption is train world = test world = static. The paper argues NN generalization does not directly target robustness to an open, unknown future.

24.01.2025 16:00

Contrasting evolution with machine learning helps highlight the blind spot: a "dumb" algo w/ no gradients or formalisms can nonetheless create much more open-world robustness. In hindsight it makes sense: if an algo implicitly denies a problem's existence, why would it best solve it?

24.01.2025 16:00

Evolution, like science or VC, can be seen as making many diverse bets that future experiments may invalidate (diversify-and-filter). Organisms able to persist through many unexpected shocks are Lindy, i.e. likely to persist through more. D&F can be integrated into ML methods.

24.01.2025 16:00

Interestingly, evolution's products are remarkably robust. Invasive species evolve in one habitat yet dominate another. Humans zero-shot generalize from US driving to UK driving (i.e. w/o any UK data), which is still a big challenge for AI. How does evolution do it, w/o gradients or foresight?

24.01.2025 16:00

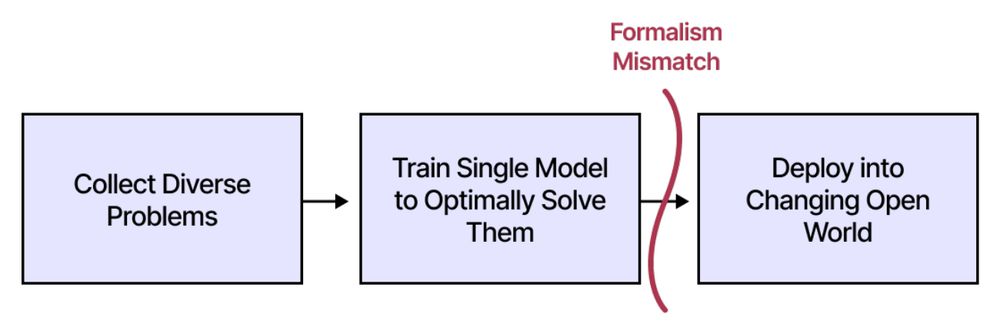

Most open-world AI systems (like LLMs) rely on "anticipate-and-train": collect as much diverse data as possible, in anticipation of everything the model might later encounter. This often works! But training assumes a static, frozen world, which leads to fragility in new situations.

24.01.2025 16:00

Kenneth Stanley (@kennethstanley.bsky.social)

In the past: CEO of Maven, Team Lead at OpenAI, head of basic/core research at Uber AI, professor at UCF.

Stuff I helped invent: NEAT, CPPNs, HyperNEAT, novelty search, POET, Picbreeder.

Book: Why Greatness Cannot Be Planned

Paper: arxiv.org/abs/2501.13075

w/ great colleagues Elliot Meyerson, Tarek El-Gaaly, @kennethstanley.bsky.social, @tarinz.bsky.social

(more details below)

24.01.2025 16:00

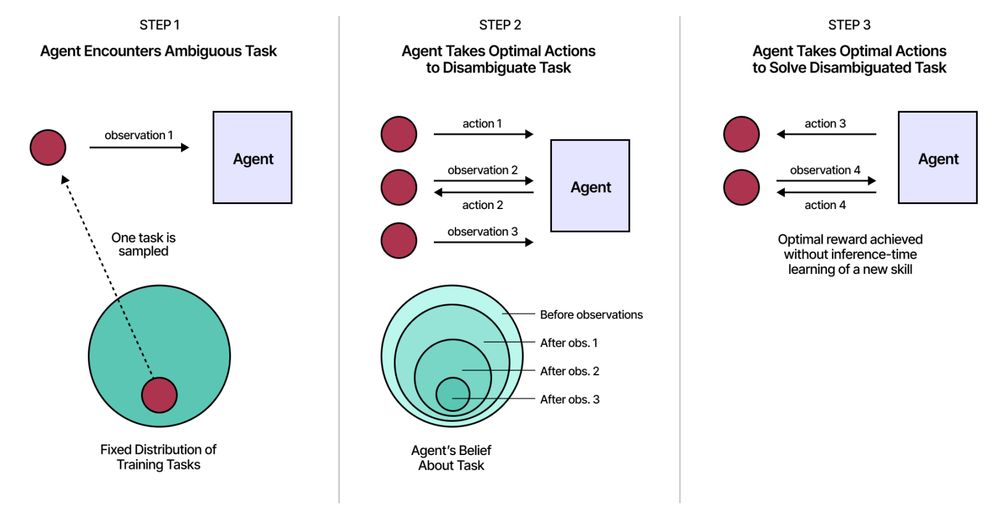

In short, we 1) highlight a blindspot in ML to unknown unknowns, through contrast with evolution, 2) abstract the principles underlying evolution's robustness to UUs, 3) examine RL's formalisms to see what causes the blindspot, and 4) propose research directions to help alleviate it.

24.01.2025 16:00

new paper: "Evolution and the Knightian Blindspot of Machine Learning"

Our ever-changing world bubbles with surprise and complexity. General AI must be able to handle unforeseen situations with grace. Yet this issue largely lies outside AI's formalisms: a blind spot. (1/n)

24.01.2025 16:00

It’s the new year. Delete slack from your phone. Open your email after lunch. Disconnect the WiFi on Saturdays. Go to the woods on the weekend. Purchase a one way ticket to Alaska. Join a community of bears. Film a documentary. Post it on LinkedIn.

02.01.2025 18:38

"Move then with new desires. / For where we used to build and love / Is no-man's land, and only ghosts can live / Between two fires."

-C. Day-Lewis

25.11.2024 19:32

“Friendship Feed” is a feed with the goal of surfacing the people you care about, even if they don’t post a lot!

Mixology:

Find your mutuals, shuffle them, and show one post per person from their latest few.

LMKWYT :)

https://bsky.app/profile/did:plc:wmhp7mubpgafjggwvaxeozmu/feed/bestoffollows

10.08.2023 13:38
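A minimal sketch of the mixology described above, assuming each user's posts are stored newest-first and reading "one post per person from their latest few" as a random pick among the latest `latest_k`. The function names and data shapes are hypothetical; this is not the actual Bluesky feed-generator code or AT Protocol API.

```python
import random
from typing import Dict, List, Optional, Set, Tuple


def find_mutuals(follows: Set[str], followers: Set[str]) -> List[str]:
    """Mutuals are accounts you follow that also follow you back."""
    return sorted(follows & followers)  # sorted so a fixed seed is reproducible


def friendship_feed(follows: Set[str], followers: Set[str],
                    posts_by_user: Dict[str, List[str]],
                    latest_k: int = 3,
                    seed: Optional[int] = None) -> List[Tuple[str, str]]:
    """Shuffle mutuals, then take one post per person from their latest few.

    Assumes each user's post list is ordered newest-first.
    """
    rng = random.Random(seed)
    mutuals = find_mutuals(follows, followers)
    rng.shuffle(mutuals)
    feed = []
    for user in mutuals:
        recent = posts_by_user.get(user, [])[:latest_k]
        if recent:  # skip mutuals who have no posts
            feed.append((user, rng.choice(recent)))
    return feed


if __name__ == "__main__":
    follows = {"ana", "ben", "cai"}
    followers = {"ben", "cai", "dee"}
    posts = {"ben": ["b3", "b2", "b1", "b0"], "cai": ["c0"], "ana": ["a0"]}
    for user, post in friendship_feed(follows, followers, posts, seed=7):
        print(user, post)
```

Capping each person at one post is what lets quiet mutuals show up: a prolific poster gets the same single slot as someone who posted once.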