NEW PAPER

The use of explainable AI in healthcare, evaluated using the well-known Explain, Predict and Describe taxonomy by Galit Shmueli

link.springer.com/article/10.1...

05.12.2025 09:08

🧐

27.11.2025 19:50

this is one of my favourite observations about sample size calculations (afaik first articulated by Miettinen in 1985).

25.11.2025 10:56

Ha! I did not know I quoted Miettinen :). Thanks for the reference

25.11.2025 11:41

For some research studies the optimal sample size should be estimated at 0

25.11.2025 10:51

“Data available upon reasonable request” is academic language for “you can get my data OVER MY DEAD BODY”

18.11.2025 11:10

I take version control very seriously

14.11.2025 14:20

Manuscript_Final_Version_actualFINALcopy_version9b_USETHISONE.docx

14.11.2025 14:16

Prediction models that are used to guide medical decisions are usually regulated under medical device regulation. This means that putting a calculator out there to promote the use of your new prediction model is likely to break some rules.

01.11.2025 13:46

The lasso works really well in particular settings and for particular purposes. If you are after high prediction performance alone and you have a rather large sample size, it can be an excellent choice indeed. But most analytical goals are not only about prediction

12.10.2025 13:57

Kind reminder: data driven variable selection (e.g. forward/stepwise/univariable screening) makes things *worse* for most analytical goals

08.10.2025 13:38

Interpretable "AI" is just a distraction from safe and useful "AI"

22.09.2024 19:31

This is right tho. Let’s therefore call them sensitivity positive predictive value curves bsky.app/profile/laur...

19.08.2025 15:28
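
The curve in question is usually called a precision-recall curve: precision is the positive predictive value (PPV) and recall is sensitivity, each computed across risk thresholds. A minimal pure-Python sketch, assuming 0/1 outcomes and a risk score where higher means more likely positive; the function name `sensitivity_ppv_curve` and the toy data are hypothetical, for illustration only.

```python
def sensitivity_ppv_curve(y_true, y_score):
    """Sensitivity (recall) and PPV (precision) at every observed score used as a cut-off.

    Assumes y_true holds 0/1 outcomes and that a higher y_score means higher risk;
    a subject is classified as positive when y_score >= threshold.
    """
    n_pos = sum(y_true)
    if n_pos == 0:
        raise ValueError("need at least one positive outcome")
    curve = []
    for threshold in sorted(set(y_score), reverse=True):
        flagged = [y for y, s in zip(y_true, y_score) if s >= threshold]
        tp = sum(flagged)              # true positives among those flagged
        fp = len(flagged) - tp         # false positives among those flagged
        sensitivity = tp / n_pos       # a.k.a. recall
        ppv = tp / (tp + fp)           # a.k.a. precision; never 0/0 since flagged is non-empty
        curve.append((threshold, sensitivity, ppv))
    return curve


# Hypothetical toy data, for illustration only
y_true = [1, 0, 1, 1, 0]
y_score = [0.9, 0.8, 0.7, 0.4, 0.2]
for threshold, sens, ppv in sensitivity_ppv_curve(y_true, y_score):
    print(f"cut-off {threshold:.1f}: sensitivity {sens:.2f}, PPV {ppv:.2f}")
```

In practice, scikit-learn's `precision_recall_curve` returns the same two quantities (under their precision/recall names) without hand-rolling the loop.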

No.

19.08.2025 15:22

I wonder who those people are who come here dying to know what GenAI has done with some prompt you put in

13.08.2025 09:21

If you think AI is cool, wait until you learn about regression analysis

12.08.2025 11:44

TL;DR: Explainable AI models often don't do a good job explaining. They can be very useful for description. We should be really careful when using Explainable AI in clinical decision making, and even when judging the face validity of AI models.

Excellently led by @alcarriero.bsky.social

11.08.2025 06:54

NEW PREPRINT

Explainable AI refers to an extremely popular group of approaches that aim to open "black box" AI models. But what can we see when we open the black AI box? We use Galit Shmueli's framework (to describe, predict or explain) to evaluate

arxiv.org/abs/2508.05753

11.08.2025 06:53

The healthcare literature is filled with "risk factors". This word combination makes research findings sound important by implying causality, while avoiding direct claims of having identified causal associations that are easily critiqued.

31.07.2025 08:32

And taking this analogy one step further: it gives genuine phone repair shops a bad name

24.07.2025 08:26

When forced to make a choice, my choice will be the logistic regression model over the linear probability model 103% of the time

23.07.2025 20:43
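
One standard argument for this preference (not spelled out in the post itself): a linear probability model, i.e. ordinary least squares on a 0/1 outcome, can return fitted "probabilities" below 0 or above 1, whereas the logistic link keeps every prediction inside (0, 1). A minimal pure-Python sketch on made-up toy data with one predictor; the helper names `fit_lpm` and `fit_logistic` are hypothetical, and the bare-bones Newton-Raphson fit stands in for a proper statistics package.

```python
import math


def fit_lpm(x, y):
    """Linear probability model: ordinary least squares of a 0/1 outcome on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope


def fit_logistic(x, y, iterations=25):
    """Logistic regression (intercept + one slope) by Newton-Raphson.

    Assumes the data are not perfectly separated, otherwise the MLE does not exist.
    """
    b0 = b1 = 0.0
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * xi)))
            w = p * (1.0 - p)
            g0 += yi - p              # score for the intercept
            g1 += (yi - p) * xi       # score for the slope
            h00 += w                  # entries of the information matrix X'WX
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        # Newton step: beta <- beta + (X'WX)^{-1} X'(y - p), using the 2x2 inverse
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1


# Hypothetical toy data, for illustration only
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [0, 0, 0, 1, 0, 1, 1, 1]

a0, a1 = fit_lpm(x, y)
b0, b1 = fit_logistic(x, y)
for xi in x:
    lpm = a0 + a1 * xi
    logit = 1.0 / (1.0 + math.exp(-(b0 + b1 * xi)))
    print(f"x={xi}: LPM {lpm:+.3f}, logistic {logit:.3f}")
```

With these toy data the fitted LPM line is -0.25 + x/6, so its "probability" is about -0.08 at x = 1 and about 1.08 at x = 8, even inside the observed range of x; the logistic predictions stay strictly between 0 and 1.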

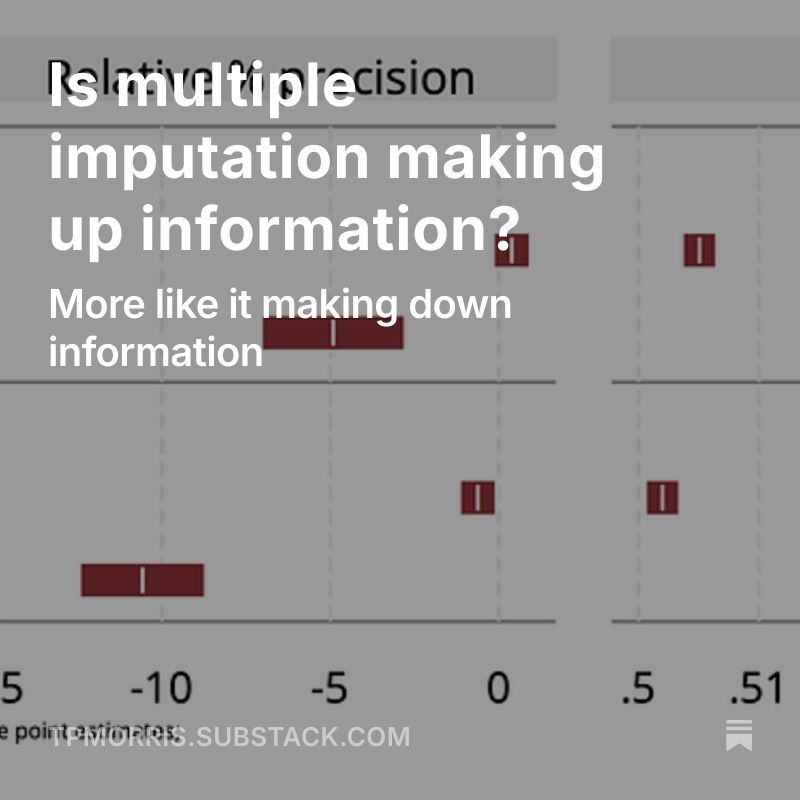

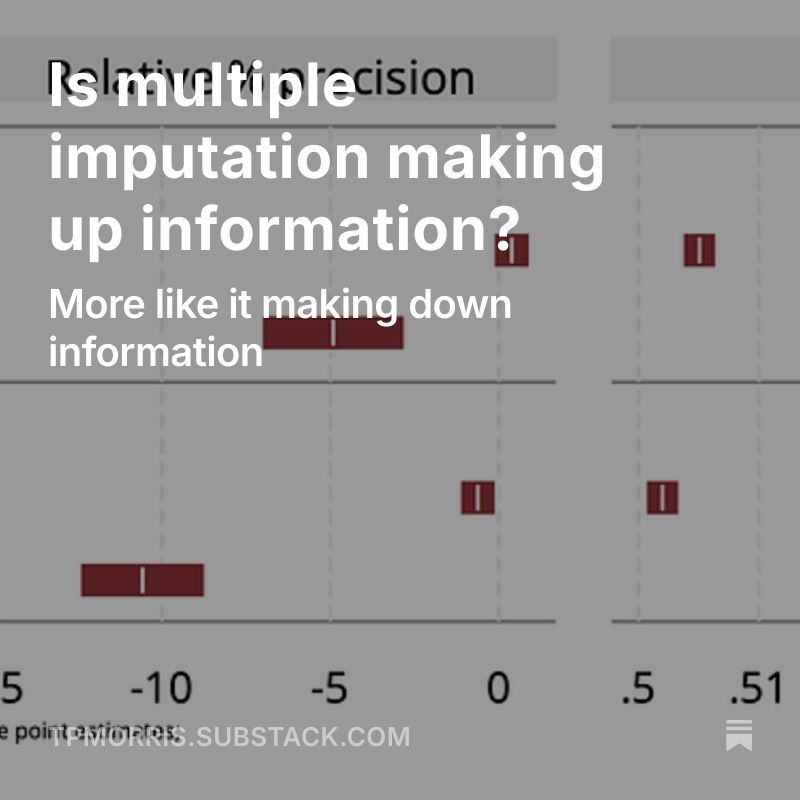

Cover picture with blog title & subtitle, and results graph in the background

Post just up: Is multiple imputation making up information?

tldr: no.

Includes a cheeky simulation study to demonstrate the point.

open.substack.com/pub/tpmorris...

23.07.2025 15:29

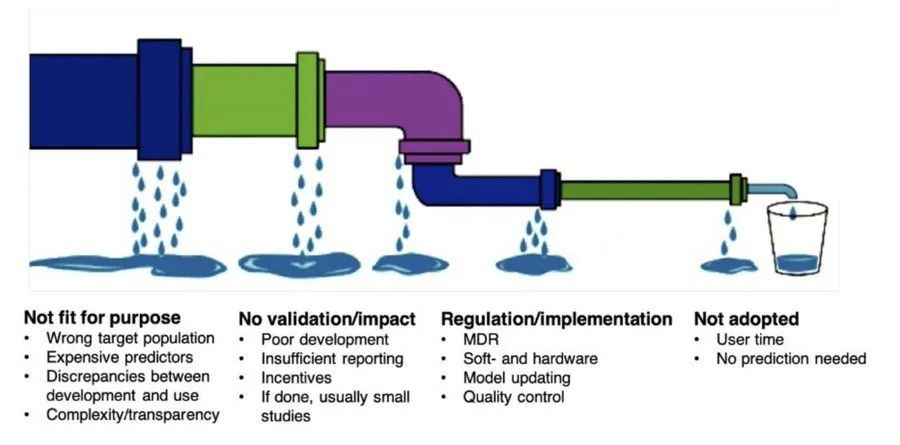

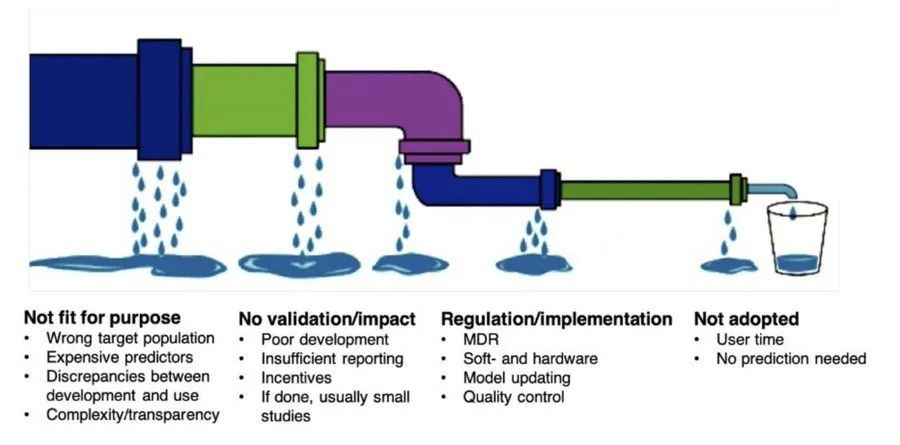

The leaky pipe of clinical prediction models, by @maartenvsmeden.bsky.social et al.

You can have all the omni-omics data in the world and the bestest algorithms, but eventually a predicted probability is produced & it should be evaluated using well-established methods, and correctly implemented in the context of medical decision making.

statsepi.substack.com/i/140315566/...

14.07.2025 09:49

Clients: “I want to find real, meaningful clusters”

Me: “I want world peace, which is more likely to happen than what you want”

11.07.2025 12:45

Depending on which methods guru you ask, every analytical task is “essentially” a missing data problem, a causal inference problem, a Bayesian problem, a regression problem or a machine learning problem

10.07.2025 15:05

07.07.2025 12:04