This is quite insightful

27.02.2025 01:02 — 👍 0 🔁 0 💬 0 📌 0

How has DeepSeek improved the Transformer architecture?

This Gradient Updates issue goes over the major changes that went into DeepSeek’s most recent model.

Very good (technical) explainer answering "How has DeepSeek improved the Transformer architecture?". Aimed at readers already familiar with Transformers.

epoch.ai/gradient-upd...

30.01.2025 21:07 — 👍 282 🔁 64 💬 6 📌 5

Very interesting paper by Ananda Theertha Suresh et al.

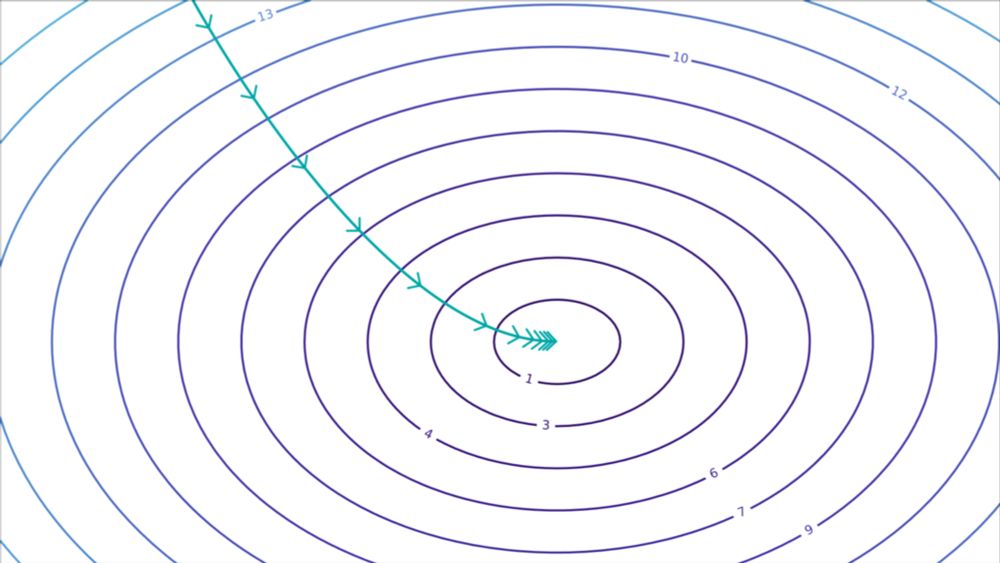

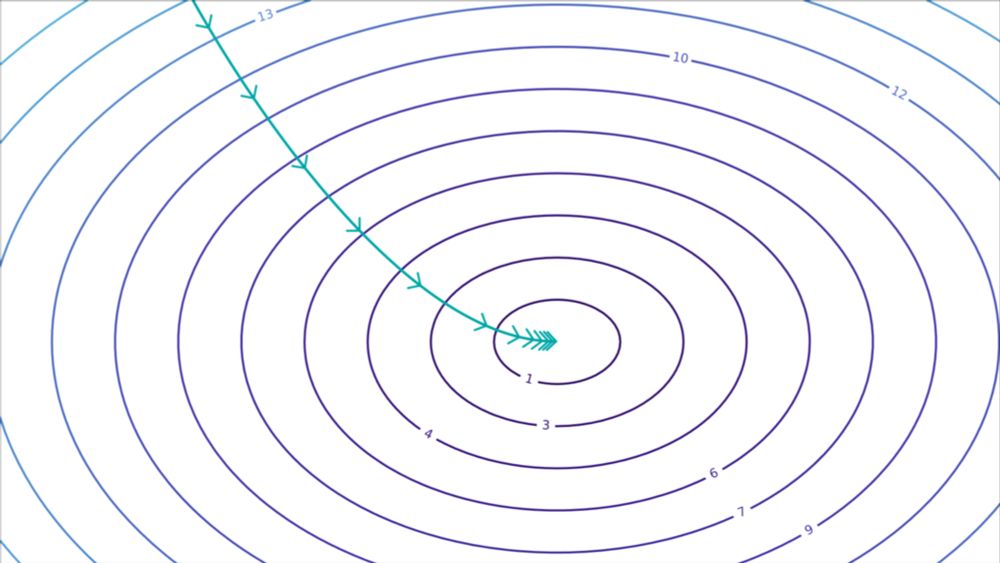

For categorical and Gaussian distributions, they derive the rate at which a sample is forgotten: 1/k after k rounds of recursive training (hence 𝐦𝐨𝐝𝐞𝐥 𝐜𝐨𝐥𝐥𝐚𝐩𝐬𝐞 happens more slowly than one might intuitively expect).

27.12.2024 23:35 — 👍 35 🔁 5 💬 1 📌 0
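The recursive-training setup in the post above can be simulated in a few lines. This is a sketch, not the paper's method. It assumes that each round refits a categorical model by maximum likelihood (empirical frequencies) on n samples drawn from the previous round's model. It then tracks how often a rare symbol has vanished after k rounds. The function names and parameters (`recursive_fit`, `forgotten_fraction`, `n`, `trials`) are hypothetical, and the printed fractions are not claimed to reproduce the paper's 1/k result.

```python
import random
from collections import Counter


def recursive_fit(probs, n, rounds, rng):
    """Hypothetical helper: refit a categorical model on n samples drawn
    from the previous round's model, `rounds` times.

    Assumption: each refit is the MLE, i.e. the empirical frequencies.
    Returns the fitted distributions, index 0 being the original one.
    """
    history = [dict(probs)]
    current = dict(probs)
    for _ in range(rounds):
        symbols = list(current)
        weights = [current[s] for s in symbols]
        counts = Counter(rng.choices(symbols, weights=weights, k=n))
        # Symbols that were not sampled get probability zero and are dropped,
        # so they can never be sampled again (forgetting is absorbing).
        current = {s: counts[s] / n for s in symbols if counts[s] > 0}
        history.append(current)
    return history


def forgotten_fraction(symbol, probs, n, rounds, trials, seed=0):
    """Hypothetical helper: fraction of independent runs in which `symbol`
    has disappeared from the model after k rounds, for k = 0..rounds."""
    rng = random.Random(seed)
    forgotten = [0] * (rounds + 1)
    for _ in range(trials):
        for k, dist in enumerate(recursive_fit(probs, n, rounds, rng)):
            if symbol not in dist:
                forgotten[k] += 1
    return [f / trials for f in forgotten]


if __name__ == "__main__":
    # A symbol with 1% mass appears about once per 100 samples.
    fractions = forgotten_fraction(
        "rare", {"rare": 0.01, "common": 0.99}, n=100, rounds=20, trials=500
    )
    for k in (1, 5, 10, 20):
        print(f"k={k:2d}: P(rare forgotten) ~ {fractions[k]:.2f}")
```

A symbol with zero count gets probability zero and is never sampled again, so in this sketch the forgotten fraction can only grow with k.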

I am an AI researcher working on safe AI. My most recent work can be found at arxiv.org/abs/2407.14937. I am trying to connect with other AI researchers on 🦋; follow me here, and I will follow you back.

19.11.2024 02:15 — 👍 0 🔁 0 💬 0 📌 0

Computational Linguists—Natural Language—Machine Learning

Bold science, deep and continuous collaborations.

Assistant Professor at the Department of Computer Science, University of Liverpool.

https://lutzoe.github.io/

Building personalized Bluesky feeds for academics! Pin Paper Skygest, which serves posts about papers from accounts you're following: https://bsky.app/profile/paper-feed.bsky.social/feed/preprintdigest. By @sjgreenwood.bsky.social and @nkgarg.bsky.social

Lawyer, author, EFF's first hire, Godwin's Law creator (he/him). Retweeting!=endorsing. I tell jokes here, mostly. My opinions here don't necessarily represent any employer or any client. You may have known me as @sfmnemonic on Twitter.

Independent AI researcher, creator of datasette.io and llm.datasette.io, building open source tools for data journalism, writing about a lot of stuff at https://simonwillison.net/

Senior Director, Research Scientist @ Meta FAIR + Visiting Prof @ NYU.

Pretrain+SFT: NLP from Scratch (2011). Multilayer attention+position encode+LLM: MemNet (2015). Recent (2024): Self-Rewarding LLMs & more!

Google Chief Scientist, Gemini Lead. Opinions stated here are my own, not those of Google. Gemini, TensorFlow, MapReduce, Bigtable, Spanner, ML things, ...

Researching reasoning at OpenAI | Co-created Libratus/Pluribus superhuman poker AIs, CICERO Diplomacy AI, and OpenAI o-series / 🍓

Every night: trying to take over the world. The rest of the time: MCF (maître de conférences, roughly associate professor), Paris.

@GuillaumeG_ on X

🇨🇵 🇬🇧 🇪🇸 🇮🇹

Math Assoc. Prof. (On leave, Aix-Marseille, France)

Teaching Project (non-profit): https://highcolle.com/

postdoc at MIT CSAIL working on solving combinatorial problems with neural networks

Asst Prof. @ UCSD | PI of LeM🍋N Lab | Former Postdoc at ETH Zürich, PhD @ NYU | computational linguistics, NLProc, CogSci, pragmatics | he/him 🏳️‍🌈

alexwarstadt.github.io

The world's leading venue for collaborative research in theoretical computer science. Follow us at http://YouTube.com/SimonsInstitute.

Bioinformatics Scientist / Next Generation Sequencing, Single Cell and Spatial Biology, Next Generation Proteomics, Liquid Biopsy, SynBio, Compute Acceleration in biotech // http://albertvilella.substack.com

Hon. Associate Professor UCL CS | Ex-Dir. Research AI for Good & Head of Element AI London Office | Ex-DeepMind. He/Him | https://cornebise.com

Incoming Assistant Prof @sbucompsc @stonybrooku.

Researcher → @SFResearch

Ph.D. → @ColumbiaCompSci

Human Centered AI / Future of Work / AI & Creativity