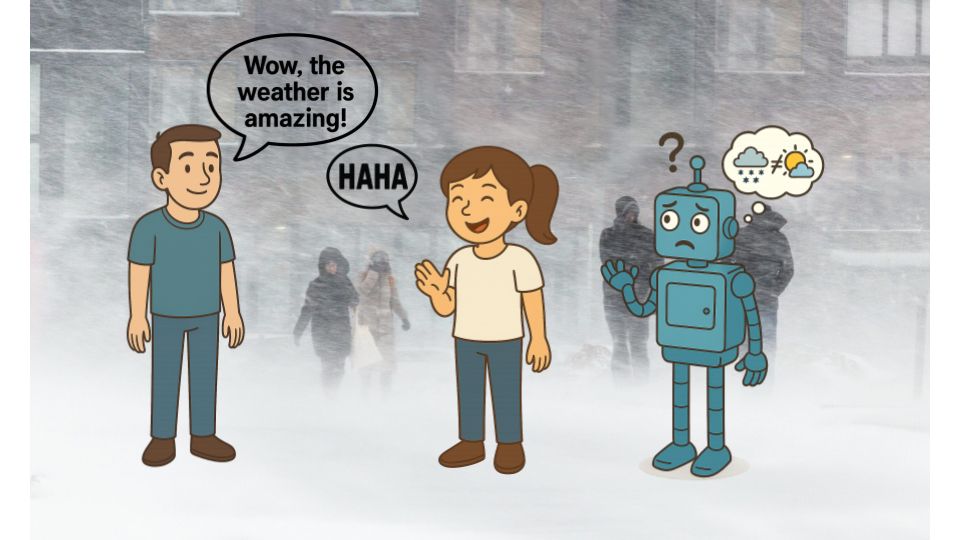

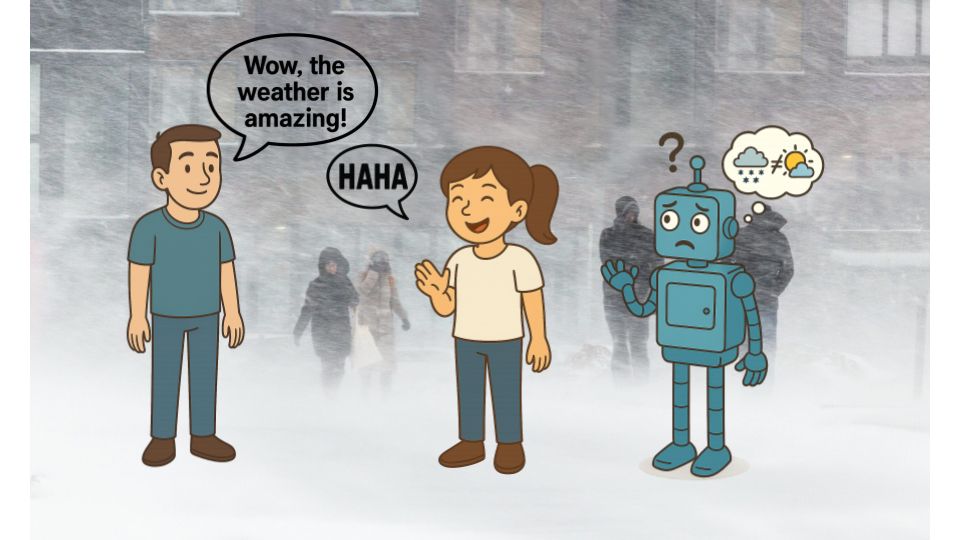

A blizzard is raging through Montreal when your friend says “Looks like Florida out there!” Humans easily interpret irony, while LLMs struggle with it. We propose a *rhetorical-strategy-aware* probabilistic framework as a solution.

Paper: arxiv.org/abs/2506.09301 to appear @ #ACL2025 (Main)

26.06.2025 15:52 —

👍 15

🔁 7

💬 1

📌 4

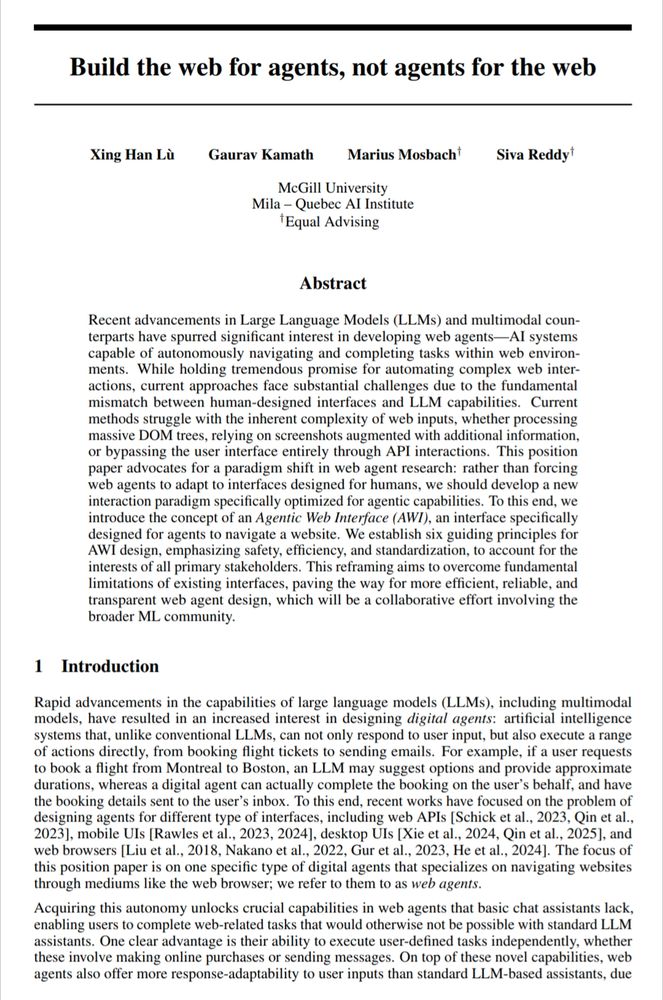

"Build the web for agents, not agents for the web"

This position paper argues that rather than forcing web agents to adapt to UIs designed for humans, we should develop a new interface optimized for web agents, which we call an Agentic Web Interface (AWI).

arxiv.org/abs/2506.10953

14.06.2025 04:17 —

👍 6

🔁 4

💬 0

📌 0

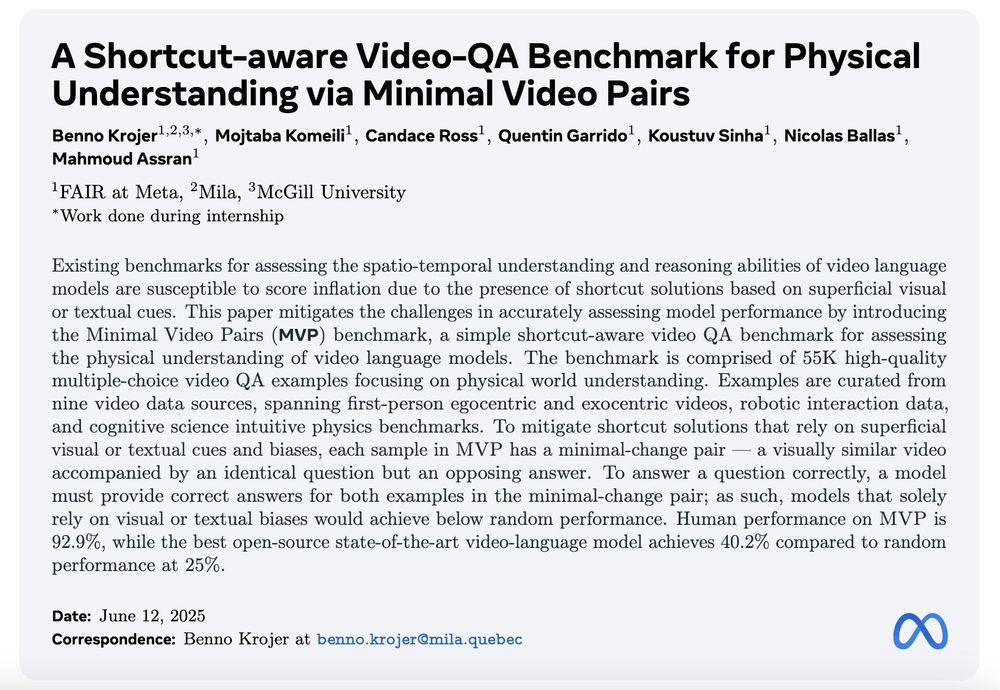

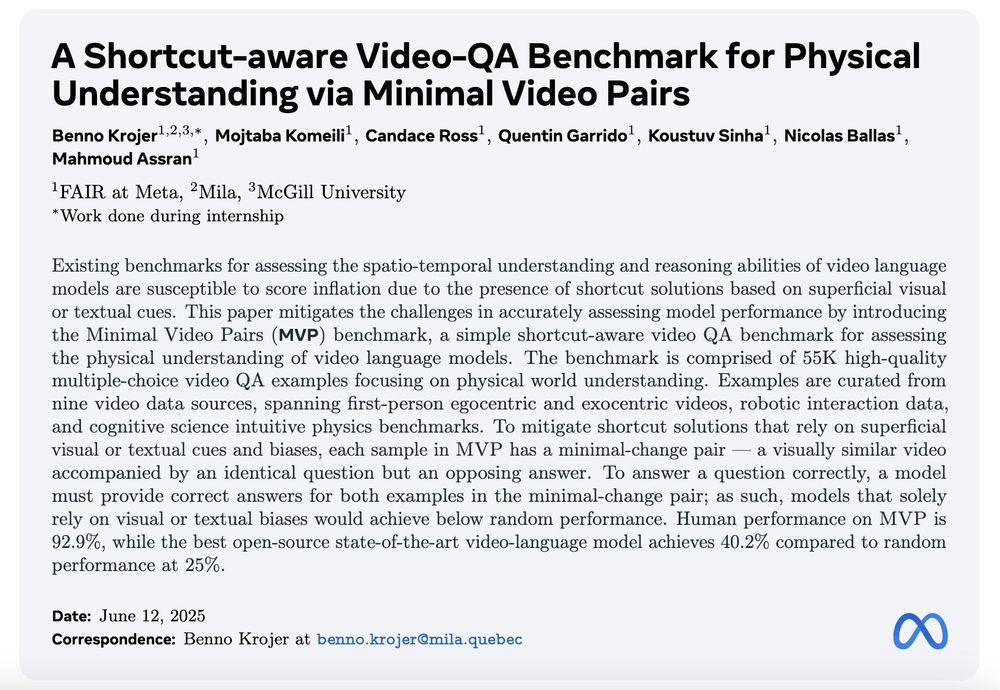

Excited to share the results of my recent internship!

We ask 🤔

What subtle shortcuts are VideoLLMs taking on spatio-temporal questions?

And how can we instead curate shortcut-robust examples at scale?

We release: MVPBench

Details 👇🔬

13.06.2025 14:47 —

👍 16

🔁 5

💬 1

📌 0

Exciting work on hallucinations from @ziling-cheng.bsky.social

06.06.2025 18:15 —

👍 2

🔁 0

💬 0

📌 0

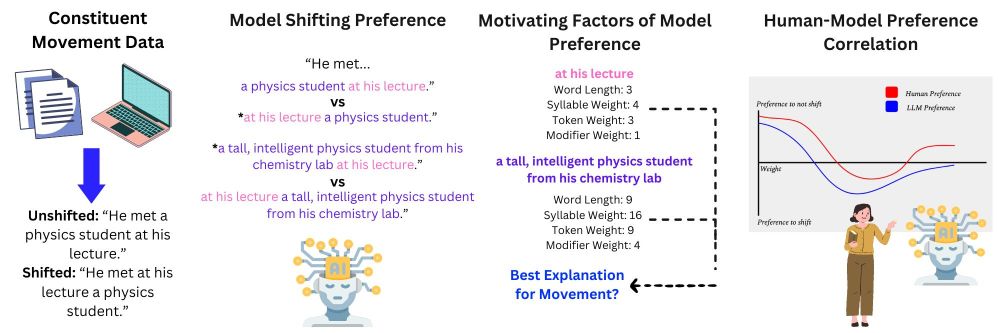

Incredibly proud of my students @adadtur.bsky.social and Gaurav Kamath for winning a SAC award at #NAACL2025 for their work on assessing how LLMs model constituent shifts.

01.05.2025 15:11 —

👍 17

🔁 5

💬 1

📌 0

Congratulations to Mila members @adadtur.bsky.social, Gaurav Kamath and @sivareddyg.bsky.social for their SAC award at NAACL! Check out Ada's talk in Session I: Oral/Poster 6. Paper: arxiv.org/abs/2502.05670

01.05.2025 14:30 —

👍 13

🔁 7

💬 0

📌 3

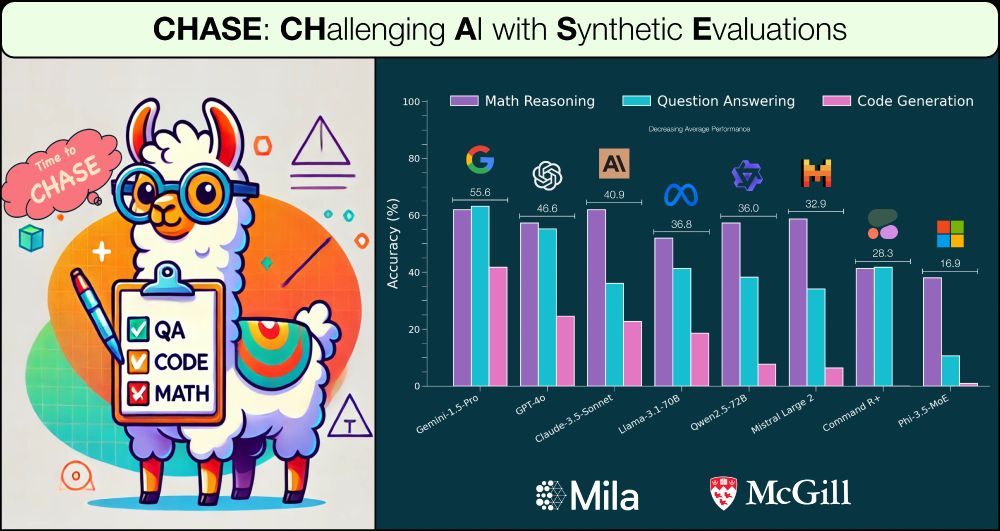

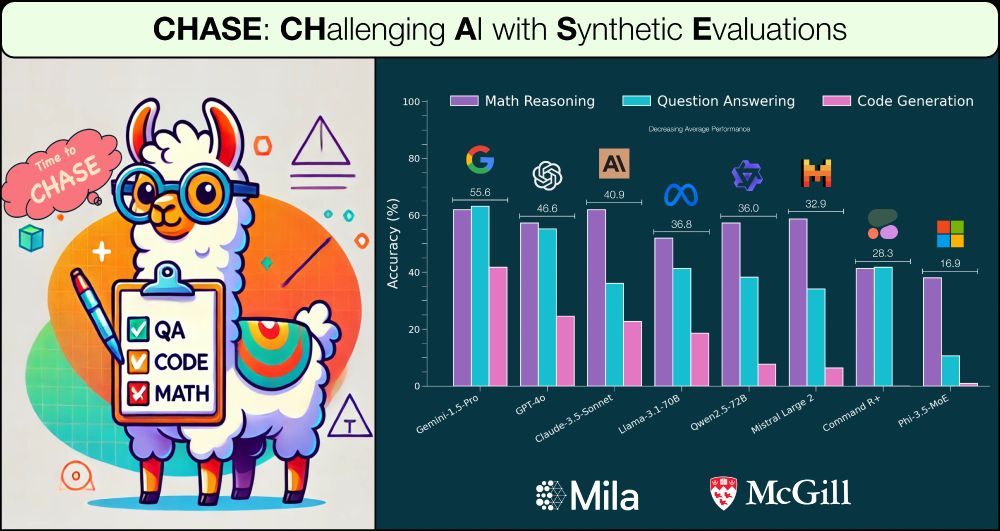

Presenting ✨ **CHASE: Generating challenging synthetic data for evaluation** ✨

Work w/ fantastic advisors Dima Bahdanau and @sivareddyg.bsky.social

Thread 🧵:

21.02.2025 16:28 —

👍 17

🔁 8

💬 1

📌 1

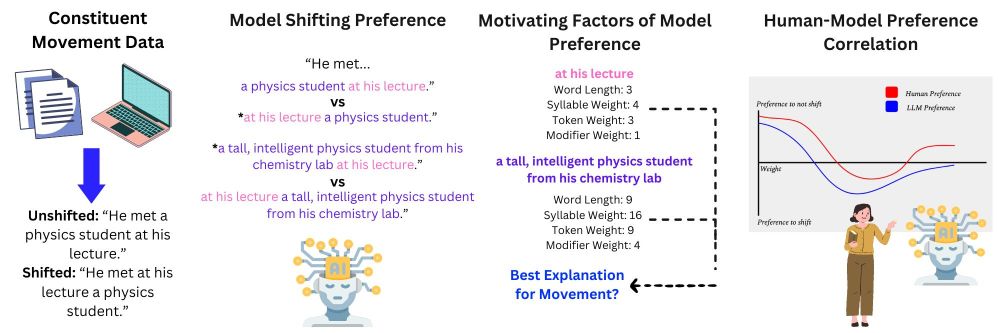

Overview figure for the paper, showing the creation of constituent movement data and the three-step experimentation: "Model Shifting Preference", "Motivating Factors of Model Preference", "Human-Model Preference Correlation"

Super excited to finally announce our NAACL 2025 main conference paper “Language Models Largely Exhibit Human-like Constituent Ordering Preferences”!

We examine constituent ordering preferences between humans and LLMs; we present two main findings… 🧵

19.02.2025 19:31 —

👍 5

🔁 2

💬 1

📌 1

At McGill we have an NLP lab that works on a lot of things, from human-AI collaboration, to evaluation, to low-resource NLP (me).

@emnlpmeeting.bsky.social just happened in Miami, and my colleagues just presented six papers there:

24.11.2024 16:31 —

👍 8

🔁 1

💬 1

📌 0

Thank you for trying again! I don't have a solution to the search issue yet and might contact support soon. Will let you know once we're indexed!

24.11.2024 13:12 —

👍 1

🔁 0

💬 1

📌 0

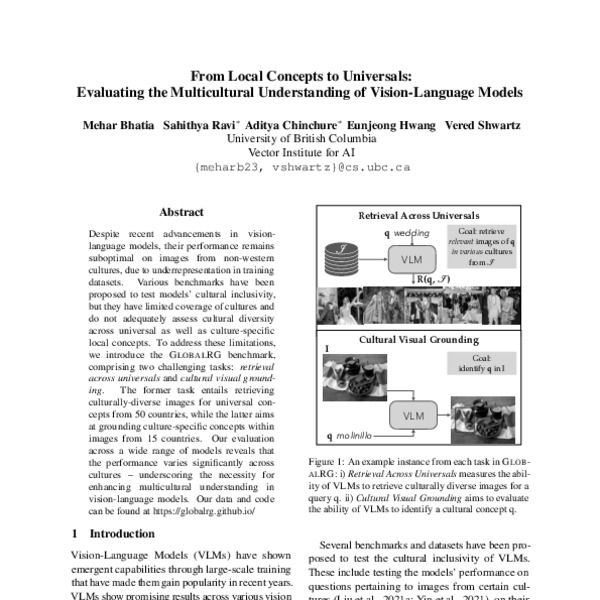

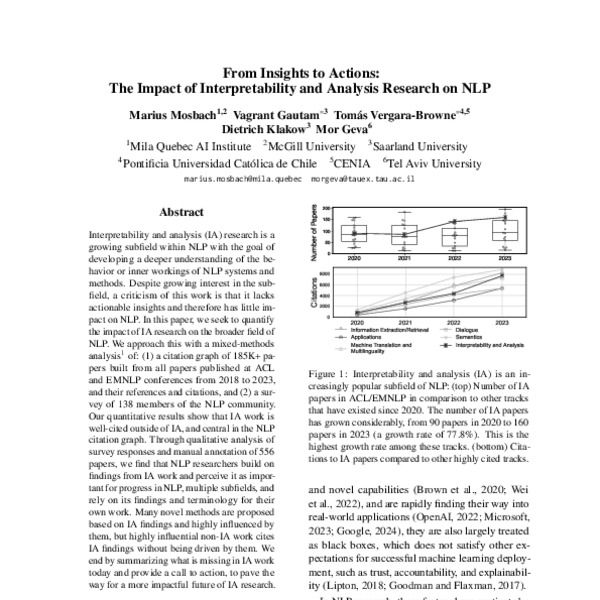

It turns out we had even more papers at EMNLP!

Let's complete the list with three more 🧵

24.11.2024 02:17 —

👍 14

🔁 4

💬 1

📌 1

Our lab members recently presented 3 papers at @emnlpmeeting.bsky.social in Miami ☀️ 📜

From interpretability to bias/fairness and cultural understanding -> 🧵

23.11.2024 20:35 —

👍 19

🔁 6

💬 1

📌 2

Hello 👋 could you add us? Great initiative!

22.11.2024 19:55 —

👍 2

🔁 0

💬 1

📌 0