A new paper accepted at @colmweb.org COLM 2025! I led a group of 3 brilliant students to dive deep into the problem of discrimination in language models. We discovered that models that make racist decisions don’t always have biased thoughts!

25.07.2025 00:03

Our new paper in #PNAS (bit.ly/4fcWfma) presents a surprising finding: when words change meaning, older speakers rapidly adopt the new usage, so inter-generational differences are often minor.

w/ Michelle Yang, @sivareddyg.bsky.social, @msonderegger.bsky.social and @dallascard.bsky.social 👇 (1/12)

29.07.2025 12:05

What do systematic hallucinations in LLMs tell us about their generalization abilities?

Come to our poster at #ACL2025 on July 29th at 4 PM in Level 0, Halls X4/X5. Would love to chat about interpretability, hallucinations, and reasoning :)

@mcgill-nlp.bsky.social @mila-quebec.bsky.social

28.07.2025 09:18

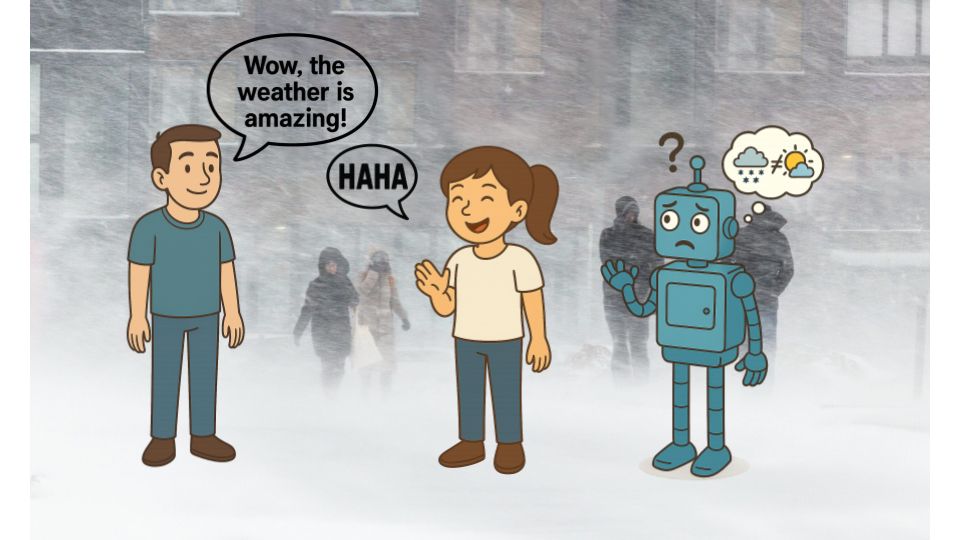

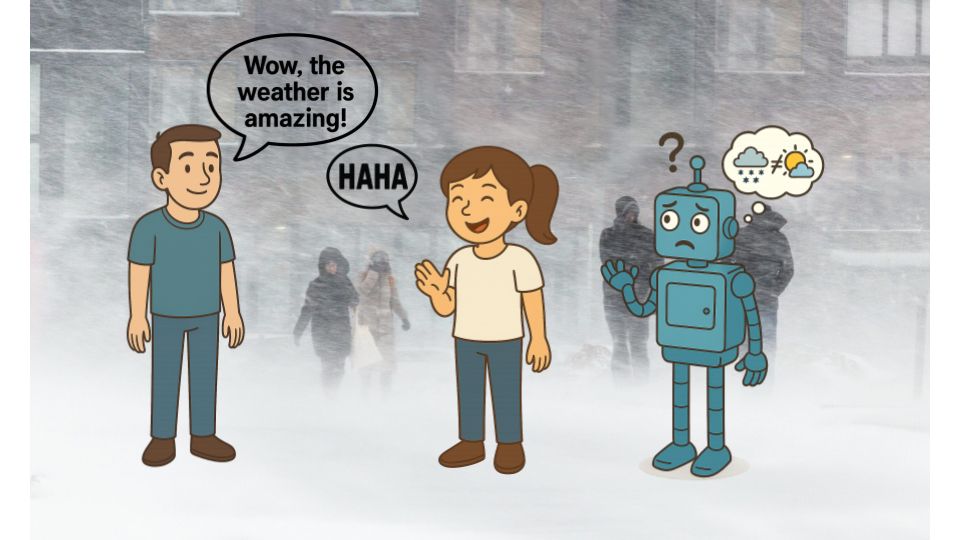

How can we use models of cognition to help LLMs interpret figurative language (irony, hyperbole) in a more human-like manner? Come to our #ACL2025NLP poster on Wednesday at 11AM (exhibit hall - exact location TBA) to find out! @mcgill-nlp.bsky.social @mila-quebec.bsky.social @aclmeeting.bsky.social

28.07.2025 09:16

Thanks to collaborators David Austin, Pablo Piantanida and Jackie Cheung. We also received some amazing feedback from the @mila-quebec.bsky.social @mcgill-nlp.bsky.social community! And thanks to Jennifer Hu, Justine Kao and Polina Tsvilodub for sharing their datasets.

26.06.2025 15:57

Other cool findings:

1. We prove that (RSA)^2 is more expressive than QUD-based RSA.

2. Naively applying RSA to LLMs leads to probability 𝘴𝘱𝘳𝘦𝘢𝘥𝘪𝘯𝘨, not 𝘯𝘢𝘳𝘳𝘰𝘸𝘪𝘯𝘨! Are there better ways to use RSA with LLMs?

3. What if we don't know the rhetorical strategies? We develop a clustering algorithm too!

26.06.2025 15:53

What about LLMs? We integrate LLMs within (RSA)^2 and test them on a new dataset, PragMega+. We show that LLMs augmented with (RSA)^2 produce probability distributions which are more aligned with human expectations.

26.06.2025 15:53

We test (RSA)^2 on two existing figurative language datasets: hyperbolic number expressions (e.g. “This kettle costs $1,000”) and ironic utterances about the weather (e.g. “The weather is amazing” during a Montreal blizzard). We obtain meaning distributions that are compatible with those of humans!

26.06.2025 15:53

We develop (RSA)^2: a 𝘳𝘩𝘦𝘵𝘰𝘳𝘪𝘤𝘢𝘭-𝘴𝘵𝘳𝘢𝘵𝘦𝘨𝘺-𝘢𝘸𝘢𝘳𝘦 probabilistic framework of figurative language. In (RSA)^2, one listener interprets language literally, another interprets it ironically, and so on. We then marginalize over these listeners to produce a single distribution over possible meanings.

26.06.2025 15:52
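As a rough illustration of the marginalization step in the post above, here is a minimal Python sketch. It is a plain mixture under stated assumptions, not the paper's implementation: the two strategy-specific listeners, their probabilities, the fixed strategy prior, and the names `STRATEGY_LISTENERS`, `marginalize`, and `blizzard_prior` are all hypothetical, and the actual (RSA)^2 formulation may weight strategies differently (for example by conditioning on context and utterance).

```python
from typing import Dict

Dist = Dict[str, float]

# Hypothetical toy listeners for one utterance, "The weather is amazing",
# said during a blizzard. Each entry is P(meaning | utterance, strategy).
# The numbers are illustrative only, not taken from the paper.
STRATEGY_LISTENERS: Dict[str, Dist] = {
    "literal": {"weather_good": 0.9, "weather_bad": 0.1},
    "ironic": {"weather_good": 0.1, "weather_bad": 0.9},
}


def marginalize(listeners: Dict[str, Dist], strategy_prior: Dist) -> Dist:
    """Mix strategy-specific listeners: P(m) = sum_r P(r) * P(m | r)."""
    meanings = {m for dist in listeners.values() for m in dist}
    mixed = {m: 0.0 for m in meanings}
    for strategy, weight in strategy_prior.items():
        for meaning, p in listeners[strategy].items():
            mixed[meaning] += weight * p
    # Renormalize in case the prior does not sum exactly to 1.
    total = sum(mixed.values())
    return {m: mixed[m] / total for m in sorted(mixed)}


# Hypothetical assumption: in a blizzard, irony is the more likely strategy.
blizzard_prior = {"literal": 0.2, "ironic": 0.8}
print(marginalize(STRATEGY_LISTENERS, blizzard_prior))
```

Shifting prior weight toward the ironic listener moves probability mass toward the non-literal meaning, which is the intuition behind the blizzard example.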

A blizzard is raging through Montreal when your friend says “Looks like Florida out there!” Humans easily interpret irony, while LLMs struggle with it. We propose a 𝘳𝘩𝘦𝘵𝘰𝘳𝘪𝘤𝘢𝘭-𝘴𝘵𝘳𝘢𝘵𝘦𝘨𝘺-𝘢𝘸𝘢𝘳𝘦 probabilistic framework as a solution.

Paper: arxiv.org/abs/2506.09301 to appear @ #ACL2025 (Main)

26.06.2025 15:52

02 | Gauthier Gidel: Bridging Theory and Deep Learning, Vibes at Mila, and the Effects of AI on Art

Behind the Research of AI · Episode

Started a new podcast with @tomvergara.bsky.social !

Behind the Research of AI:

We look behind the scenes, beyond the polished papers 🧐🧪

If this sounds fun, check out our first "official" episode with the awesome Gauthier Gidel from @mila-quebec.bsky.social:

open.spotify.com/episode/7oTc...

25.06.2025 15:54

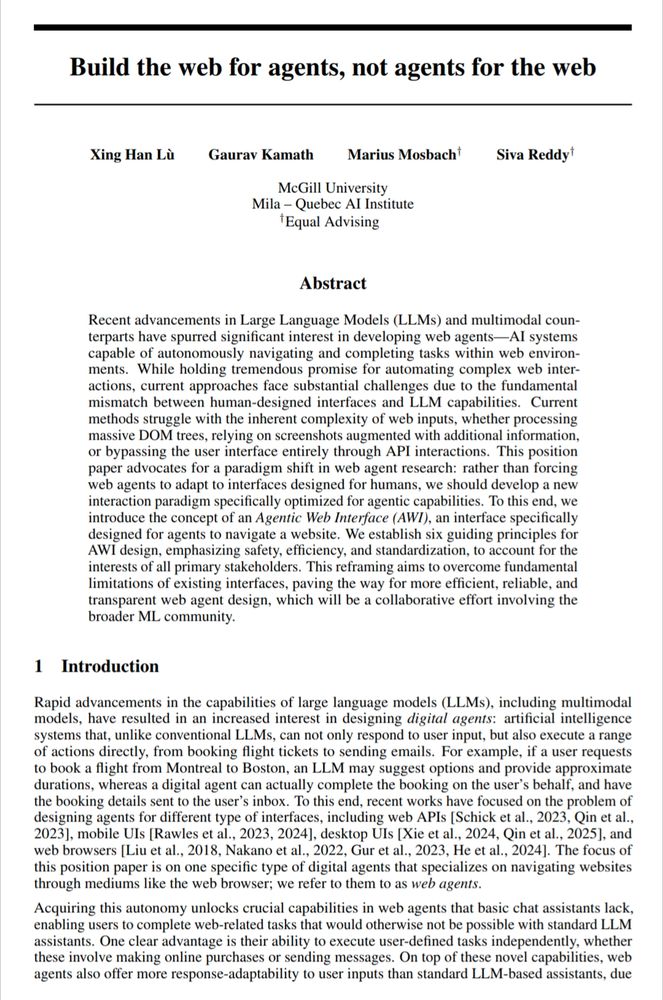

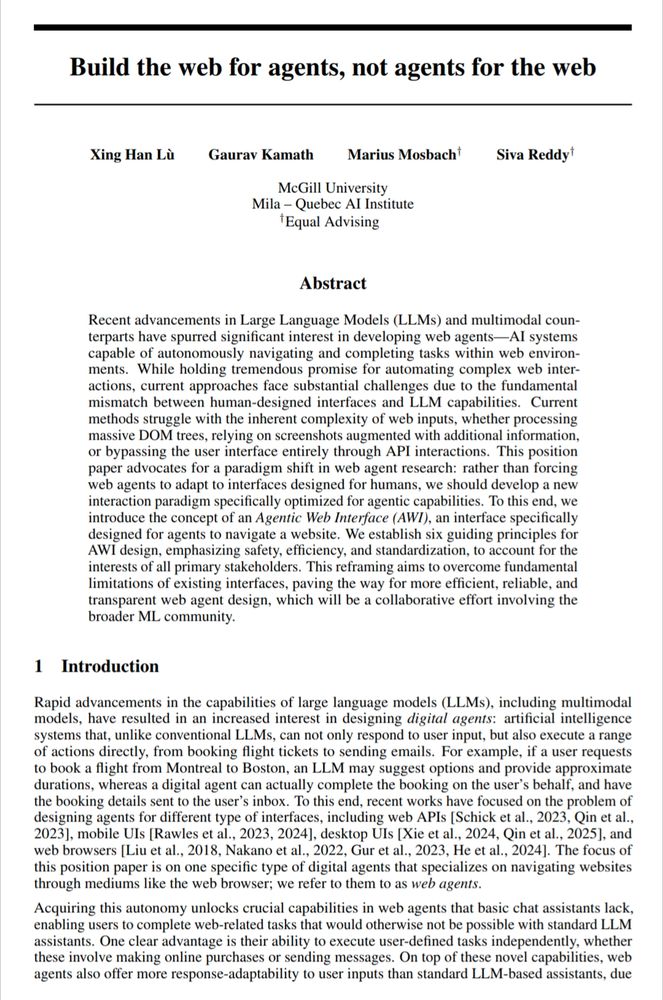

"Build the web for agents, not agents for the web"

This position paper argues that rather than forcing web agents to adapt to UIs designed for humans, we should develop a new interface optimized for web agents, which we call Agentic Web Interface (AWI).

arxiv.org/abs/2506.10953

14.06.2025 04:17

New paper in Interspeech 2025 🚨

@interspeech.bsky.social

A Robust Model for Arabic Dialect Identification using Voice Conversion

Paper 📝 arxiv.org/pdf/2505.24713

Demo 🎙️ https://shorturl.at/rrMm6

#Arabic #SpeechTech #NLProc #AI #Speech #ArabicDialects #Interspeech2025 #ArabicNLP

10.06.2025 10:07

Do LLMs hallucinate randomly? Not quite.

Our #ACL2025 (Main) paper shows that hallucinations under irrelevant contexts follow a systematic failure mode — revealing how LLMs generalize using abstract classes + context cues, albeit unreliably.

📎 Paper: arxiv.org/abs/2505.22630 1/n

06.06.2025 18:09

Congratulations to Mila members @adadtur.bsky.social, Gaurav Kamath and @sivareddyg.bsky.social for their SAC award at NAACL! Check out Ada's talk in Session I: Oral/Poster 6. Paper: arxiv.org/abs/2502.05670

01.05.2025 14:30

Language Models Largely Exhibit Human-like Constituent Ordering Preferences

Though English sentences are typically inflexible vis-à-vis word order, constituents often show far more variability in ordering. One prominent theory presents the notion that constituent ordering is ...

Ada is an undergrad and will soon be looking for PhD positions. Gaurav is a PhD student looking for intellectually stimulating internships/visiting positions. They did most of the work without much of my help. I highly recommend them. Please reach out to them if you have any positions.

01.05.2025 15:14

Great work from labmates on LLMs vs. humans regarding linguistic preferences: you know when a sentence kind of feels off, e.g. "I met at the park the man"? So in what ways do LLMs follow these human intuitions?

01.05.2025 15:04

Exploiting Instruction-Following Retrievers for Malicious Information Retrieval

Parishad BehnamGhader, Nicholas Meade, Siva Reddy

Instruction-following retrievers can efficiently and accurately search for harmful and sensitive information on the internet! 🌐💣

Retrievers need to be aligned too! 🚨🚨🚨

Work done with the wonderful Nick and @sivareddyg.bsky.social

🔗 mcgill-nlp.github.io/malicious-ir/

Thread: 🧵👇

12.03.2025 16:15

How to Get Your LLM to Generate Challenging Problems for Evaluation? 🤔 Check out our CHASE recipe. This is a highly relevant problem, given that most human-curated datasets are crushed within days.

21.02.2025 18:53

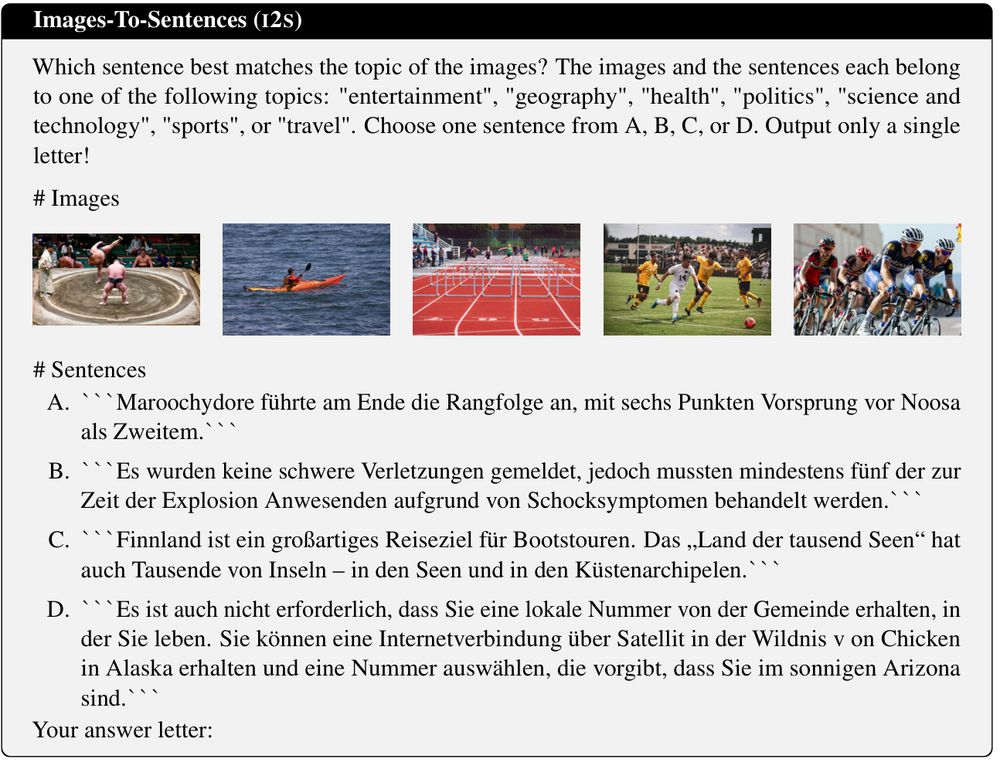

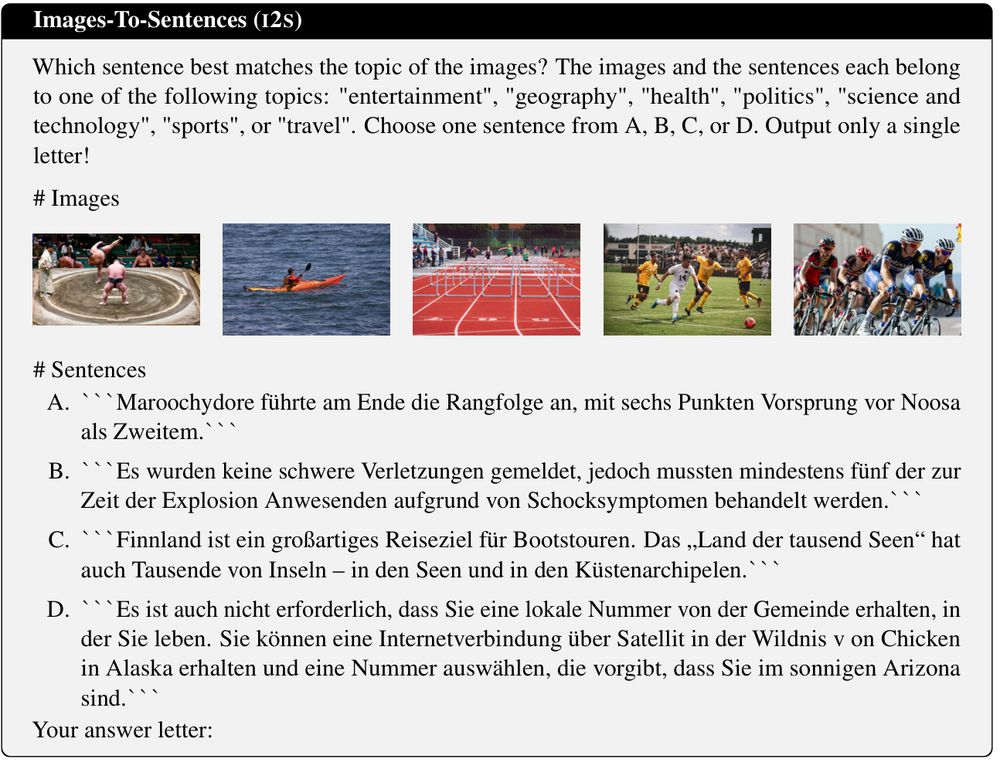

Introducing MVL-SIB, a massively multilingual vision-language benchmark for cross-modal topic matching in 205 languages!

🤔 Tasks: Given images (sentences), select the topically matching sentence (image).

Arxiv: arxiv.org/abs/2502.12852

HF: huggingface.co/datasets/Wue...

Details👇

21.02.2025 07:45

Y’all we won!!!!!!!!! 🇨🇦

21.02.2025 04:32

The submission deadline is in less than a month! We welcome encore submissions, so consider submitting your work regardless of whether it has already been accepted elsewhere #chi2025 😉

22.01.2025 15:32

The image includes a shortened call for participation that reads:

"We welcome participants who work on topics related to supporting human-centered evaluation and auditing of language models. Topics of interest include, but are not limited to:

- Empirical understanding of stakeholders' needs and goals of LLM evaluation and auditing

- Human-centered evaluation and auditing methods for LLMs

- Tools, processes, and guidelines for LLM evaluation and auditing

- Discussion of regulatory measures and public policies for LLM auditing

- Ethics in LLM evaluation and auditing

Special Theme: Mind the Context. We invite authors to engage with specific contexts in LLM evaluation and auditing. This theme could involve various topics: the usage contexts of LLMs, the context of the evaluation/auditing itself, and more! The term 'context' is purposefully left open for interpretation!"

The image also includes pictures of workshop organizers, who are: Yu Lu Liu, Wesley Hanwen Deng, Michelle S. Lam, Motahhare Eslami, Juho Kim, Q. Vera Liao, Wei Xu, Jekaterina Novikova, and Ziang Xiao.

The Human-centered Evaluation and Auditing of Language Models (HEAL) workshop is back for #CHI2025, with this year's special theme: “Mind the Context”! Come join us on this bridge between #HCI and #NLProc!

Workshop submission deadline: Feb 17 AoE

More info at heal-workshop.github.io.

16.12.2024 22:07

It turns out we had even more papers at EMNLP!

Let's complete the list with three more 🧵

24.11.2024 02:17

Our lab members recently presented 3 papers at @emnlpmeeting.bsky.social in Miami ☀️ 📜

From interpretability to bias/fairness and cultural understanding -> 🧵

23.11.2024 20:35

I’m putting together a starter pack for researchers working on human-centered AI evaluation. Reply or DM me if you’d like to be added, or if you have suggestions! Thank you!

(It looks NLP-centric at the moment, but that’s due to the current limits of my own knowledge 🙈)

go.bsky.app/G3w9LpE

21.11.2024 15:56

I didn’t expect to wind up in the news over this, but in hindsight, I guess it makes sense lol.

This is the first time I’ve been in the Herald since high school 😂.

20.11.2024 03:17

MIT // researching fairness, equity, & pluralistic alignment in LLMs

previously @ MIT media lab, mila / mcgill

i like language and dogs and plants and ultimate frisbee and baking and sunsets

https://elinorp-d.github.io

PhD-ing at McGill Linguistics + Mila, working under Prof. Siva Reddy. Mostly computational linguistics, with some NLP; habitually disappointed Arsenal fan

Asst Prof at Johns Hopkins Cognitive Science • Director of the Group for Language and Intelligence (GLINT) ✨• Interested in all things language, cognition, and AI

jennhu.github.io

AI/ML Applied Research Intern at Adobe | NLP-ing (Research Masters) at MILA/McGill

MSc @mila-quebec.bsky.social @mcgill-nlp.bsky.social

Research Fellow @ RBC Borealis

Model analysis, interpretability, reasoning and hallucination

Studying model behaviours to make them better :))

Looking for Fall '26 PhD

PhD candidate at Uni of Würzburg working on multilinguality & multimodality | prev. visited Mila & LTL@UniCambridge

https://fdschmidt93.github.io

Assistant Professor @EmoryCS Department. Alum @Mila/McGill

NLP, AI for Social Good, Interpretability, AI & Society

ali-emami.com

Research scientist at Amazon. I studied at Mila during my Ph.D. and focused on understanding why AI discriminates based on gender and race; and how to measure/fix that.

#NLP Postdoc at Mila - Quebec AI Institute and McGill University | Former PhD @ University of Copenhagen (CopeNLU)

🌐 karstanczak.github.io

PhD candidate at McGill and Mila (Quebec AI Institute) w/ Blake Richards and Doina Precup.

Doing research on AI and Neuroscience 🤖🧠

Based in Montreal. 🇨🇦

CS grad student @ Mila Quebec | Ex-Visiting Researcher @ ServiceNow Research | NLP, GNN, Network Science

she/her

McGillNLP & Mila

'can you be an expat if you were never a pat?' - leo hertzler

occasionally live on ckut 90.3 fm :-)

adadtur.github.io

PhD candidate @Mila and @McGill

Interested in interplay of knowledge and language

Love being outdoors!

PhD Student at MILA/McGill University with Prof. Siva Reddy and Prof. Vered Shwartz. Previously UBC-CS.

Studying societal impacts of AI, alignment and safety.

Based in Montreal🇨🇦

PhD Student at Mila and McGill | Research in ML and NLP | Past: AI2, MSFTResearch

arkilpatel.github.io

#NLP Postdoc at Mila - Quebec AI Institute & McGill University

mariusmosbach.com

https://mcgill-nlp.github.io/people/

Assistant Professor @Mila-Quebec.bsky.social

Co-Director @McGill-NLP.bsky.social

Researcher @ServiceNow.bsky.social

Alumni: @StanfordNLP.bsky.social, EdinburghNLP

Natural Language Processor #NLProc

PhD student at Johns Hopkins University

Alum of McGill University & MILA

Working on NLP Evaluation, Responsible AI, Human-AI interaction

she/her 🇨🇦