Thanks Brent; I liked yours too!

25.01.2026 16:52 — 👍 2 🔁 0 💬 0 📌 0

Drew Bailey

@drewhalbailey.bsky.social

education, developmental psychology, research methods at UC Irvine

Replying to @bwroberts.bsky.social on cross-lagged panel models

open.substack.com/pub/drewhalb...

Person at talk translating all model descriptions into formal equations is the academic talk version of the person filling out the scorecard at a baseball game.

21.01.2026 19:16 — 👍 2 🔁 0 💬 0 📌 0

Named for Patterson Hood? Same hair I guess.

01.01.2026 01:55 — 👍 1 🔁 0 💬 1 📌 0

This fetid landmark, this historical stain on humanity is primarily due to the stunningly reckless obliteration of America's foreign assistance agency earlier this year.

Led by the richest man on earth. In secret, on a weekend. With zero analysis or discussion of its catastrophic impacts.

Thanks, Ruben.

03.12.2025 23:19 — 👍 0 🔁 0 💬 0 📌 0

Fig. 3. Effects of the intervention. In (a) are shown effects 7 months after the intervention; in (b) are shown interaction effects 7 months after the intervention. Models show the effects of the intervention on expressive-language skills in the grade 1 posttest, with an interaction between expressive-language skills in the pretest and after the intervention. Standardized coefficients (with 95% confidence intervals) are shown, except for the intervention dummy variable and the interaction where y-standardized values are indicated. Rectangles and circles contain observed variables and latent variables, respectively. Solid arrows point to significant regression or factor loadings (arrows from latent variables to their observed indicators); the arrow with dotted lines shows nonsignificant regression. The interaction (Fig. 3b) is illustrated by the arrow from the black circle to expressive language in the posttest. **p < .01.

Fig. 4. Long-term effects of the intervention on expressive-language skills. Model showing the effect of the intervention on expressive-language skills in the grade 4 posttest. Standardized coefficients (with 95% CIs) are shown, except for the intervention dummy variable where y-standardized values (equal to Cohen’s d) are indicated. Rectangles and circles contain observed variables and latent variables, respectively. Solid arrows point to significant regression or factor loadings (arrows from latent variables to their observed indicators); arrows with dotted lines show nonsignificant regression. **p < .01.

Fadeout of cognitive training remains one of the more replicable findings in psychology in this preregistered study of 300 preschool children. Well-done study with a 4-year follow-up. The language gains either faded or the control group caught up.

journals.sagepub.com/doi/full/10....

Totally! Many hot topics should be hot!

17.11.2025 17:06 — 👍 1 🔁 0 💬 0 📌 0

I think the “why most published research findings are false” paper suggests this as one of the heuristics for identifying “false” findings.

17.11.2025 00:33 — 👍 1 🔁 0 💬 1 📌 0

So hard! When there is no cross-lagged effect in this data-generating model, RI-CLPM estimates one, but when there *is* one, the ARTS model doesn't! Not sure the paper bears much on whether cross-lagged effects are rare, but def on our ability to use these models without external info.

03.10.2025 21:42 — 👍 0 🔁 0 💬 0 📌 0

Interested in models used to estimate lagged effects in panel data? We (@rebiweidmann.bsky.social, Hyewon Yang) have a new paper looking at patterns of stability and their implications for bias and model choice: osf.io/preprints/ps... [1/x]

19.09.2025 13:22 — 👍 25 🔁 12 💬 1 📌 4

I really like this paper dealing with the problem of “mischievous” responding in longitudinal panel data, by @joecimpian.bsky.social

journals.sagepub.com/doi/full/10....

Dag makhani: Causal inference and Indian cuisine

25.08.2025 17:43 — 👍 2 🔁 0 💬 0 📌 0

Collider effect in the real world!

25.08.2025 17:06 — 👍 2 🔁 0 💬 1 📌 0

Like, the effect of dropping a bouncing ball on the velocity of the ball over time is a weird oscillating function?

19.08.2025 15:55 — 👍 1 🔁 0 💬 0 📌 0

About 2/3 of the posts on this platform linking to the recent NYT article on null findings from Baby’s First Years have this reaction. You can search the headline and verify yourself!

www.nytimes.com/2025/07/28/u...

I used Paige’s first book in a class of students with a wide range of previous exposure to and attitudes about behavior genetics, and they all seemed to find it very interesting. Will probably try this one too!

09.08.2025 22:01 — 👍 0 🔁 0 💬 0 📌 0

29.07.2025 02:07 — 👍 1 🔁 0 💬 0 📌 0

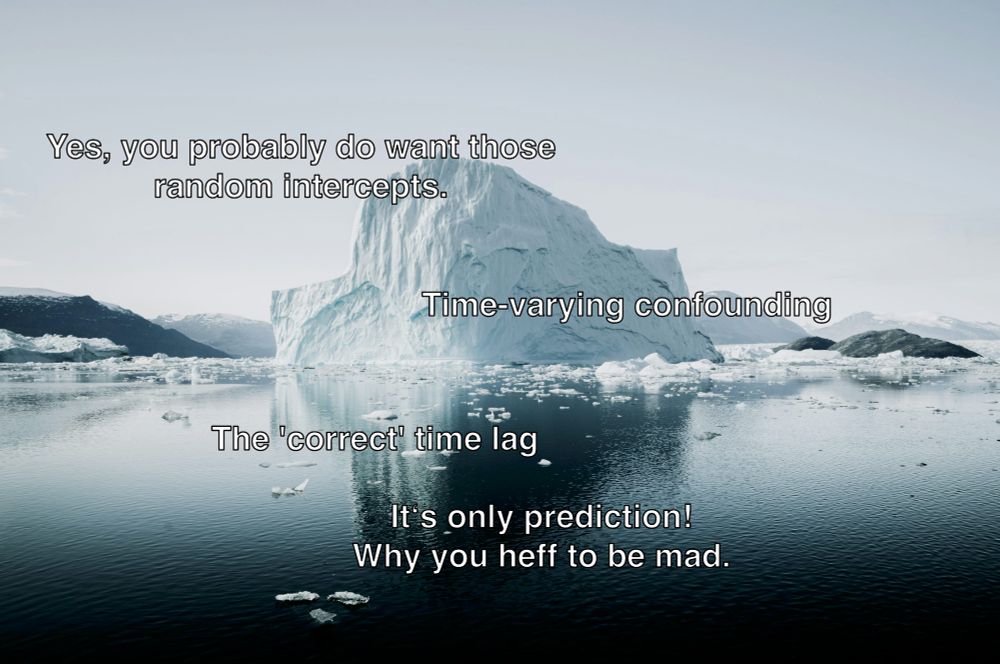

Random Intercepts and Slopes in Longitudinal Models: When Are They "Good" and "Bad" Controls?

or

Illusory Traits 2: Revenge of the Slopes

Led by Siling Guo, with Nicolas Hübner, Steffen Zitzmann, Martin Hecht, and Kou Murayama.

Comments welcome!

osf.io/preprints/ps...

New blog post! Let's say you've measured two variables repeatedly and want to investigate how one affects the other over time. Here are some recommendations for how to do that well.

www.the100.ci/2025/06/25/r...

Although field-specific authorship norms probably mostly just reflect the values of people in the field, I also think they can affect those values too. This seems like a good example! (I have some guesses about unintended consequences of tiny authorship teams too, btw.)

23.06.2025 14:14 — 👍 1 🔁 0 💬 0 📌 0

6) LCGAs never replicate across datasets or in the same dataset. They usually just produce the salsa pattern (Hi/med/low) or the cat's cradle (Hi/low/increasing/decreasing).

This has misled entire fields (see all of George Bonanno's work on resilience, for example).

psycnet.apa.org/fulltext/201...

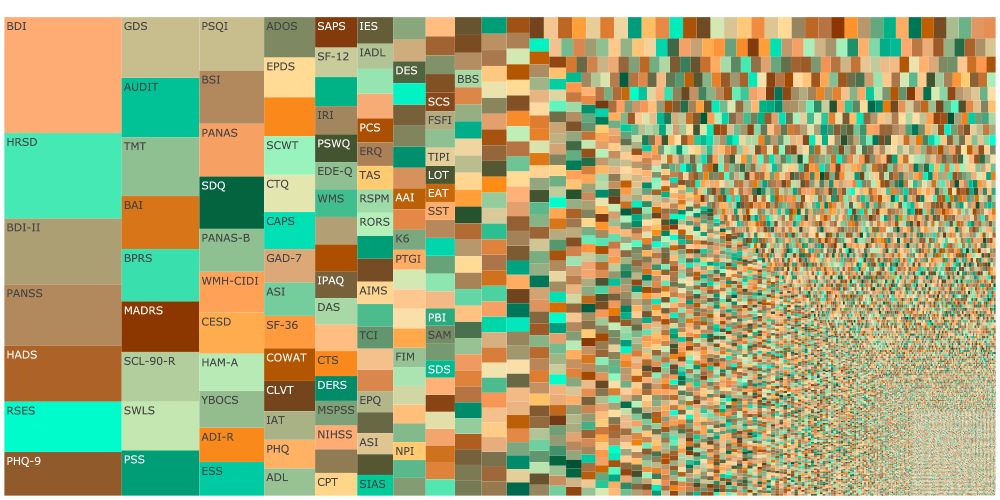

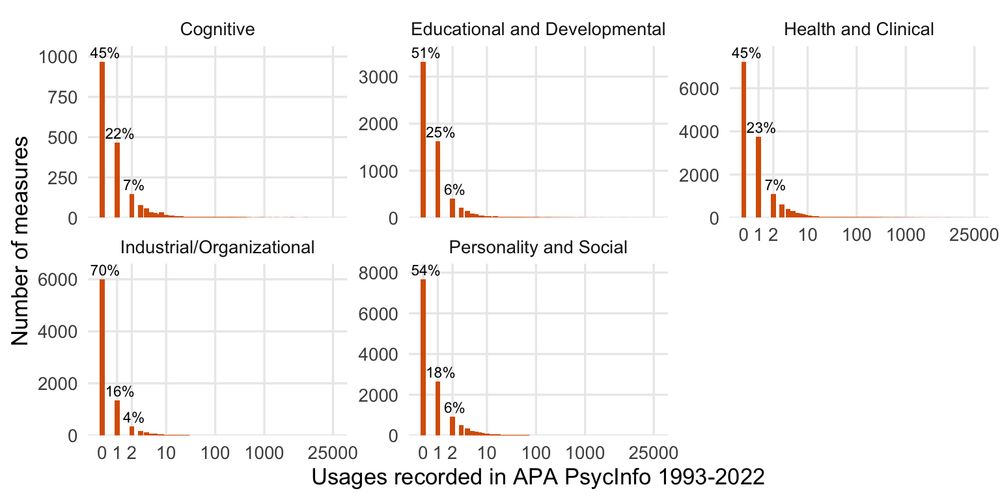

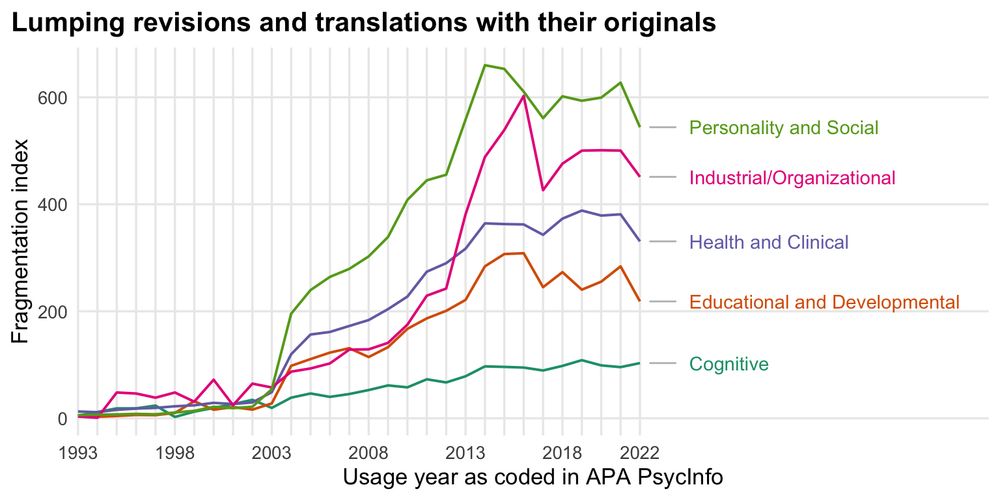

Treemap showing measurement fragmentation across subfields in psychology. Hill-Shannon Diversity 𝐷=1626.05

How often measures in the APA PsycTESTS database are (re)used according to the APA PsycInfo database: rarely, the majority are never reused.

Our fragmentation index (Hill-Shannon diversity) over time across subdisciplines shows fragmentation rising.

Our paper "A fragmented field" has just been accepted at AMPPS. We find it's not just you, psychology is really getting more confusing (construct and measure fragmentation is rising).

We updated the preprint with the (substantial) revision, please check it out.

osf.io/preprints/ps...
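For readers unfamiliar with the fragmentation index named above: Hill-Shannon diversity is conventionally defined as the exponential of Shannon entropy, which can be read as the "effective number" of equally used measures. The sketch below is only an illustration of that standard definition. The function name and the usage counts are hypothetical, and the paper's actual computation is not shown in these posts.

```python
import math


def hill_shannon(counts):
    """Hill-Shannon diversity: exp of the Shannon entropy of usage shares.

    `counts` is a hypothetical list of how often each measure was used.
    """
    total = sum(counts)
    if total <= 0:
        raise ValueError("counts must sum to a positive number")
    # Shannon entropy over usage shares; unused measures contribute nothing.
    entropy = -sum((c / total) * math.log(c / total) for c in counts if c > 0)
    return math.exp(entropy)


# Made-up counts: four measures used equally often vs. one dominant measure.
evenly_used = hill_shannon([5, 5, 5, 5])
dominated = hill_shannon([97, 1, 1, 1])
print(evenly_used, dominated)
```

Under this definition, a field where every study uses the same measure scores 1, and the score rises as usage spreads evenly across more measures.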

But I really hope we get 10 more years of strong studies now on the effects of large increases in access on outcomes for "always takers" and especially for elite students. There are lots of good reasons to expect these effects should differ. (2/2)

11.06.2025 20:35 — 👍 1 🔁 0 💬 0 📌 0

I have seen lots of higher ed talks and papers in the last 10 years convincingly demonstrating that just making some cutoff (getting into a more selective college or major, not taking remedial classes) helps the marginal student. Great to see an emerging consensus. (1/2)

11.06.2025 20:35 — 👍 0 🔁 0 💬 1 📌 0

For every cause, x, there is some group of people (often disproportionately people who study x) who think the effects of x are way bigger than they are. Therefore, I think we are doomed to read (or worse, make) "Yeah, but the effect of x is small" takes forever.

11.06.2025 20:24 — 👍 0 🔁 0 💬 0 📌 0

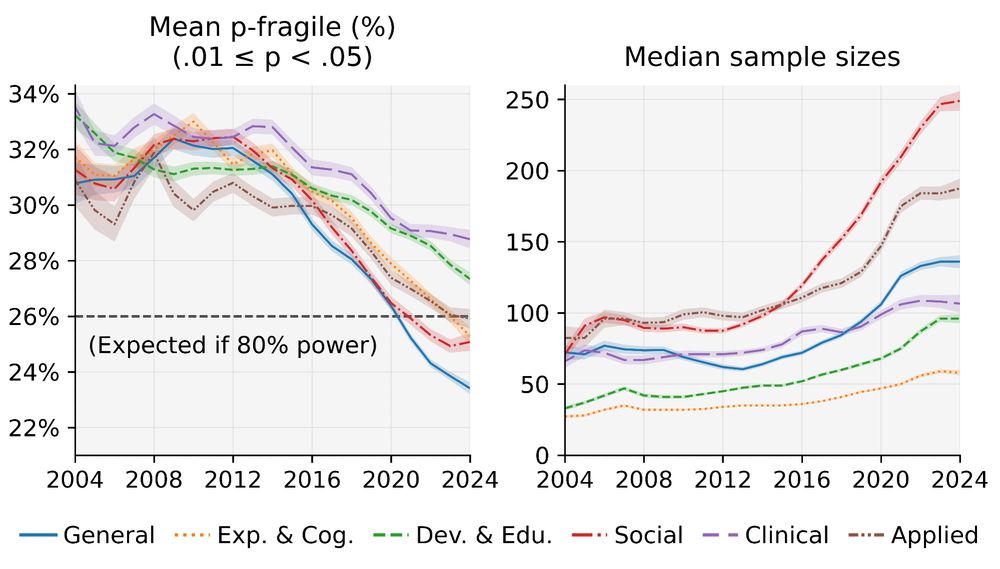

Mix of Figures 2 and 4 from the paper

I investigated how often papers' significant (p < .05) results are fragile (.01 ≤ p < .05) p-values. An excess of such p-values suggests low odds of replicability.

From 2004-2024, the rates of fragile p-values have gone down precipitously across every psychology discipline (!)
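The fragile-share idea described above (the fraction of significant results with .01 ≤ p < .05) can be sketched in a few lines. This is a minimal illustration using the thresholds stated in the post. The function name and the p-values are made up for the example and are not taken from the paper.

```python
def fragile_share(p_values, alpha=0.05, fragile_floor=0.01):
    """Share of significant p-values (p < alpha) that are fragile.

    A p-value counts as fragile when fragile_floor <= p < alpha.
    Returns None when there are no significant results to divide by.
    """
    significant = [p for p in p_values if p < alpha]
    if not significant:
        return None
    fragile = [p for p in significant if p >= fragile_floor]
    return len(fragile) / len(significant)


# Hypothetical p-values from one paper: five significant, three of them fragile.
example = [0.001, 0.004, 0.012, 0.03, 0.049, 0.2]
print(fragile_share(example))
```

Nonsignificant results (here, 0.2) are excluded from the denominator, so the share describes only the results a paper reports as significant.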

Hope to see at least one of these in each APS policy brief from now on!

15.05.2025 19:57 — 👍 1 🔁 0 💬 0 📌 0

IN MEMORY OF LYNN FUCHS

The field of special education lost a visionary and beloved leader with the passing of Lynn Fuchs on May 7, 2025. Her absence leaves a profound void—not only in our scholarly community, but in the hearts of all who had the privilege of knowing her.

Really like it!

12.05.2025 03:43 — 👍 0 🔁 0 💬 0 📌 0