Gemini changelog from 13.03 was updated:

- Update to Gemini 2.0 Flash Thinking (experimental) in Gemini

- Deep Research is now powered by Gemini 2.0 Flash Thinking (experimental) and available to more Gemini users at no cost

Claude 3.7 Sonnet evals are here too 👀

A huge jump on SWE-bench 🔥

Claude 3.7 Sonnet

24.02.2025 18:57 — 👍 36 🔁 14 💬 2 📌 2

Claude 3.7 Sonnet is also available to free users!

It seems, though, that you will only be able to try the thinking experience once.

Forget “tapestry” or “delve”: these are the actual unique giveaway words for each model, relative to each other. arxiv.org/pdf/2502.12150

19.02.2025 03:04 — 👍 103 🔁 17 💬 6 📌 9

Thoughts on Sam's post:

1) It echoes the story from multiple labs about their confidence in scaling up to AGI fast (but you don't have to believe them)

2) There is no clear vision of what that world looks like

3) The labs are placing the burden on policymakers to decide what to do with what they make

Making LLMs run efficiently can feel scary, but scaling isn’t magic, it’s math! We wanted to demystify the “systems view” of LLMs and wrote a little textbook called “How To Scale Your Model” which we’re releasing today. 1/n

04.02.2025 18:54 — 👍 95 🔁 28 💬 3 📌 8

Training our most capable Gemini models relies heavily on our JAX software stack and Google's TPU hardware platforms.

If you want to learn more, see this awesome book "How to Scale Your Model":

jax-ml.github.io/scaling-book/

Put together by several of my Google DeepMind colleagues listed below 🎉.

o3-mini is really good at writing internal documentation: feed it a codebase and get back a detailed explanation of how specific aspects of it work. simonwillison.net/2025/Feb/5/o...

05.02.2025 06:09 — 👍 183 🔁 16 💬 6 📌 2
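Feeding a codebase to a model mostly means packing the relevant files into one prompt. Below is a minimal sketch, assuming plain concatenation with a path header per file is good enough; `codebase_to_prompt` is a hypothetical helper, not a tool from the linked post, and it ignores context-window limits.

```python
from pathlib import Path
from typing import Iterable


def codebase_to_prompt(root: str, suffixes: Iterable[str] = (".py",), question: str = "") -> str:
    """Concatenate matching source files under `root` into one prompt string.

    Hypothetical helper: each file is preceded by a '--- relative/path ---' header,
    and the optional question is appended at the end.
    """
    root_path = Path(root)
    wanted = set(suffixes)
    sections = []
    # Sort so the prompt is deterministic across runs and platforms.
    for path in sorted(root_path.rglob("*")):
        if path.is_file() and path.suffix in wanted:
            rel = path.relative_to(root_path).as_posix()
            sections.append(f"--- {rel} ---\n{path.read_text(encoding='utf-8')}")
    if question:
        sections.append(question)
    return "\n\n".join(sections)
```

The resulting string would then be sent to whichever model you use, with a question such as "Explain how the caching layer works" at the end.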

Open Thoughts project

They are building the best reasoning datasets out in the open.

Building on their work with Stratos, today they are releasing OpenThoughts-114k and OpenThinker-7B.

Repo: github.com/open-thought...

Together.ai's Agent Recipes

Agent Recipes: common agent recipes with ready-to-copy code to improve your LLM applications. These recipes are largely inspired by Anthropic's article.

www.agentrecipes.com

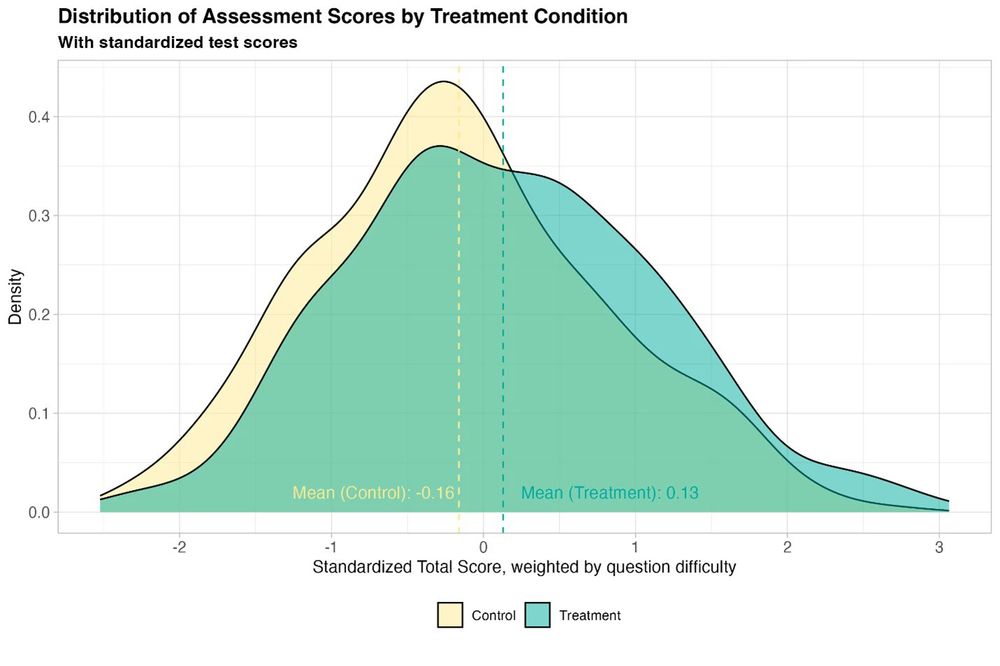

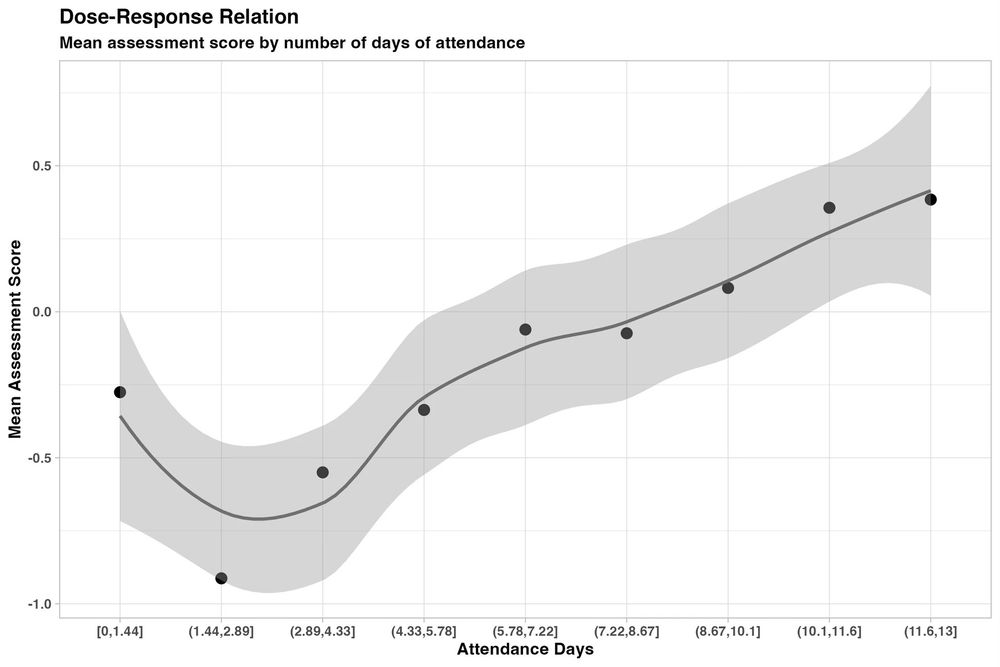
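Prompt chaining is one of the simplest agent patterns: each step's output becomes the next step's input. A minimal sketch, assuming a hypothetical `llm` callable (prompt in, text out) that you would back with a real model client; this is not code taken from the Agent Recipes site.

```python
from typing import Callable, Sequence

# Hypothetical signature: any function that sends a prompt to a model and returns its text.
LLM = Callable[[str], str]


def prompt_chain(llm: LLM, steps: Sequence[str], initial_input: str) -> str:
    """Run each instruction in order, feeding the previous output in as the input."""
    result = initial_input
    for instruction in steps:
        prompt = f"{instruction}\n\nInput:\n{result}"
        result = llm(prompt)
    return result
```

For testing, a stub function that, say, uppercases or reverses the text after `Input:` can stand in for the model; real chains often add programmatic checks between steps.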

New randomized, controlled trial by the World Bank of students using GPT-4 as a tutor in Nigeria. Six weeks of after-school AI tutoring = 2 years of typical learning gains, outperforming 80% of other educational interventions.

And it helped all students, especially girls who were initially behind.

✨ Bluesky search tips and tricks

Add `from:me` to find your own posts

Add `to:me` to find replies/posts that mention you

Add `since:YYYY-MM-DD` and/or `until:YYYY-MM-DD` to specify a date range

Add `domain:theonion.com` to find posts linking to The Onion

See more at

bsky.social/about/blog/0...
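The operators compose into a single query string. A small sketch, assuming they behave as listed above; `bluesky_query` and `bluesky_search_url` are hypothetical helpers, and the `https://bsky.app/search?q=` URL format is an assumption about the web app.

```python
from datetime import date
from typing import Optional
from urllib.parse import quote


def bluesky_query(text: str = "", author: Optional[str] = None, mentions: Optional[str] = None,
                  since: Optional[date] = None, until: Optional[date] = None,
                  domain: Optional[str] = None) -> str:
    """Combine free text with the from:/to:/since:/until:/domain: operators."""
    parts = [text] if text else []
    if author:
        parts.append(f"from:{author}")
    if mentions:
        parts.append(f"to:{mentions}")
    if since:
        parts.append(f"since:{since.isoformat()}")  # YYYY-MM-DD
    if until:
        parts.append(f"until:{until.isoformat()}")
    if domain:
        parts.append(f"domain:{domain}")
    return " ".join(parts)


def bluesky_search_url(query: str) -> str:
    # Assumption: the web app reads the search query from a `q` parameter.
    return "https://bsky.app/search?q=" + quote(query)
```

For example, `bluesky_query("llm", author="me", since=date(2025, 1, 1), until=date(2025, 2, 1))` builds `llm from:me since:2025-01-01 until:2025-02-01`.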

Tensor Product Attention (TPA) is a novel attention mechanism that uses tensor decompositions to represent queries, keys, and values compactly, significantly shrinking the KV cache at inference time.

- Better memory efficiency and improved performance.

- Perfect for handling longer context windows
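A minimal NumPy sketch of where the savings come from, assuming the rank-R factorization described in the TPA paper: each token's (heads × head_dim) key or value matrix is a scaled sum of R outer products of a head-factor vector and a feature-factor vector, so only the factors need caching. Function names, shapes, and the shared rank for K and V are illustrative; learned projections and RoPE handling are omitted.

```python
from typing import Optional

import numpy as np


def tpa_reconstruct(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Rebuild one token's (h, d_h) matrix from rank-R factors.

    a: (R, h) head factors; b: (R, d_h) feature factors.
    Returns (1/R) * sum_r outer(a[r], b[r]).
    """
    rank = a.shape[0]
    return np.einsum("rh,rd->hd", a, b) / rank


def cache_floats_per_token(h: int, d_h: int, rank: Optional[int] = None) -> int:
    """Floats cached per token for K and V together."""
    if rank is None:
        return 2 * h * d_h  # standard multi-head attention: full K and V
    return 2 * rank * (h + d_h)  # factorized: only the a and b factors for K and V
```

With h = 32 heads and d_h = 128, full K and V take 8,192 floats per token, while rank-2 factors take 640, about 12.8x less.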

Anthropic is one of the first frontier AI labs to receive ISO/IEC 42001 certification

14.01.2025 09:14 — 👍 1 🔁 1 💬 1 📌 0

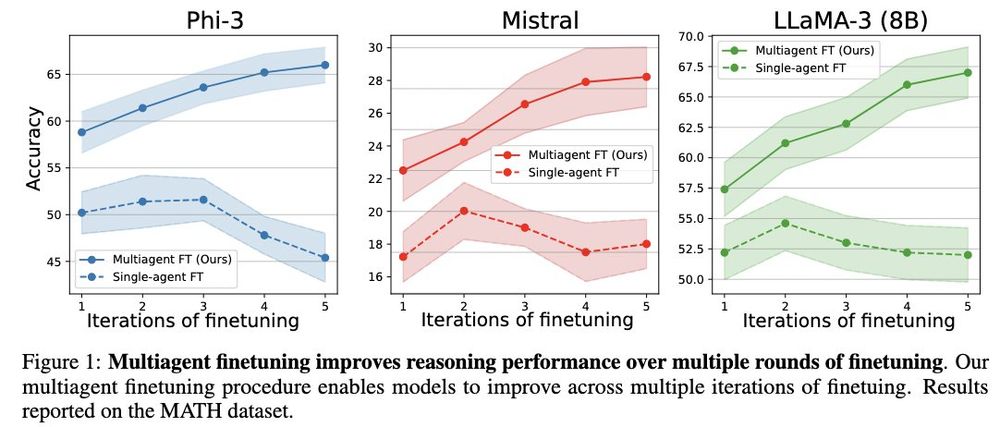

Multiagent Finetuning: Self Improvement with Diverse Reasoning Chains

Instead of self-improving a single LLM, they self-improve a population of LLMs initialized from a base model. This enables consistent self-improvement over multiple rounds.

Project: llm-multiagent-ft.github.io

Trying a little experiment: "Hey, Claude, turn the first four stanzas of The Lady of Shalott into a film by describing 8 second clips..."

I pasted those into Veo 2 verbatim and overlaid the poem for reference (I also asked for one additional scene revealing the Lady, again using the verbatim prompt)

It seems like Google is working on Interactive Mindmaps for NotebookLM 👀

"Generate an interactive mind map for all the sources in the notebook"

What does it mean to "use" test-time compute wisely? How to train to do so? How to measure that scaling it is useful?

"Optimizing LLM Test-Time Compute Involves Solving a Meta-RL Problem"

blog.ml.cmu.edu/2025/01/08/o...

I have a draft of my introduction to cooperative multi-agent reinforcement learning on arxiv. Check it out and let me know any feedback you have. The plan is to polish and extend the material into a more comprehensive text with Frans Oliehoek.

arxiv.org/abs/2405.06161

Simulated AI hospital where “doctor” agents work w/ simulated “patients” & improve: “After treating around ten thousand patients, the evolved doctor agent achieves a state-of-the-art accuracy of 93.06% on a subset of the MedQA dataset that covers major respiratory diseases” arxiv.org/abs/2405.02957

08.01.2025 03:16 — 👍 88 🔁 12 💬 5 📌 2

Hi folks! I'm excited to be on Bluesky! I'm looking forward to posting about computer science research, ML, scientific advances, tasty food, nature, and making groan-worthy puns.

05.01.2025 21:48 — 👍 475 🔁 42 💬 45 📌 5

Cursor can now "apply" at 5k tokens per second 🤯

How soon will we be getting isomorphic code that changes as you speak? 👀

Title card: Alignment Faking in Large Language Models by Greenblatt et al.

New work from my team at Anthropic in collaboration with Redwood Research. I think this is plausibly the most important AGI safety result of the year. Cross-posting the thread below:

18.12.2024 17:46 — 👍 126 🔁 29 💬 5 📌 11

Anthropic API updates - now generally available:

- Models API (query models, validate IDs, resolve aliases)

- Message Batches API (process large message batches at 50% of standard cost)

- Token Counting API (calculate token usage before sending messages)
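The 50% batch discount is simple arithmetic once you know the token counts (for example, from token counting before sending). A minimal sketch, assuming you supply per-million-token prices yourself; the numbers in the example are placeholders, so check the current price list, and `estimate_cost` is a hypothetical helper, not part of Anthropic's SDK.

```python
BATCH_DISCOUNT = 0.5  # Message Batches are billed at 50% of standard cost


def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_mtok: float, output_price_per_mtok: float,
                  batch: bool = False) -> float:
    """Estimated cost in the prices' currency; prices are per million tokens."""
    cost = (input_tokens * input_price_per_mtok
            + output_tokens * output_price_per_mtok) / 1_000_000
    return cost * BATCH_DISCOUNT if batch else cost
```

With placeholder prices of 3.0 (input) and 15.0 (output) per million tokens, 2M input and 0.5M output tokens cost 13.5 at standard rates and 6.75 as a batch.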

Here is a preview of the ChatGPT tasks and automations ("jawbone" - work-in-progress)

17.12.2024 23:21 — 👍 2 🔁 1 💬 1 📌 2

ChatGPT Search Day

16.12.2024 17:52 — 👍 3 🔁 1 💬 0 📌 0

It’s official: F.02 humanoid robots have arrived at our commercial customer

The robots are connecting to the network and performing pre-checks this morning

Our time from filing the C-Corp to shipping commercially was 31 months

Microsoft just released a tool that lets you convert Office files to Markdown. Never thought I'd see the day.

Google also added Markdown export to Google Docs a few months ago.

github.com/microsoft/markitdown