Excited to be in Vienna for #ACL2025 🇦🇹! You'll find @dziadzio.bsky.social and me by our ONEBench poster, so do drop by!

🗓️Wed, July 30, 11:00-12:30 CEST

📍Hall 4/5

I’m also excited to talk about lifelong and personalised benchmarking, data curation and vision-language in general! Let’s connect!

27.07.2025 22:26 — 👍 3 🔁 1 💬 0 📌 0

Stumbled upon this blog post recently and found some very useful tips for improving the Bluesky experience. It seemed almost tailored to me: I don't live in the USA, and the politics there don't affect me personally. Settings -> Moderation -> Muted Words & Tags cleaned up my feed. Strongly recommend!

25.06.2025 16:14 — 👍 11 🔁 1 💬 0 📌 0

Why More Researchers Should be Content Creators

Just trying something new! I recorded one of my recent talks, sharing what I learned from starting as a small content creator.

youtu.be/0W_7tJtGcMI

We all benefit when there are more content creators!

24.06.2025 21:58 — 👍 7 🔁 1 💬 1 📌 0

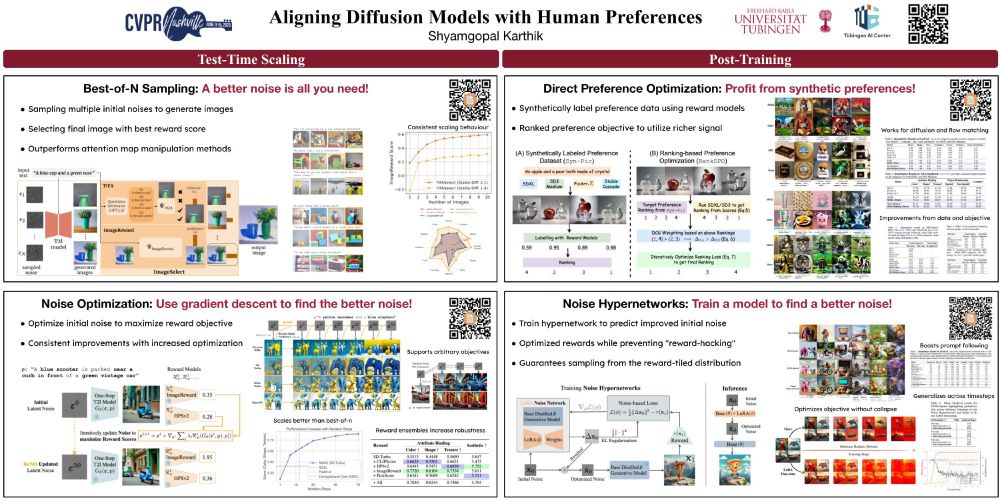

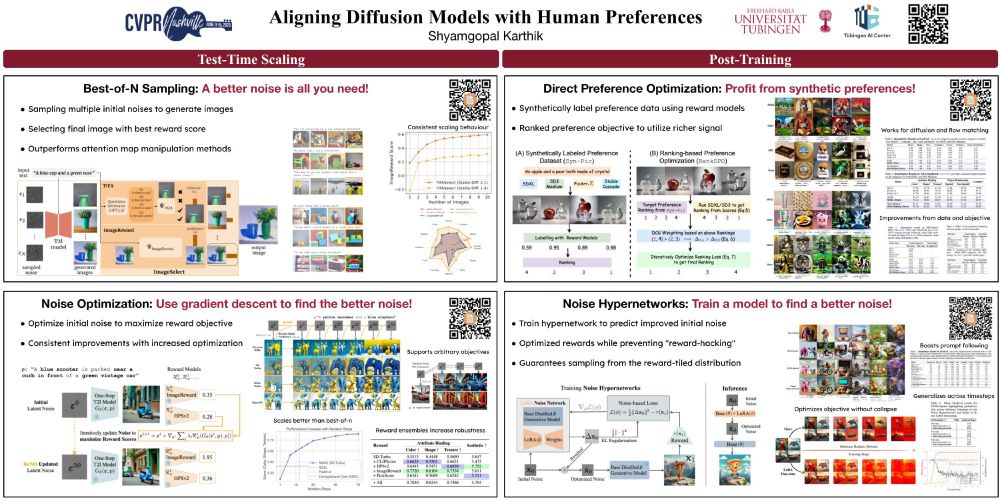

I'm in Nashville this week attending #CVPR2025. Excited to discuss post-training VLMs and diffusion models!

11.06.2025 03:04 — 👍 10 🔁 1 💬 0 📌 0

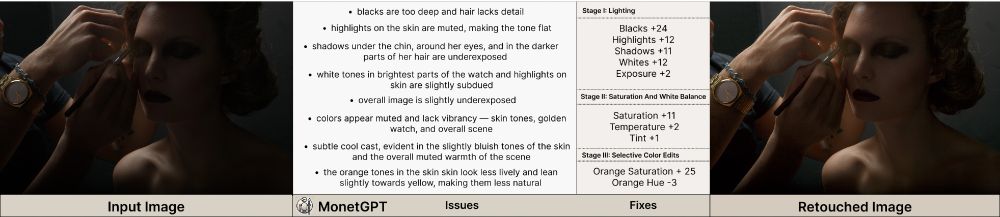

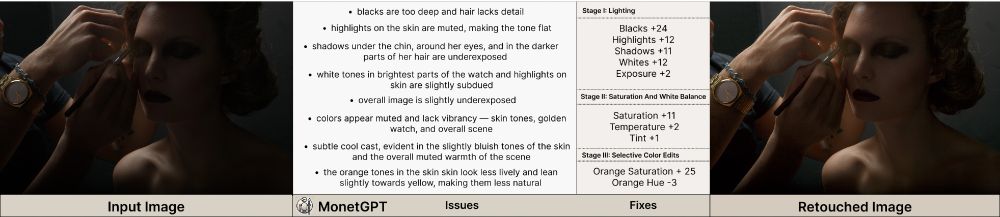

🧵1/10 Excited to share our #SIGGRAPH paper "MonetGPT: Solving Puzzles Enhances MLLMs' Image Retouching Skills" 🌟

We explore how to make MLLMs operation-aware by solving visual puzzles, and we propose a procedural framework for image retouching.

#MLLM

27.05.2025 15:13 — 👍 3 🔁 2 💬 1 📌 0

🏆ONEBench accepted to ACL main! ✨

Stay tuned for the official leaderboard and real-time personalised benchmarking release!

If you’re attending ACL or are generally interested in the future of foundation model benchmarking, happy to talk!

#ACL2025NLP #ACL2025

@aclmeeting.bsky.social

17.05.2025 19:52 — 👍 7 🔁 2 💬 0 📌 0

🧠 Keeping LLMs factually up to date is a common motivation for knowledge editing.

But what would it actually take to support this in practice at the scale and speed the real world demands?

We explore this question and really push the limits of lifelong knowledge editing in the wild.

👇

08.04.2025 15:31 — 👍 29 🔁 8 💬 1 📌 4

Check out our newest paper!

As always, it was super fun working on this with @prasannamayil.bsky.social

18.02.2025 14:12 — 👍 5 🔁 1 💬 0 📌 0

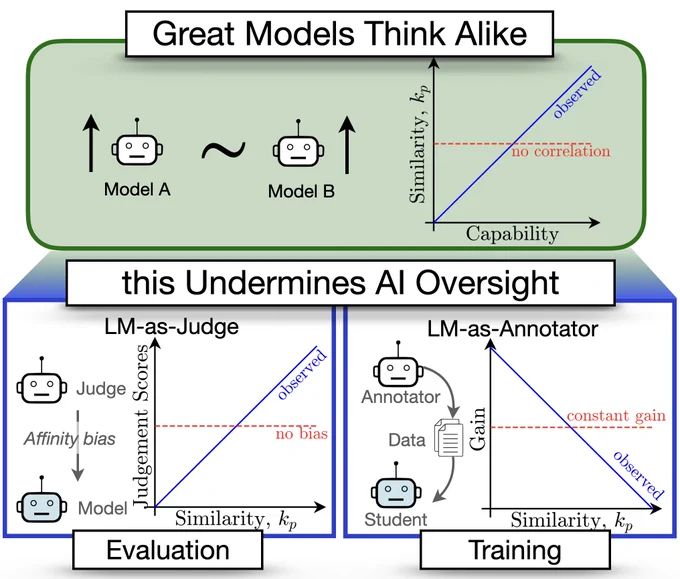

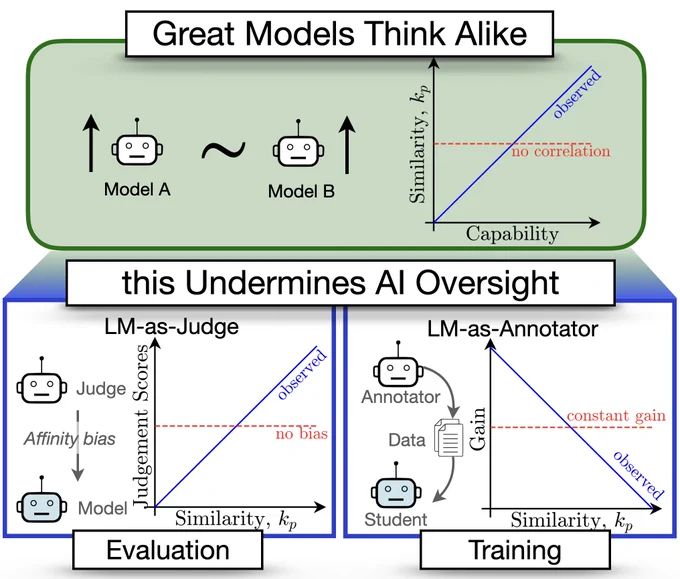

🚨Great Models Think Alike and this Undermines AI Oversight🚨

New paper quantifies LM similarity

(1) LLM-as-a-judge favors more similar models🤥

(2) Complementary knowledge benefits Weak-to-Strong Generalization☯️

(3) More capable models have more correlated failures 📈🙀

🧵👇

07.02.2025 21:12 — 👍 19 🔁 9 💬 2 📌 1

Godsend

07.02.2025 16:38 — 👍 3 🔁 0 💬 0 📌 0

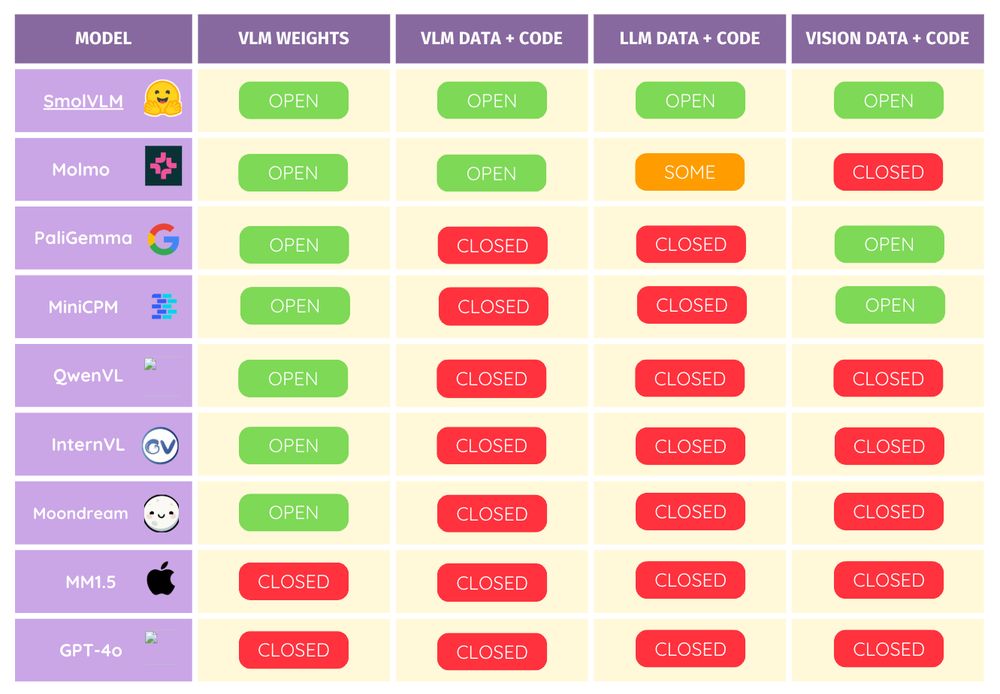

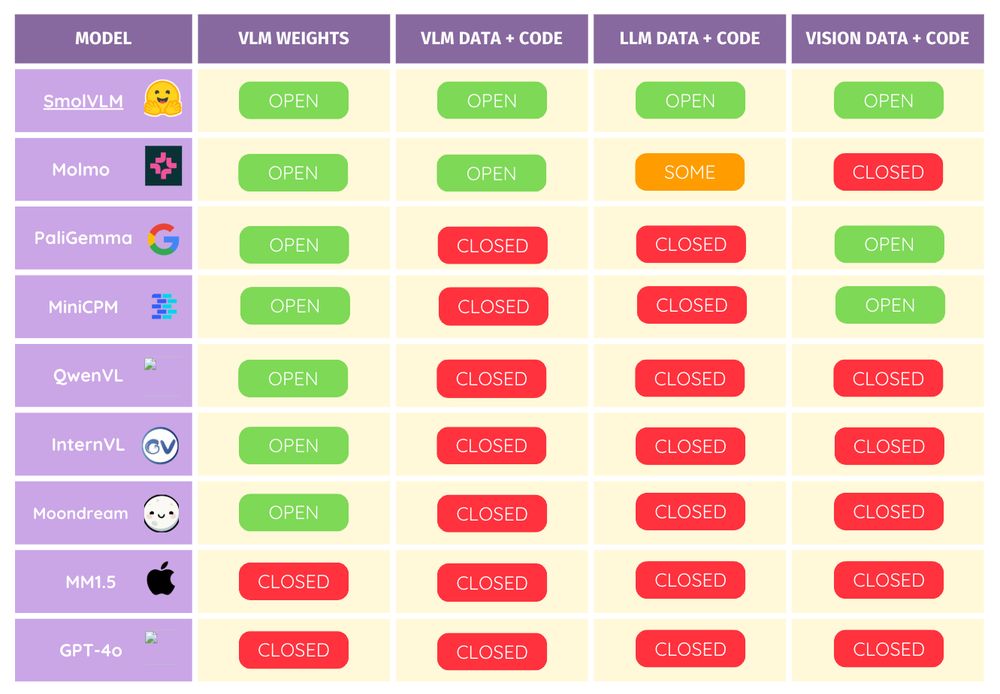

Fuck it, today we're open-sourcing the codebase used to train SmolVLM from scratch on 256 H100s 🔥

Inspired by our team's effort to open-source DeepSeek's R1, we are releasing the training and evaluation code on top of the weights 🫡

Now you can train any SmolVLM—or create your own custom VLMs!

31.01.2025 15:06 — 👍 24 🔁 5 💬 2 📌 0

Added you!

27.01.2025 11:38 — 👍 1 🔁 0 💬 0 📌 0

NLI Improves Compositionality in Vision-Language Models is accepted to #ICLR2025!

CECE enables interpretability and achieves significant improvements without fine-tuning on hard compositional benchmarks (e.g., Winoground, EqBen) and alignment benchmarks (e.g., DrawBench, EditBench). More info: cece-vlm.github.io

23.01.2025 18:34 — 👍 13 🔁 2 💬 1 📌 1

I feel like my “following” and “popular with friends” feeds are well tuned, as I have complete control over them. It's just that people are still posting less on Bluesky and are more active on Twitter. Once that changes (and I think it will), we’ll have the same experience as on Twitter right now.

12.01.2025 23:34 — 👍 2 🔁 0 💬 0 📌 0

How to Merge Your Multimodal Models Over Time?

Model merging combines multiple expert models - finetuned from a base foundation model on diverse tasks and domains - into a single, more capable model. However, most existing model merging approaches...

📄 New Paper: "How to Merge Your Multimodal Models Over Time?"

arxiv.org/abs/2412.06712

Model merging assumes all finetuned models are available at once. But what if they need to be created over time?

We study Temporal Model Merging through the TIME framework to find out!
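The simplest model-merging baseline is uniform weight averaging of experts that share an architecture. Below is a minimal sketch of how that baseline could be applied when experts arrive one at a time: a running mean that never needs the earlier checkpoints. It assumes every expert has identical parameter names and shapes, and it represents weights as toy Python lists; the class name is hypothetical, and this is not the TIME framework from the paper.

```python
from typing import Dict, List

# Toy representation (assumption): parameter name -> flattened weights.
Params = Dict[str, List[float]]


class RunningAverageMerger:
    """Hypothetical helper: merge experts arriving over time via a running mean.

    After t experts, `merged` equals the uniform average of all t experts,
    without storing the earlier checkpoints.
    """

    def __init__(self) -> None:
        self.merged: Params = {}
        self.count = 0

    def add_expert(self, expert: Params) -> Params:
        self.count += 1
        if self.count == 1:
            # First expert: the "merge" is just a copy of its weights.
            self.merged = {name: list(ws) for name, ws in expert.items()}
        else:
            for name, ws in expert.items():
                cur = self.merged[name]
                # Incremental mean: m_t = m_{t-1} + (x_t - m_{t-1}) / t
                self.merged[name] = [m + (x - m) / self.count for m, x in zip(cur, ws)]
        return self.merged
```

Calling `add_expert` once per newly finetuned expert keeps a single merged model available at every time step; more elaborate schemes would change the weighting or which experts get merged.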

🧵

11.12.2024 18:00 — 👍 24 🔁 7 💬 1 📌 2

Added you!

11.12.2024 23:55 — 👍 1 🔁 0 💬 0 📌 0

Sure!

10.12.2024 21:27 — 👍 0 🔁 0 💬 0 📌 0

Welcome, stranger

10.12.2024 21:25 — 👍 1 🔁 0 💬 0 📌 0

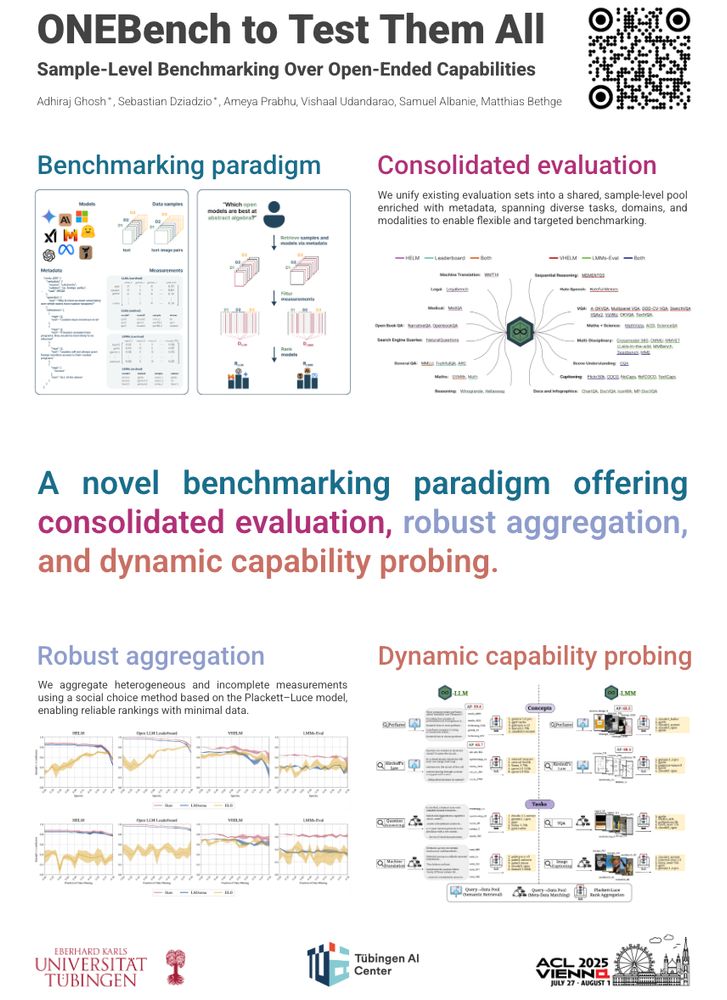

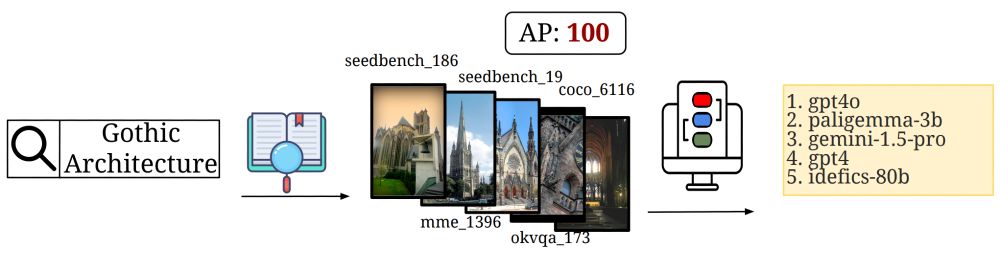

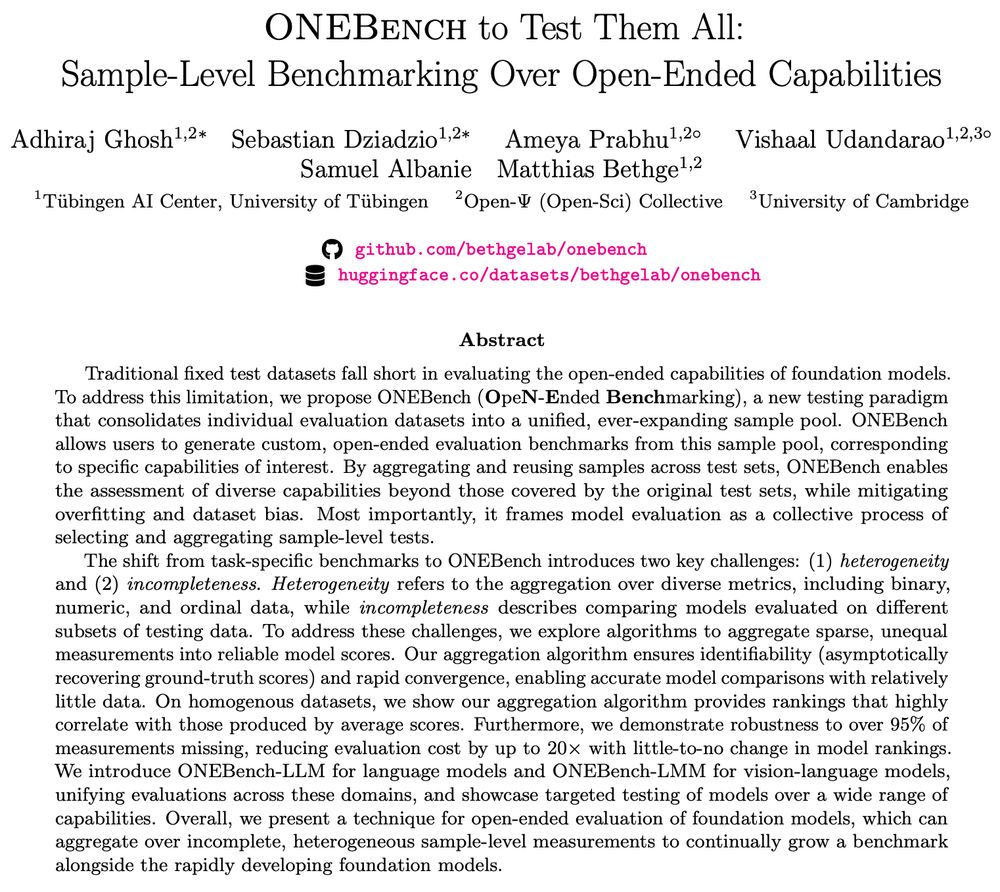

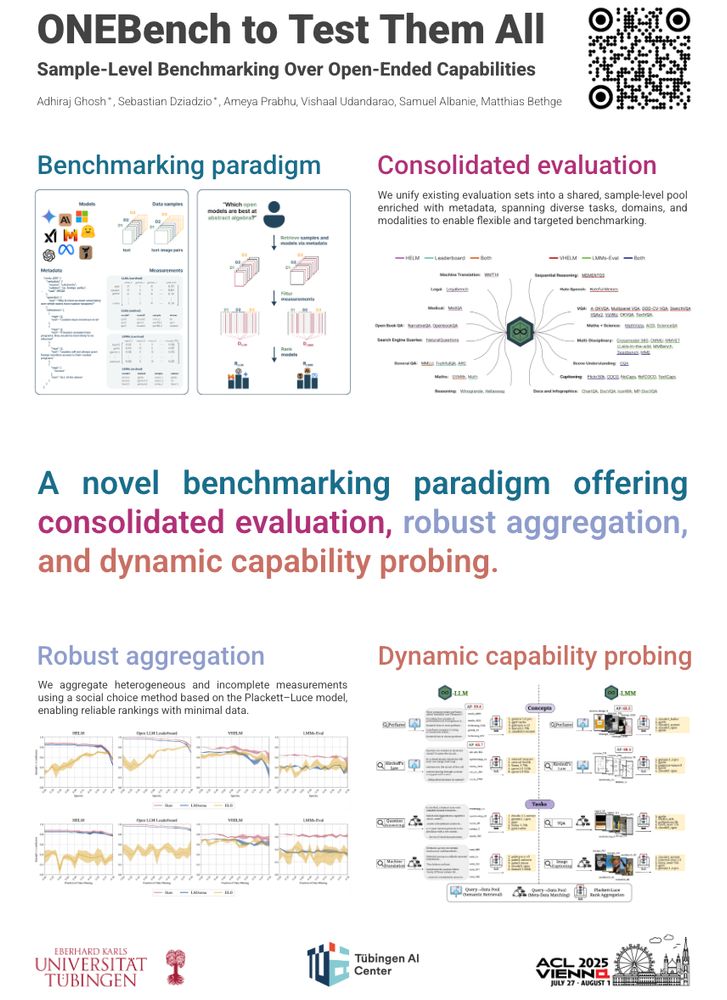

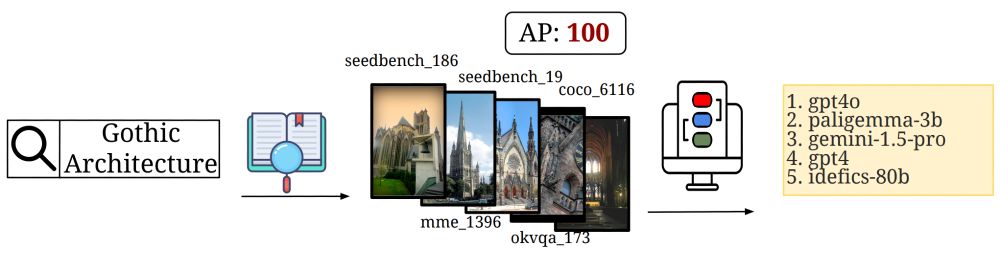

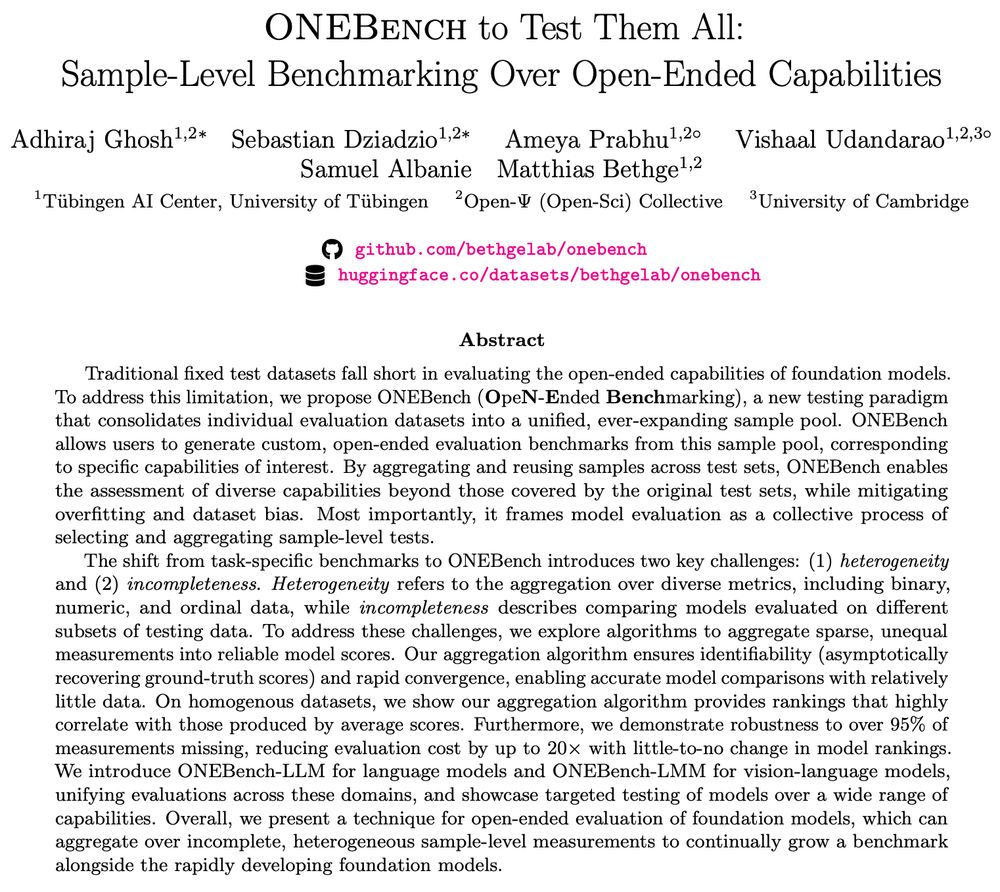

How do we benchmark the vast capabilities of foundation models? Introducing ONEBench – a unifying benchmark to test them all, led by @adhirajghosh.bsky.social and @dziadzio.bsky.social!⬇️

Sample-level benchmarks could be the new generation: reusable, recombinable, and able to evaluate lots of capabilities!

10.12.2024 18:39 — 👍 2 🔁 1 💬 0 📌 0

This extremely ambitious project would not have been possible without @dziadzio.bsky.social @bayesiankitten.bsky.social @vishaalurao.bsky.social @samuelalbanie.bsky.social and Matthias Bethge!

Special thanks to everyone at @bethgelab.bsky.social, Bo Li, Yujie Lu and Palzer Lama for all your help!

10.12.2024 17:52 — 👍 3 🔁 0 💬 0 📌 0

In summary, we release ONEBench as a valuable tool for comprehensively evaluating foundation models and generating customised benchmarks, in the hope of sparking a restructuring of how benchmarking is done. We plan to publish the code, benchmark and metadata for capability probing very soon.

10.12.2024 17:51 — 👍 2 🔁 0 💬 1 📌 0

Finally, as a proof of concept, we probe open-ended capabilities by defining a pool of queries to test and generating personalised model rankings for them. Expanding ONEBench can only improve the reliability and scale of these queries, and we’re excited to extend this framework.

More insights like these in the paper!

10.12.2024 17:50 — 👍 3 🔁 0 💬 1 📌 0

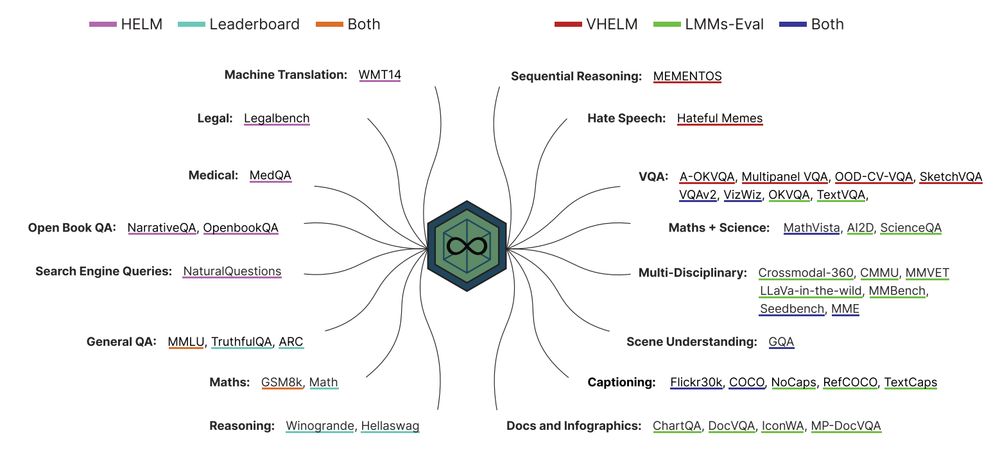

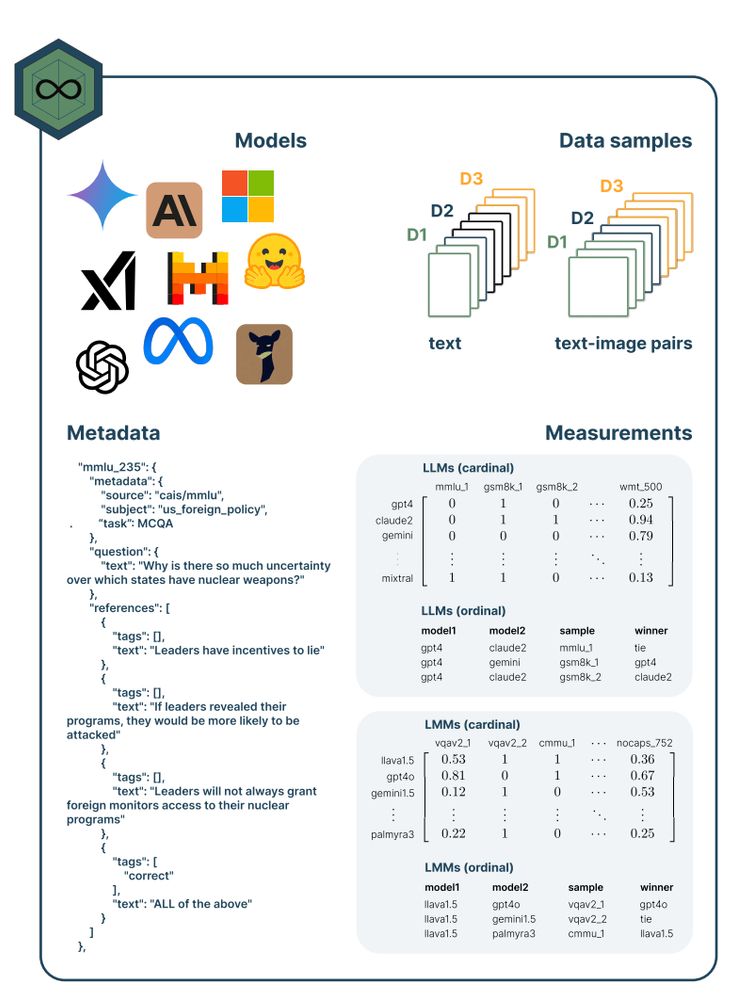

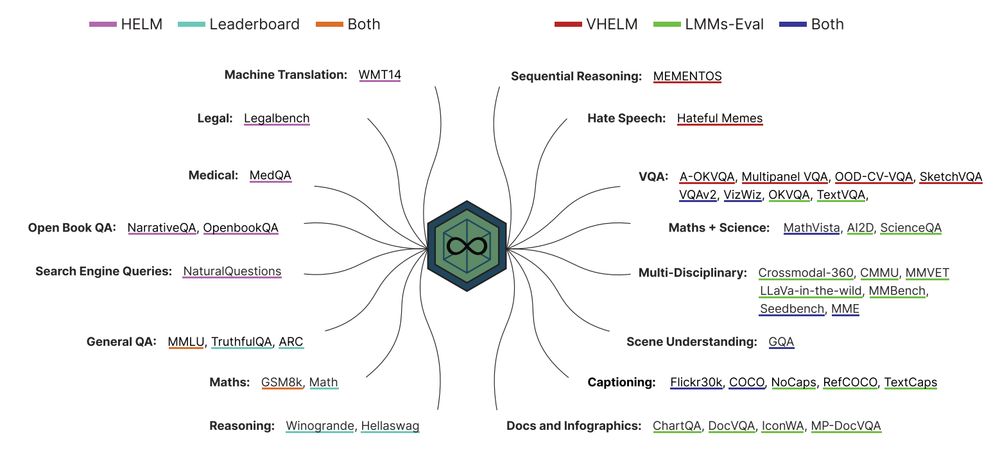

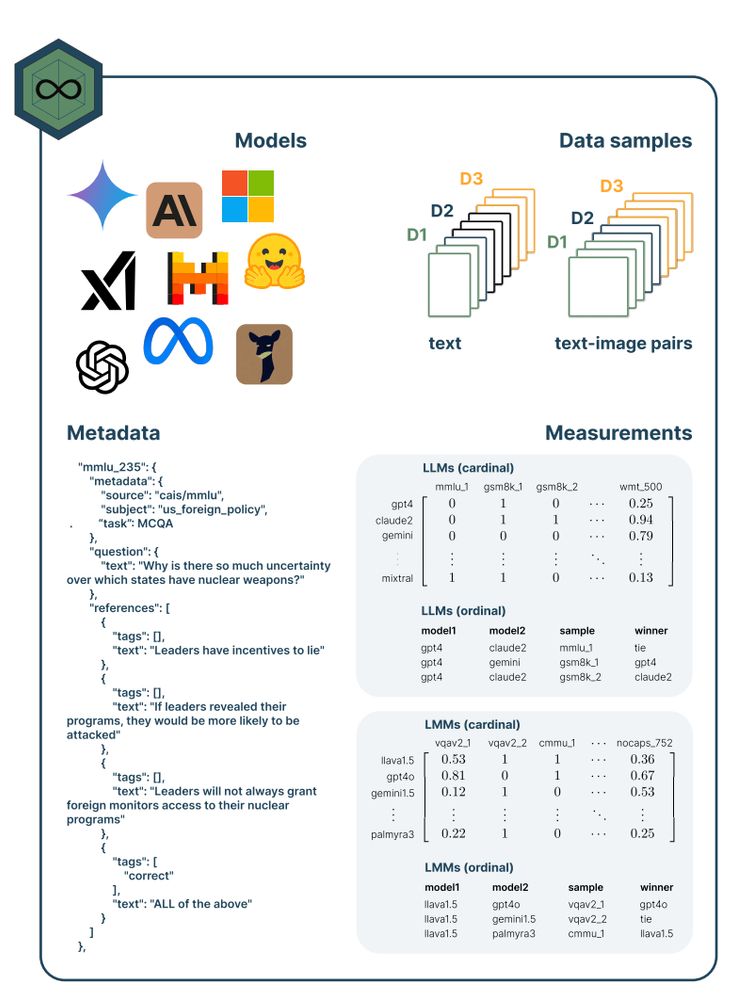

Let's look under the hood! ONEBench comprises ONEBench-LLM and ONEBench-LMM: the largest pool of evaluation samples for foundation models (~50K for LLMs and ~600K for LMMs), spanning various domains and tasks. ONEBench will be continually expanded to accommodate more models and datasets.

10.12.2024 17:49 — 👍 3 🔁 0 💬 1 📌 0

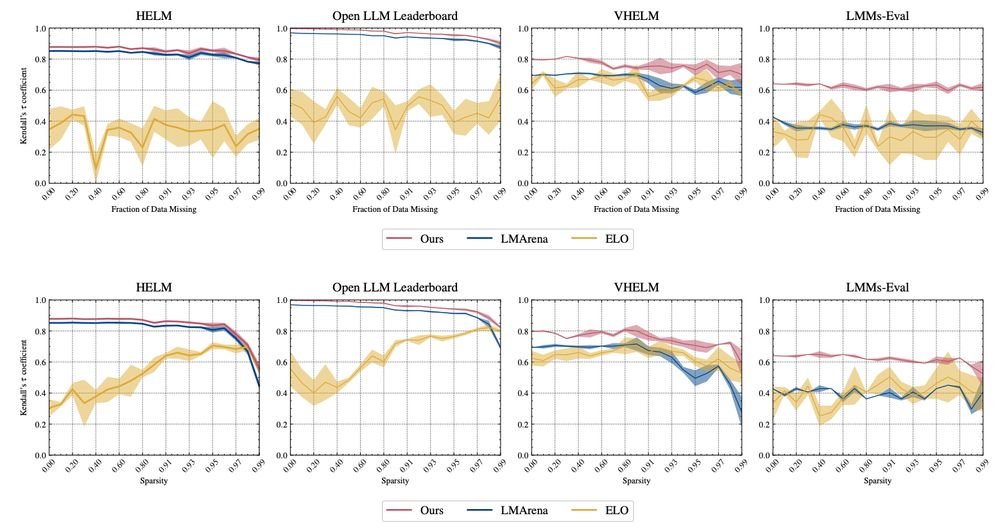

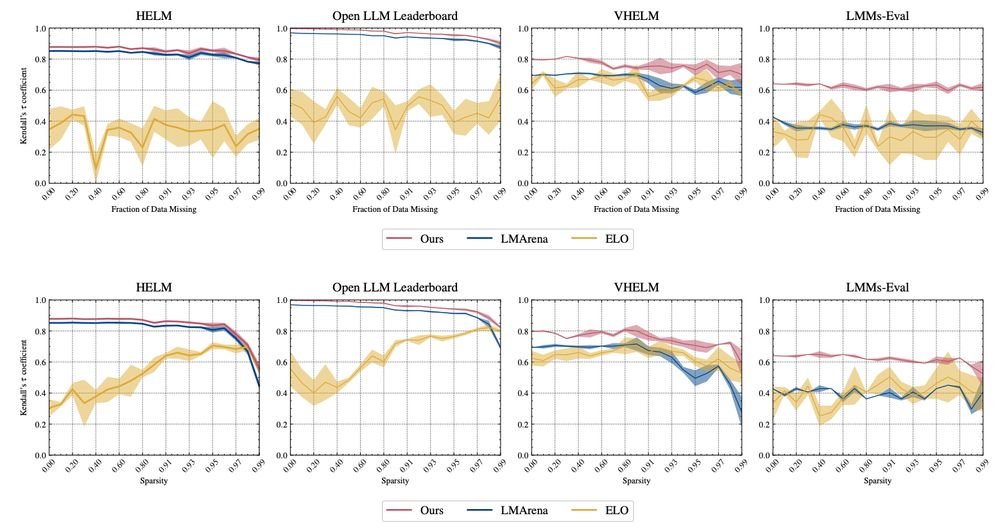

We compare our Plackett-Luce implementation to Elo and Elo-distribution-based ranking methods. It not only shows superior correlation with the aggregated mean model scores for each test set but also remains extremely stable under missing datapoints and missing measurements, even at up to 95% sparsity!
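For readers unfamiliar with the Elo baseline, here is a minimal sketch of standard Elo applied to per-sample rankings: each ranking is split into pairwise wins, and ratings are updated online. The function name, the K-factor of 32 and the base rating of 1500 are conventional illustrative choices, not ONEBench's settings, and the "Elo-distribution" variant is not shown.

```python
from itertools import combinations
from typing import Dict, List, Sequence


def elo_ratings(
    rankings: Sequence[List[str]], k_factor: float = 32.0, base: float = 1500.0
) -> Dict[str, float]:
    """Standard Elo over rankings listed best-first (hypothetical helper)."""
    ratings: Dict[str, float] = {}
    for r in rankings:
        for m in r:
            ratings.setdefault(m, base)
        # combinations() preserves input order, so `winner` is ranked above `loser`.
        for winner, loser in combinations(r, 2):
            ra, rb = ratings[winner], ratings[loser]
            expected_win = 1.0 / (1.0 + 10.0 ** ((rb - ra) / 400.0))
            delta = k_factor * (1.0 - expected_win)
            ratings[winner] = ra + delta
            ratings[loser] = rb - delta
    return ratings


# Same two comparisons, replayed in opposite orders.
print(elo_ratings([["a", "b"], ["b", "a"]]))
print(elo_ratings([["b", "a"], ["a", "b"]]))
```

Because the updates are sequential, replaying the same comparisons in a different order yields different ratings (the two calls above favour whichever model won last), whereas a batch maximum-likelihood fit does not depend on order.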

10.12.2024 17:49 — 👍 3 🔁 0 💬 1 📌 0

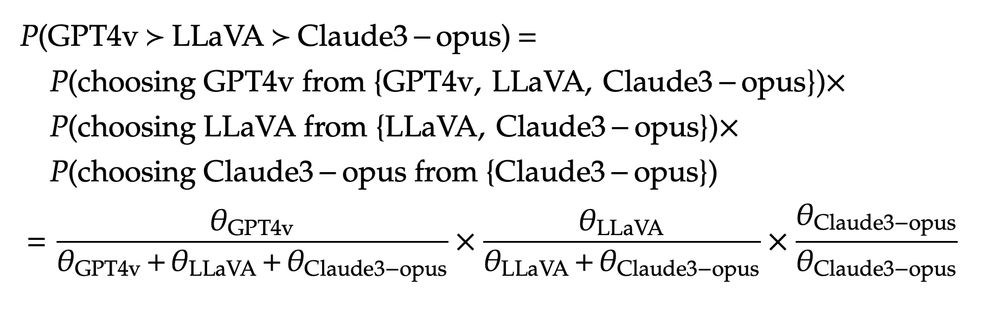

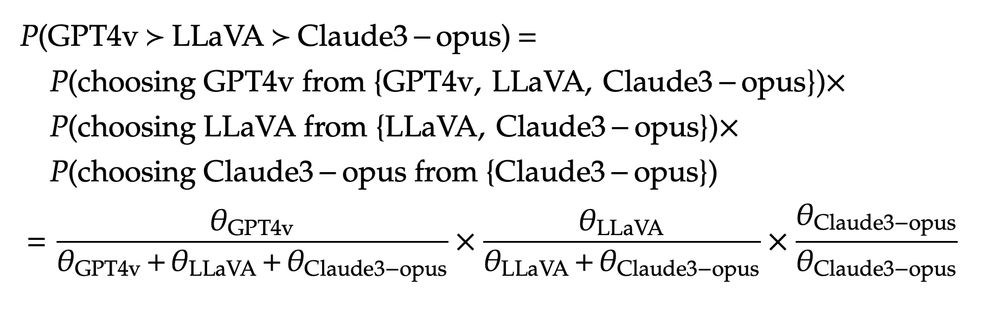

✅ ONEBench aggregates these rankings using the Plackett-Luce framework, which provides an extremely efficient maximum-likelihood estimate (MLE) of each model's strength (or utility) parameter from the probabilities of the individual rankings.
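A minimal sketch of Plackett-Luce aggregation, assuming each sample yields a best-first ranking over whichever models were measured on it. It uses Hunter's (2004) minorization-maximization (MM) updates, one standard way to compute the MLE; it is not ONEBench's implementation, and the function name is hypothetical. As usual for this model, it assumes the comparison data are connected; a model that is never ranked above another model gets strength 0.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def plackett_luce_mm(
    rankings: Sequence[List[str]], n_iter: int = 500, tol: float = 1e-10
) -> Dict[str, float]:
    """Estimate Plackett-Luce strengths with Hunter's MM algorithm.

    Each ranking lists model names from best to worst; rankings may cover
    different subsets of models (sparse per-sample measurements).
    """
    models = sorted({m for r in rankings for m in r})
    w = {m: 1.0 for m in models}

    # wins[m]: number of stages where m is chosen, i.e. any non-last position.
    wins: Dict[str, float] = defaultdict(float)
    for r in rankings:
        for m in r[:-1]:
            wins[m] += 1.0

    for _ in range(n_iter):
        denom: Dict[str, float] = defaultdict(float)
        for r in rankings:
            # tail[j] = sum of current strengths of models at positions >= j
            tail = [0.0] * len(r)
            acc = 0.0
            for j in range(len(r) - 1, -1, -1):
                acc += w[r[j]]
                tail[j] = acc
            # A model at position p is still "in the running" at stages
            # 0..min(p, len(r) - 2); accumulate 1 / tail[j] over those stages.
            running = 0.0
            for j in range(len(r) - 1):
                running += 1.0 / tail[j]
                denom[r[j]] += running
            denom[r[-1]] += running

        new_w = {m: (wins[m] / denom[m] if denom[m] > 0 else w[m]) for m in models}
        total = sum(new_w.values())
        new_w = {m: v / total for m, v in new_w.items()}  # fix scale: strengths sum to 1
        converged = max(abs(new_w[m] - w[m]) for m in models) < tol
        w = new_w
        if converged:
            break
    return w
```

The final leaderboard is then `sorted(w, key=w.get, reverse=True)`. Since the likelihood only involves the models present in each ranking, samples with missing measurements simply contribute shorter rankings.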

10.12.2024 17:48 — 👍 3 🔁 0 💬 1 📌 0

🤔 How do we aggregate samples from different test sets, spanning different metrics?

The solution lies in converting individual model evals into ordinal measurements (A<B<C): two or more models can then be compared directly on the same data sample to obtain model preference rankings.
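A minimal sketch of that conversion, assuming each sample comes with a metric name and the scores of whichever models were evaluated on it. The metric names and the `LOWER_IS_BETTER` set are hypothetical; the point is that once each sample becomes a best-first ranking, samples scored with different metrics become comparable. Ties are left in insertion order here, which a real pipeline would need to handle explicitly.

```python
from typing import Dict, List, Tuple

# Hypothetical metrics where a lower score is better (assumption).
LOWER_IS_BETTER = {"wer", "perplexity"}


def to_ordinal(
    per_sample: Dict[str, Tuple[str, Dict[str, float]]]
) -> Dict[str, List[str]]:
    """Turn per-sample scores into per-sample rankings (best model first).

    per_sample maps sample_id -> (metric_name, {model_name: score}). Models
    without a measurement on a sample are simply absent.
    """
    rankings: Dict[str, List[str]] = {}
    for sample_id, (metric, scores) in per_sample.items():
        higher_is_better = metric not in LOWER_IS_BETTER
        # Python's sort is stable (also with reverse=True), so ties keep insertion order.
        rankings[sample_id] = sorted(scores, key=scores.__getitem__, reverse=higher_is_better)
    return rankings


data = {
    "s1": ("bleu", {"A": 0.5, "B": 0.2, "C": 0.9}),
    "s2": ("wer", {"A": 0.3, "B": 0.1}),
}
print(to_ordinal(data))  # {'s1': ['C', 'A', 'B'], 's2': ['B', 'A']}
```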

10.12.2024 17:47 — 👍 3 🔁 0 💬 1 📌 0

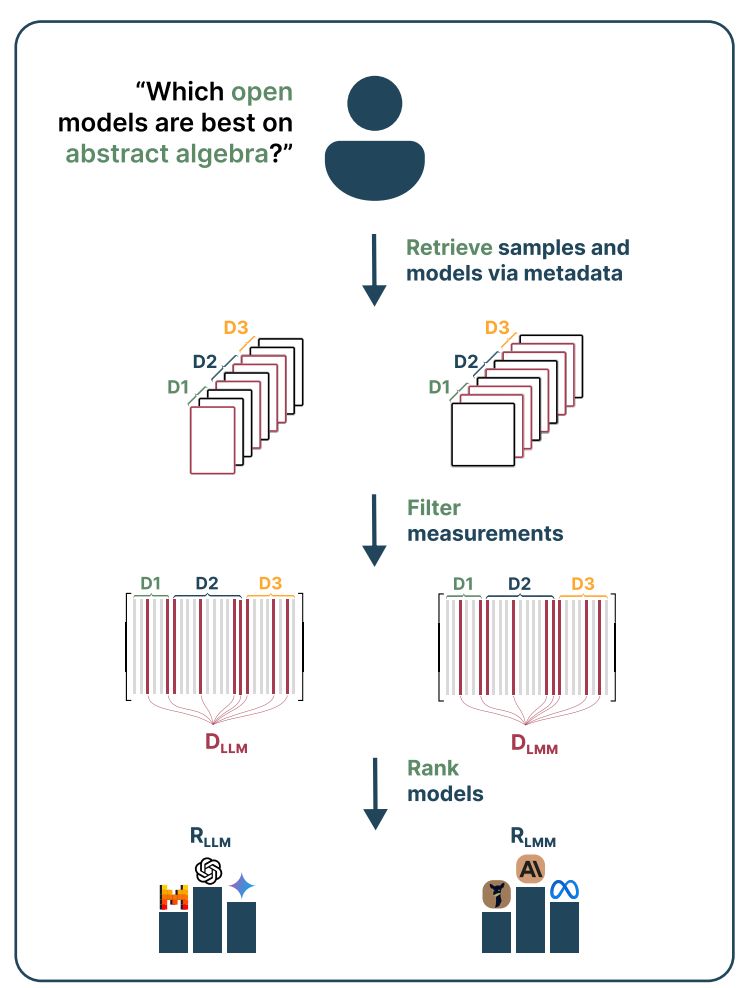

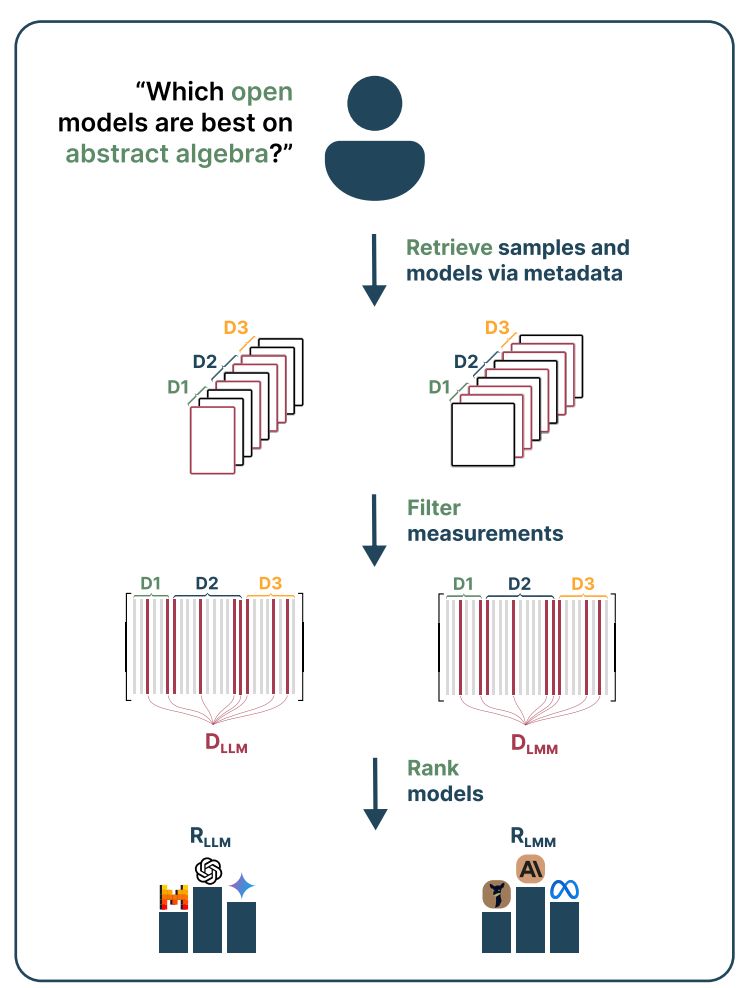

Given a query of interest from a practitioner, we are able to pick relevant samples from the data pool by top-k retrieval in the embedding space or searching through sample-specific metadata.

Aggregating model performance over these relevant samples, we obtain our final model rankings.
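A minimal sketch of the two retrieval routes described above, assuming sample embeddings are already computed by some encoder (not specified here) and that each sample carries a flat dictionary of metadata tags. All function names and tag keys are hypothetical; pure-Python cosine similarity keeps it dependency-free.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def top_k_samples(
    query_emb: Sequence[float], pool: List[Tuple[str, Sequence[float]]], k: int
) -> List[str]:
    """Return the ids of the k pool samples most similar to the query embedding."""
    best = heapq.nlargest(k, pool, key=lambda item: cosine(query_emb, item[1]))
    return [sample_id for sample_id, _ in best]


def filter_by_metadata(metadata: Dict[str, Dict[str, str]], **criteria: str) -> List[str]:
    """Keep sample ids whose metadata matches every given key=value criterion."""
    return [
        sid for sid, tags in metadata.items()
        if all(tags.get(key) == value for key, value in criteria.items())
    ]


pool = [("s1", [1.0, 0.0]), ("s2", [0.0, 1.0]), ("s3", [0.9, 0.1])]
print(top_k_samples([1.0, 0.0], pool, k=2))  # ['s1', 's3']
```

The retrieved sample ids then determine which per-sample model comparisons are aggregated into the query-specific ranking.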

10.12.2024 17:45 — 👍 3 🔁 0 💬 1 📌 0

❗Status quo: Benchmarking on large test sets is costly. Static benchmarks fail to use truly held-out data and also can’t probe the ever-evolving capabilities of LLMs/VLMs.

ONEBench mitigates this by restructuring static benchmarks to accommodate an ever-expanding pool of datasets and models.

10.12.2024 17:45 — 👍 3 🔁 0 💬 1 📌 0

🚨Looking to test your foundation model on an arbitrary and open-ended set of capabilities, not explicitly captured by static benchmarks? 🚨

Check out ✨ONEBench✨, where we show how sample-level evaluation is the solution.

🔎 arxiv.org/abs/2412.06745

10.12.2024 17:44 — 👍 18 🔁 5 💬 1 📌 2

PhD student at the University of Amsterdam working on vision-language models and cognitive computational neuroscience

Assistant Professor at @cs.ubc.ca and @vectorinstitute.ai working on Natural Language Processing. Book: https://lostinautomatictranslation.com/

Waiting on a robot body. All opinions are universal and held by both employers and family.

Recruiting students to start my lab!

ML/NLP/they/she.

(jolly good) Fellow at the Kempner Institute @kempnerinstitute.bsky.social, incoming assistant professor at UBC Linguistics (and by courtesy CS, Sept 2025). PhD @stanfordnlp.bsky.social with the lovely @jurafsky.bsky.social

isabelpapad.com

PhD Candidate at the Max Planck ETH Center for Learning Systems working on 3D Computer Vision.

https://wimmerth.github.io

DH Prof @URichmond. Exploring computer vision and visual culture. Ideas for the Association for Computers & the Humanities @ach.bsky.social and Computational Humanities Research Journal? Please share!

AI researcher at Google DeepMind. Synthesized views are my own.

📍SF Bay Area 🔗 http://jonbarron.info

This feed is a partial mirror of https://twitter.com/jon_barron

PhD student at the University of Tuebingen. Computer vision, video understanding, multimodal learning.

https://ninatu.github.io/

Postdoc at Utrecht University, previously PhD candidate at the University of Amsterdam

Multimodal NLP, Vision and Language, Cognitively Inspired NLP

https://ecekt.github.io/

🥇 LLMs together (co-created model merging, BabyLM, textArena.ai)

🥈 Spreading science over hype in #ML & #NLP

Proud shareLM💬 Donor

@IBMResearch & @MIT_CSAIL

Research Scientist GoogleDeepMind

Ex @UniofOxford, AIatMeta, GoogleAI

CS PhD student at UCSB 🎓 | Trustworthy AI/FL/HAI 📖 | DAOlivia co-founder | Building for this universe 🌌

Research scientist at Google DeepMind.🦎

She/her.

http://www.aidanematzadeh.me/

Incoming assistant professor at JHU CS

PhD at UNC | Bloomberg PhD Fellow

Prev: Google, Microsoft, Adobe, AI2, SNU

https://j-min.io

#multimodal #nlp

PhD student @ CMU LTI. efficiency/data in NLP/ML

Postdoc @UW, Prev.@UMich, Ph.D @PSU, Research Intern @GoogleAI, @AmazonScience. 🦋 https://hua-shen.org.

Stanford Professor of Linguistics and, by courtesy, of Computer Science, and member of @stanfordnlp.bsky.social and The Stanford AI Lab. He/Him/His. https://web.stanford.edu/~cgpotts/

Research Scientist at Google DeepMind

https://e-bug.github.io

Research Scientist at Ai2, PhD in NLP 🤖 UofA. Ex GoogleDeepMind, MSFTResearch, MilaQuebec

https://nouhadziri.github.io/