Excited to be in Albuquerque for #NAACL2025 next week!

I'll be presenting our work on SLMs for governing online communities at 4pm on Wednesday, April 30th, in Oral Session D (CSS.1) in Room Ruidoso.

Come by if you’re interested in LLMs for governance or computational modeling of online communities!

25.04.2025 20:38

🔗 Read the full paper here: arxiv.org/pdf/2410.13155

Thanks to my collaborators Xianyang Zhan, Yilun Chen, @ceshwar.bsky.social, and @kous2v.bsky.social for their help!

#NAACL2025

[7/7]

25.04.2025 20:35

⚖ Practical implications:

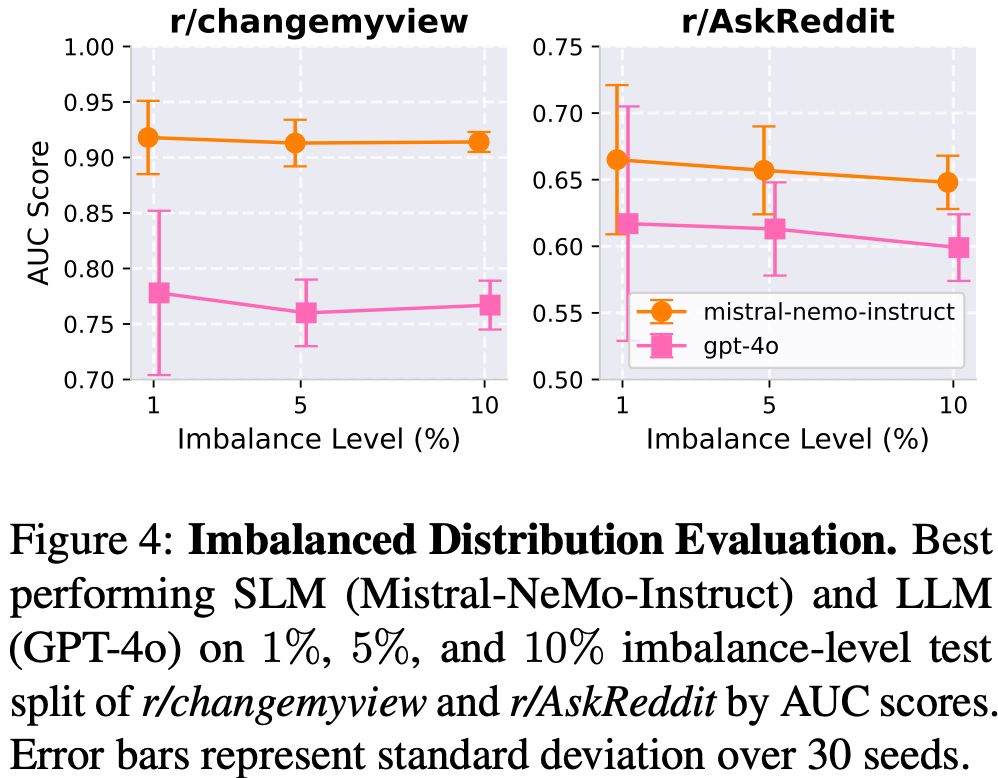

- SLMs are lighter and cheaper to deploy for real-time moderation

- They can be adapted to community-specific norms

- They’re more effective at triaging potentially harmful content

- The open-source nature provides more control and transparency

[6/7]

25.04.2025 20:35

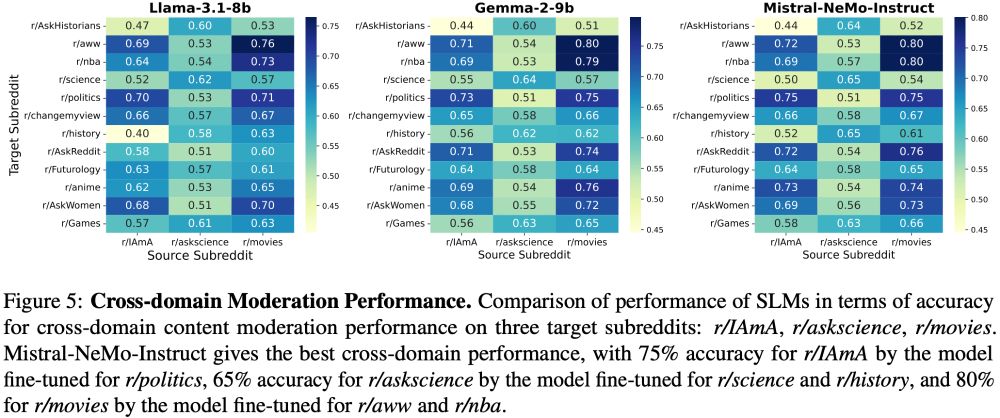

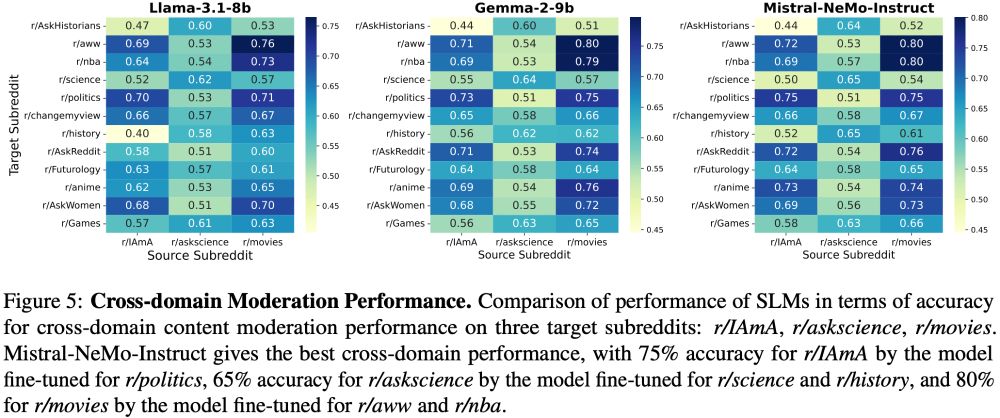

🌐 Cross-community transfer learning also shows promise! Fine-tuned SLMs can effectively moderate content in communities they weren’t explicitly trained on.

This is especially promising for new communities that have little labeled moderation data of their own, and for moderation techniques that work across platforms.

[5/7]

25.04.2025 20:35

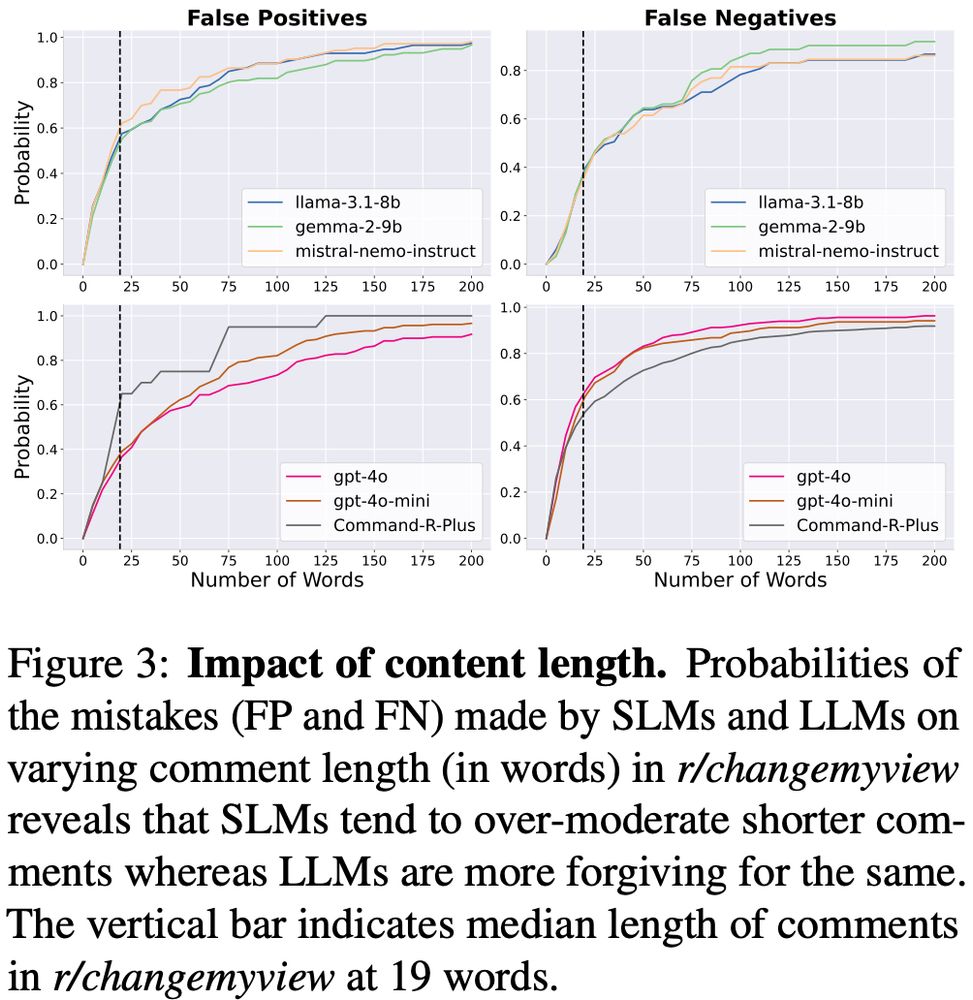

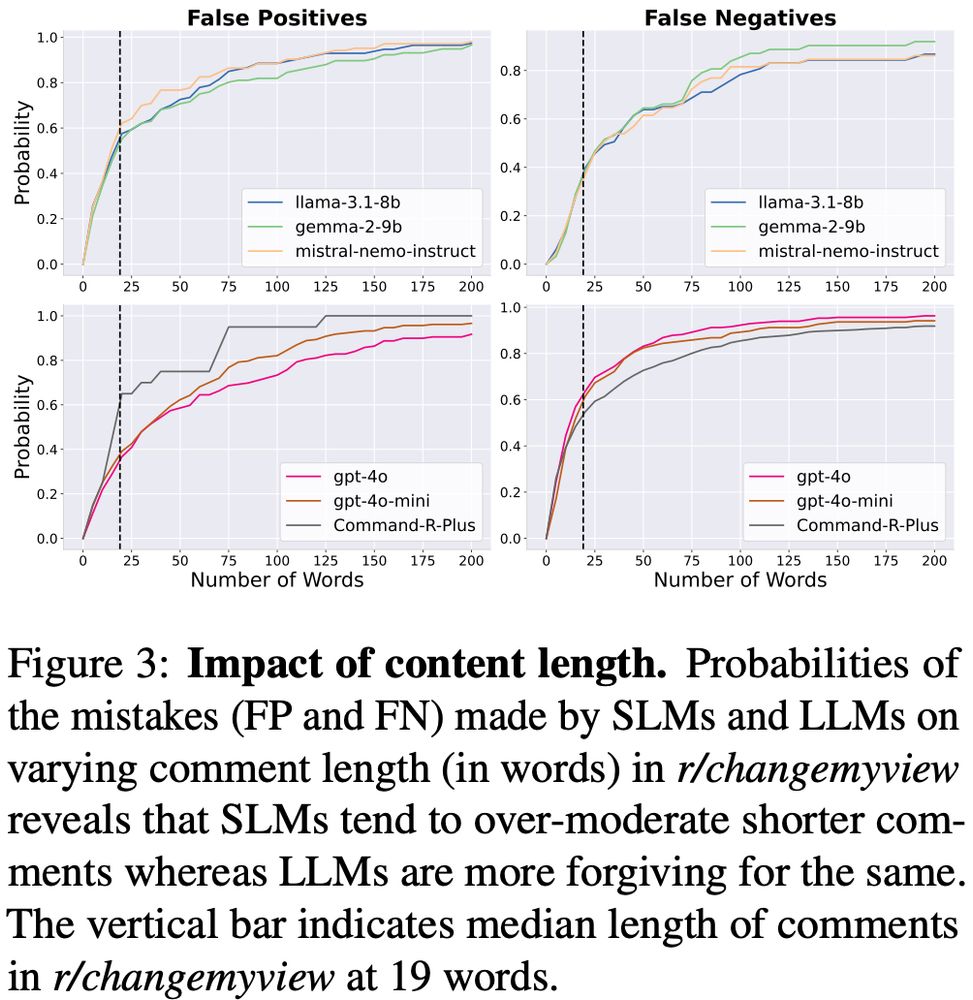

📊 Our error analysis reveals:

- SLMs excel at detecting rule violations in short comments

- LLMs tend to be more conservative about flagging content

- LLMs perform better with longer comments where context helps determine appropriateness
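A length-stratified error analysis like the one above can be sketched by bucketing comments by length and scoring each bucket separately. This is a minimal sketch, not the paper's procedure: the toy records, the hypothetical `length_bucket` helper, and its 5-word cutoff are all illustrative assumptions.

```python
from collections import defaultdict

# Each record: (comment, gold_label, predicted_label); 1 = violates a rule.
# Toy, hand-made examples for illustration only; NOT data from the paper.
records = [
    ("you idiot", 1, 1),
    ("great post, thanks!", 0, 0),
    ("spam link here", 1, 1),
    ("I disagree with the mods' decision because the self-promotion rule was applied inconsistently last week", 0, 1),
    ("This long comment quotes an insult only to criticise it, which a moderator reading the context would allow", 0, 0),
]


def length_bucket(text: str, short_max_words: int = 5) -> str:
    """Hypothetical split: comments with <= short_max_words words count as 'short'."""
    return "short" if len(text.split()) <= short_max_words else "long"


def accuracy_by_length(rows):
    """Return per-bucket accuracy, i.e. the share of predictions matching the gold label."""
    correct, total = defaultdict(int), defaultdict(int)
    for text, gold, pred in rows:
        bucket = length_bucket(text)
        total[bucket] += 1
        correct[bucket] += int(gold == pred)
    return {bucket: correct[bucket] / total[bucket] for bucket in total}


scores = accuracy_by_length(records)
print(scores)  # {'short': 1.0, 'long': 0.5}
```

Comparing these per-bucket scores across two models is one way to surface the kind of short-versus-long gap described in the thread.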

[4/7]

25.04.2025 20:35

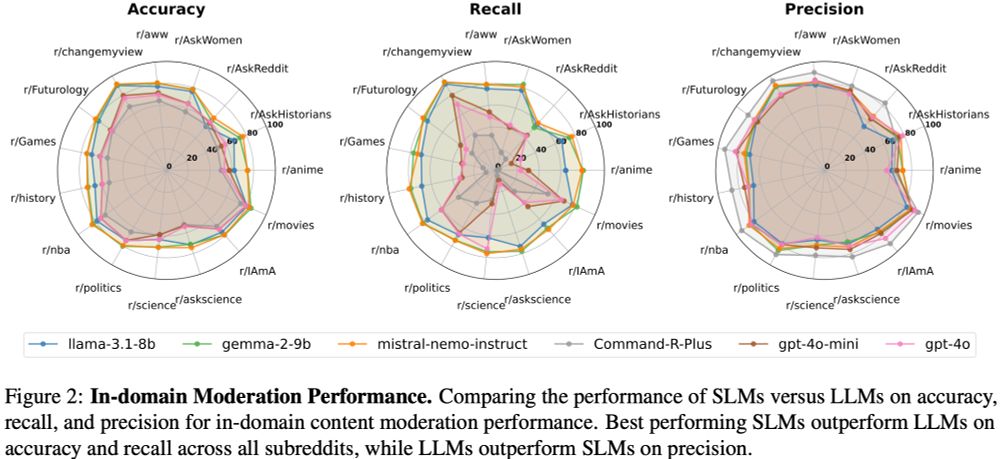

💡 Key insight: SLMs adopt a more aggressive moderation approach, leading to higher recall but slightly lower precision compared to LLMs.

This trade-off is actually beneficial for platforms where catching harmful content is prioritized over occasional false positives.
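To make the recall/precision trade-off concrete, here is a small sketch using the standard definitions precision = TP / (TP + FP) and recall = TP / (TP + FN). The counts are hypothetical and chosen only to mimic an "aggressive" versus a "conservative" moderator; they are not results from the paper.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Return (precision, recall) from confusion-matrix counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical counts over 100 truly violating comments (NOT from the paper).
# The aggressive moderator flags more: it catches more violations (higher recall)
# at the cost of a few more false positives (slightly lower precision).
aggressive = precision_recall(tp=90, fp=15, fn=10)
conservative = precision_recall(tp=75, fp=10, fn=25)

print(f"aggressive:   precision={aggressive[0]:.2f}, recall={aggressive[1]:.2f}")
print(f"conservative: precision={conservative[0]:.2f}, recall={conservative[1]:.2f}")
# aggressive:   precision=0.86, recall=0.90
# conservative: precision=0.88, recall=0.75
```

Here the aggressive moderator misses 10 violations instead of 25 while giving up only about two points of precision, which is the kind of trade a platform that prioritizes catching harmful content might accept.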

[3/7]

25.04.2025 20:35

🚨 New #NAACL2025 paper alert: “SLM-Mod: Small Language Models Surpass LLMs at Content Moderation”

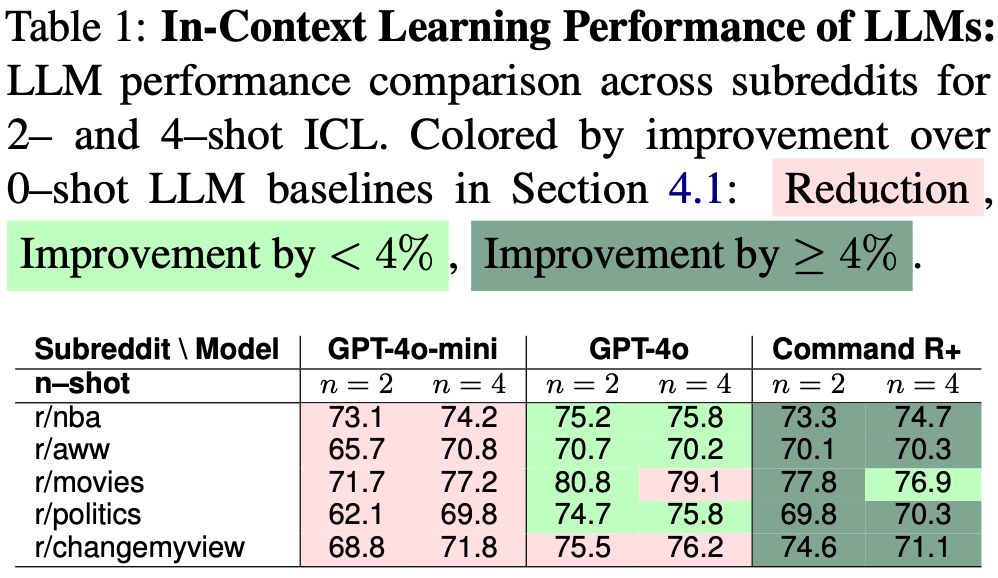

We show that fine-tuned small language models outperform LLMs like GPT-4o for content moderation tasks, with higher accuracy and recall!

🔗 arXiv: arxiv.org/pdf/2410.13155

🧵[1/7]

25.04.2025 20:35
